🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ589 |
| 2 | | Ṁ239 |
| 3 | | Ṁ90 |
| 4 | | Ṁ63 |
| 5 | | Ṁ60 |


https://jackcook.com/2023/09/08/predictive-text.html

Looks to be about 30M params. Hard for me to call this a “large” language model…

@BTE can you clarify what the resolution criteria will be? Is it about chatbots as @DavidBolin suggests?

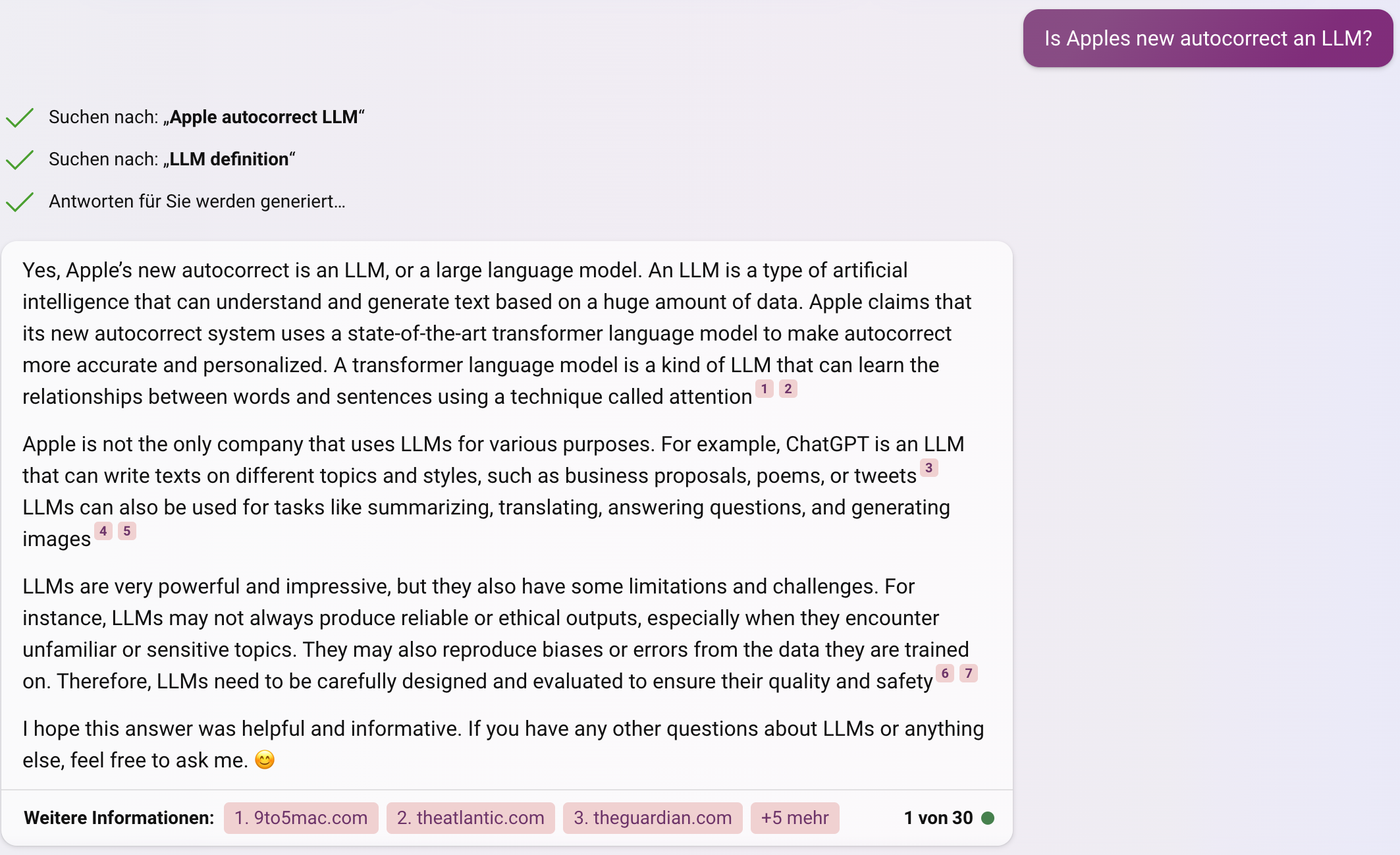

"The iPhone's keyboard on iOS 17 leverages a transformer model, which OpenAI (the company behind ChatGPT) uses in their own language models, to learn from what you type on your keyboard to better predict what you might say next, whether it's a name, phrase or curse word."

https://www.cnet.com/tech/mobile/ios-17-finally-lets-you-type-what-you-ducking-mean-on-your-iphone/

or

"The machine learning technology that Apple is using for autocorrect has been improved in iOS 17. Apple says it has adopted a "transformer language model," that will better personalize autocorrect to each user. It is able to learn your personal preferences and word choices to be more useful to you."

https://www.macrumors.com/guide/ios-17-keyboard/

or

"“Another core part of the Keyboard is Dictation, which gets a new transformer-based speech recognition model that leverages the Neural Engine to make dictation even more accurate,” said Craig Federighi, Apple’s SVP of software engineering, during Monday’s keynote that kicked off WWDC23."

https://www.cultofmac.com/819643/hands-on-ios-17-autocorrect-intelligent-input/

@gigab0nus or, probably even better, the original source where I first saw it (incl. timestamp): https://www.youtube.com/live/GYkq9Rgoj8E?feature=share&t=1628

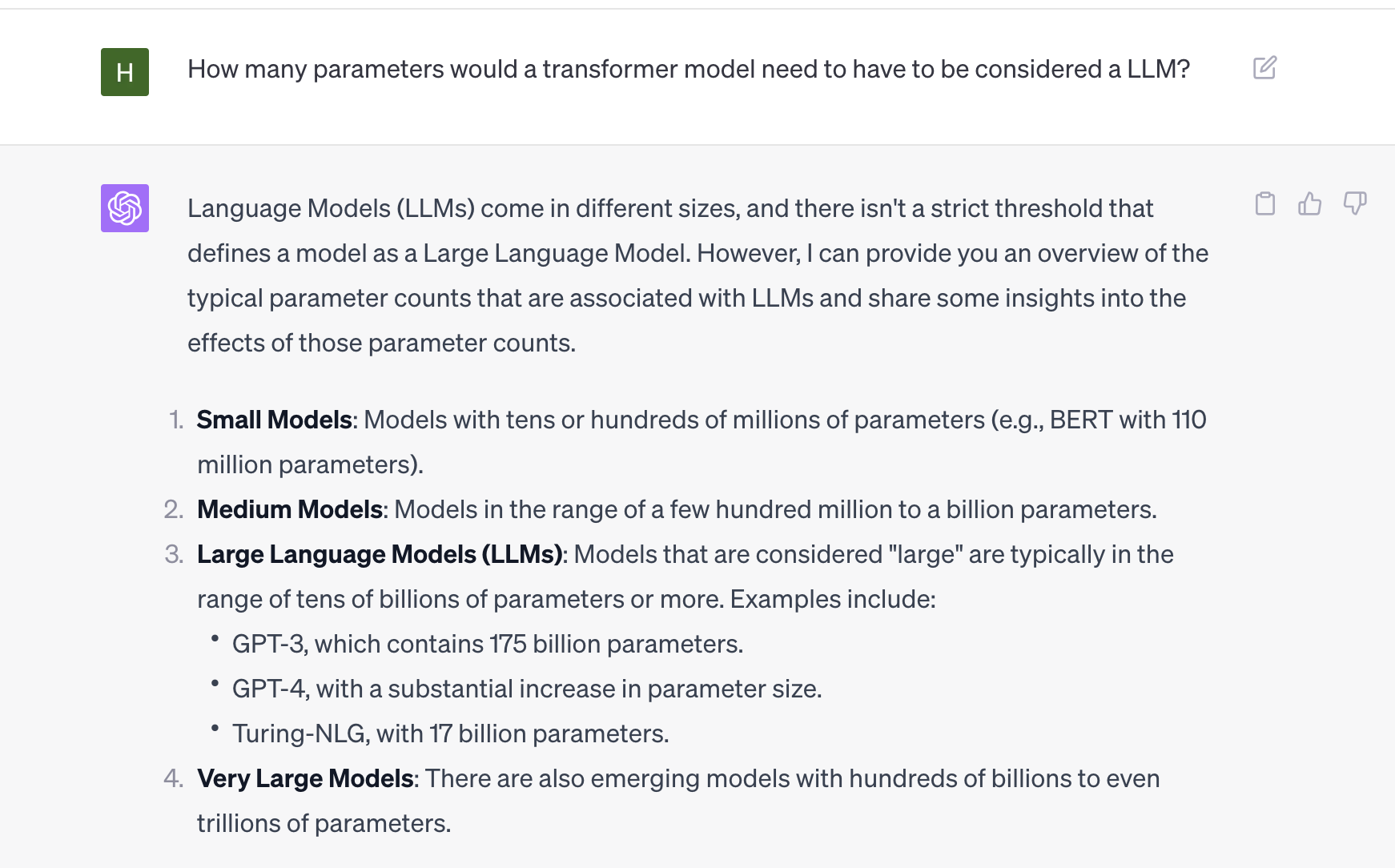

@gigab0nus How large does a language model need to be to be considered an LLM? It seems unlikely to me that a model designed to run locally and in real time would be very large, but I could be wrong. For comparison, the model from Google Keyboard is a one-layer LSTM with only 670 hidden neurons (source: https://arxiv.org/abs/2305.18465), though it's designed to run on devices that are probably a lot less capable than the latest Apple products.

@kcs There is some irony in "embedded LLMs" because embedded usually means small. I don't think there is a consensus on the minimum size that counts as "large" in LLM. The main reason I would consider this a YES is:

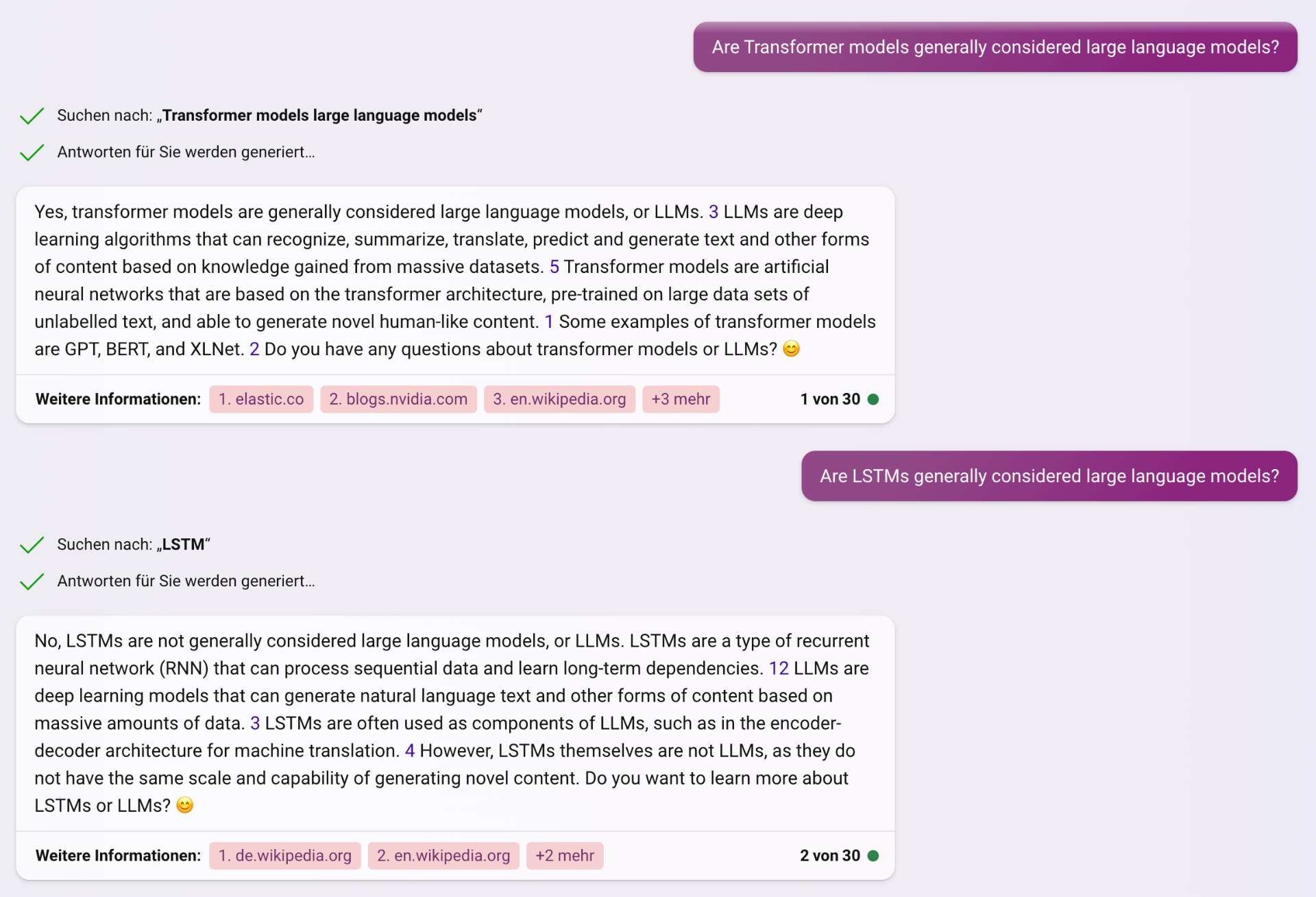

@gigab0nus I don't think it makes a lot of sense to automatically assume a model is an "LLM" just because it's a Transformer, especially in the case of autocorrect, which is a much simpler task than the long-form text generation of GPT et al. At the same time, though, I doubt Apple will release many details about the specific architecture they used, so I guess one could argue that it being a Transformer is evidence enough. There probably isn't even a consistent definition of "LLM" anyway.

@gigab0nus At this point, "LLM" practically means "transformer + language", whereas in the LSTM era the scientific community only spoke of natural language processing. But yeah, in general I agree with your point. This is not well defined.

@kcs So yeah, being a transformer alone does not make something an LLM, since AlphaFold also uses a transformer and there are things like image transformers.

@chilli According to that, we might never know if it is an LLM. Where did you get that from?

At least the colloquial understanding is this:

@chilli Even if we could settle on those numbers, I would argue that a compressed version of an LLM is still an LLM, even if it has a massively reduced parameter count.

@kcs Open source models with 7B parameters can run this fast with no real issue

GPT-2 (1.5B) was considered an LLM by Wikipedia and most sources. So I think the bar is around 1B parameters. It could definitely have that much.
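For a rough sense of scale, here is a back-of-the-envelope sketch of how much storage the weights alone would need at the model sizes mentioned in this thread (~30M, 1.5B, 7B). It assumes memory is simply parameter count times bits per parameter; the function name `weight_bytes` and the chosen precisions (16, 8, and 4 bits) are illustrative assumptions, and activations, caches, and runtime overhead are ignored. Nothing here reflects what Apple actually ships.

```python
# Back-of-the-envelope weight storage for the model sizes mentioned in this thread.
# Assumption: memory ~= parameter count * bits per parameter / 8 (weights only,
# no activations, caches, or runtime overhead).

def weight_bytes(n_params: int, bits_per_param: int) -> int:
    """Bytes needed to store n_params weights at the given precision."""
    return n_params * bits_per_param // 8

# Parameter counts as quoted in the thread; the ~30M figure is the
# commenter's estimate, not an official number.
MODELS = {
    "keyboard model (~30M, estimate)": 30_000_000,
    "GPT-2 (1.5B)": 1_500_000_000,
    "7B open-source model": 7_000_000_000,
}

for name, n_params in MODELS.items():
    sizes = ", ".join(
        f"{bits}-bit: {weight_bytes(n_params, bits) / 1e9:.2f} GB"
        for bits in (16, 8, 4)
    )
    print(f"{name}: {sizes}")
```

Under these assumptions a 7B model needs about 14 GB at 16-bit and 3.5 GB at 4-bit, while a ~30M model needs about 0.06 GB at 16-bit, which is why the size question matters so much for something that runs on a phone.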

@gigab0nus No idea about "counting," but autocorrect always used language models, and it is just a question of the size.

It is obvious that this is not the original meaning of the question, since autocomplete doesn't talk to you.

But I'm not betting here, so I don't care if they count it or not.

@ShadowyZephyr Do you have any source on 7B models running this fast? In my experiments using llama.cpp on my i7 CPU, generation runs reasonably fast for the first few tokens, but then grinds to a halt as the context window increases. I'd be very interested if there's a way to make it faster.

@DavidBolin Autocorrect did not always use the type of language model referred to as an "LLM." Those are the ones that use transformers.

@kcs Yeah, I doubt Apple's model has a large context window; that is absolutely not necessary for this application, and I'm sure Apple can optimize further for their own hardware. https://twitter.com/thiteanish/status/1635678053853536256