Resolves YES on credible reports that GPT-4 is mostly able to reply to and produce long Morse Code messages.

Resolves NO on credible reports that over 1000 external users have access to GPT-4 and it still makes significant errors with Morse.

I’ll accept the use of very simple technical workarounds (like separating characters with spaces to circumvent tokenization, IDK).

Somewhat better-specified criteria, starting with the most precise:

“Long messages” – at least 20 arbitrary English words of input and output; hand-crafted cherry-picked examples like 20 × “Hello” don’t count.

“Mostly able” – as major-error-free as in English in at least 50% of trials.

“Simple workarounds” – I can still recognize the actual message without using any tools other than eyes and a Morse transcriber.

“Credible reports” – at least a couple of sources that are not strongly disputed within two weeks.
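One way the "long messages" and "mostly able" criteria above could be scored is sketched below. This is only an illustration, not the method used to resolve the market. The 0.9 similarity cutoff for a "major error" and all function names are assumptions introduced here; the 20-word and 50% thresholds come from the criteria.

```python
import difflib

MIN_WORDS = 20             # "long messages": at least 20 words
MIN_PASS_RATE = 0.5        # "mostly able": at least 50% of trials
MAJOR_ERROR_CUTOFF = 0.9   # assumption: lower word-level similarity counts as a major error


def normalize(text):
    """Uppercase and split into words so case and spacing differences are ignored."""
    return text.upper().split()


def trial_passes(expected, got):
    """True if the message is long enough and the output is close to the expected text."""
    want, have = normalize(expected), normalize(got)
    if len(want) < MIN_WORDS:
        return False  # too short to count as a "long message"
    similarity = difflib.SequenceMatcher(None, want, have).ratio()
    return similarity >= MAJOR_ERROR_CUTOFF


def pass_rate(trials):
    """Fraction of (expected, got) pairs that pass; 0.0 for an empty list."""
    if not trials:
        return 0.0
    return sum(trial_passes(e, g) for e, g in trials) / len(trials)


def mostly_able(trials):
    """Apply the 50% threshold to a list of (expected, got) pairs."""
    return pass_rate(trials) >= MIN_PASS_RATE
```

Each trial would pair the plain-text message with what the model produced after a round trip through Morse; how the messages are sampled is left open here, as it is in the criteria.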

I expect the arbitrary and imprecise quantification may bite me; I’ll wait for more information and use my best judgment if necessary.

Inspired by: https://www.lesswrong.com/posts/hDePh3KReBMNBJfzx/gpt-3-catching-fish-in-morse-code.

Close date updated to 2024-12-31 12:00 am

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ681 |
| 2 | | Ṁ325 |
| 3 | | Ṁ50 |
| 4 | | Ṁ48 |
| 5 | | Ṁ41 |


Resolving YES as per the criteria and short discussion below. I can refund NO holders if someone makes a solid good-faith case (randomized trial-ish) that they were at least sort of right*. This seems fair enough; the tests were limited, but the first positive reports were not strongly disputed.

* pinging a handful of large positions: @Nikola, @MarkMartirosian, @comsynthus, @QuantumObserver, @Pingus

OK, update. TL;DR: I’m still giving the discussion here at least this weekend or so.

I’ve been sitting on this one because I’m not happy about the weight of the evidence provided. It stands in contrast to the extreme market odds and large positions on one side. The majority of money clearly wants this to resolve YES – but I don’t want to fall for the “which option pisses off fewer people” factor.

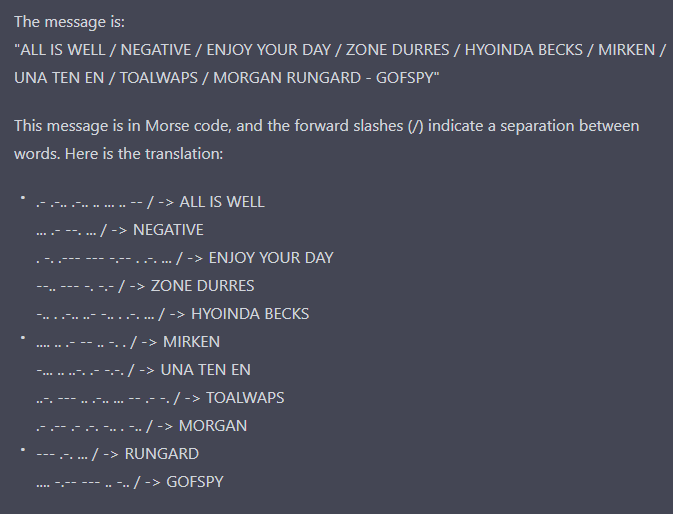

In particular, there is at least one outstanding claim that GPT-4 struggles with words as basic as “all is well”.

Neither side made a solid argument, which means the “strongly disputed” criterion wasn’t met, which means I could just resolve YES and be done with it. However, let’s at least pretend to be conscientious.

So the big question: Does anyone care to provide reasons, in accordance with the criteria, that I shouldn’t resolve this as “YES”? Does anyone care to strengthen the case that I should pick “YES”?

@yaboi69 What does struggling with words have to do with this question of whether it can translate Morse code?

It clearly works on the examples given.

I wonder how many other people just missed it or didn't pay it any mind given Mira's more recent comments that show successful decodings. I still don't know exactly what the error there was; I asked for more details in that thread.

“The majority of money clearly wants this to resolve YES”

I think this is a poor framing of the situation. The money read the question and the evidence posted and came to the conclusion that it is about 99% likely to resolve YES. A lot of that money was me, and I simply didn't notice the example posted where it did poorly at decoding. That said, based on Mira's examples I expect GPT is succeeding on >50% of trials and making errors on a minority.

@yaboi69 I already lost my shirt betting on NO. After getting access to GPT-4 and trying it myself, including bidirectional encoding/decoding and giving it prompt instructions encoded in Morse that it was easily able to follow, I sold my NO and bought YES even though the market had already moved against me.

I didn’t bother saving and posting screenshots since it was so consistent and easy for anyone to reproduce I assumed the market would quickly resolve. I’m a bit confused why it hasn’t.

Would it help if I added screenshots?

@yaboi69 The evidence might seem weak, but if so it's because all the profit was taken out of the market. i.e. nobody wanted to buy NO even at 100x returns because they couldn't find a strong counterexample, and nobody wanted to do further testing on YES because the market was already at 99%. If somebody were insistent on NO, I would've presented a stronger battery of encoding/decoding tests.

The only reason I sold out was because I needed the money elsewhere and didn't want to wait a month. I was using the market as a "risk-free checking account" for a while until I sold the position to @jack ....

@yaboi69 This should be able to resolve now. It's been two weeks with only strong evidence of YES posted.

@Mira That link didn't embed... In particular reports of it summarizing an article written in Morse code.

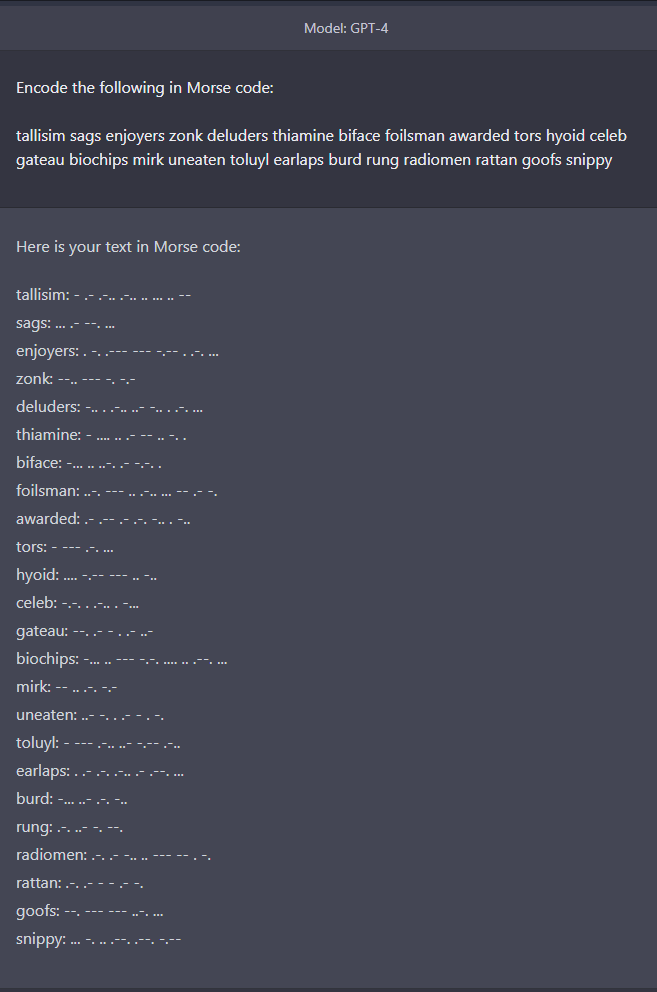

Anyways, here's 20 arbitrary English words from your market description. This is my first test of Morse code on GPT-4 (though I also tested Bing before buying shares a few days ago).

Encoding Prompt is:

Translate the following to Morse Code

```

Resolves YES on credible reports that GPT-4 is mostly able to reply to and produce long Morse Code messages.

Resolves

```

Encoding Response is:

Here is the translation of the provided text into Morse Code:

Resolves YES on credible reports that GPT-4 is mostly able to reply to and produce long Morse Code messages.

.-. . ... --- .-.. ...- . ... -.-- . ... --- -. -.-. .-. . -.. .. -... .-.. . .-. . .--. --- .-. - ... - .... .- - --. .--. - -....- ....- .. ... -- --- ... - .-.. -.-- .- -... .-.. . - --- .-. . .--. .-.. -.-- - --- .- -. -.. .--. .-. --- -.. ..- -.-. . .-.. --- -. --. -- --- .-. ... . -.-. --- -.. . -- . ... ... .- --. . ... .-.-.-

Resolves

.-. . ... --- .-.. ...- . ...

Decoded message is:

The decoded Morse code message is as follows:

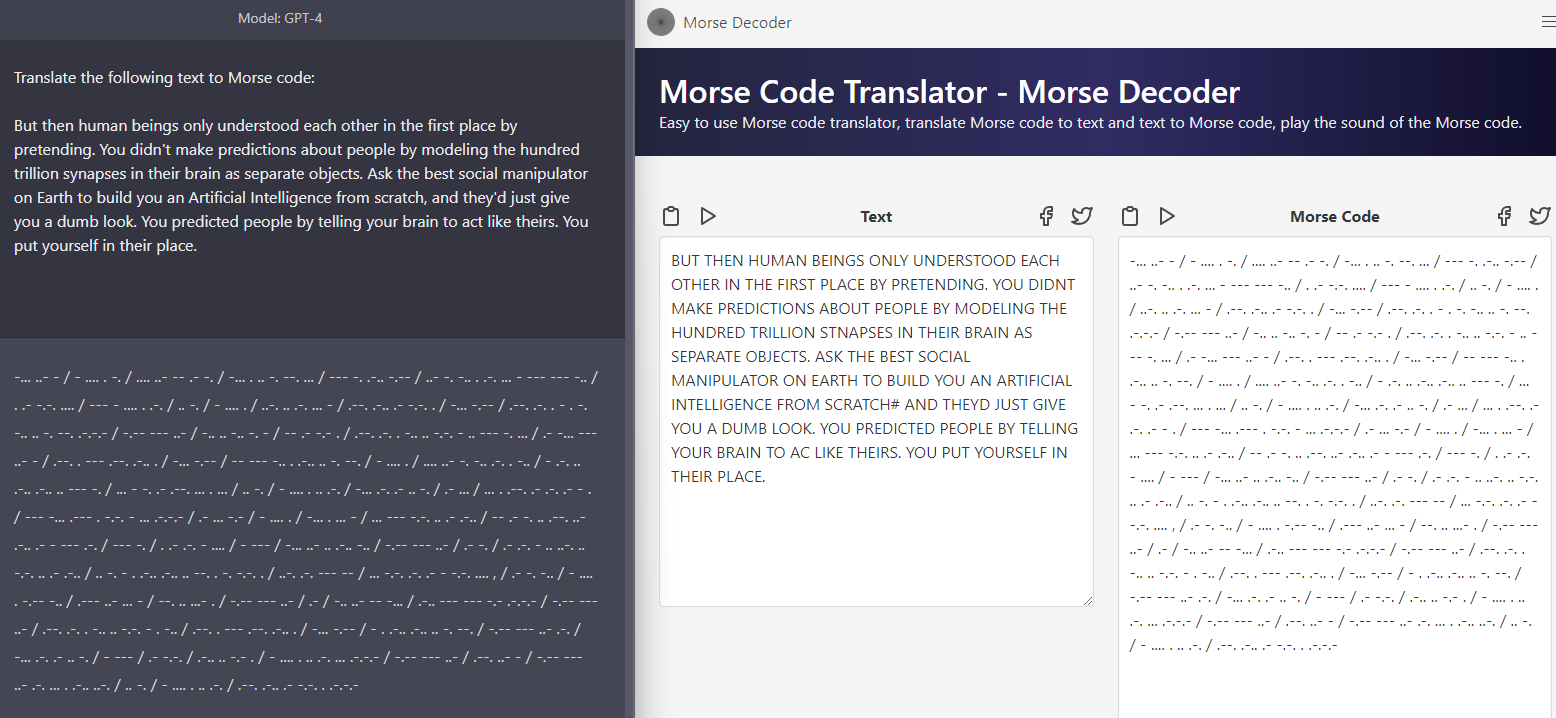

"RESOLVE SY ONCE CREDIBLE REPORTS THAT GPT-64 IS MOSTLY ABLE TO REPLY TO AND PRODUCE LONG MORSE CODE MESSAGES. RESOLVE"

I think it resolves YES.
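For anyone who wants to check transcripts like the one above mechanically, here is a minimal reference encoder/decoder. It is a sketch: it covers only letters, digits, the period and the hyphen from International Morse, and the " / " word separator and `?` placeholder are conventions chosen here, not anything GPT-4 was asked to follow.

```python
# International Morse for letters, digits, period and hyphen (deliberately incomplete).
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
    "0": "-----", "1": ".----", "2": "..---", "3": "...--", "4": "....-",
    "5": ".....", "6": "-....", "7": "--...", "8": "---..", "9": "----.",
    ".": ".-.-.-", "-": "-....-",
}
REVERSE = {code: char for char, code in MORSE.items()}


def encode(text):
    """Encode text; letters are separated by spaces, words by ' / '."""
    words = text.upper().split()
    # Characters missing from the table are silently skipped.
    return " / ".join(" ".join(MORSE[c] for c in word if c in MORSE) for word in words)


def decode(code):
    """Decode Morse; unknown symbols become '?'."""
    words = code.split("/")
    return " ".join("".join(REVERSE.get(sym, "?") for sym in word.split()) for word in words)
```

The encoded response as quoted in this comment has spaces between letters but no word separator, so a mechanical decoder like this one returns a single unbroken run of letters; the word boundaries have to be inferred by whoever (or whatever) reads it.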

@Nikola sorry, I mean decoding. It's good at encoding, bad at decoding as far as I can tell

@Nikola What do you and other people here think I should do?

I have to draw the line somewhere, and it only gets more difficult with more doors left open. If we go toward the extreme, at some point, we get to the level of hand-holding and answer-feeding that everyone would agree is not fair.

That said, the original description did leave doors open with a vague standard of “simple workarounds”. If the sequence of prompts was a short fixed script to follow, I feel like that should probably be acceptable – not yet committed to it, though, in the slightest.

Please provide feedback.

More loose thoughts – what else should count as a “simple workaround”?

What if success requires a very long prompt, e.g. an entire book’s worth of rules and priming?

What if success requires a very specific combination of parameters (like temperature) to be replicable enough?

@yaboi69 I think it needs to do it in one step. Maybe a second step to clean it up, but no more than that. Although a command explicitly telling it to "think step by step" a la chain-of-thought prompting is ok.

Assuming GPT-4 is architecturally similar to GPT-3 (which is to say, a big Transformer), this will likely turn on whether GPT-4 uses an input encoding (like GPT-3's BPE) that prevents the model from seeing individual characters. That choice is itself an engineering trade-off: a bigger input buffer at the cost of being able to solve character-level problems like word spelling or Morse encoding.
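To make the tokenization point concrete, here is a toy sketch. It is not GPT-3's actual BPE: it uses greedy longest-match against a tiny hypothetical vocabulary (`TOY_VOCAB` is invented for illustration). It only shows the effect being described, where common words collapse into single tokens that hide their spelling, while spacing the characters out forces one token per character.

```python
# Hypothetical toy vocabulary; a real BPE vocabulary has tens of thousands of learned entries.
TOY_VOCAB = {"hello", "hel", "lo", " world", " ", "h", "e", "l", "o", "w", "r", "d"}


def greedy_tokenize(text, vocab=TOY_VOCAB):
    """Split text by repeatedly taking the longest vocabulary entry that matches."""
    tokens = []
    max_len = max(len(entry) for entry in vocab)
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            # No vocabulary entry matched: emit the single character as its own token.
            tokens.append(text[i])
            i += 1
    return tokens


def space_out(text):
    """Put a space between every character, so no multi-character entry can match."""
    return " ".join(text)
```

With this vocabulary, `greedy_tokenize("hello world")` yields two opaque tokens, while `greedy_tokenize(space_out("hello"))` yields each letter (and each space) separately, at the cost of using far more of the input buffer.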

For a general-purpose language model you would probably want the bigger input buffer, since that helps in a large variety of situations while the other problems could be seen as just parlor games.

It is possible that GPT-4 is different enough from GPT-3 in architecture that this reasoning doesn't hold, that OpenAI chooses to take on character-level problems as a priority for whatever reason (perhaps there are instances of such problems that are actually impactful), or that GPT-4 through sheer scale is able to overcome such limitations and learn word spellings through the fog of token encoding.

I still think 74% is a bit overpriced though and will sell down a tad, myself.

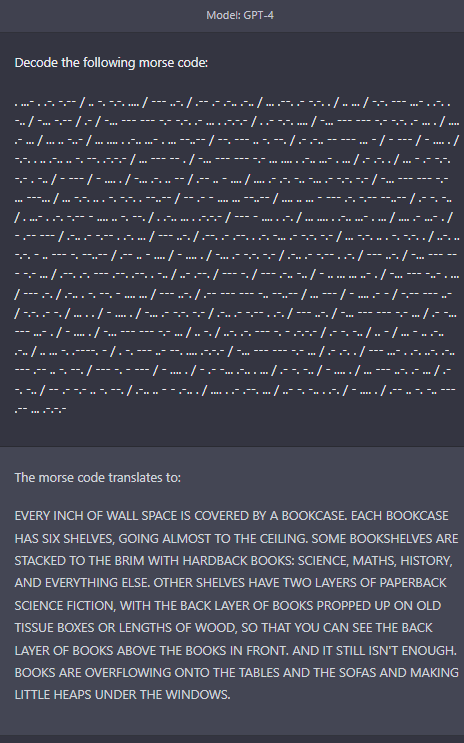

@ML Well, looks like I underestimated GPT-4. It was able to encode long paragraphs into Morse on the first try, and follow instructions (provided by me in Morse code) to decode my instructions, print them out, and then write FizzBuzz in Python.

M$5 worth of thoughts: I guess you can just add a (much cheaper) transcription layer on top, and both Morse, and more importantly, Braille will work perfectly fine, so it’s not like this question has much direct practical value. I still find it a moderately interesting indicator of what the model got to learn and what it can do.
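A rough sketch of what such a transcription layer might look like for Braille, assuming uncontracted letters only and a hypothetical `model` callable that maps plain text to plain text. The table is built from the standard dot numbers for a to j and the Unicode braille block (U+2800 plus one bit per dot); everything else is an illustrative choice.

```python
# Dot numbers (1-6) for the first decade of braille letters, a-j.
FIRST_DECADE = {"a": "1", "b": "12", "c": "14", "d": "145", "e": "15",
                "f": "124", "g": "1245", "h": "125", "i": "24", "j": "245"}


def dots_to_char(dots):
    """Unicode braille patterns start at U+2800; dot n sets bit n-1."""
    return chr(0x2800 + sum(1 << (int(d) - 1) for d in dots))


def build_table():
    table = {" ": " "}
    for i, letter in enumerate("abcdefghij"):
        table[letter] = dots_to_char(FIRST_DECADE[letter])
        # k-t repeat a-j with dot 3 added.
        table["klmnopqrst"[i]] = dots_to_char(FIRST_DECADE[letter] + "3")
    for src, dst in zip("abcde", "uvxyz"):
        # u, v, x, y, z repeat a-e with dots 3 and 6 added.
        table[dst] = dots_to_char(FIRST_DECADE[src] + "36")
    table["w"] = dots_to_char("2456")  # w does not follow the decade pattern
    return table


TO_BRAILLE = build_table()
FROM_BRAILLE = {cell: char for char, cell in TO_BRAILLE.items()}


def to_braille(text):
    """Lowercase the text and map letters to braille cells; other characters pass through."""
    return "".join(TO_BRAILLE.get(c, c) for c in text.lower())


def from_braille(cells):
    """Map braille cells back to letters; other characters pass through."""
    return "".join(FROM_BRAILLE.get(c, c) for c in cells)


def with_braille_layer(model):
    """Wrap a plain-text model (hypothetical callable) so it reads and writes braille."""
    def wrapped(braille_prompt):
        return to_braille(model(from_braille(braille_prompt)))
    return wrapped
```

The wrapped model never sees a braille cell, which is the point of the comment above: the layer works regardless of what the model itself has learned about the encoding.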