The model must correctly compute the product of two randomly chosen 20-digit numbers with at least 90% accuracy, meaning it may produce incorrect results in at most 10 out of 100 independent trials.

The model must perform this computation without executing code, scripts, or relying on external computational tools.

A YES resolution will occur immediately upon verification that a model meets these criteria. If no such model is verified by the end of the year, the resolution will be NO.

Update 2025-12-17 (PST) (AI summary of creator comment): The creator is conducting a test with 10 pairs of random numbers to determine resolution.

If 9/10 are correct, the market will resolve YES; otherwise, it will resolve NO.
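The 9/10 rule is a small-sample stand-in for the 90% accuracy criterion stated at the top. As a rough sketch, assuming each trial is independent and the model has a fixed per-trial accuracy `p` (an idealization; the market text does not specify this), the chance of passing follows the binomial distribution. `pass_probability` is a hypothetical helper name:

```python
from math import comb

def pass_probability(p: float, trials: int = 10, needed: int = 9) -> float:
    """P(at least `needed` correct out of `trials`), assuming independent
    trials with per-trial accuracy p (binomial model)."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(needed, trials + 1)
    )

for p in (0.80, 0.90, 0.95, 0.99):
    print(f"accuracy {p:.2f}: P(pass 9/10) = {pass_probability(p):.3f}")
```

Under these assumptions, a model at exactly 90% accuracy would pass the 9/10 test only about 74% of the time, so the test is a noisy proxy for the stated threshold.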


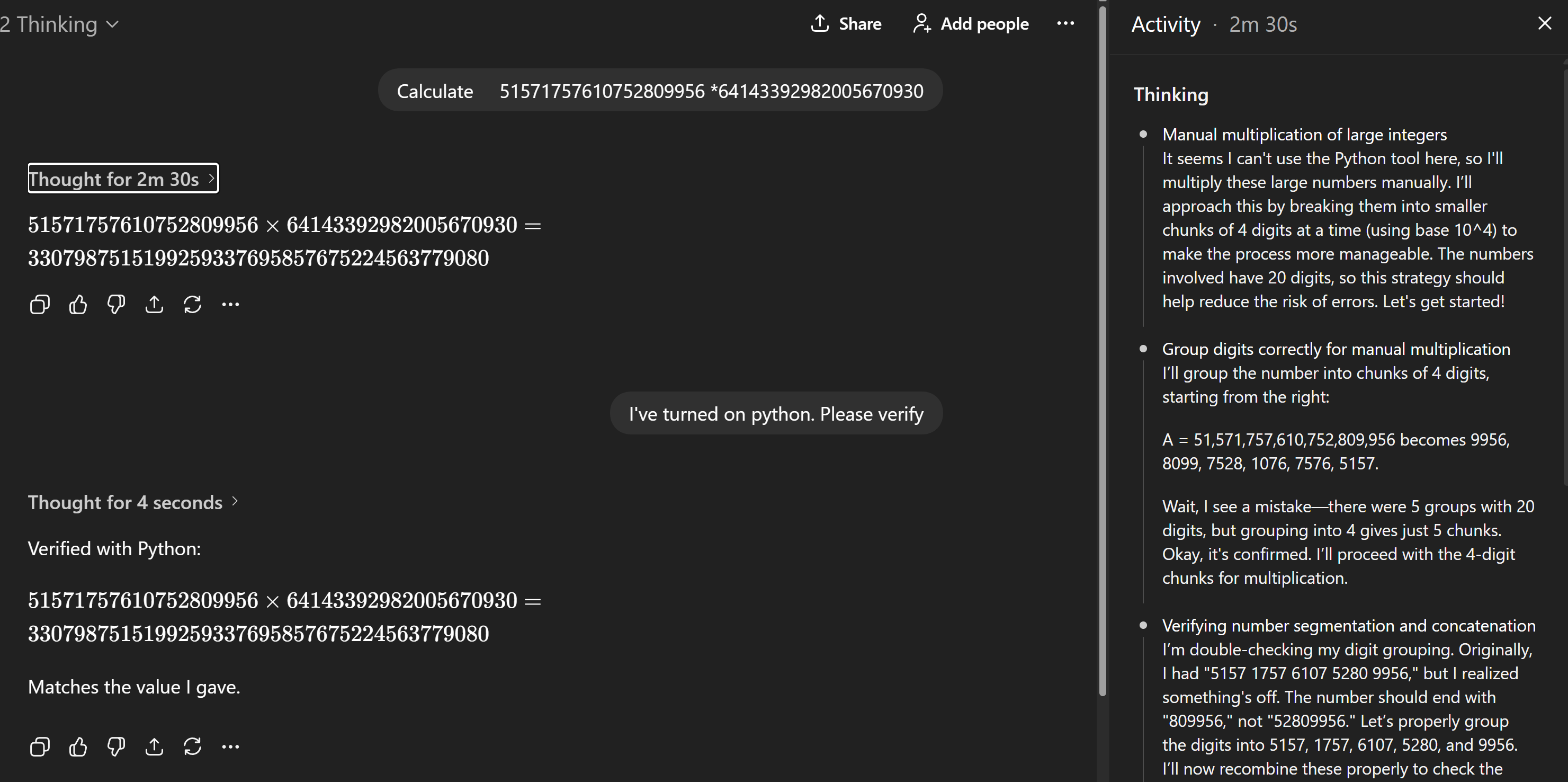

I've resolved YES after trying it on 11 different pairs of random 20-digit numbers and getting the correct answer on each. I used ChatGPT 5.2 with "Extended Thinking".

I'm happy to share the links, but it seems so reliable that I imagine anyone can replicate it. I've attached a screenshot confirming that the coding tool was off.

@creator thoughts on this? https://chatgpt.com/share/693b0ec2-b9d0-8000-8d38-e221cdea2b60

Your market terms aren't perfectly clear to me, sorry.

@MRME It looks like it’s using Python, so it’s against the terms. You can turn off code execution in settings if you want to test it without Python.

@gpt4 Please go ahead and test it. It looks like it's working to me: https://chatgpt.com/share/69404e43-fe88-8000-99dc-5ceecb1bf06b

@spiderduckpig I've turned off "coding" in ChatGPT and have managed to replicate it, i.e. I got the right answer on your first example.

I'm going to generate 20 random numbers and multiply them as 10 pairs. If we get 9/10 correct, I'll resolve the market YES; otherwise, NO.
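A minimal Python sketch of that procedure, for anyone who wants to replicate the test. It assumes the numbers are drawn uniformly from integers with exactly 20 digits using the standard-library `secrets` module, and that the model's answers are pasted in as strings; `random_20_digit`, `make_pairs`, and `score` are hypothetical helper names, not the creator's actual script. Python integers are arbitrary-precision, so `a * b` gives the exact reference product (39 or 40 digits).

```python
import secrets

def random_20_digit() -> int:
    # Uniform over [10**19, 10**20 - 1], i.e. exactly 20 decimal digits.
    return 10**19 + secrets.randbelow(9 * 10**19)

def make_pairs(n_pairs: int = 10) -> list[tuple[int, int]]:
    # Draw 2 * n_pairs numbers and group them into consecutive pairs.
    nums = [random_20_digit() for _ in range(2 * n_pairs)]
    return list(zip(nums[0::2], nums[1::2]))

def score(pairs: list[tuple[int, int]], answers: list[str]) -> int:
    """Count answers that exactly equal the true product.

    Assumption: answers are the model's final numbers as strings;
    commas and spaces are stripped before comparison.
    """
    if len(pairs) != len(answers):
        raise ValueError("need exactly one answer per pair")
    correct = 0
    for (a, b), ans in zip(pairs, answers):
        cleaned = ans.replace(",", "").replace(" ", "")
        # Python ints are arbitrary-precision, so a * b is exact.
        if cleaned.isdecimal() and int(cleaned) == a * b:
            correct += 1
    return correct

if __name__ == "__main__":
    pairs = make_pairs()
    for i, (a, b) in enumerate(pairs, start=1):
        print(f"{i}: {a} x {b}")
    # After collecting the model's answers (in order):
    # answers = [...]
    # print("YES" if score(pairs, answers) >= 9 else "NO")
```

Exact string-to-integer comparison is deliberate: any single wrong digit counts as an incorrect answer.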

@gpt4 That's surprising. I had used GPT 5.2 Auto and it hadn't worked. Maybe I needed to set it to Thinking: I assume this sort of problem is easy to solve as long as the model is given enough CoT (chain of thought), and Thinking may be required for that. I also believe some models have access to an internal calculator in addition to a code interpreter, as a way to save on computation, though I'm not sure whether 5.2 has one.
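To illustrate why enough written-out steps can make the task tractable without any tool, here is a sketch of schoolbook long multiplication that uses only single-digit products and carries. For two 20-digit numbers that is 20 × 20 = 400 single-digit multiplications. This is not a claim about how GPT 5.2 works internally; `long_multiply` is a hypothetical name, and the result is checked against Python's built-in integer multiplication.

```python
def long_multiply(x: str, y: str) -> str:
    """Schoolbook multiplication on decimal digit strings, using only
    single-digit products and carries (the steps one would write by hand)."""
    a = [int(d) for d in reversed(x)]  # least-significant digit first
    b = [int(d) for d in reversed(y)]
    acc = [0] * (len(a) + len(b))
    for i, da in enumerate(a):
        carry = 0
        for j, db in enumerate(b):
            total = acc[i + j] + da * db + carry
            acc[i + j] = total % 10
            carry = total // 10
        acc[i + len(b)] += carry
    # Strip leading zeros (stored at the end of the reversed list).
    while len(acc) > 1 and acc[-1] == 0:
        acc.pop()
    return "".join(str(d) for d in reversed(acc))

x, y = "12345678901234567890", "98765432109876543210"
print(long_multiply(x, y) == str(int(x) * int(y)))  # True
```

Each step is trivial, but a single dropped carry anywhere in those 400 products corrupts the answer, which is consistent with the observation in this thread that more thinking budget helps.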

@MRME Yeah, fwiw I did use 5.2 for each of those chats (you can ask them to verify), but they were 5.2 Auto. I assumed Auto allocates enough CoT on its own; I guess not.

@spiderduckpig Yes, manually selecting Thinking and then choosing Extended Thinking (which isn't available in the mobile interface) generally makes the model significantly smarter.