Resolves YES if, via some "magic" (read: technology), I am able to understand in English what my dog is saying.

Or if I can speak in English and my dog is able to hear that in dog language.

Yes, I know we can communicate with animals and I can read the eyes or the behaviour of the dog to know what it's saying. This market is explicitly about speech.

"my dog" is just a placeholder of course. Doesn't have to be my dog, could be any dog.

People are also trading

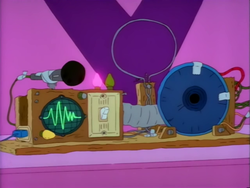

This needs clearer resolution criteria before I can participate. I would consider this as being “able to understand in english what my dog is saying”. https://youtu.be/-ZDM1tQn5hg

@AustinThomas as described below, it's about a fairly robust model that translates barks into English (or vice versa), even if only for one species, though it shouldn't work for just a single dog. It's not about a dog barking in a way that sounds similar to a word.

@firstuserhere It’s understood that barks do not have meaning like human language, but communicate emotional states. So if a program could classify a bark as, say, happy or aggressive, would that satisfy your criteria?

@AustinThomas No, that wouldn't. It could use those emotional states (sentiment analysis) and combine them with other relevant factors, such as the number of times the dog barked and the context of the bark, to create a cohesive sentence that communicates the dog's state as if spoken in English.
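A toy sketch of the pipeline described above, assuming some upstream model already labels each burst of barking with an emotion and a context tag (that model is the hard, unsolved part and is not shown). The labels, the phrase tables, the `BarkEvent`/`to_sentence` names, and the "three or more barks means urgent" rule are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical emotion labels -> English phrases (made up for illustration).
PHRASES = {
    "happy": "I'm excited",
    "aggressive": "Back off",
    "anxious": "I'm worried",
}

# Hypothetical context tags -> English clauses (also made up).
CONTEXTS = {
    "doorbell": "someone is at the door",
    "leash": "it's time for a walk",
    "stranger": "I don't know that person",
}


@dataclass
class BarkEvent:
    emotion: str     # assumed output of a hypothetical bark-sentiment classifier
    bark_count: int  # number of barks in the burst
    context: str     # assumed context tag (what was happening around the dog)


def to_sentence(event: BarkEvent) -> str:
    """Glue emotion, bark count, and context into one English sentence."""
    phrase = PHRASES.get(event.emotion, "Something is up")
    reason = CONTEXTS.get(event.context, "something is happening")
    # Assumption: a longer burst is treated as more urgent, so it ends with "!".
    end = "!" if event.bark_count >= 3 else "."
    return f"{phrase}, {reason}{end}"
```

For example, `to_sentence(BarkEvent("happy", 4, "leash"))` returns "I'm excited, it's time for a walk!". Everything interesting (getting reliable emotion and context labels from audio) happens before this step; the sketch only shows how the pieces could be glued into a sentence.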

@firstuserhere I don't know if what you're looking for is possible. As another user mentioned, if you screamed, it couldn't be translated into Chinese. You could measure the pitch, duration, or frequency of the scream, but that wouldn't help translate it into Chinese. I get that dogs may communicate vocally, but the specific meaning of each bark, whimper, etc. is not fixed and can vary depending on the individual dog and situation.

I think that even if we were able to develop a system for translating dog barks into human speech, it is unlikely that the resulting output would be a "cohesive sentence", because dogs are not thinking within a framework of language, just as a scream is not within the framework of language.

@AustinThomas Perhaps. It's unlikely for sure. It might still be possible to extract a few meaningful features and glue them together by taking context into consideration, though that seems like a lot of work.

@AustinThomas It's only because the time frame is long enough that I'm betting YES here; I sold my YES shares in the 2025 market.

If your dog learned to push buttons like the TikTok dog, would that count? IMHO the tech is there; the question is just whether you'll put in the work to train your dog.

My hot bullshit take: language is a skill that requires exercise and practice informed by trial and error. Your dog isn't speaking a language that just happens to use a different set of signals; you couldn't precisely translate its nonverbal communication into spoken language any more than you could translate human body language and nonverbal communication into spoken language.

You're essentially proposing mind reading the dog, but unlike a human, the dog doesn't even have the framework/architecture of verbal language to tap into.

@JustNo Wait, what's the TikTok dog?

Also, this isn't about other sophisticated forms of communication like the dog learning to push buttons; it's about whether there exists a technology that can translate between barks and English. Yes, the dog may not use just barks to communicate, but if we can sufficiently decode that part of the dog's communication, it would still be a major breakthrough.

Is it really mind reading? A human translator or a machine translation system also translates language to language, but we don't call it mind reading. Why? Many philosophers say that thoughts =/= language.

Anyway, this market is mostly for tracking the ability to translate barks into English or English into barks, or something like that.

@firstuserhere Piper the talking dog (I don't actually know much about this, but I've been impressed by what I've seen.) https://www.tiktok.com/@pipermad/

Let me take another swing at this. Here are my priors:

We can translate language to language, because they are fundamentally very similar.

Barking dogs are not speaking a language, per se. Just because they are making sounds doesn't mean they are doing the same thing as using words. If I scream in pain, you can't translate that into Chinese.

As a result:

You might be able to make a "dog whisperer" AI that could help you understand your dog, but it won't be "translating".

A dog behavior expert (human or AI) can coach you on how to communicate with your dog, but the most effective tools are going to be tone, body language, and gestures. Dogs don't communicate everything through sound, so you can't talk to them in just a series of barks.

@JustNo Interesting!

1. Okay, so language to language is one thing, but we can combine modalities now: image to language, language to image, audio, and now video too. We've even seen some examples of positive transfer: learning x in <modality 1> and transferring that knowledge to <modality 2>.

2. Sure, dog barks are sounds, but are they speech? They don't need to be a language to communicate. If a dog makes a different type of sound for a happy bark vs. a sad bark vs. a pain bark (as I think they do), then it's very much possible to understand that.

3. So we may not translate with a dog whisperer, but we could have it decode the sounds into a set of "emotions" that model the internal representation of whatever "dog" means, and then have something fill in the gaps by connecting the subspaces containing these "emotions" decoded from the dogs' sounds.

4. Yes, agreed, there's a lot more to communication than vocalizations. With multiple modalities plus positive transfer, that might not be out of the question either. But this market is focused on speech only; I'm not quite sure how to frame a market about communication more broadly.