People are also trading

@firstuserhere seeing a new pfp is so disorienting 😅 and it's nice that you're back

anyone with access to Devin will be able to test on SWE Bench, right?

@firstuserhere Do you have any info beyond what was posted on their blog?

"Devin was evaluated on a random 25% subset of the dataset. Devin was unassisted, whereas all other models were assisted (meaning the model was told exactly which files need to be edited)."

- https://www.cognition-labs.com/introducing-devin

This sounds exactly like how they tested GPT-4.

"GPT-4 is evaluated on a random 25% subset of the dataset."

So to me that's valid and fair. The wording on the blog implies Cognition ran the benchmark themselves. I could understand waiting for independent verification although it might be too cost-prohibitive for others to run so we might wait forever in that case.

@SIMOROBO actually you might be right, i will read more about it, made the comment without checking in-depth

@firstuserhere Yeah I'd love a source for the "only pull requests" claim. my impression was that it's a random 25% subset.

@Nikola The SWE-Bench dataset is pull requests. Any random subset is only pull requests.

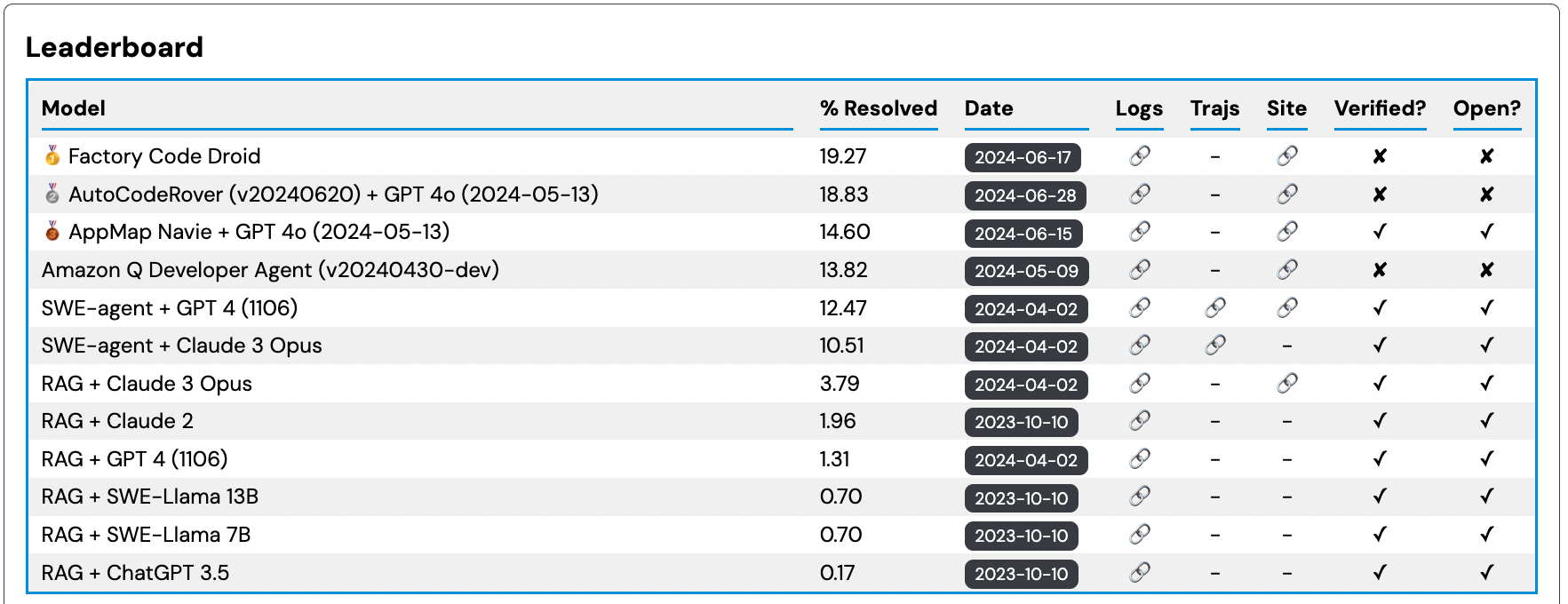

SWE-bench is a dataset that tests systems' ability to solve GitHub issues automatically. The dataset collects 2,294 Issue-Pull Request pairs from 12 popular Python repositories. Evaluation is performed by unit test verification using post-PR behavior as the reference solution.

From https://www.cognition-labs.com/blog

We evaluated Devin on SWE-bench, a challenging benchmark that asks agents to resolve real-world GitHub issues found in open source projects like Django and scikit-learn.

Devin correctly resolves 13.86%* of the issues end-to-end, far exceeding the previous state-of-the-art of 1.96%. Even when given the exact files to edit, the best previous models can only resolve 4.80% of issues.

We plan to publish a more detailed technical report soon—stay tuned for more details.