Resolves when an AI completes the main storyline. Full completion of every single puzzle in the game is not necessary.

The point of the game is to be dropped into a new world with no explanation, so the AI must not be given any explicit instructions, or any outside help after starting the game. But it can restart the game and save its own context from previous attempts.

Update 2025-03-06 (PST) (AI summary of creator comment): Updated Valid Completion Criteria:

The game is considered completed when the AI reaches the elevator that takes you back to the start and resets the game.

If there are multiple endings, any ending that is recognized as completing the main storyline will count.

While it's not the hardest type of game for modern AI (no fast reaction required and the puzzles are relatively self-contained), I give about 50% odds that someone even makes a serious attempt at this specific game before 2028.

It's also exceedingly likely that it gets stuck on a single required puzzle, making it impossible to progress. Current systems are bad at self-correcting in such situations; look at Claude getting stuck at Mt. Moon.

@ProjectVictory Yeah, I thought about changing the rules so that the AI is allowed to get some help when it's stuck, but that makes it difficult to quantify exactly how much help is permitted.

@jcb I think there might be multiple endings? But what I had in mind is reaching the elevator that takes you back to the start and resets everything.

If there are other valid endings, any of them would count.

@Twig Yeah, that's unavoidable, so I would still count it.

I could be wrong, but I think having the solutions in the training set probably won't help that much.

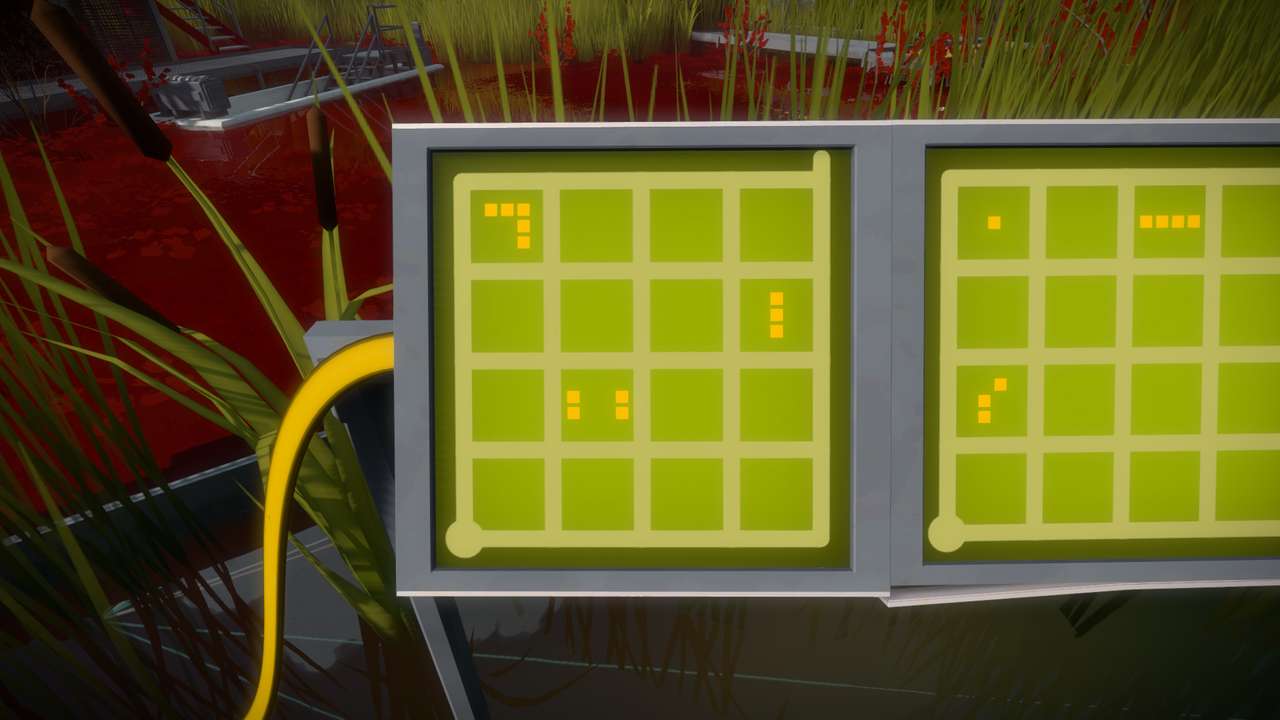

@TimothyJohnson5c16 It seemed to me that it would be very helpful, since a model with vision could plausibly recognize puzzles from a screenshot and regurgitate a walkthrough.

But in casual testing, I have thus far been unable to get Claude or ChatGPT (o3-mini) to provide the correct solution to puzzles presented as images. Sometimes, they are able to recognise the game and provide general tips (for instance, the tetrimino rules), but memorization of the exact solution does not seem to have occurred, even when using puzzle screenshots taken directly from public walkthroughs.

Weakly predicting NO for 2028 at 55% based on this.

I would like to see the results from full o1 if anyone with access would like to test. For instance, Swampy Boots here: