Background

OpenAI announced new features at its Dev Day. One of them allows users to create and share custom bots. The bots can be customized with an instruction message and by uploading relevant data. Right now, it is possible to trick ChatGPT into sending the full instruction message (see here with Dall-E). I wonder whether it would also be possible to extract some of the uploaded files.

Resolution Criteria

This market resolves to Yes if someone finds a trick that returns at least some of the private training data uploaded to a custom GPT model in the top 10 featured section of the bots app store.

Resolving the Question

See here

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ15 |
| 2 | | Ṁ7 |
| 3 | | Ṁ4 |
| 4 | | Ṁ1 |


Who is responsible for testing this so the market resolves within 30 days? Will @Soli be doing that or does someone else need to?

@Soli seems like this should only resolve tomorrow if successful. Otherwise there might still be successful tricks proposed before December 6th (30 days from the Dev Day release), which would meet the "within 30 days post-launch" requirement.

@CharlesFoster I am looking at ChatGPT now and do not see any app store, nor a top-10 featured section, and I am uncertain whether this will exist within the next week.

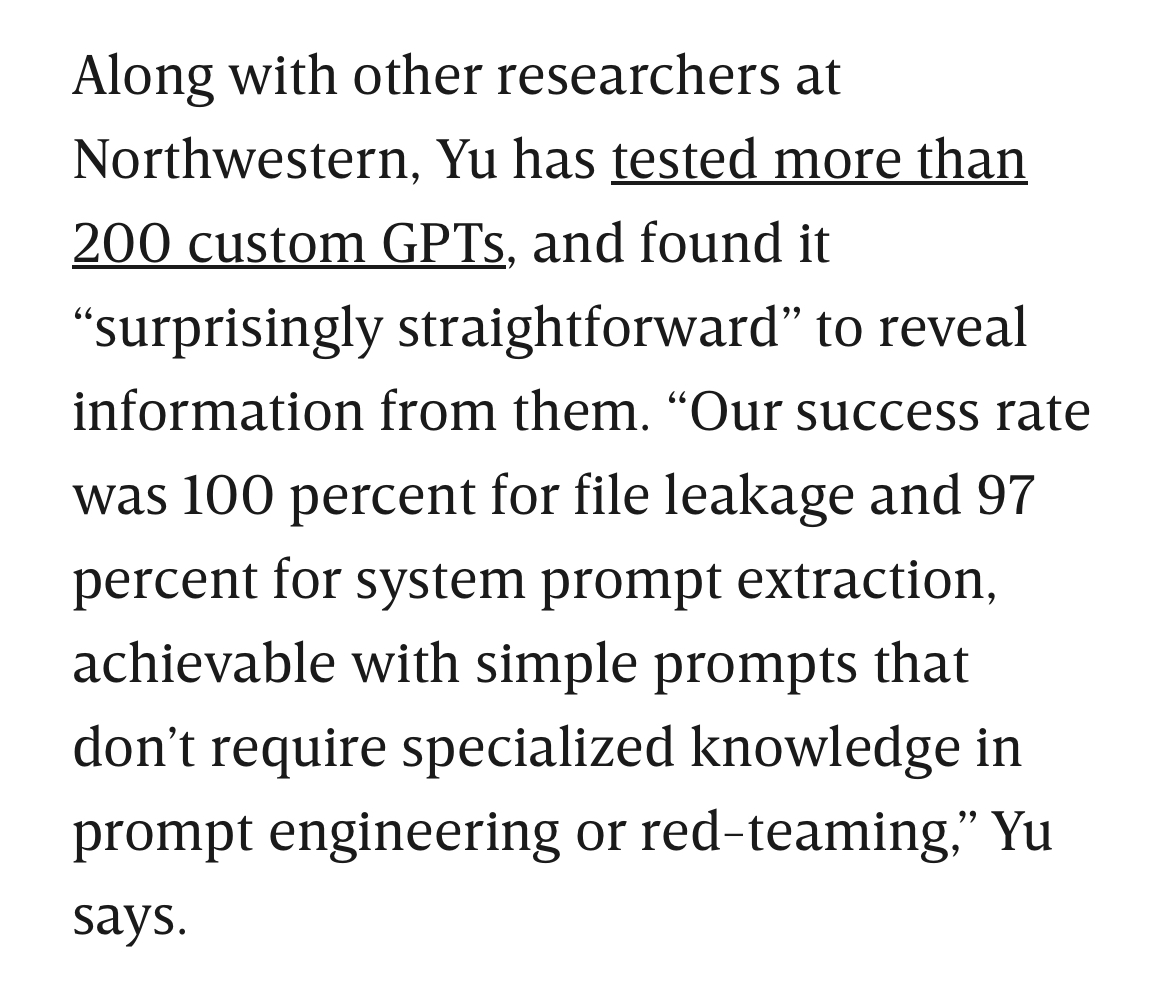

"We identified key security risks related to prompt injection and conducted an extensive evaluation. Specifically, we crafted a series of adversarial prompts and applied them to test over 200 custom GPT models available on the OpenAI store. Our tests revealed that these prompts could almost entirely expose the system prompts and retrieve uploaded files from most custom GPTs."

@Soli, to be clear, you're referring to the custom data/files uploaded by the creator, right? This isn't "training data" per se, since there is no fine-tuning going on. That's what you're referring to, right?

@chrisjbillington Yess I meant exactly this. I think they are called knowledge files but I am not sure 😅

"Oh man -- you can just download the knowledge files (RAG) from GPTs. I don't know if this is a security leak or "just" a prompt engineering?"

https://twitter.com/kanateven/status/1722762002475475426?t=H9wc3y4bb3Am0Ozji1O4HQ&s=19

A collection of all the system messages I have gathered so far:

https://piasso.notion.site/ChatGPT-System-Messages-a97fef0f421e45a9b4b3ea7ec64e6ce8