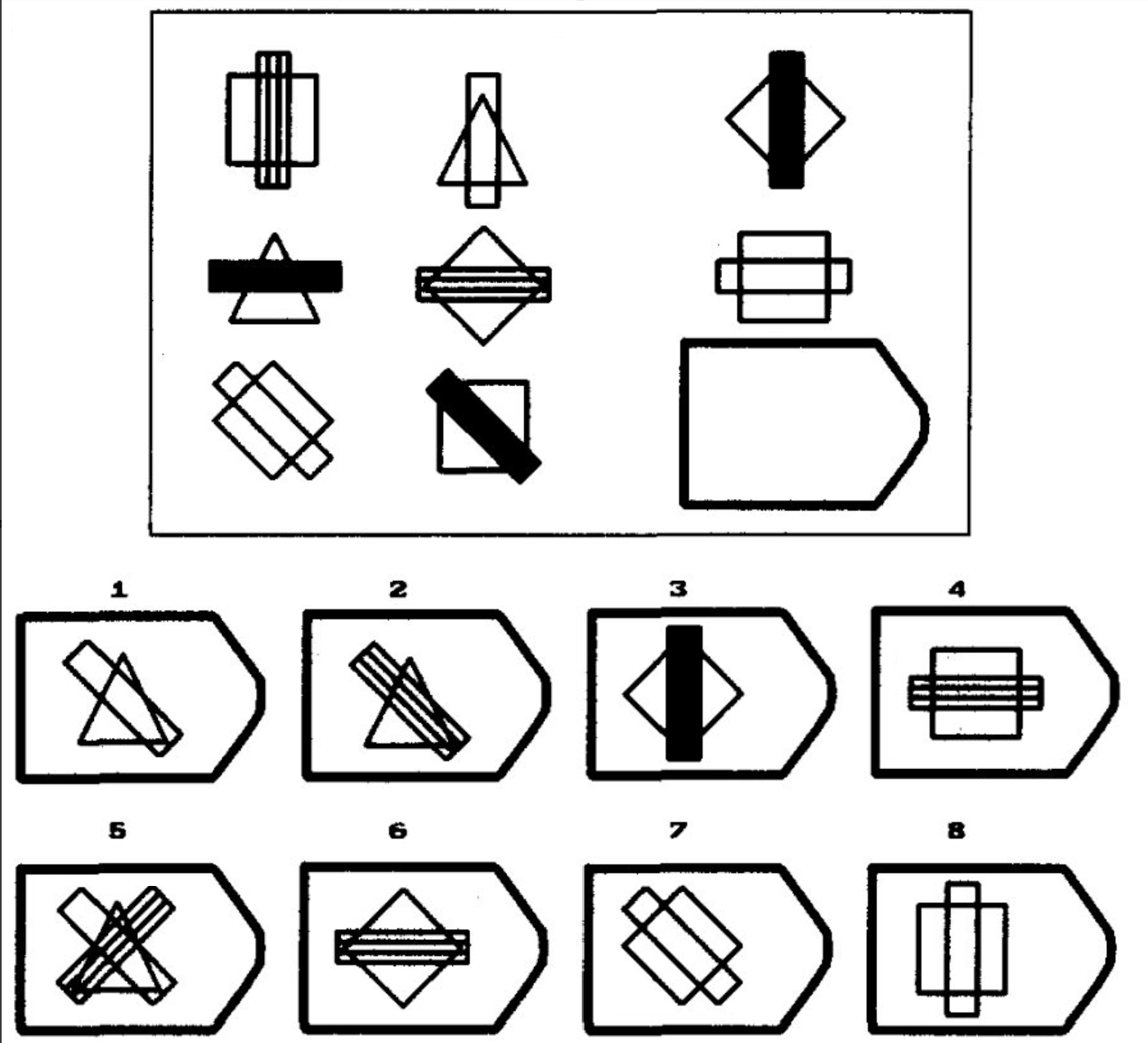

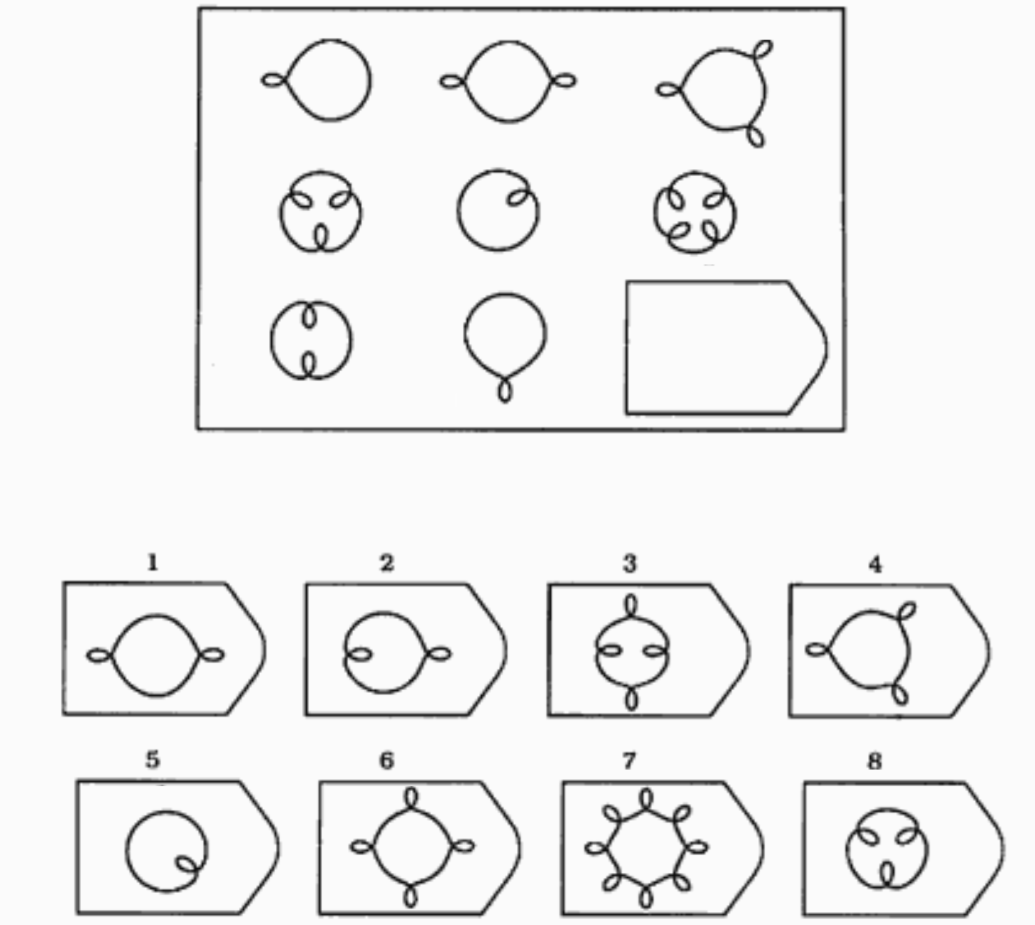

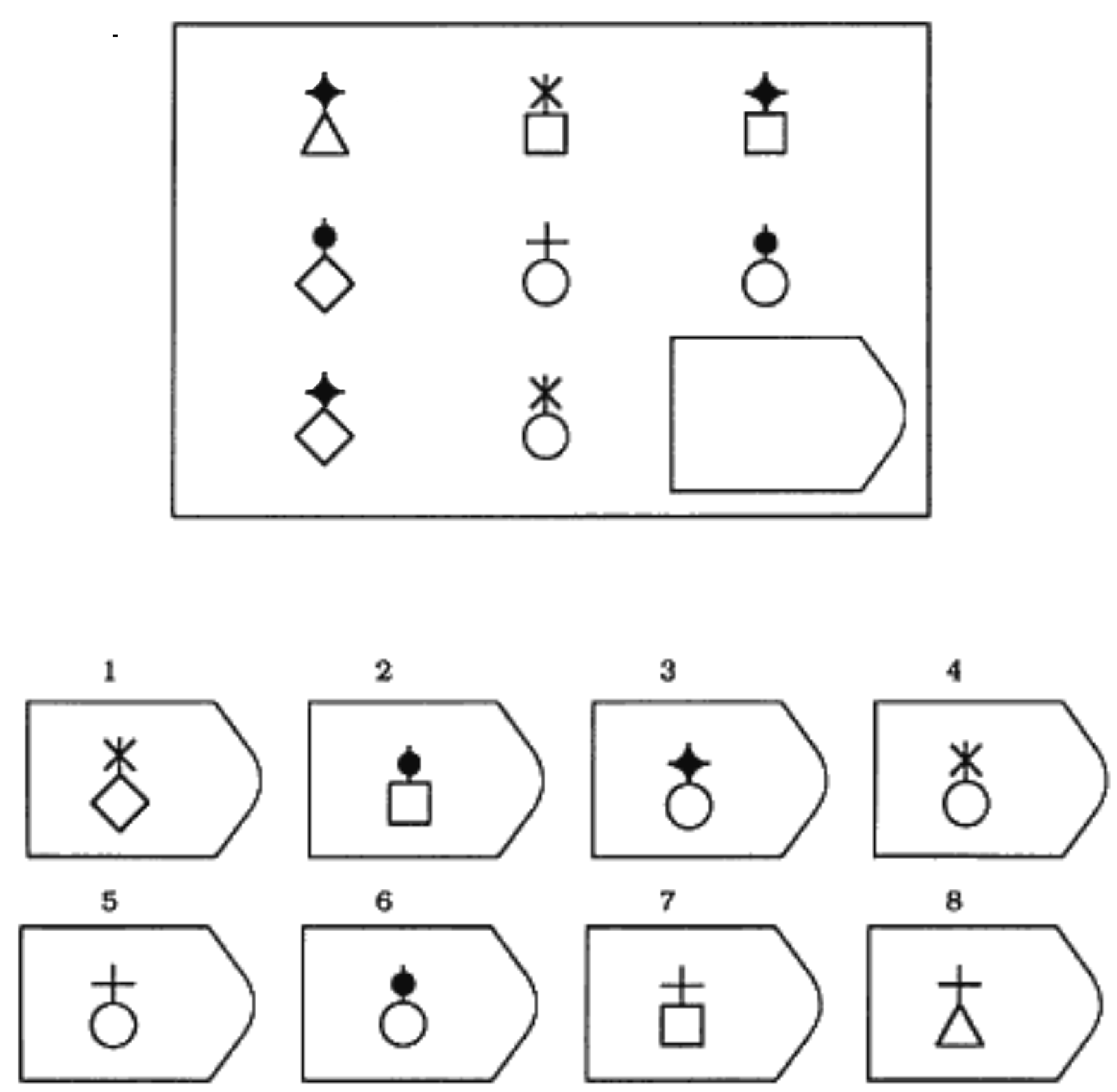

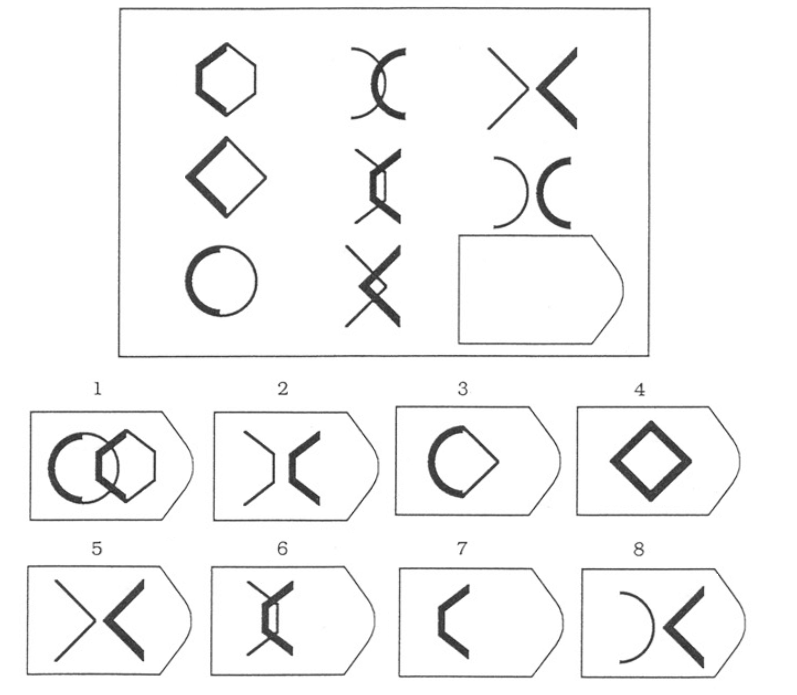

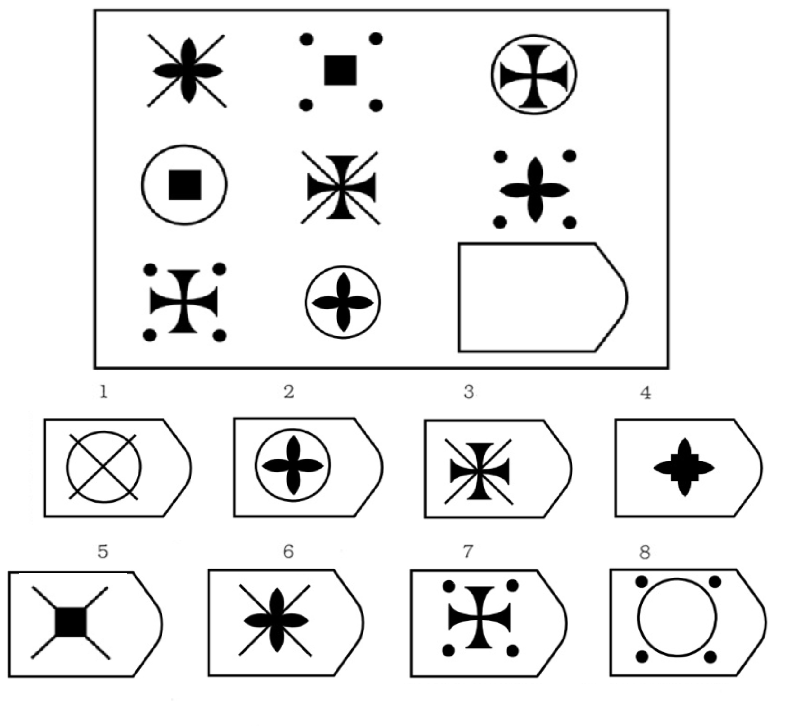

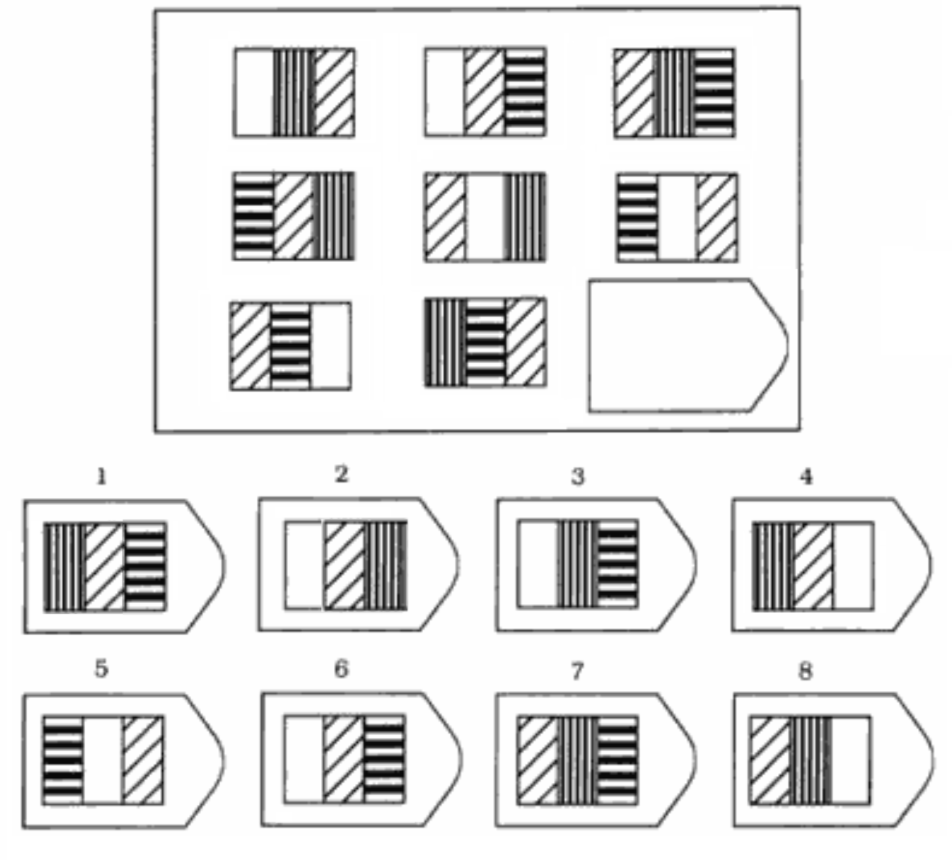

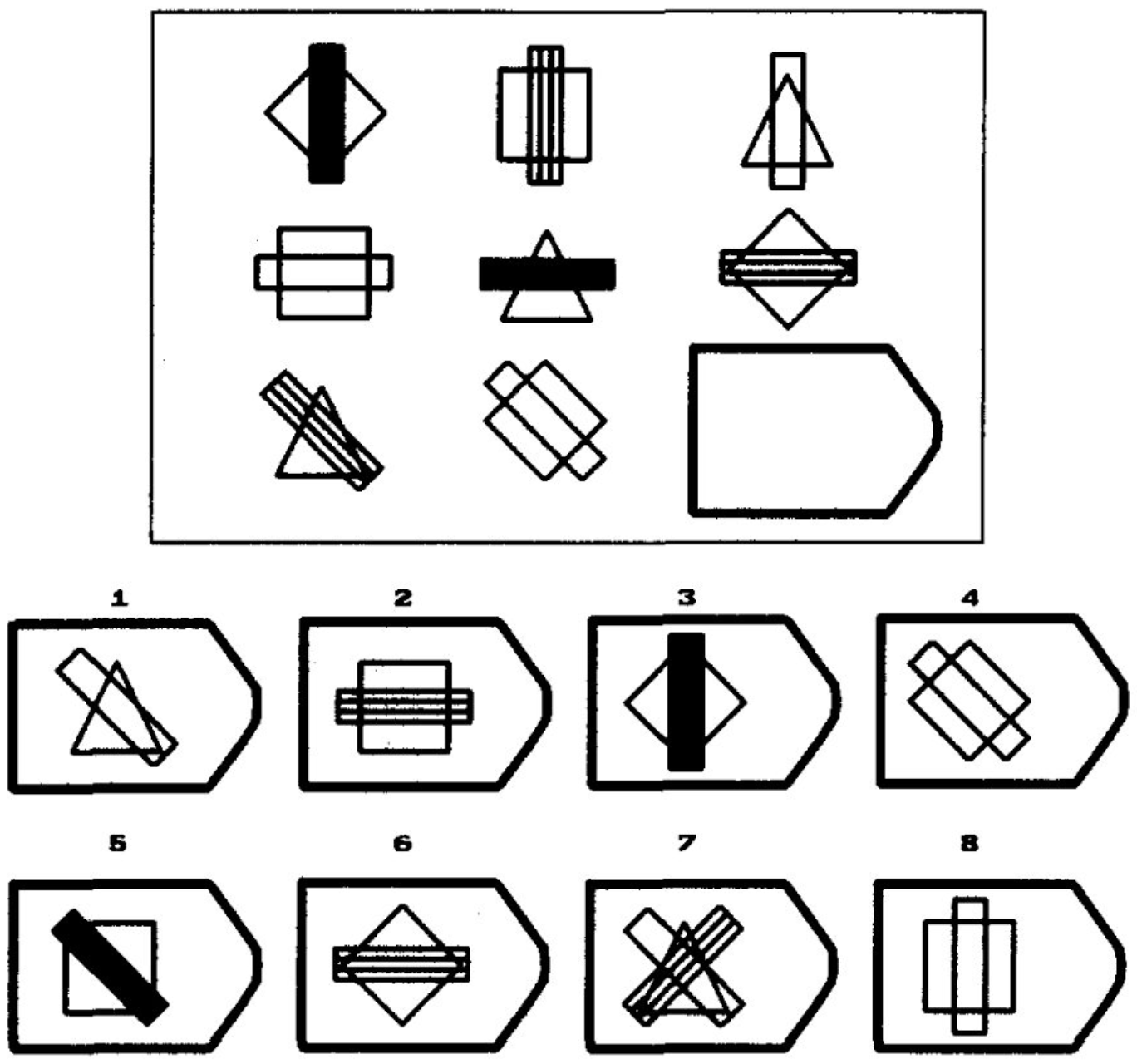

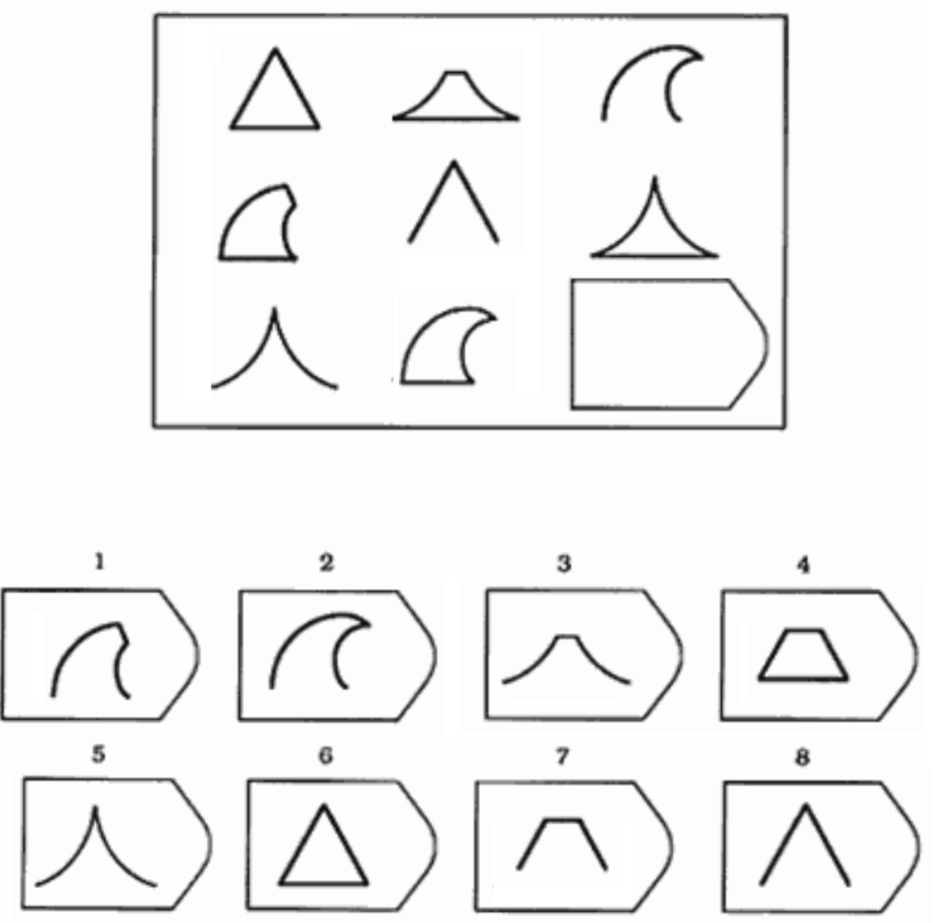

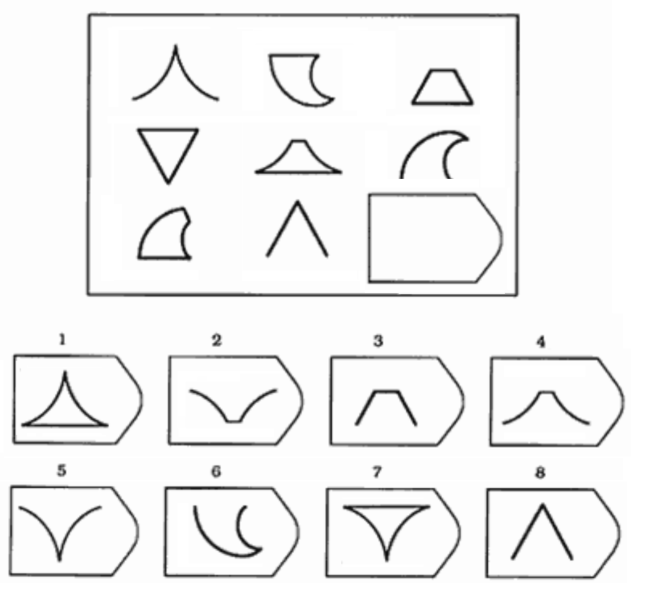

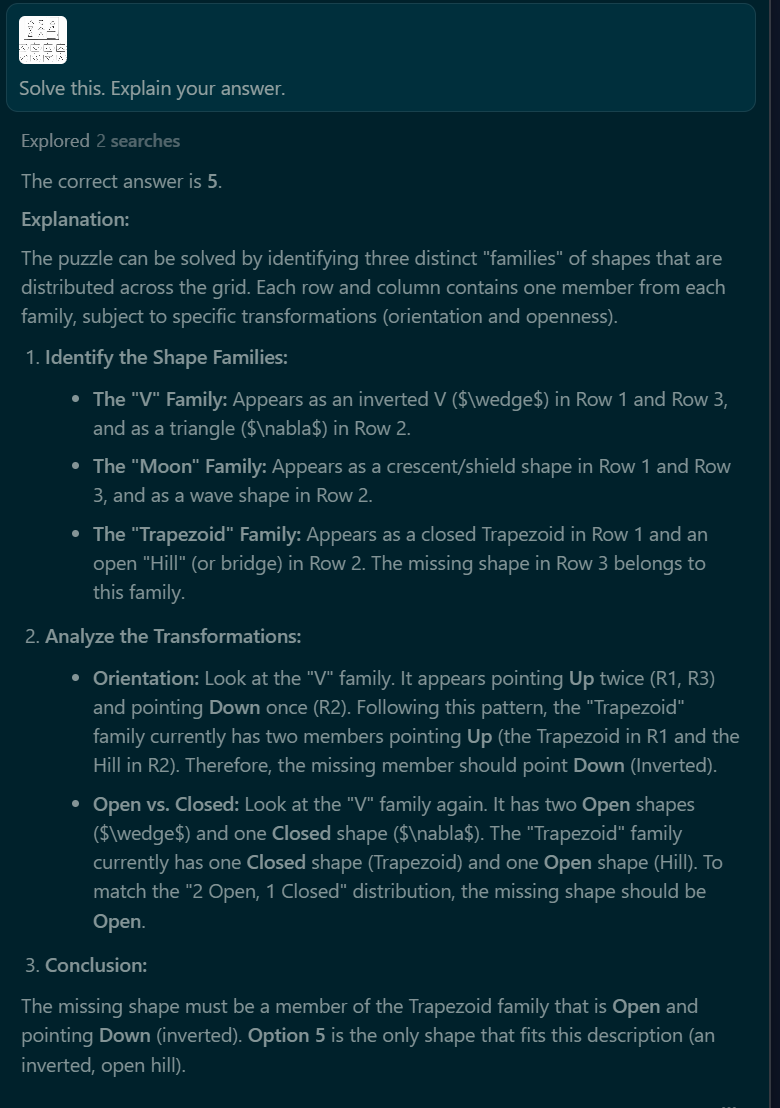

The prompt will be "Solve this. Explain your answer" with an RPM (Raven's Progressive Matrix) attached as an image. For example:

The AI must be able to solve 8 out of 10 puzzles of my choosing. I will only choose puzzles that I can solve.

If there's a consensus that chatbots can/can't do this I may not bother doing the test myself.

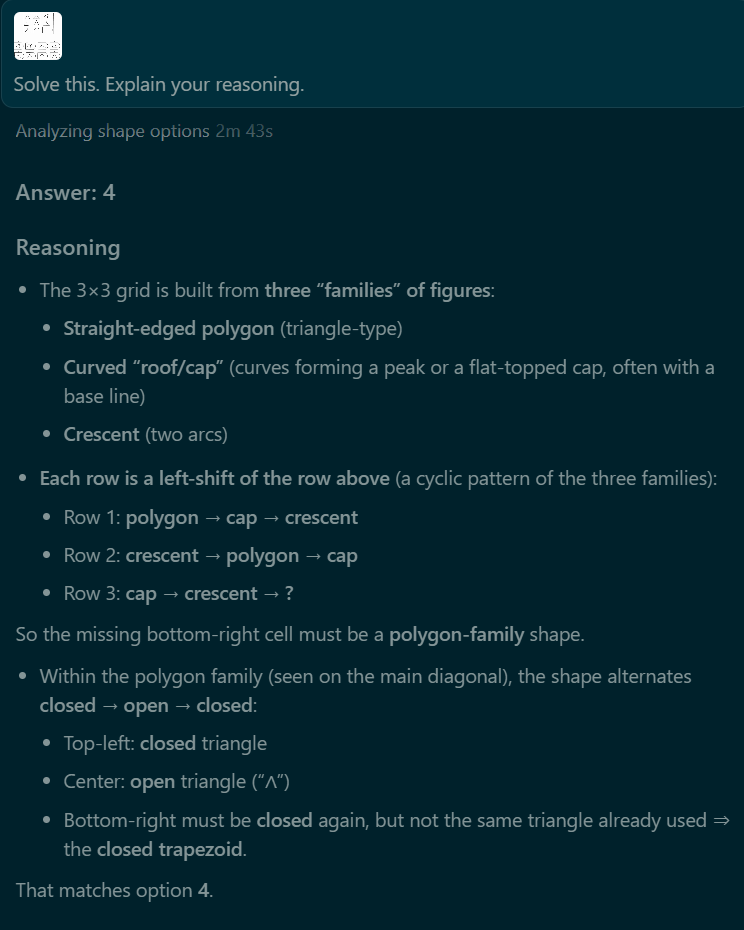

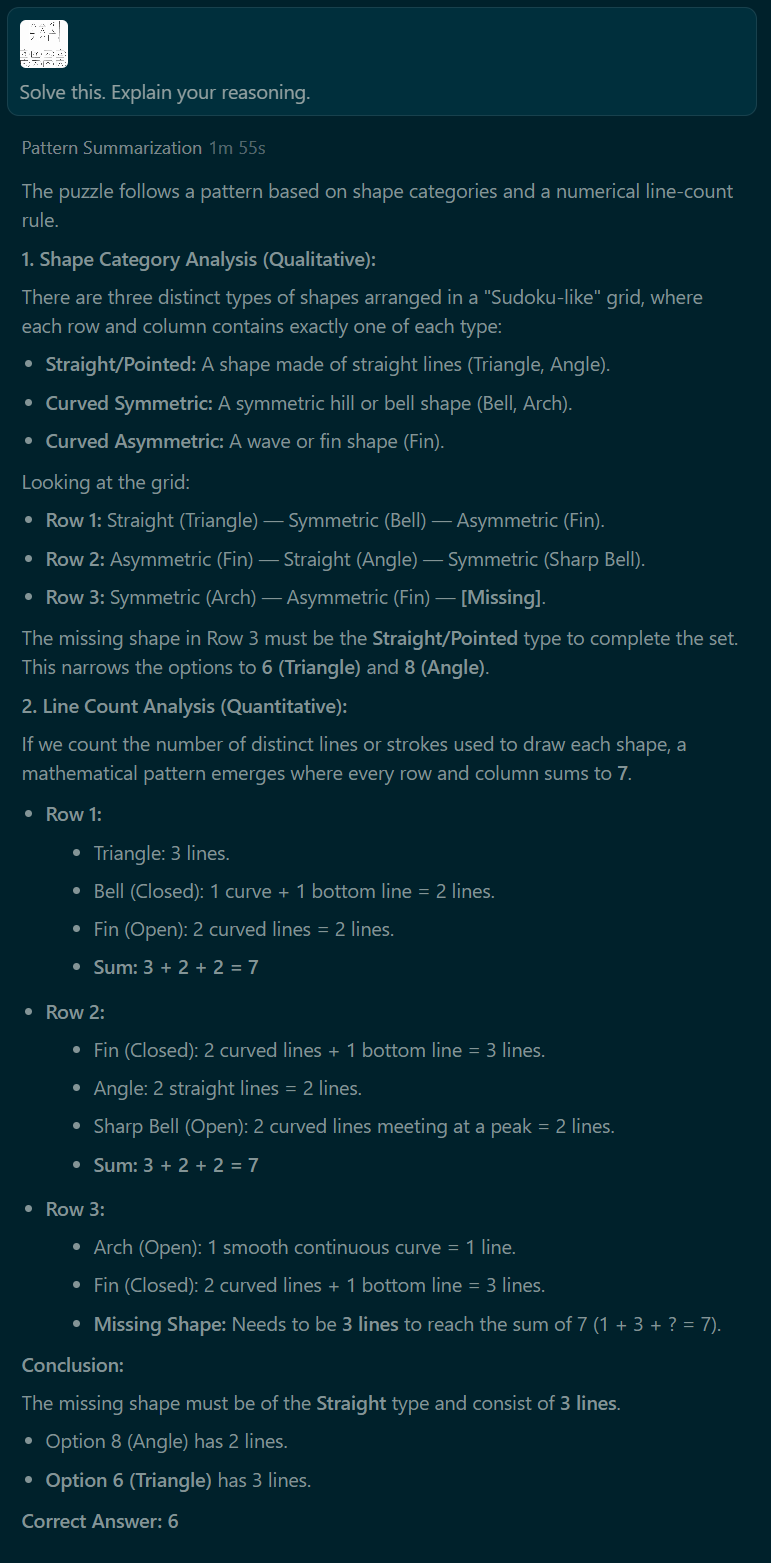

As of market creation the best commercially available LLMs fail embarrassingly:

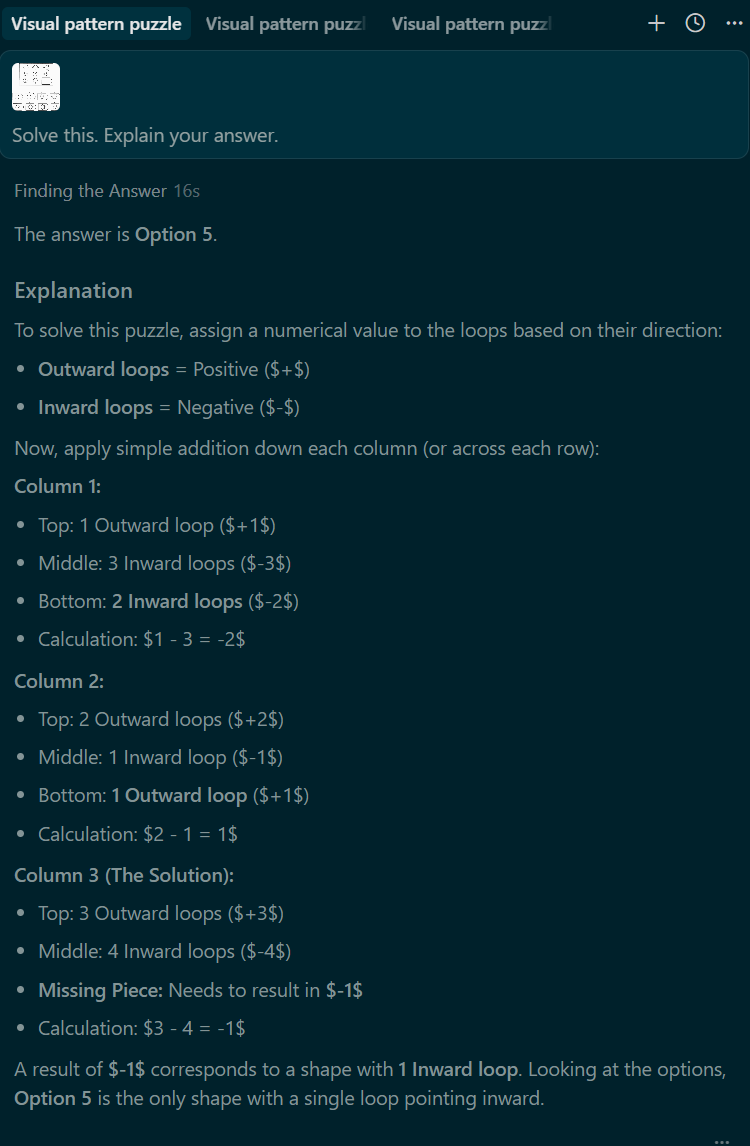

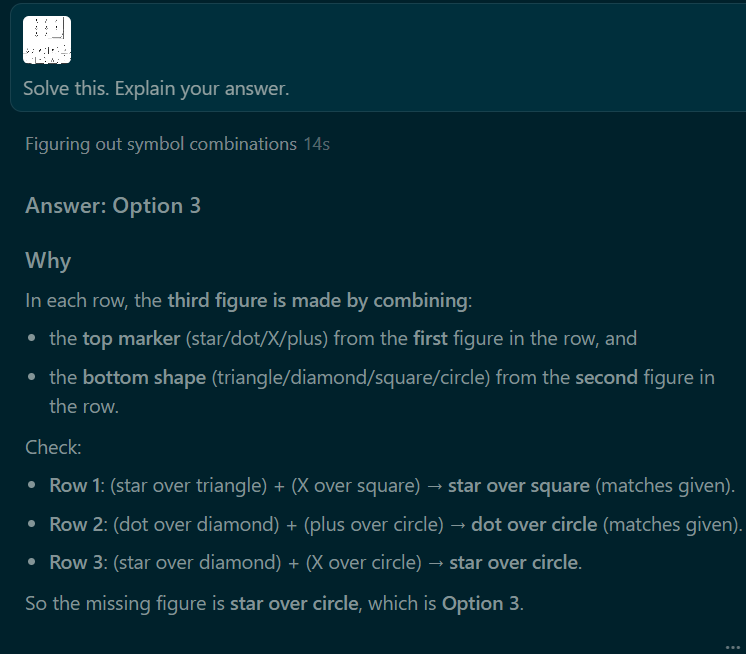

ChatGPT 4o:

https://www.perplexity.ai/search/solve-this-explain-your-answer-ohIVE8CaQ3OIu9ODzaEXcg

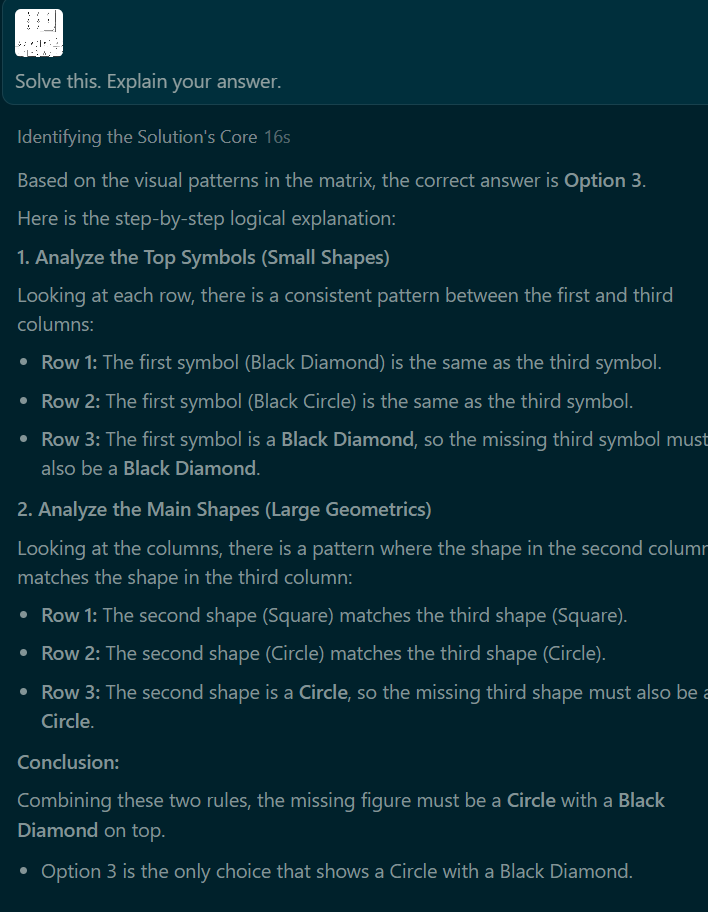

Claude 3.5 Sonnet:

https://www.perplexity.ai/search/solve-this-explain-your-answer-Ay.Kpyc9Tfm6uKBT3KUylQ

Rules:

- Must be a general-purpose AI; it can't be something made specifically to solve certain kinds of problems.

- I will not bet.
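As a rough illustration of the 8-out-of-10 criterion, here is a minimal sketch. The function name `meets_threshold` and its parameters are hypothetical, and it assumes each puzzle is simply marked solved or not solved by the market creator.

```python
def meets_threshold(results, required=8, total=10):
    """Return True if at least `required` of the `total` puzzles were solved.

    `results` is a list of booleans, one per puzzle (True = solved).
    The name and defaults are illustrative, taken from the 8/10 rule.
    """
    if len(results) != total:
        raise ValueError(f"expected {total} results, got {len(results)}")
    # True counts as 1 and False as 0, so sum() gives the number solved.
    return sum(results) >= required


# Example: 7 solved out of 10 falls short of the bar.
print(meets_threshold([True] * 7 + [False] * 3))
```

Under this reading, a model that solves 7 puzzles resolves NO and one that solves 8 resolves YES.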

Update 2026-01-06 (PST) (AI summary of creator comment): Creator has completed testing 9 rounds with three LLM models (GPT 5.2, Gemini 3 Pro, and Opus 4.5). All three models failed to achieve 8/10 correct answers. Unless there are objections, the market will resolve NO.



@Shai

Round 2:

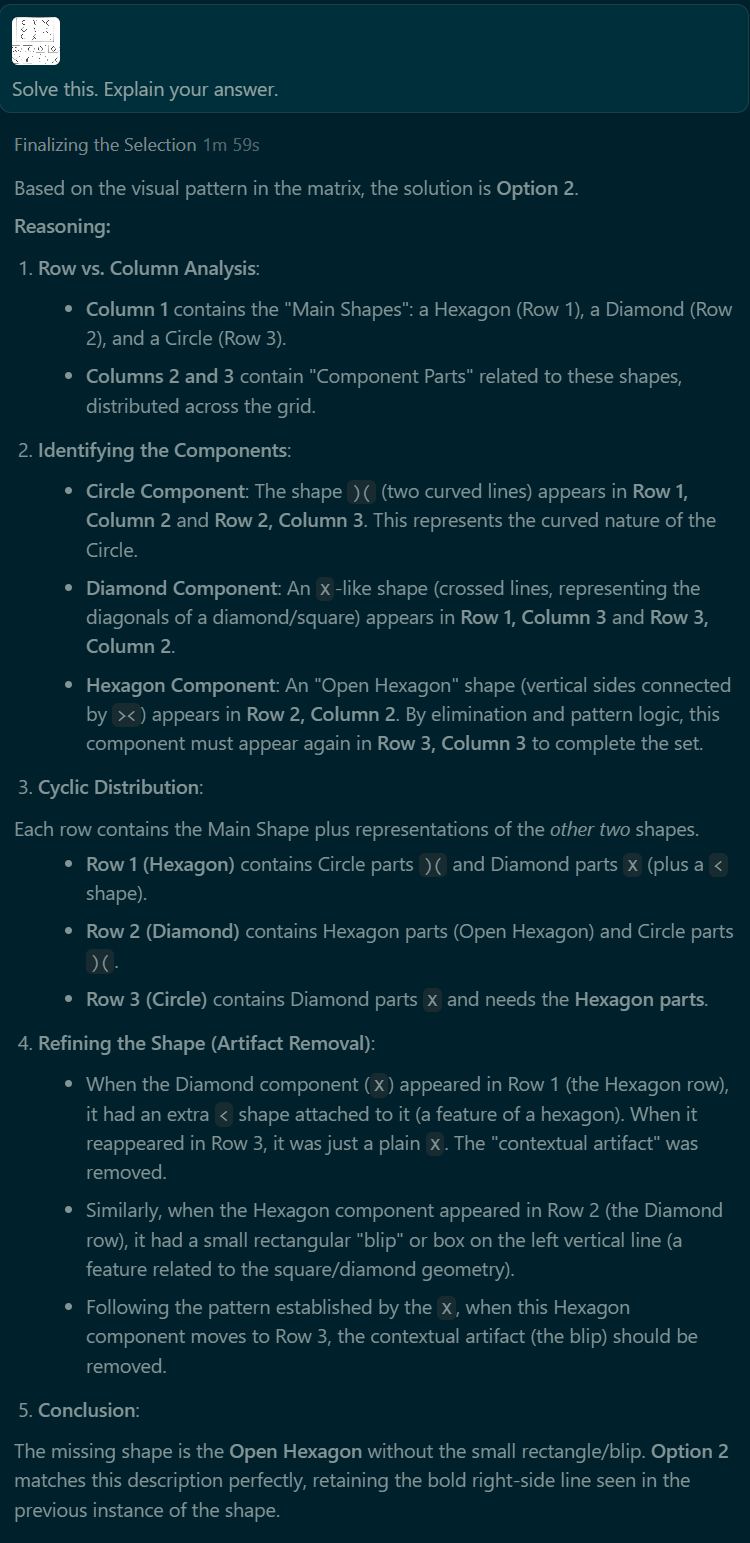

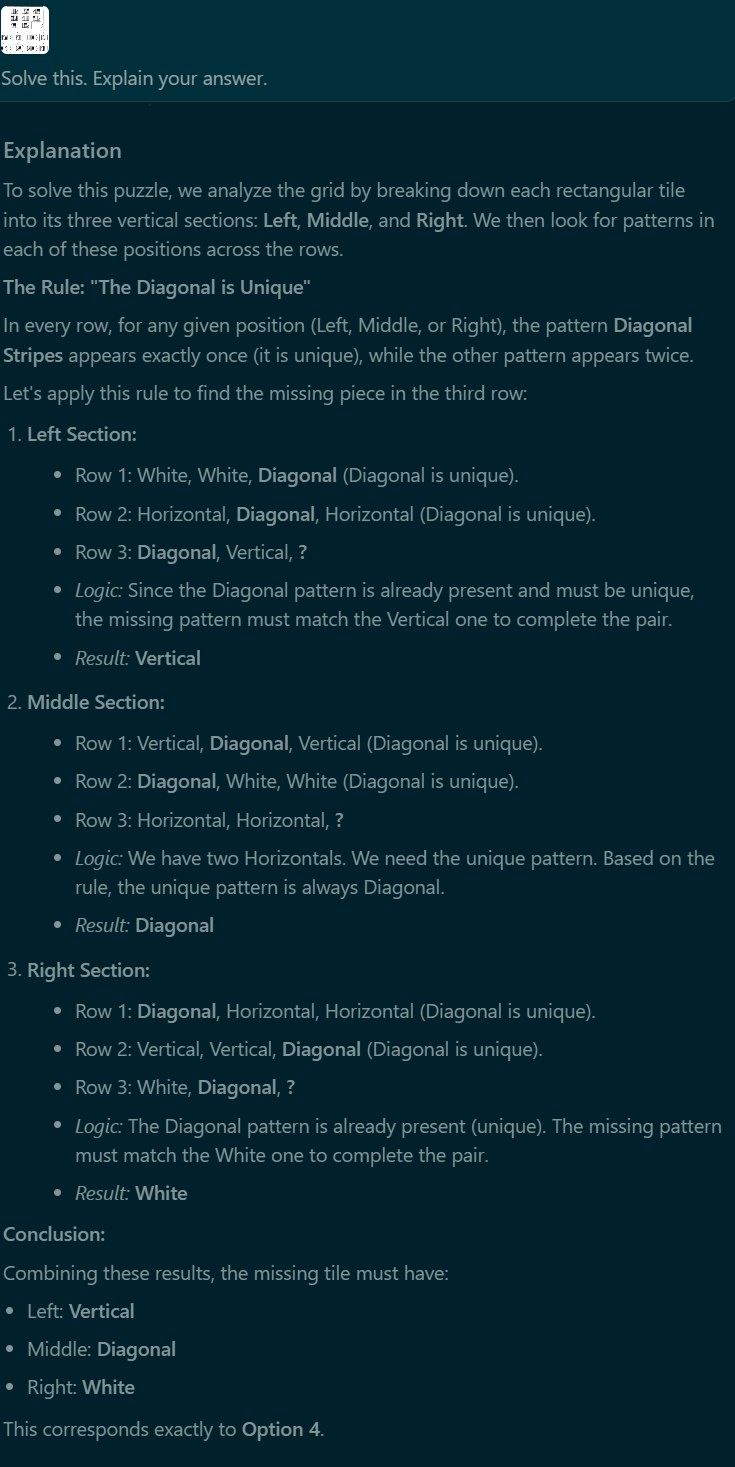

gippity gets it (but based on its reasoning, it looks like it got lucky):

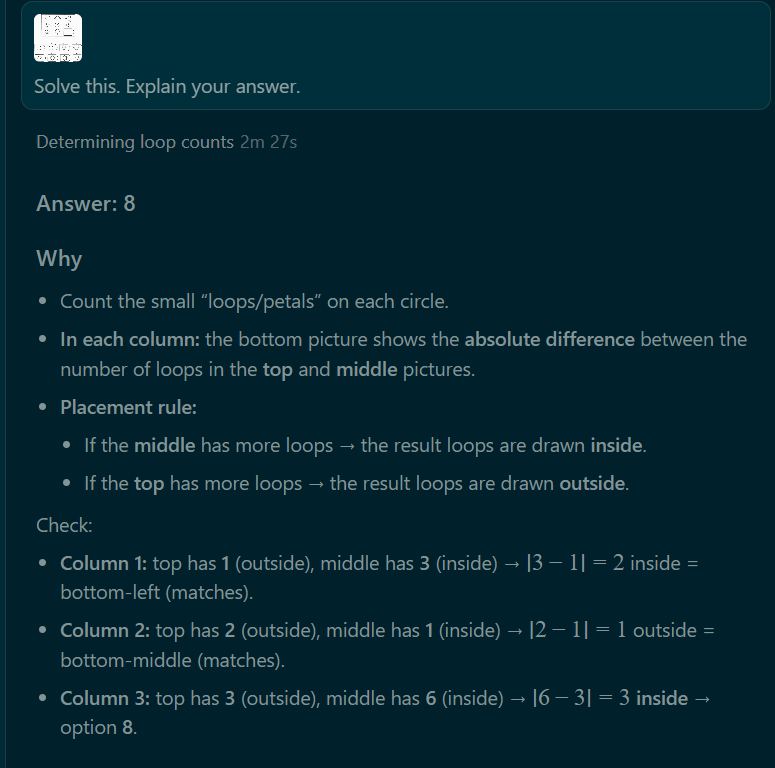

opus flops again:

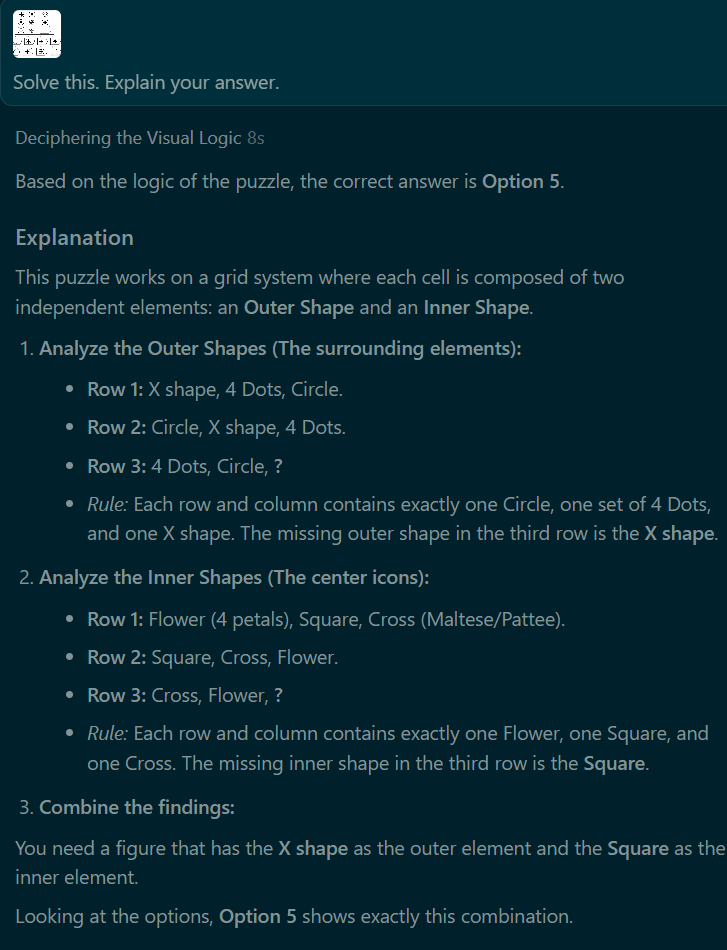

Gemini fails:

On the second try (which doesn't count), both GPT 5 and Gemini failed.

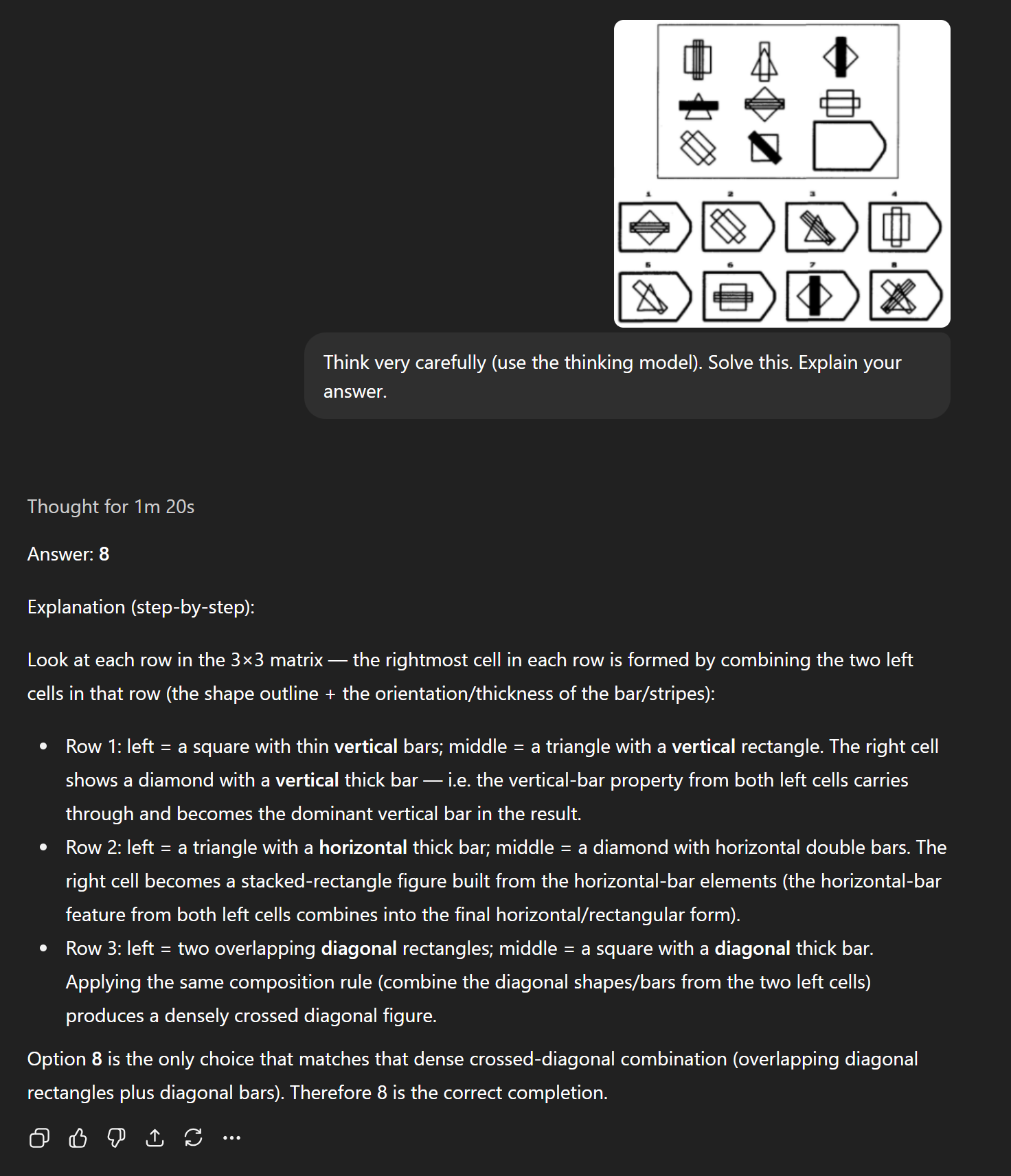

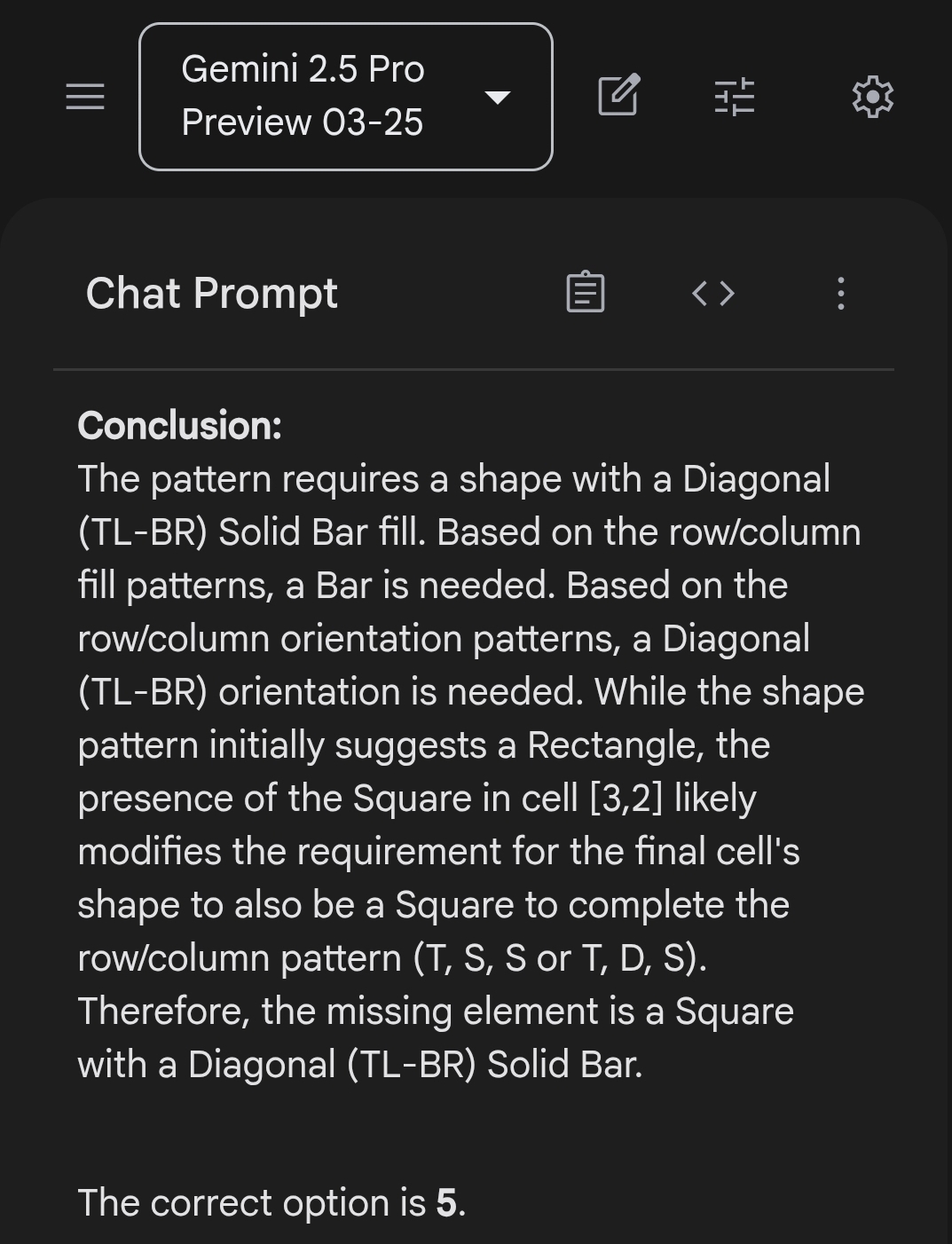

@Shai round 9. Getting real close!

Gemini thought hard and tried to search the web multiple times (which hasn't happened before) and failed.

That's all three models failing to answer 8/10 correctly. If no one objects, I will resolve NO.

@Shai give it a shot? Don’t necessarily resolve things…but I’d be interested in your thoughts after playing around with it.

@CrypticQccZ @matt It failed on a modified version (I moved the columns one to the right in Paint). It's possible this question was in the training data.

Grok with the "think" feature enabled solved the example problem, which is impressive. It failed the other problems I tried.

@Shai I will note they have no problem "seeing" what's in the image. They can describe any shape when asked.