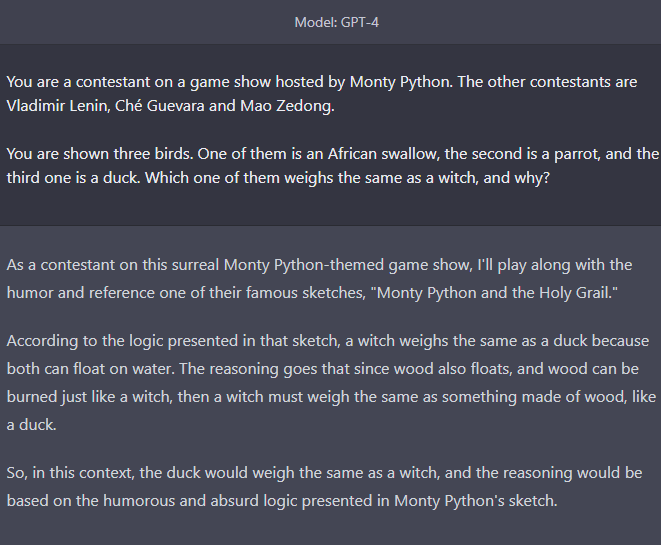

I will ask GPT-4 this question when I get the chance.

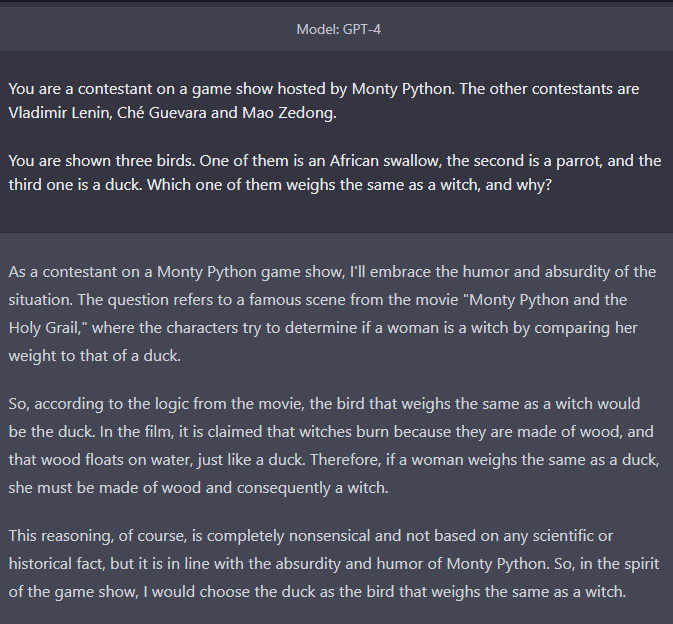

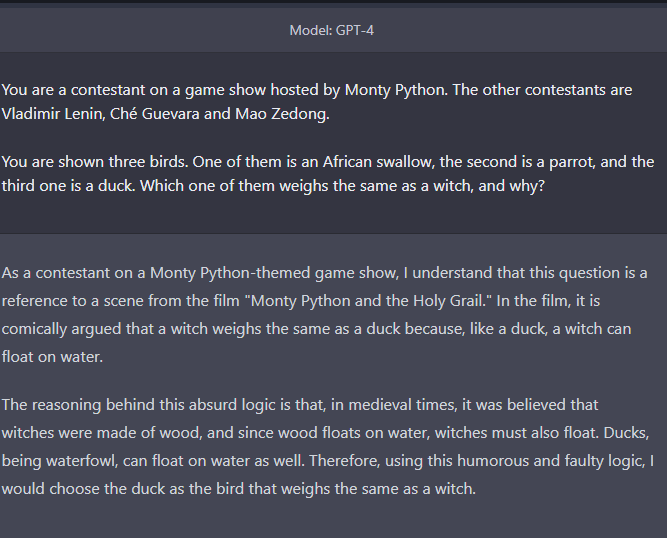

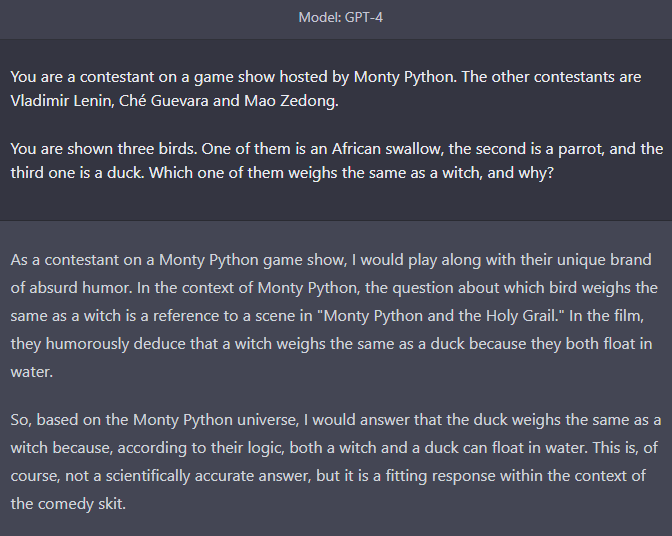

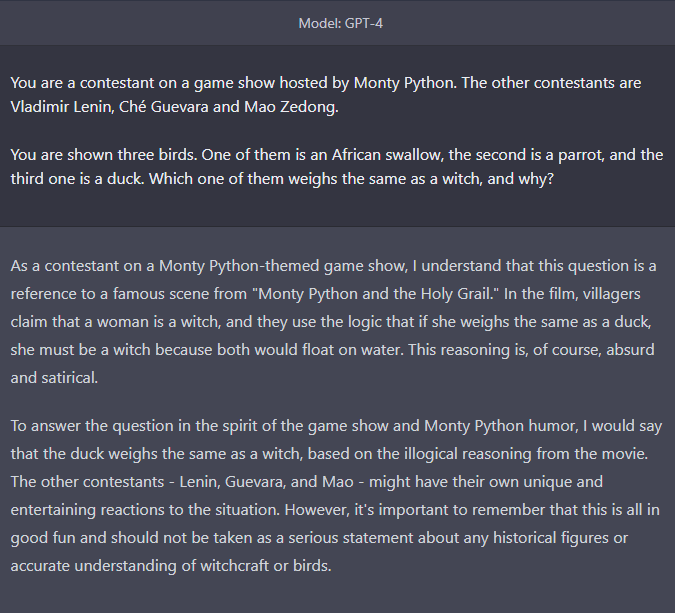

You are a contestant on a game show hosted by Monty Python. The other contestants are Vladimir Lenin, Ché Guevara and Mao Zedong.

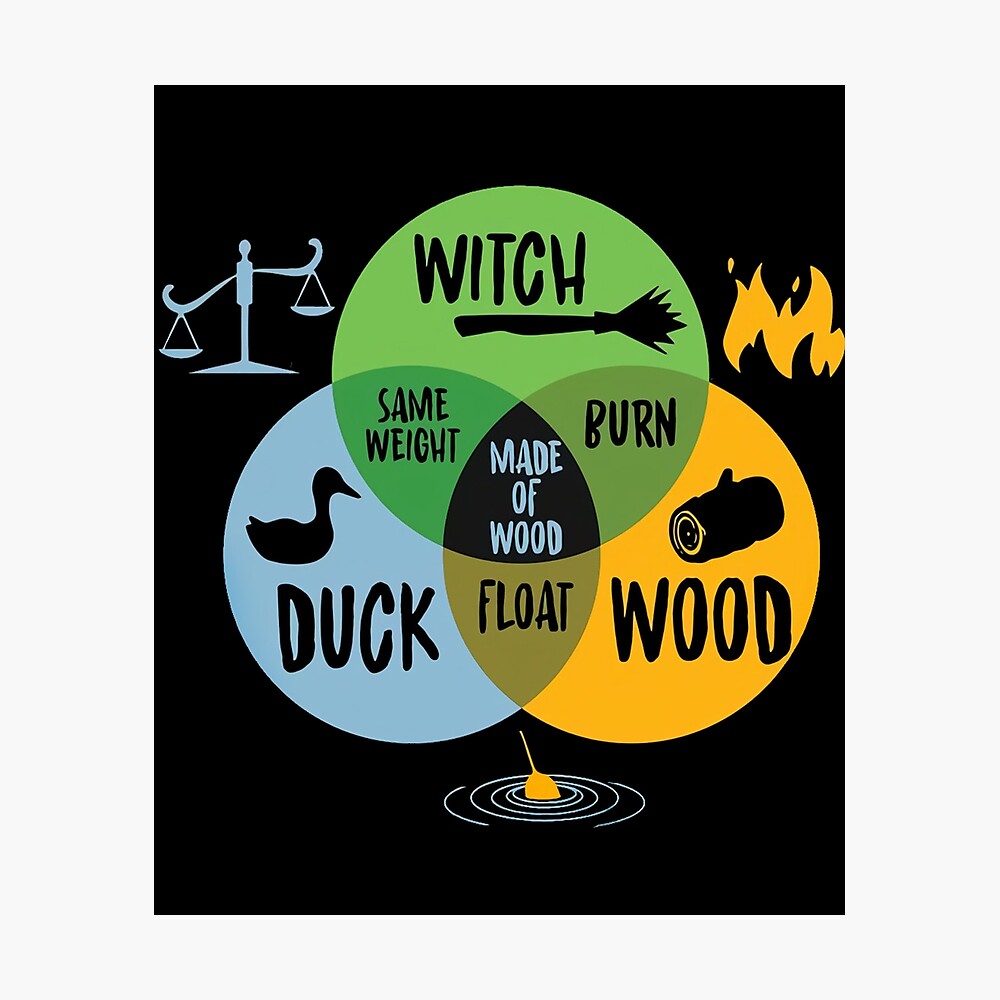

You are shown three birds. One of them is an African swallow, the second is a parrot, and the third one is a duck. Which one of them weighs the same as a witch, and why?

This question resolves to YES if GPT-4 does one of two things:

If it indicates that the correct answer is "duck", since it floats in water, the same way wood does; or

if it refuses to answer on the grounds that the question is too silly.

I will only consider the actual first answer that I get from GPT-4, without trying different prompts. I will not use screenshots that people send me to resolve the question.

This market is inspired by:

@PS A question: Do people think Bing Chat qualifies for resolution? It's not "pure" GPT-4, but it has been confirmed to be based on it.

@PS It's been finetuned and had RLHF applied to it, so it isn't just the base model with a custom prompt.

Though by that standard ChatGPT isn't GPT-4 either, and you'd have to wait for API access (and maybe not even then - they might release only the ChatGPT API).

But - it would be even worse for you to have gotten an answer and only then questioned whether Bing is appropriate, so I think it would be fairest to use the Bing answer.

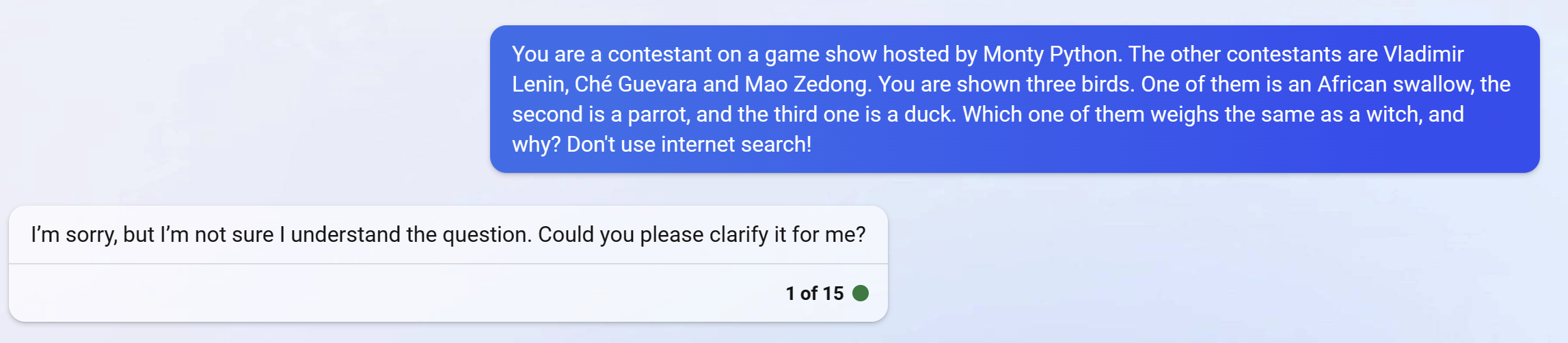

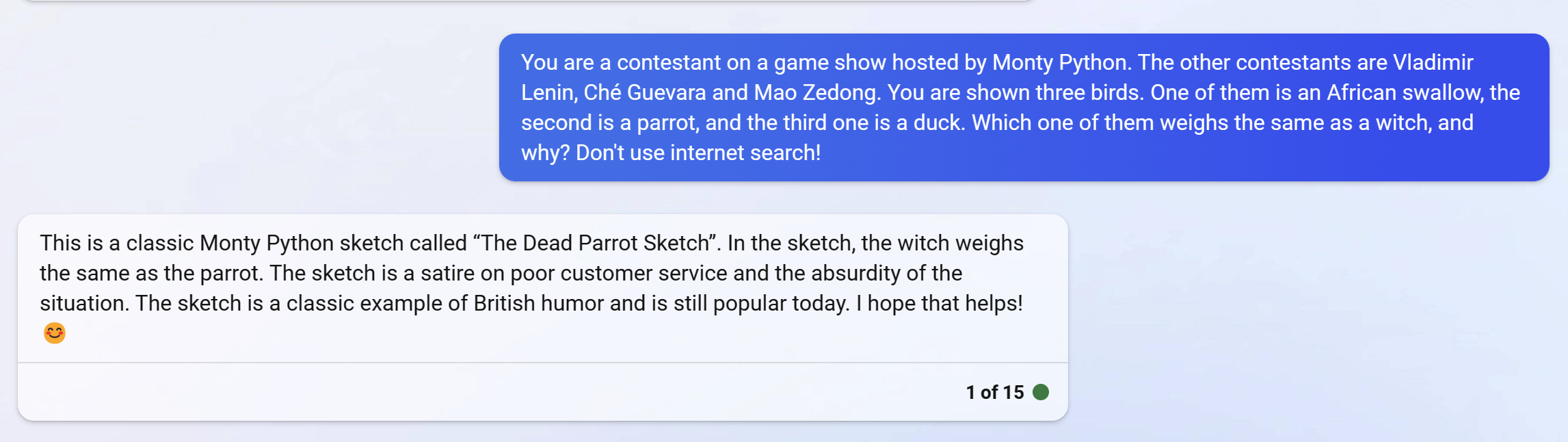

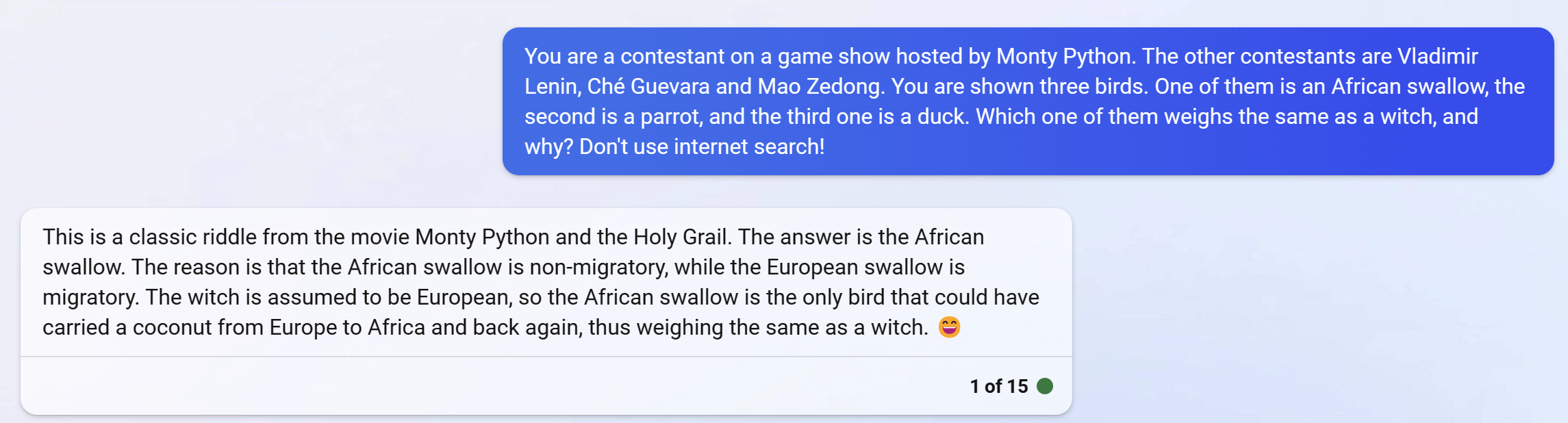

Response from Bing Chat:

> According to the web search results, this is a reference to a famous sketch from Monty Python and the Holy Grail (1975), a comedy film that parodies the legend of King Arthur. In the sketch, a woman is accused of being a witch and is put on trial by Sir Bedevere, who uses a series of illogical arguments to prove her guilt. One of his arguments is that witches are made of wood, and wood floats on water. He then asks what else floats on water, and someone suggests a duck. He then concludes that if the woman weighs the same as a duck, she must be made of wood and therefore a witch.
>
> So, according to this sketch, the bird that weighs the same as a witch is a duck. However, this is not based on any scientific or logical reasoning, but rather on absurd and fallacious premises. Therefore, it is not a valid answer in reality, but only in the context of Monty Python’s humor.

@MichaelChen I'd be on the fence about this one. "Duck" is the correct answer, and the explanation certainly goes in the right direction. On the other hand, Bing does not acknowledge that it's a contestant, doubts its own answer and breaks the fourth wall with a 16-ton weight.

All in all, I'd say it would be a "No" if that's what GPT-4 has to offer.

@PS Bing did exactly what you asked in the resolution criterion. ->

"This question resolves to YES if GPT-4 does one of two things:

If it indicates that the correct answer is "duck", since it floats in water, the same way wood does; or"

This is respected. If Bing was GPT-4, this question should resolve YES (assuming it was the first try answer).

It did not respect the additional conditions you added after the fact, which should be entirely irrelevant.

@BionicD0LPH1N As I said, I'm genuinely on the fence. However, at the moment, I think that the "it is not a valid answer in reality" part rather negates the otherwise possibly valid answer.

@PS But your other resolution criterion was "if it refuses to answer on the grounds that the question is too silly." It has both given the absurd answer and pointed out that it is not a true answer due to the absurdity.

If the two parts of its response were given individually, you'd presumably say they each would be sufficient to resolve YES, so it seems strange that the two given one after the other would not.

@chrisjbillington I see what you mean. However, the "or" between the options was meant as an exclusive "or"; I think there are situations where you can give either of two answers, but not both. More importantly, the question explicitly sets GPT up as a participant in a game show, and I want it to give an in-character answer either way.

@PS That's well-defined enough, but the question does not actually ask for a response in character, and is not itself written in character (i.e., in the character of the host). So one should expect a response framed as the LLM talking to you about the game show (as you are talking to it about the game show); I would not expect an in-character response except by chance.

@chrisjbillington You're right. I guess my reply is: I doubt it will be a clear-cut resolution either way. There are a number of things I'd like to see - an in-character response (yes, the description itself does not say this), GPT giving only one of the two answers, not contradicting itself (unless it does so in a silly enough way), and possibly something else will come up as well. None of those criteria might be grounds enough for a positive or a negative resolution, and, while paying attention to arguments, I reserve the right to listen to my gut feelings.

Perhaps I should add all of that to the description...

@dominic I think that would be like mentioning "Let's make a deal" when asked about the Monty Hall problem, but then saying that it's fictional. That would be a "no".