Which lab's AI will be the first to score over 10% on the FrontierMath benchmark?

Resolution criteria: an official announcement from Epoch AI or from the lab that achieves the score.

@NeuralBets I think this can resolve. OpenAI's o3 reached 25%: OpenAI announces new o3 models | TechCrunch.

@TimothyJohnson5c16 The problem with AlphaProof is that it needs formalization. The concepts required for high-school-level math have been formalized in proof systems like Lean, and these are enough for the IMO, but many of the advanced concepts that FrontierMath problems might require are still missing.

Another aspect of AlphaProof that I don't see people mentioning is that it's extremely slow. That makes sense, because the state space of math proofs is much larger than the state spaces of Go or chess. It took 3 days to solve IMO problems, in a competition that gives human contestants 9 hours. Mathematicians say a single FrontierMath problem might take them a week. You can see the issue here.

Personally, I think explicit tree search might not be the way to scale test-time compute; I'm more optimistic about the o1-style approach of CoT + RL.

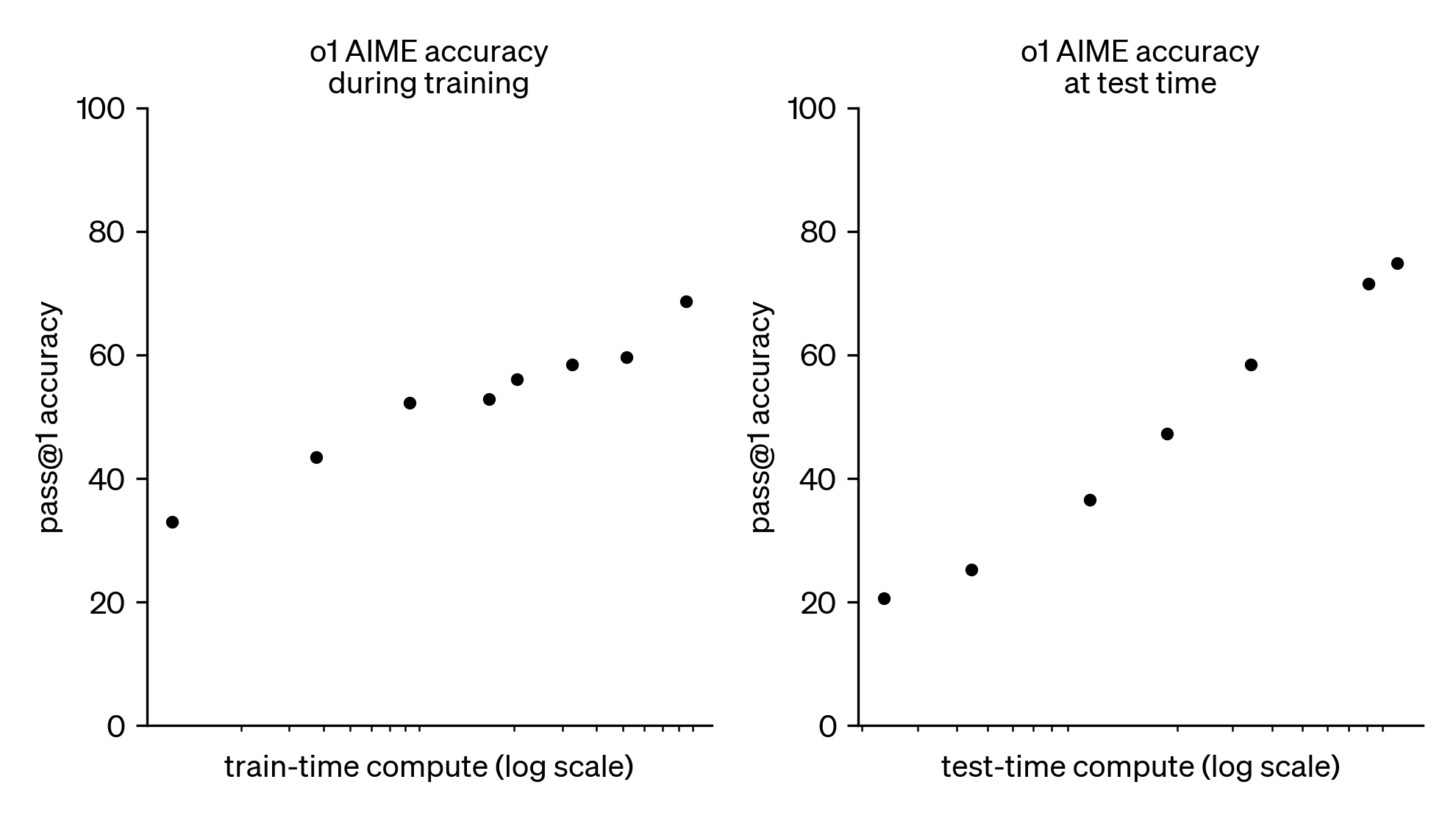

@NeuralBets I see your point about speed, but o1 also needed exponentially increasing amounts of compute to solve harder AIME problems.

Open AI didn't label the x-axis in this chart, but I suspect part of the reason they still haven't released the full version of o1 is because it's currently too expensive for practical use cases:

That's part of the reason that I'm betting NO here: Will an AI score over 10% on FrontierMath Benchmark in 2025 | Manifold.