Super Mario Bros. for the NES is one of the best-known games of all time, and accordingly, it is a common example used to demonstrate basic game bot development techniques. Completing the early levels is a relatively simple task for Lua scripts and for reinforcement learning.

Given that, it's rather surprising that, as far as I know, there has not yet been a bot capable of completing the game in its entirety. The closest I've been able to find is a project called LuigI/O, which used the NEAT algorithm for evolutionary generation of neural networks to solve each level individually. But as far as I can tell, LuigI/O produced a unique network for each level, and didn't attempt to have the final network play through the entire game in a single session.

It's entirely possible this has already happened and my Google searches just didn't turn it up. If so, I'll happily resolve the market to YES immediately. But assuming it hasn't happened yet, will it happen by the end of 2024? Resolves YES if someone links to a credible demo of a bot completing the whole game (as further explained below), and NO otherwise.

Considerations:

The bot must play the whole game in a single session, starting from boot and ending with Mario (or Luigi) touching the axe at the end of 8-4, including the title screen and the noninteractive cutscenes between levels. The use of save states to start at the beginning of particular levels (as LuigI/O and most ML demos do) is not allowed.

The bot must play on an unmodified cartridge or ROM image.

There must be no human interaction with the bot throughout the game. It's fine if the bot self-modifies during the run (e.g. by adjusting weights in a neural net), but it can't have human assistance in loading the updates.

The interface between the bot and the game must introduce some amount of imprecision into the input, such as by implementing "sticky actions" as seen in the Arcade Learning Environment. This ensures that the bot is reacting to potentially unfamiliar situations as opposed to just memorizing a precise sequence of inputs.

It's fine if the bot has access to "hidden" information about the game state, e.g. by reading RAM values.

It's fine if the bot skips levels with warp zones (although a maximally impressive demo would complete the game warpless).

It's fine if the bot makes use of glitches (wall jumps, wall clips, etc.). Bonus points if it discovers glitches currently unknown to speedrunners.

The bot does not need to play in real-time; any amount of processing time between frames is okay.
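For anyone unfamiliar with the sticky-actions requirement: in the Arcade Learning Environment's version, on each frame the emulator executes the agent's newly chosen action with probability 1 - p and otherwise repeats the previously executed action, with p = 0.25 being the commonly used value. The sketch below is a minimal, library-free illustration of that filter, assuming integer action IDs and a "no-op" action held before the first frame; the class and parameter names are my own, not any particular framework's API.

```python
import random


class StickyActions:
    """ALE-style sticky actions: with probability `repeat_prob`, ignore the
    agent's requested action and repeat the previously executed one."""

    def __init__(self, repeat_prob=0.25, noop=0, seed=None):
        if not 0.0 <= repeat_prob <= 1.0:
            raise ValueError("repeat_prob must be in [0, 1]")
        self.repeat_prob = repeat_prob
        self.noop = noop  # action assumed to be "held" before the first frame
        self.prev = noop
        self.rng = random.Random(seed)

    def reset(self):
        """Forget the held action, e.g. when the console is power-cycled."""
        self.prev = self.noop

    def filter(self, requested):
        """Return the action that actually reaches the emulator this frame."""
        # random() is in [0, 1), so the request goes through with
        # probability 1 - repeat_prob; otherwise the old action sticks.
        if self.rng.random() >= self.repeat_prob:
            self.prev = requested
        return self.prev
```

Usage would look like `sticky = StickyActions(seed=0)` followed by `executed = sticky.filter(agent_action)` once per emulated frame, with `executed` (not `agent_action`) sent to the game.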

I will not be betting in this market.


As far as I can tell, there doesn't seem to be any real progress on this task since market creation. I see a couple of new videos of people solving a level or two with ML as a learning exercise (e.g. here and here), but nothing that attempts to play the entire game in one pass, let alone do it with "sticky actions." So this looks like a NO to me, but I'll wait a couple of days before resolving just in case any stakeholder knows of a successful attempt.

Someone apparently did manage to generate vaguely accurate Mario physics recently, since that's evidently an easier task than just playing the game: https://virtual-protocol.github.io/mario-videogamegen/

I did spend a little bit of time working on this myself, including trying to reproduce LuigI/O's results in Python. I do think that "sticky actions" really complicate the task. LuigI/O's successful networks basically seem to work by tuning oscillator nodes to trigger in a precise rhythm that aligns with the natural flow of the level, and that approach falls apart as soon as input errors start to accumulate. I suspect that deep neural nets would have a comparably hard time generalizing to slight variations in game state, but I didn't spend enough time messing with deep learning approaches to know for sure.
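To make the point about input errors a bit more concrete, here's a minimal sketch that replays a fixed, memorized input plan through an ALE-style sticky filter and counts the frames where the game receives something other than what was planned. It doesn't model the game at all, so it can't show how those mismatches compound into position drift; it only shows that a plan built from many precisely timed action changes picks up errors roughly in proportion to how many changes it contains (each change is late by about `repeat_prob / (1 - repeat_prob)` frames on average, so about a third of a frame at 0.25). The plan itself, with 1 as "right" and 2 as "right+jump", is purely hypothetical.

```python
import random


def replay_open_loop(intended, repeat_prob, seed=None, noop=0):
    """Replay a fixed input sequence through an ALE-style sticky-action
    filter and return the inputs the game would actually receive."""
    rng = random.Random(seed)
    prev = noop
    executed = []
    for action in intended:
        if rng.random() >= repeat_prob:  # request accepted this frame
            prev = action
        executed.append(prev)            # otherwise the old input sticks
    return executed


def mismatched_frames(intended, executed):
    """Count frames where the game saw a different input than was planned."""
    return sum(1 for a, b in zip(intended, executed) if a != b)


if __name__ == "__main__":
    # Hypothetical "rhythmic" plan: hold right (1) for 7 frames, then
    # right+jump (2) for 3 frames, repeated. Numbers are illustrative only.
    plan = ([1] * 7 + [2] * 3) * 60  # 600 frames, 120 action changes
    for p in (0.0, 0.1, 0.25):
        executed = replay_open_loop(plan, p, seed=0)
        print(f"repeat_prob={p}: {mismatched_frames(plan, executed)} mismatched frames")
```

A closed-loop agent that reads the game state each frame can notice a late jump and compensate; an open-loop rhythm has no way to, which is the failure mode described above.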

This market isn't hard because of the NN challenge; it's hard because there aren't any plug-and-play environments for accomplishing this. The SMB gym environment doesn't include a start button as an action (for instance), so there's no way to advance past the start screen. [1]

While it would be fairly straightforward to modify it to not skip the various cutscenes or auto-press start, why bother? There's no interesting ML challenge there.

I'd guess you also haven't seen anybody just demo using that library to beat SMB (i.e. all levels from 1-1 to 8-4, with skipped cutscenes allowed), but unless the market allows that library in a relatively unmodified state, I'd be fairly surprised if someone decided to do this.

[1] https://github.com/Kautenja/gym-super-mario-bros/tree/master

Couple of factual points to address regarding that specific library.

One, you wouldn't actually need to modify anything to include the start button in the action space. Per the documentation, the base environment contains all 256 possible button combinations as actions. Normally, you'd want to constrain that with a JoypadSpace wrapper environment that takes a list of valid actions. The package includes a few predefined constants for common lists, but you can pass any list you want to that wrapper during setup. So including the start input in the action space is trivial. (Training your agent to press it exactly once and never again is less trivial, of course.)
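To illustrate why the 256 base actions already cover Start: the NES controller has eight buttons, so each action is one byte with a bit per button, and a wrapper action list is just a list of button-name lists that get OR'd into such a byte. The sketch below is plain Python so it runs without the emulator; the bit layout follows the controller's read order (A, B, Select, Start, Up, Down, Left, Right), which I believe matches nes_py's JoypadSpace, but treat that as an assumption and check the wrapper source. The first seven entries of `MOVEMENT_WITH_START` are meant to mirror the package's `SIMPLE_MOVEMENT` constant as I remember it; the list name itself is hypothetical.

```python
# Bit assigned to each NES button in the one-byte joypad value (assumed
# layout: controller read order A, B, Select, Start, Up, Down, Left, Right).
BUTTON_BITS = {
    "NOOP": 0,
    "A": 1 << 0,
    "B": 1 << 1,
    "select": 1 << 2,
    "start": 1 << 3,
    "up": 1 << 4,
    "down": 1 << 5,
    "left": 1 << 6,
    "right": 1 << 7,
}

# Hypothetical custom action list: a SIMPLE_MOVEMENT-style list plus Start.
MOVEMENT_WITH_START = [
    ["NOOP"],
    ["right"],
    ["right", "A"],
    ["right", "B"],
    ["right", "A", "B"],
    ["A"],
    ["left"],
    ["start"],
]


def to_byte(buttons):
    """OR together the bits of each pressed button, giving a value in 0..255."""
    value = 0
    for name in buttons:
        value |= BUTTON_BITS[name]
    return value


def action_table(actions):
    """Map each discrete action index to the joypad byte it would send."""
    return [to_byte(buttons) for buttons in actions]
```

With the real package installed, the list would be passed straight to the wrapper, e.g. `JoypadSpace(gym_super_mario_bros.make('SuperMarioBros-v0'), MOVEMENT_WITH_START)` with `JoypadSpace` imported from `nes_py.wrappers`.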

Two, if you look at the implementation of this package, it appears that it already handles the start screen; it's not loading a save state at boot or anything. See _skip_start_screen() in smb_env.py. It doesn't render the display or process input from the neural network while it waits for the start screen to go away, but neither of those is a requirement as far as this market is concerned. (Remember, nothing in the terms of this market requires that machine learning be involved!) The only thing the terms of this market would require is that the scripted inputs in that routine go through the same "sticky actions" filter that all normal gameplay actions go through.

As far as I can see, the only obstacle to someone using that library as written is that it doesn't incorporate "sticky actions," and it might be a little tricky to insert the "sticky action" filter somewhere where it would also affect the scripted input on the start screen. (And I'd honestly be tempted to waive that requirement anyway if someone came up with an otherwise successful agent; it's not like "sticky actions" would really interfere that much with the simple button-mashing script they have for the start screen.)
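One way to sidestep the "where do I put the filter" question is to make a single function the only path to the emulator and apply the sticky filter there, so scripted and agent inputs are treated identically. The sketch below shows that idea with a hypothetical `RecordingEmulator` stand-in and a hypothetical button-mashing start script; none of these names come from the package, and the Start bit value is an assumption about the joypad byte layout.

```python
import random


class RecordingEmulator:
    """Hypothetical stand-in for the emulator: records each frame's input."""

    def __init__(self):
        self.frames = []

    def step(self, joypad_byte):
        self.frames.append(joypad_byte)


class StickyJoypad:
    """Single choke point between all input sources and the emulator.

    Both the scripted start-screen routine and the agent call `press`,
    so the sticky-action filter applies to both."""

    def __init__(self, emulator, repeat_prob=0.25, seed=None):
        self.emulator = emulator
        self.repeat_prob = repeat_prob
        self.rng = random.Random(seed)
        self.prev = 0  # nothing held before the first frame

    def press(self, joypad_byte, frames=1):
        """Hold `joypad_byte` for `frames` frames, subject to stickiness."""
        for _ in range(frames):
            if self.rng.random() >= self.repeat_prob:
                self.prev = joypad_byte
            self.emulator.step(self.prev)


START = 0b00001000  # assumed Start bit in the one-byte joypad value


def skip_start_screen(joypad):
    """Hypothetical button-mashing script: alternate Start and release.

    It never bypasses the filter, so a dropped or held press just costs
    a few extra frames."""
    for _ in range(10):
        joypad.press(START, frames=2)
        joypad.press(0, frames=2)
```

Because a mashing script like this tolerates late or repeated presses, the filter mostly just adds a few frames before gameplay starts, which is consistent with the point above that it wouldn't interfere much.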

(It does also do some RAM modification to skip over Mario's death animation, in _kill_mario(). I guess I didn't directly say that the script can't modify RAM while the game runs, but I'd still consider it against the spirit of the market to do so. That said, if someone really finds it too hard to comment out that one line in the script but still manages to train an otherwise successful agent, I might not argue the point too much.)

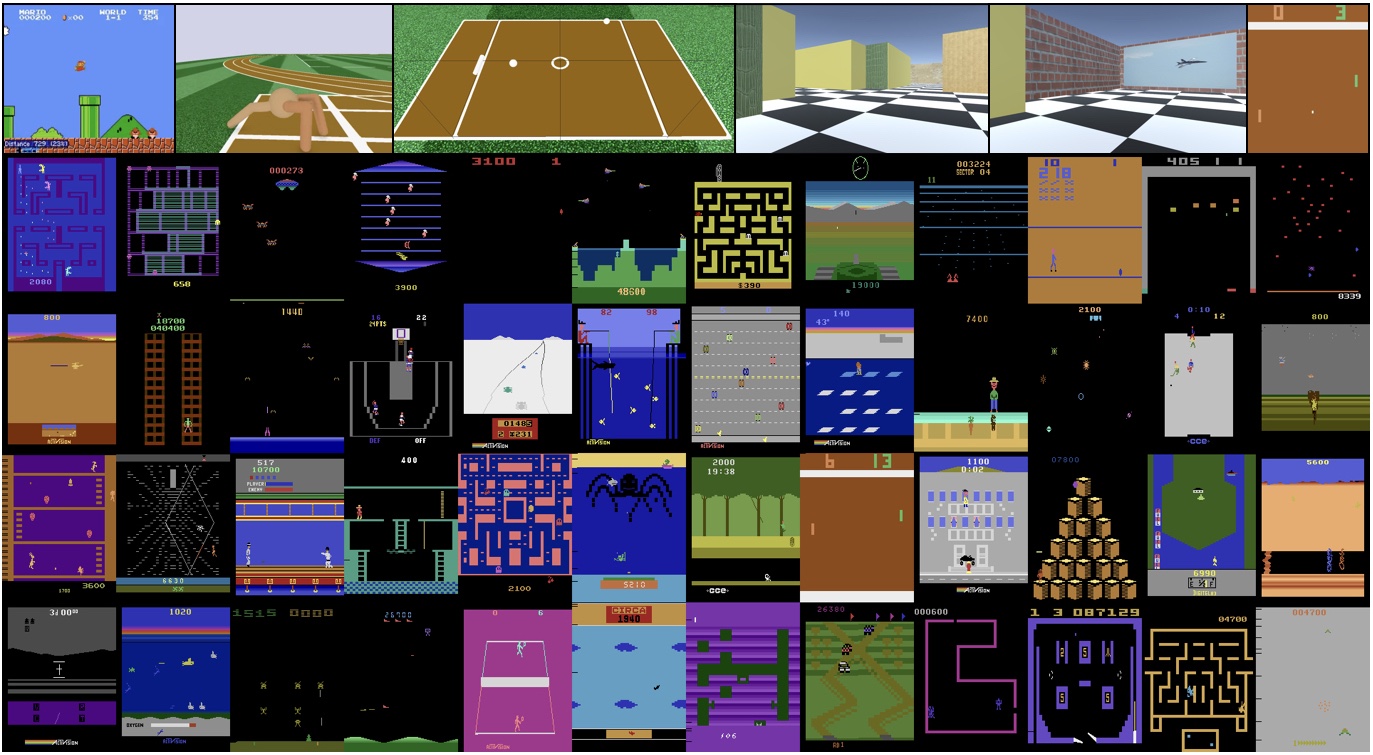

Saw this paper linked from another market today: https://pathak22.github.io/large-scale-curiosity/

It includes probably the best attempt at SMB1 I've seen yet; their video starts at 1-1, takes a warp pipe to world 3, and reaches the Bowser fight at the end of 3-4.

@vluzko I don't think real-time play is too critical, so I'm fine with however long it takes to evaluate each frame. I'll update the description accordingly.