Roman Yampolskiy published on his Facebook profile images generated by DALL-E 3 based on the same prompt he gave to the artist of the cover of his last book. The prompt was:

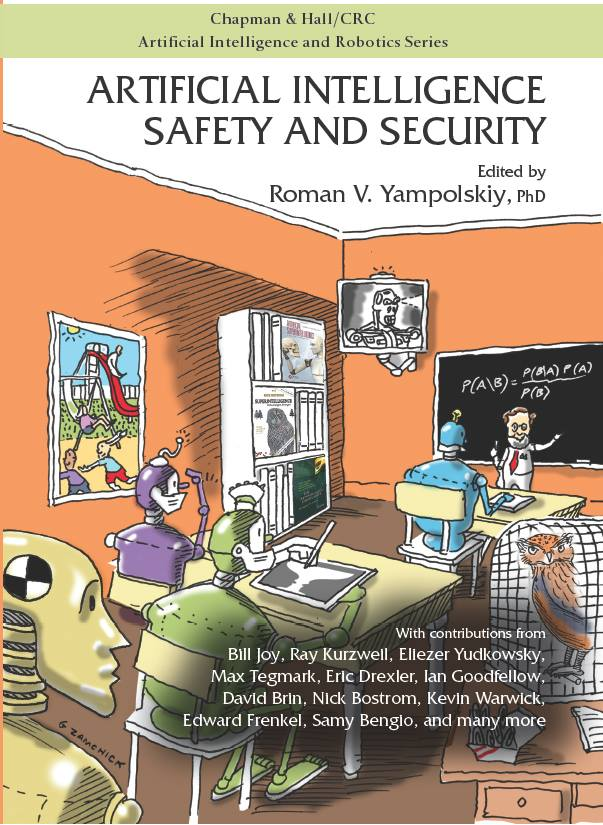

Classroom full of desks with different robots behind them. Human teacher is up front showing Bayes equation on the board. Bookshelf in the classroom has books including some with visible covers (ASFA, SH, Superintelligence). Classroom also has a cage with an owl. A large box of paperclips is seen on teacher's desk. TV in the room is showing a picture of a Terminator. Some robots have iPads on which you can see adversarial examples and illusions. Outside the window, you can see children playing. Most robots are looking at the teacher but some are looking at other items in the room.

In the comments, Yampolskiy wrote that it took the artist 2-3 tries to get it right.

Will some text-to-image model (can operate in more modalities) be capable of generating an image equivalent (image containing required elements) to the book cover of "Artificial Intelligence: Safety and Security" by Roman V. Yampolskiy, before 2025?

Resolves as YES, if for 12 generated images at least 4 contain all elements from the original prompt, minus book covers of ASFA, SH, Superintelligence since these were copy-pasted by a human artist (therefore, this part of the prompt will be swapped for "There is a bookshelf in the classroom."). I will judge if the images contain the required elements. I won't participate in this market.

Original book cover:

[Edit: changed "if for 12 generated images 4 contain all elements" to "if for 12 generated images at least 4 contain all elements" in the last paragraph]

🏅 Top traders

| # | Trader | Total profit |

|---|---|---|

| 1 | Ṁ24 | |

| 2 | Ṁ18 | |

| 3 | Ṁ15 | |

| 4 | Ṁ15 | |

| 5 | Ṁ11 |