Resolution Criteria:

If all of the following criteria are met, this question resolves to YES. Otherwise it resolves to NO.

1. Showcase Event: OpenAI must publicly showcase an AI assistant between January 1st, 2024, and January 1st, 2026. The AI assistant must demonstrate the ability to control a virtual desktop or browser environment.

2. Task Performance: The AI assistant must perform a series of routine white-collar job tasks which are specified in advance and observable by the public during the showcase. The tasks must be completed with minimal human correction, defined as less than 15% of tasks requiring human intervention in the task completion process, across tasks shown in the demo.


Alright, this really deserves mod attention after the chaos. I get why folks are trading on the uncertainty but I've read the arguments and I think a decision just needs to be made about the Atlas demo.

Was it a perfect demo by the arcane expectations of the market? No

Did it hit the criteria? More or less

Was it in line with the spirit of the market? Yes

I've read the debate below and I think solid cases were made, but we have enough to make a decision without wallowing in an eternal back-and-forth.

It seemed to be the intent of OpenAI to present something that would qualify as "a browser assistant for white-collar tasks." I certainly was convinced that this would be the kind of tool I would use in an office setting if I were to use AI at a day job.

I'm resolving YES.

I'm sure some folks will be disappointed by this call but remember trading on post-hoc resolution speculation is always a high risk gamble. Nitpicking literalist interpretations of market criteria can sometimes bite!

(Disclosure: I had a position here which I exited at a loss yesterday to restore and maintain neutrality. I have no stake in this, or another, resolution.)


@Stralor Too bad, I was hoping I could respond to the guy (Franz) who doesn't think, before it got resolved. 🤣

@mods Creator last active months ago. This should resolve to Yes according to https://www.youtube.com/watch?v=8UWKxJbjriY.

@CharlesFoster

As with Operator, the ChatGPT Atlas demo focused on personal stuff:

Web search / web history search

Looking up stuff about movies

Planning a haunted house

Help with recipes and grocery shopping

@CharlesFoster The only stuff I would consider maybe "routine white-collar job tasks" were reviewing a GitHub pull request and reviewing an email draft.

@CharlesFoster The "planning a haunted house" was to showcase task distribution through a google doc. That's pretty much "routine white-collar job" for me.

Few would deny that "web search / web history search" also happens in office.

@Lennyhnyq0 I think this market should be resolved based on whether the resolution criteria are fulfilled, not whether he thinks they are fulfilled. The question creator did not mention that his opinions would be consulted.

"I think this market should be resolved based on whether the resolution criteria are fulfilled, not whether he thinks they are fulfilled."

I agree with this

@CharlesFoster @vdb fair enough, but I think Charles gave good reasons for why he didn’t think it fit. I question whether what was showcased constitutes a “series” of tasks, given that it was only 2 agentic tasks (the haunted house and the recipe). I also question whether they were specified in advance (the details of what was to be done were fairly vague) or observable by the public (they kept tabbing away, so it’s far from clear that either task completed or how much intervention would be needed).

@vdb I will say that if Charles or the OP feel that the released product lives up to the spirit of the agentic task requirement, I’m willing to concede that point. If this demo was all we had, I would not be satisfied that the browser assistant was actually as good as predicted. But since the product is released, people can test it. I will likely try it out if it becomes available on a system I own, but for now I can’t judge it directly.

@Lennyhnyq0 Let me respond to your previous questions directly.

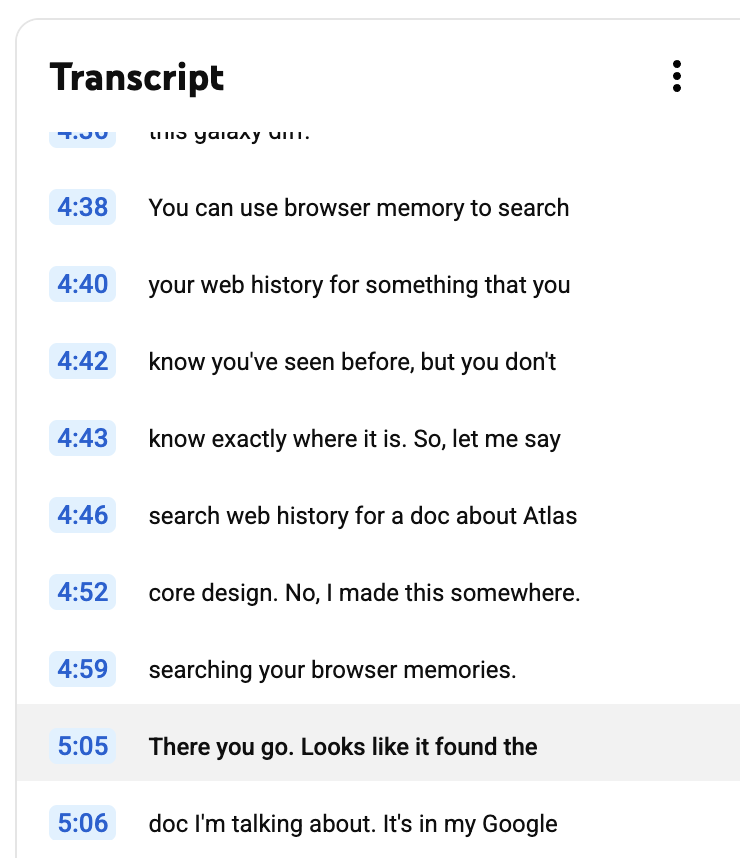

Do you count web history search as a white-collar task? The presenter demonstrated that case starting at around 4:38 in the video.

Is the task specified in advance? Yes to me, as he typed the prompt "search web history for a doc about Atlas core design," which sets a quite specific goal.

Is the task performance observable by the public? Yes to me, as in this case he did not tab away at all. This task was simple enough that no human intervention is needed.

Is the task completed? Yes to me, as the presenter clicked into the doc to show that it's the one he was looking for.

For all I can see, this counts as one task that fits the resolution criteria. You got any objection to that?

@vdb this seems like a stretch as a “white collar job task” but maybe you can describe why you think it’s something more than the search button on chrome history? I do agree that this particular thing was demonstrated and specified in enough detail. It just seemed so minor that I didn’t even count it as an agentic task.

@Lennyhnyq0 It's a difference between 'exact' and 'fuzzy' search.

For an ordinary text search to succeed, you have to use the exact text that showed up. With an LLM, synonyms or descriptions work too, since an LLM can make associations.

@vdb I get that, but that seems pretty useless for specifically searching my history or docs. I spent some time trying to come up with searches I might do where I couldn’t just give them to the existing ChatGPT / Google AI mode and get the answer, and I think the main use case is for a company’s SharePoint or equivalent? But I don’t think this will search SharePoint for stuff you haven’t seen, and I don’t know that many companies are ready to open their docs to it. If the stuff is in my browser history then whatever words I’m typing to describe it probably occur in the doc, because I am familiar with the thing I’m looking for. The reason it’s helpful for web search is that I may not know the terminology for things I want to search. Even then, I suspect the search hits aren’t that much better, since they already perform partial matching at the sentence level and I probably know some meaningful words that are used in connection with the terms I need. The main value in Google AI mode is actually the summaries. I’m sure you can come up with examples where I would word the question one way but only the trivial words would overlap with the text of the hit I want; I just think it’s rare even in web search and extremely rare in history search.

@Lennyhnyq0 You may find this useless; someone else may find this useful. We could have a separate discussion on where this functionality is more useful or less useful.

As far as the market resolution goes, what needs to be discussed is whether this task demonstration fits the resolution criteria. Do you think it does not?

@vdb it seems like a stretch to call it a “routine white collar job task” that was meaningfully demonstrated (beyond the existing search capabilities).

Edit to clarify: If others agree that it rises to the level of task they interpreted from the question, then I guess I just misinterpreted the intent. But this would not have been on a list of routine white collar job tasks had I made one in advance, which is what I think the standard should be (vs., “can I imagine a situation in which a white collar worker might appreciate this automation on the job”)

@Lennyhnyq0 What exactly are you objecting to? Is it not 'routine'? Not 'white collar'? Not 'job task'?

@vdb right - it’s not a routine thing that I expect is performed as part of white collar jobs. I think the crux is whether calling it a job task means something at the level of, say, an ONET task or simply, “anything of value that might be done on a job”

@Lennyhnyq0 I'm not sure I understand. Could you answer three questions for me? Do you think that web history search case is a 'routine' thing? Do you think it is a 'white collar' thing? Do you think it is a 'job task'?

3 independent questions, 3 answers needed. It's hard for me to read from your previous comment what your answers to the 3 questions are.

@vdb simple answers are no, yes, no. Routine, to me, means both simple and frequent and I don’t think it is frequently needed. Job tasks to me means something far more complete than just getting back to a site I already visited. Searching through my history to give me a summary of what I worked on between two dates that might be relevant to a project so I can put it in a status report or refresh my memory might be a reasonable “job task” version of this.

@Lennyhnyq0 You don't think it is frequently needed for Lenny, or you don't think it is frequently needed for all white collar workers?

@vdb I don’t think it’s frequently needed for the average white collar worker, but of course my perception of that is based on my own experiences and my perceptions of those I work with. To be clear, if I thought this was a substantial task like the one I described, I probably wouldn’t exclude it based on frequency alone since that seems nitpicky. Also, if I thought this was a small piece of a task but one that people have to do so often that saving even a little time on it would be a big value, I might concede that would be enough to meet the criteria. But I think this one fails on both measures so it seems like a very low bar if we are expanding the criteria enough to include it.

How would you describe the line of what you consider a routine white collar job task? Literally anything that might ever happen in an office that could be done 1 second faster by describing it to the browser instead of doing it yourself? 10 seconds? 10 minutes? Are there aspects of office life that wouldn’t count as job tasks, like checking the specials at the nearby grocery store or the bus schedule for the ride home? Would super-niche tasks count, like if it only worked if you were the office manager of an AI lab in Chicago but not any other role, company, or location?

@Lennyhnyq0 I wouldn't even ask that the task be done faster. Nowhere do the resolution criteria require that the task be done faster.

"2. Task Performance: The AI assistant must perform a series of routine white-collar job tasks which are specified in advance and observable by the public during the showcase. The tasks must be completed with minimal human correction, defined as less than 15% of tasks requiring human intervention in the task completion process, across tasks shown in the demo."

@vdb it has access to ChatGPT, which can search the web for stuff, summarize stuff, and help me edit emails. So chromium, with a ChatGPT sidebar would fit the second criterion by having an AI assistant and doing a series of tasks, even if all of the tasks were ones that ChatGPT alone can do? If not, then how far beyond base ChatGPT value does it have to be to meet the criterion?

@vdb you type in your query and it returns a bunch of pages (and an AI summary most of the time). But my point is that your claim was that it is enough for it to simply do a thing, regardless of whether that thing adds value compared to the status quo. That seems like the wrong bar for what the criterion was meant to assess.

My understanding from the demo (not having access to the thing itself) is that ATLAS controls the browser to automate some personal tasks the company thought people might use enough to help them generate ad revenue. So mostly it helps you shop, which is not a white collar job task. The most interesting feature they demoed was the one where it could edit a doc and use it to create tasks in a task tracker. But the demo of that was so abbreviated that there’s no way to judge how brittle or annoying it is or whether it even worked on the precanned doc they used.

If it turns out that people using the system put out reviews that include clear job task use cases, then I think that will satisfy the criterion. Zvi’s review didn’t seem promising on that front. The thorniest problem is security. How can I use it to do job tasks if I can’t trust it to be secure with my company’s data and tools?

@Lennyhnyq0 I'm claiming that if the resolution criteria stated in the question description are met, then the resolution criteria for this question are met.

Because the stated criteria did NOT require the AI assistant to perform tasks better than previous technology, whether the AI assistant performs better is irrelevant to whether the criteria are met.

@vdb and what I'm saying is that making that claim means that the second criterion is irrelevant to the resolution. Any AI assistant that can control the desktop or browser can necessarily do some things that might be considered relevant to a white collar job if doing them at sub-human levels of competence is sufficient. All it would have to do is randomly click on web pages and randomly copy/paste text around and it is doing a series of routine white collar job tasks by your reading of the criterion.

@Lennyhnyq0 I'm not sure I would count "randomly click on web pages and randomly copy/paste text around" as routine white collar job tasks. Which job does tasks like that?

I would count web search, polishing emails, distributing tasks, writing docs, comparing code etc. as routine white collar job tasks. These are done routinely by office workers who program, for instance.

Web search history is a border case that could go one way or another. Some white collar workers may perform it routinely, but not a great portion, I would guess.

@vdb if you can randomly click web pages and randomly copy/paste, then you can do web search, email polishing, distributing tasks, etc., all at subhuman levels. So my point is that "doing a task" naturally assumes some level of competence that makes it worthwhile.

@Lennyhnyq0 If an AI assistant can polish my email autonomously, then it can perform the task of email polishing, even if it does so more slowly than me. Doing a task is one thing. Doing better is another.

@vdb anything that can randomly copy/paste in text from the web can polish your email autonomously. It may just take a very long time to get it to the right state.

@Lennyhnyq0 If it takes 1 day for the AI assistant to polish my email, and OpenAI makes a video more than one day long to showcase it, that still fulfills the criteria.

"1. Showcase Event: OpenAI must publicly showcase an AI assistant between January 1st, 2024, and January 1st, 2026. The AI assistant must demonstrate the ability to control a virtual desktop or browser environment.

2. Task Performance: The AI assistant must perform a series of routine white-collar job tasks which are specified in advance and observable by the public during the showcase. The tasks must be completed with minimal human correction, defined as less than 15% of tasks requiring human intervention in the task completion process, across tasks shown in the demo."

@vdb but again, by that definition, ChatGPT itself already met the criterion back in 2023. Look, the point is that there has to be some threshold for complexity of task or else simply typing a character and clicking a mouse counts. There also has to be some threshold for generality among white collar jobs, or else a demo of something that works only for OpenAI employees is sufficient. And there has to be some threshold for value added or else randomly clicking / typing / editing is sufficient. Or typing out many characters then deleting them all.

My read of the criterion is that the AI assistant does things that apply generally to a large number of white collar jobs, that it is actually helpful for it to do those things, and that the things are demonstrated in a way that allows the public to judge their value and the level of intervention required, with less than 15% of the demonstrated tasks requiring human intervention (which, to me, also implies that more than 6 tasks should be demonstrated or else why set the threshold below 50%?).
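To make the arithmetic behind that parenthetical concrete, here is a rough sketch of the "less than 15%" rule. The function names are made up for illustration and are not part of the market's criteria. It assumes the rule is read strictly as interventions divided by demonstrated tasks, and it finds the smallest demo size at which a given number of interventions would still pass.

```python
from fractions import Fraction

# Assumption: "less than 15%" is a strict inequality on interventions / tasks.
THRESHOLD = Fraction(15, 100)


def meets_threshold(interventions: int, tasks: int) -> bool:
    """True if the share of tasks needing human intervention is below 15%."""
    if tasks <= 0:
        raise ValueError("a demo needs at least one task")
    return Fraction(interventions, tasks) < THRESHOLD


def min_tasks_tolerating(interventions: int) -> int:
    """Smallest number of demonstrated tasks that still passes
    with the given number of interventions."""
    tasks = max(interventions, 1)
    while not meets_threshold(interventions, tasks):
        tasks += 1
    return tasks


if __name__ == "__main__":
    for k in range(3):
        print(k, "intervention(s) ->", min_tasks_tolerating(k), "task(s) needed")
```

Under this reading, a single intervention only passes once at least 7 tasks are shown (1/6 is about 16.7%, 1/7 is about 14.3%), which matches the "more than 6 tasks" inference. A demo with zero interventions passes at any size, so the threshold by itself does not force a minimum number of tasks.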

@Lennyhnyq0 I disagree that ChatGPT already met the criterion back in 2023. ChatGPT does not control a browser.