This guy posted this website in the Manifest server and seems to think it's very important.

Resolves to a Manifold poll at the end of 2026 on whether he was generally right about... whatever all this is.

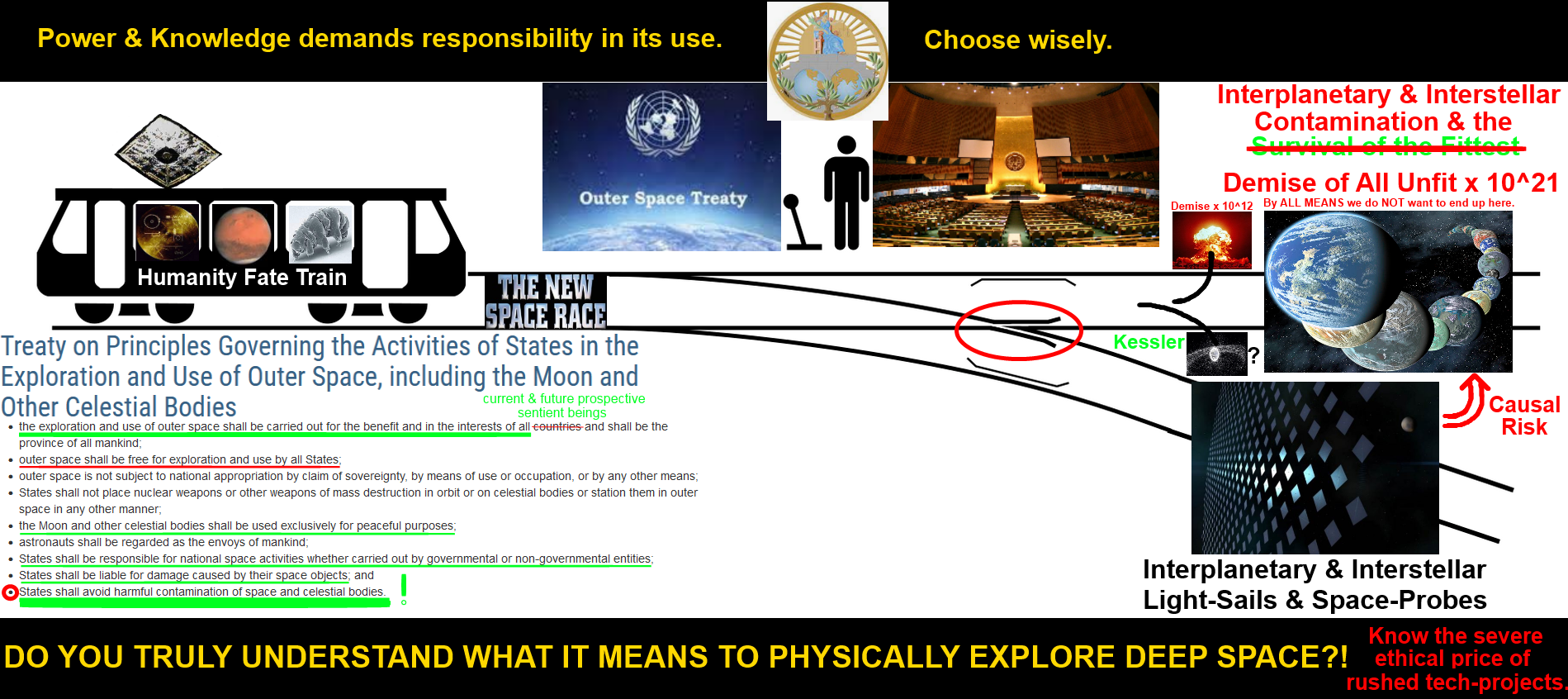

I think nearly all longtermist cause areas (including this one) have large uncertainties, things missing in their models, shaky assumptions about future technology, and feedback loops - which together mean that if you are even slightly wrong about certain parameters in your model, the size of the error is like whole Earth-years' worth of QALYs.

@NoyaV It feels rather uncharitable to me to put it that way. I do wonder whether supporting this project is in this person's best interest. Let's leave that to people with more information and contact surface, perhaps.

This is also one of those markets where I’d be happy to be proven wrong and know someone is already on it 😄.

A simple “no thank you, but good luck!”


@evergreenemily (I'm not kidding. I'd provide more proof, but doing so would probably doxx myself. What the hell.)

@Joshua That seems to be the starting point, yeah. "We shouldn't contaminate other habitable planets with our own biosphere if we can avoid it" is a reasonable stance, but claiming it would be 100,000x worse than a 1,000-year climate crisis...

@evergreenemily Why couldn't it be? If we seed life on many planets, the number of sentient living things involved could easily be many orders of magnitude larger than the number on Earth in a thousand-year period. Of course, they could be wrong that biospheres are on average bad for their inhabitants.

>Our approach differentiate from cosmism, transhumanism, and longtermism, as it is non partisan regarding the future path to be taken, and the values to be followed.

They don't understand the terms they're using.

>do our best to avoid any "-ism-"

lol ok

looks like your average academic grift, banking on the current anti-tech narratives