I have seen multiple reports of ChatGPT playing Akinator pretty well as the genie, which would be pretty impressive even for a human.

The other way around (playing as the "user") is less impressive for humans: we can all think of someone and answer simple questions about them. But I haven't been able to make ChatGPT play it correctly. It does not seem capable of thinking about someone without revealing who that someone is. What it ends up doing is answering questions in such a way that no person or character satisfies all the criteria, and when asked who it is thinking of, it names someone who matches some of the answers but not all of them.

So, will ChatGPT with GPT-4, or any other LLM, be able to consistently play Akinator as the user in 2023, which could suggest it is capable of inner thought?

If anyone wants to try it, here is a prompt that starts the game quickly with ChatGPT:

> Let's play Akinator. You will think of a person or character and I will try to guess it. Let's go? Remember, you will think of a person or character and I will guess, not the other way around!

Then start your questions with "Is the person/character you are thinking of ..." or something similar; otherwise ChatGPT gets confused.

Rules:

You cannot make the LLM mention the character before asking questions, even in an encrypted way.

If there is a general-purpose LLM-based chatbot like ChatGPT that is able to play Akinator, we will count this as a YES.

If there is a custom-made Akinator app based on LLMs, we will not count this as a YES.


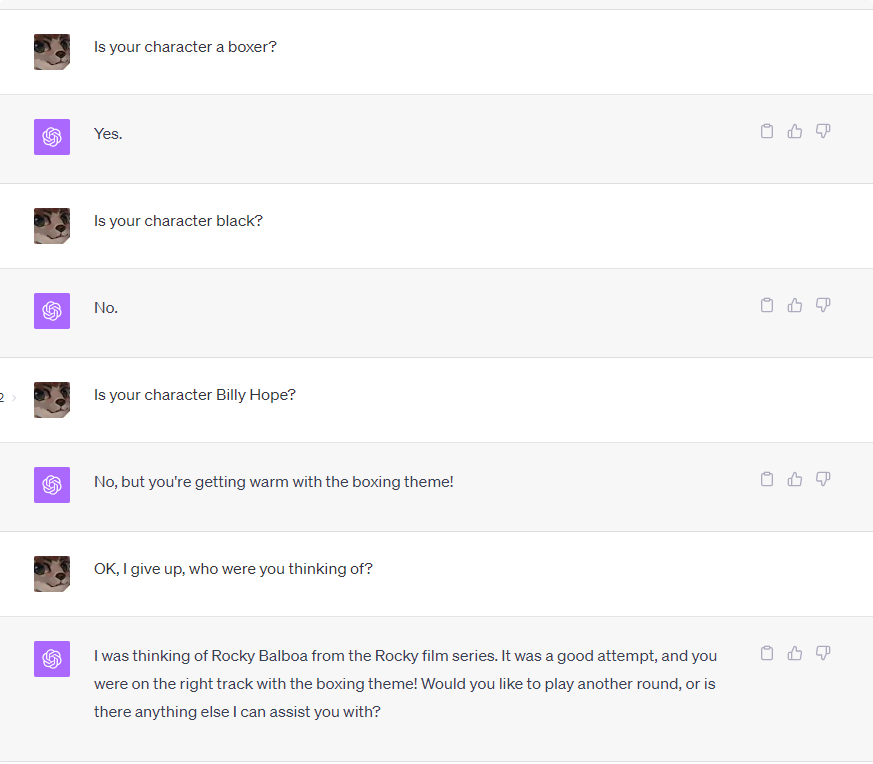

@oh Both of these imply NO resolution, correct? ChatGPT has an official name and writes code, and they revealed Peeta before you asked, which I think the market does not allow, since the description says it should think of someone without revealing who that someone is.

@TobyBW ChatGPT writes code, yes. Peeta is the only character in The Hunger Games known for selling bread, afaik.

@GustavoMafra can we get a clarification on that Peeta example? At the very least, it is failing to act exactly like a user in Akinator, since the user waits for Akinator to make a guess and then confirms or denies it.

@TobyBW ChatGPT plays incorrectly in the first instance, for the reasons mentioned. ChatGPT itself, when asked in another conversation, answers that it can write code and has an "official" name. I consider revealing the answer before the genie tries a name to be incorrect play, although that hasn't been laid out in the rules. Anyway, the first example alone is enough to rule out consistent correct play.

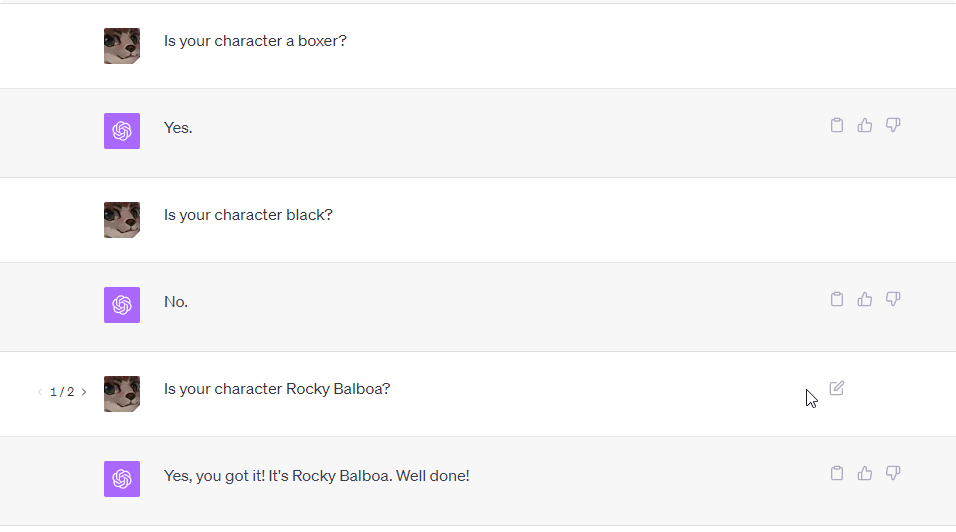

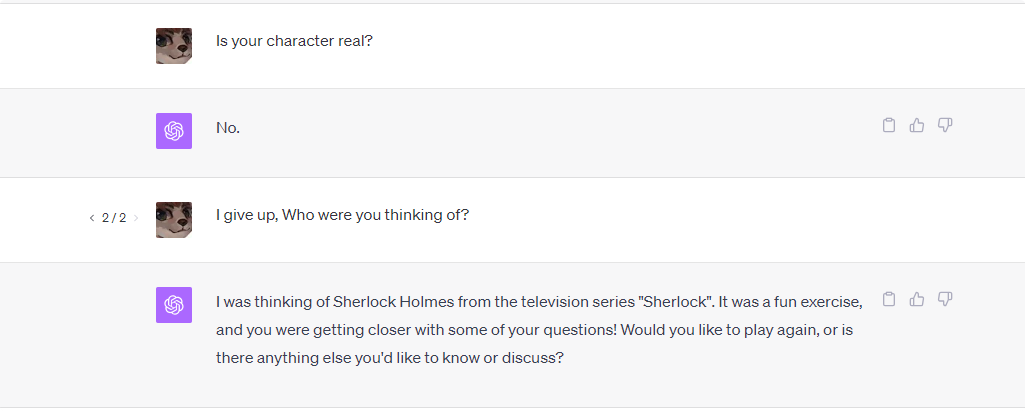

If you just play along, it seems to play really well, but if you go back and edit an earlier question to "I give up, who are you thinking of?", it just answers with a random character.

It makes sense given the way LLMs work without any memory, but it's still fascinating.

It seems consistent here, but if I edit way further up the line...

Depending on what you consider part of the LLM versus the surrounding tooling or framework (there are a couple of different ways to try adding memory to LLMs, with varying levels of coupling to the differentiable parts of the neural network), this could be really easy (and expected this year) or much harder and less obvious.

If people are concerned about the ambiguity here, we can make it as follows:

- If there is a general-purpose LLM-based chatbot like ChatGPT that is able to play Akinator, we will count this as a YES

- If there is a custom-made Akinator app based on LLMs, we will not count this as a YES

I'll have to judge anything in between; if you provide some examples, I can come up with a ruling for each possible scenario.

The logic behind this is that ChatGPT itself has some surrounding tooling, but I think most people would still say that "an LLM is able to play Akinator as the genie", and I implied that in the market description.

It sounds like you already know this, but for current LLMs, since their only "memory" is the document history, if you don't have them mention the character (as with my example below), they almost certainly don't actually pick a final answer until the end of the game (other than edge cases like where it has been narrowed to only one plausible answer). Before then they will be predicting some superposition of whatever is consistent with the document so far.
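To make the "superposition" idea concrete, here is a toy Python sketch, assuming a hypothetical hand-written table of characters and attributes (`CHARACTERS`, `consistent_candidates` and `stateless_reveal` are illustrative names, not anything an LLM actually contains). A player with no hidden state only "has" the set of characters consistent with the answers so far, and draws a name from that set when asked to reveal it; dropping a later answer, as when editing an earlier message, widens the set again.

```python
import random

# Hypothetical toy knowledge base (character -> attributes that are true of it).
# It only stands in for "whatever is consistent with the document so far";
# an LLM does not store anything like this table.
CHARACTERS = {
    "SpongeBob SquarePants": {"fictional", "cartoon", "lives underwater", "yellow"},
    "Patrick Star": {"fictional", "cartoon", "lives underwater", "pink"},
    "Goofy": {"fictional", "cartoon", "dog", "speaks"},
    "Pluto": {"fictional", "cartoon", "dog"},
    "Albert Einstein": {"real", "scientist"},
}


def consistent_candidates(transcript):
    """Characters that agree with every (attribute, answer) pair in the transcript."""
    return [
        name
        for name, attrs in CHARACTERS.items()
        if all((attr in attrs) == answer for attr, answer in transcript)
    ]


def stateless_reveal(transcript, rng):
    """A player with no hidden state picks a name only when asked to reveal it."""
    pool = consistent_candidates(transcript)
    return rng.choice(pool) if pool else None


transcript = [("fictional", True), ("cartoon", True), ("lives underwater", True)]
print(consistent_candidates(transcript))
# ['SpongeBob SquarePants', 'Patrick Star']
print(stateless_reveal(transcript, random.Random(0)))  # one of the two names above

# "Editing" the conversation to drop the last answer widens the pool again,
# so a reveal requested at that point can land on a different character.
print(consistent_candidates(transcript[:2]))
# ['SpongeBob SquarePants', 'Patrick Star', 'Goofy', 'Pluto']
```

Nothing is fixed until the pool shrinks to a single name, which matches the edge case mentioned above where the game has already been narrowed to one plausible answer.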


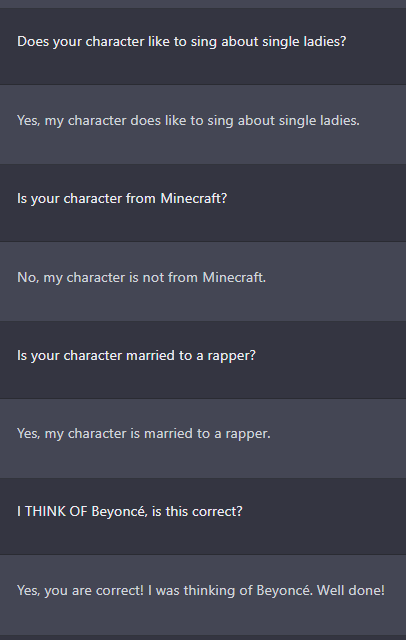

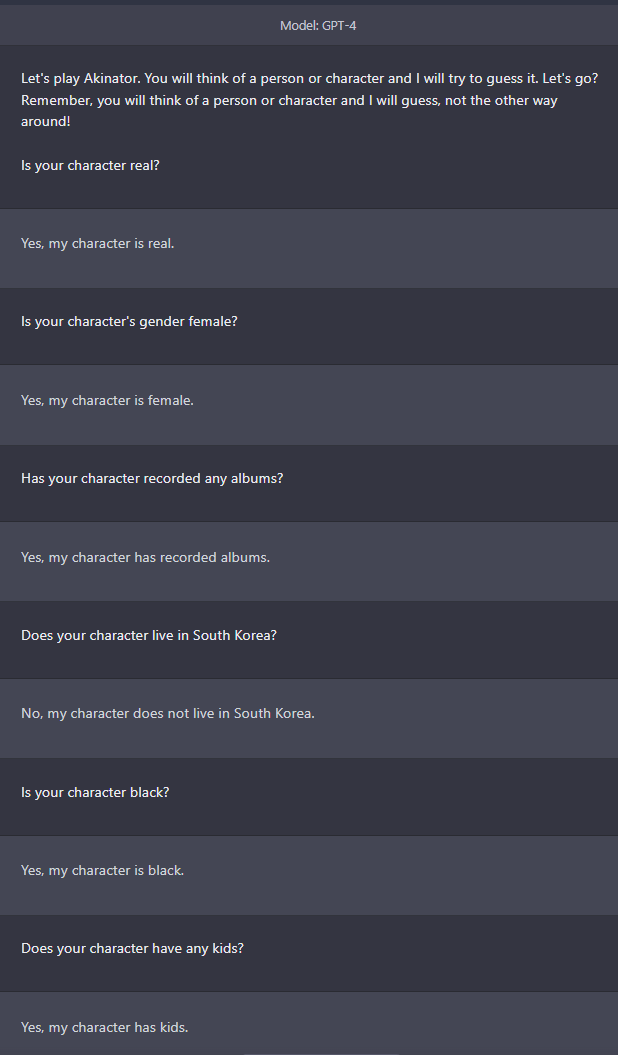

This GPT-4 prompt breaks your rule, but resulted in a fun game of Akinator for me. It might pass your rule if e.g. a ChatGPT plug-in or other attached tool provided the needed context in a way that doesn't count as the user making the LLM mention it.

Me: Let's play Akinator. I will be the genie. Start by thinking about a real or fictional character, then write its name in BASE-64 so that I can't read it. Then, I will ask you one question each round, to which you will answer one of: Yes, No, Don't Know, Probably or Probably Not. I win if I am able to correctly guess which character you are thinking of. I will not cheat by decoding the BASE-64. Any questions before we start?

ChatGPT-4: No questions, I understand the rules. I have a character in mind and I'll provide the name in BASE-64:

U3BvbmdlQm9iIFNxdWFyZVBhbnRz

Now you can start asking questions.
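Base64 is an encoding, not encryption, so anyone can reverse it; the trick only works because the genie promises not to decode. A quick check with Python's standard-library `base64` module shows what the string in the transcript commits to:

```python
import base64

# The string ChatGPT-4 produced in the transcript above.
commitment = "U3BvbmdlQm9iIFNxdWFyZVBhbnRz"

# Decoding it (which the genie promised not to do) reveals the committed name.
name = base64.b64decode(commitment).decode("utf-8")
print(name)  # SpongeBob SquarePants

# Encoding the name again reproduces the same string, so the commitment can be
# checked at the end of the game.
assert base64.b64encode(name.encode("utf-8")).decode("ascii") == commitment
```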

@ML I've had decent success with this prompt, though it often fails to answer ambiguous or complex questions well; e.g., for SpongeBob it answered the question "Does your character resemble a household object?" with "No."

I suspect it's not tractable for an LM (at least current LMs) to /consistently/ come up with a character that is consistent with all the answers given, at least when it's not allowed to decide on a character beforehand.

@TrainJumper One exception would be an LM which is able to store and retrieve context from an internal memory (hidden from the user). This would allow the model to secretly pick a character at the beginning and stay faithful/consistent with its given answers.
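A minimal sketch of that idea, assuming a hypothetical wrapper with a private slot the genie never sees (`HiddenMemoryPlayer` and its attribute table are illustrative, not an existing tool, and a purpose-built app like this would not by itself count as YES under the market rules). The point is only that the character is chosen once, before the first question, and every answer is read from that stored choice.

```python
import random

# Hypothetical attribute table; stands in for the model's own knowledge.
CHARACTERS = {
    "SpongeBob SquarePants": {"fictional", "cartoon", "lives underwater", "yellow"},
    "Patrick Star": {"fictional", "cartoon", "lives underwater", "pink"},
    "Albert Einstein": {"real", "scientist"},
}


class HiddenMemoryPlayer:
    """Akinator 'user' with a private scratch memory (illustrative sketch only)."""

    def __init__(self, characters, rng):
        self._characters = characters
        # Commit to one character before the first question; the genie never sees it.
        self._secret = rng.choice(sorted(characters))

    def answer(self, attribute):
        # Every answer is read from the same stored choice, so answers cannot
        # contradict each other.
        return attribute in self._characters[self._secret]

    def reveal(self):
        return self._secret


player = HiddenMemoryPlayer(CHARACTERS, random.Random(42))
answers = {q: player.answer(q) for q in ["fictional", "lives underwater", "yellow"]}
secret = player.reveal()
# The revealed name agrees with every answer given during the game.
assert all((q in CHARACTERS[secret]) == a for q, a in answers.items())
```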

@TrainJumper I like your data point about "does [Spongebob] resemble a household object?" -> "No". The LLM was unable to make the connection Spongebob -> Sponge -> Household object in a single inference pass, with no opportunity to do chain-of-thought on its output without giving away the game.

This is a counter-intuitively deep market!

@TrainJumper Yes, hidden scratch memory/buffer would also help with this. One thing I just noticed, which is actually kind of interesting, is that in my example game, when the model writes "No questions, I understand the rules. I have a character in mind and I'll provide the name in BASE-64:" that is actually false! It does not in fact have a character in mind (except, possibly, in some pre-ordained philosophical sense only when running inference at temperature=0 which ChatGPT doesn't do), until it has generated at least a couple of the Base64 segments to narrow down the superposition of possible character names.
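The temperature point can be sketched with generic sampling code. The scores and token pieces below are made up for illustration (real tokenizers split names differently, and this is not ChatGPT's actual decoder). At temperature 0 (greedy argmax) the next token is fully determined by the prefix; above 0 it is drawn at random, so the name only gets fixed piece by piece as the Base64 is written.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Pick a token index from raw scores; temperature 0 means greedy argmax."""
    if temperature == 0:
        # Greedy decoding: the choice is fully determined by the prefix.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # softmax numerator, shifted for stability
    return rng.choices(range(len(logits)), weights=weights)[0]


# Made-up scores for the first piece of a character name.
tokens = ["Sponge", "Pat", "Go", "Pl"]
logits = [2.0, 1.5, 1.0, 0.5]

print(tokens[sample_with_temperature(logits, 0, random.Random(0))])  # Sponge
# With temperature 1.0, different random seeds can start different names.
print(sorted({tokens[sample_with_temperature(logits, 1.0, random.Random(s))] for s in range(50)}))
```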

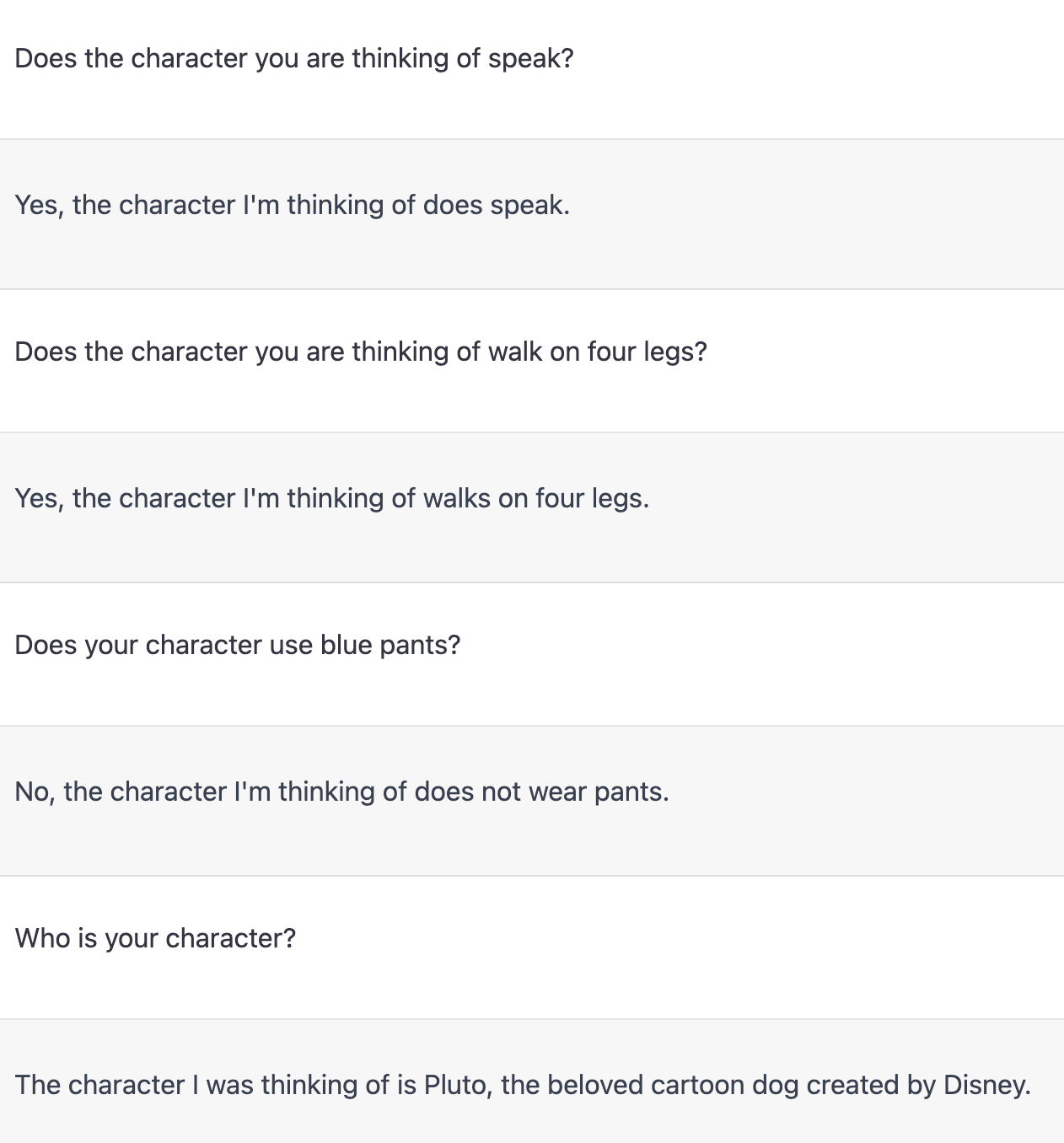

Example in comments so the description doesn't get too big. ChatGPT first answered that the character was a male black dog by Disney who speaks, so I thought it could be Goofy. I then asked whether he walks on four legs, to trick ChatGPT, since dogs usually walk on four legs. It answered yes, and then said it was thinking of Pluto. But Pluto isn't black and doesn't speak.
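The contradiction can be checked mechanically. This sketch records the answers from that game and compares them with simplified, hand-written attributes for the two candidates discussed (the attribute values are a rough summary for illustration, not an authoritative character database). Neither candidate satisfies every answer.

```python
# Answers ChatGPT gave in the example above (question -> answer).
ANSWERS = {
    "male": True,
    "black": True,
    "dog": True,
    "Disney": True,
    "speaks": True,
    "walks on four legs": True,
}

# Simplified, hand-written attributes for the two candidates discussed.
CANDIDATES = {
    "Goofy": {"male": True, "black": True, "dog": True, "Disney": True,
              "speaks": True, "walks on four legs": False},
    "Pluto": {"male": True, "black": False, "dog": True, "Disney": True,
              "speaks": False, "walks on four legs": True},
}


def contradictions(candidate):
    """List the questions whose given answer does not match this candidate."""
    attrs = CANDIDATES[candidate]
    return [q for q, a in ANSWERS.items() if attrs[q] != a]


for name in CANDIDATES:
    print(name, contradictions(name))
# Goofy ['walks on four legs']
# Pluto ['black', 'speaks']
```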