To qualify as a "prominent US politician" for the purposes of this market, a person must:

EITHER hold or have held one of the following offices:

- President
- Vice President
- US Senator
- US Representative
- US Governor

OR be nominated or have been nominated by the Democratic or Republican party as a candidate for one of the following offices:

- President
- Vice President

To qualify as "advocat[ing] for banning open-weight AI models" for the purposes of this market, there must be a statement that:

- Is directly attributable to the politician in question
- Is public, such as in a speech, interview, or social media post
- Explicitly calls out "open-weight models", "open-source AI", or some close equivalent
- Clearly indicates a desire to ban open-weight models, not just regulate them. Such an indication might involve phrases like:
  - "Ban"
  - "Prohibit"
  - "Shut down"
  - "Make illegal"
- Advocates for the ban as a policy position, not merely as a hypothetical
- Was made between September 20, 2024 and March 31, 2026.

I will resolve to YES if, before the end of March 2026, there are 3 separate qualifying individuals who have made qualifying statements, documented on this market. I will resolve to NO at the close of the market otherwise.
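For anyone who wants to track candidate statements against these criteria, here is a minimal sketch of the resolution rule in Python. It is only an illustration, not how the market is actually resolved: the class names, field names, and boolean flags are all hypothetical, and every flag (for example whether a statement "calls for a ban") is still a human judgment call made by the market creator.

```python
from dataclasses import dataclass
from datetime import date

# Offices and dates copied from the criteria above; all identifiers are hypothetical.
QUALIFYING_OFFICES = {"President", "Vice President", "US Senator",
                      "US Representative", "US Governor"}
NOMINEE_OFFICES = {"President", "Vice President"}
WINDOW_START = date(2024, 9, 20)
WINDOW_END = date(2026, 3, 31)
REQUIRED_INDIVIDUALS = 3


@dataclass(frozen=True)
class Politician:
    name: str
    offices_held: frozenset = frozenset()             # current or former offices
    major_party_nominations: frozenset = frozenset()  # Democratic/Republican nominations


@dataclass(frozen=True)
class Statement:
    speaker: Politician
    made_on: date
    # Each flag below records a human judgment, not something computed.
    directly_attributable: bool
    public: bool
    names_open_weight_models: bool
    calls_for_ban: bool          # a ban, not just regulation
    is_policy_position: bool     # not merely a hypothetical
    documented_on_market: bool


def is_prominent(p: Politician) -> bool:
    """True if the person meets either the office or the nominee condition."""
    return bool(p.offices_held & QUALIFYING_OFFICES
                or p.major_party_nominations & NOMINEE_OFFICES)


def statement_qualifies(s: Statement) -> bool:
    """True if the statement meets every listed condition, including the date window."""
    return (is_prominent(s.speaker)
            and WINDOW_START <= s.made_on <= WINDOW_END
            and s.directly_attributable
            and s.public
            and s.names_open_weight_models
            and s.calls_for_ban
            and s.is_policy_position
            and s.documented_on_market)


def resolves_yes(statements: list) -> bool:
    """YES needs qualifying statements from at least 3 separate individuals."""
    speakers = {s.speaker.name for s in statements if statement_qualifies(s)}
    return len(speakers) >= REQUIRED_INDIVIDUALS
```

Note that the count is over distinct individuals, so several qualifying statements from the same politician still count once.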

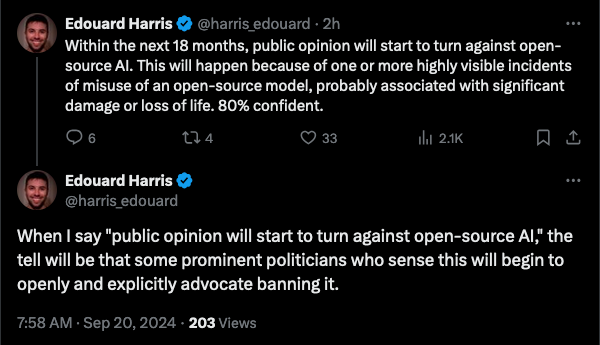

Question inspired by these posts:


I happen to be trading down after seeing the AI Action Plan, but my credence actually went down a while ago due to the double punch of (1) Llama 4 being a failure, and (2) my expectation that the OAI OS model will demo reasonably effective safety features. Shoutout to my colleagues who pioneered tamper-resistant training!

@AdamK So I'd resolve (2) at 50% if it were a market. OAI did do pretraining data filtering, released cyber/bio eval results after malicious finetuning, and paid some lip service to tamper resistance methods, but performed no actual adversarial training.

The safety posturing is good for norms but does not lay a promising technical pathway to future open releases that meaningfully avoid adding marginal risk. Nonetheless, the lip service should be enough political cover to discourage politicians from asking difficult questions in the short term. I thus think this market is much closer to NO than it would have been without the OAI release. I think that's to our detriment since the state of biosecurity is a disaster and better general-purpose security measures probably won't happen without an AI impetus.

I continue to think that it's a mistake for OAI to frame open releases as an opportunity for good PR; it normalizes and encourages future releases without making it clear that they have to be conditional on sober cost-benefit analyses. Absent very serious technical progress in tamper resistance, bio/cyber externalities are going to make releasing sufficiently capable models very negative EV soon, if not already. Arguments around marginal risk and Chinese OS models are not a reason to enshrine the perception that OS releases are virtuous by default, though I am sympathetic to the argument that it's infeasible to discourage Chinese OS releases and that establishing intermediate safety norms is what matters most. Ultimately I'd question whether OAI or any external organizations that are enabling this positive posturing are actually improving the situation wrt biosecurity. DMs are open.

@CharlesFoster Under what circumstances would this bill count towards resolution?

@AdamK If Senator Hawley said this bill is needed because we should ban/prohibit open-sourcing of AI, or if he said it is intended to stop Meta from open-sourcing their Llama models, that would count. Likewise for other qualifying politicians.

@CharlesFoster The intent of this market was to assess whether the quoted tweet is accurate, but I will resolve based on the letter of the resolution criteria.

@Bayesian Not really. It seems like I'm the main YES holder at this point, but I still want to bump up my position against whoever's interested.

Does such a call for models beyond a certain compute or capability threshold count?

@CalebW seconded. This needs to be operationalized. Unless we're expecting people to call for AlexNet to be banned.

@CalebW @JBreos The spirit of the question is meant to cover broad calls for outlawing open-source advanced AI, like future Llama releases. If the politician said “no more releasing weights at GPT-4 levels of compute and above”, I would think that should count. If they just ask for some narrow restriction, like that AI projects need to be closed-source to be eligible for military funding, I would think that should not count. They don’t need to call for AlexNet to be banned. I’m open to suggested operationalizations to pin this down.

N.B. I was hoping the account that wrote the Tweets inspiring this market would clarify what they meant by a ban, but I haven’t gotten a response from them yet. https://x.com/cfgeek/status/1837206459391824125?s=46