Resolves YES if at least one of the most powerful neural nets publicly known to exist by the end of 2026 was trained using at least 10^26 FLOPs. This is ~3 exaFLOP/s-years. It does not matter whether the compute was distributed, as long as one of the largest models used it. A neural net trained with 10^26 FLOPs that is inferior to other models does not count. Lower-precision floating point, such as fp32, fp16, or fp8, is permitted.
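A quick sanity check of the "~3 exaFLOP/s-years" conversion, as a minimal sketch. It assumes a Julian year of 365.25 days; the function name is illustrative only.

```python
# Convert a training-compute budget in FLOPs into exaFLOP/s-years.
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, ~3.156e7 s (assumption)
EXA = 1e18  # 1 exaFLOP/s = 1e18 floating-point operations per second


def exaflop_s_years(total_flops: float) -> float:
    """Years a machine sustaining 1 exaFLOP/s would need to perform total_flops."""
    return total_flops / EXA / SECONDS_PER_YEAR


threshold = 1e26
print(f"{exaflop_s_years(threshold):.2f} exaFLOP/s-years")  # prints 3.17
```

So 10^26 FLOPs is 10^8 seconds at 1 exaFLOP/s, a little over three years.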

Resolves NO if no such model exists by the end of 2026.

If there are no good estimates of the training compute used by the top models, resolves N/A.

https://www.semianalysis.com/p/google-gemini-eats-the-world-gemini

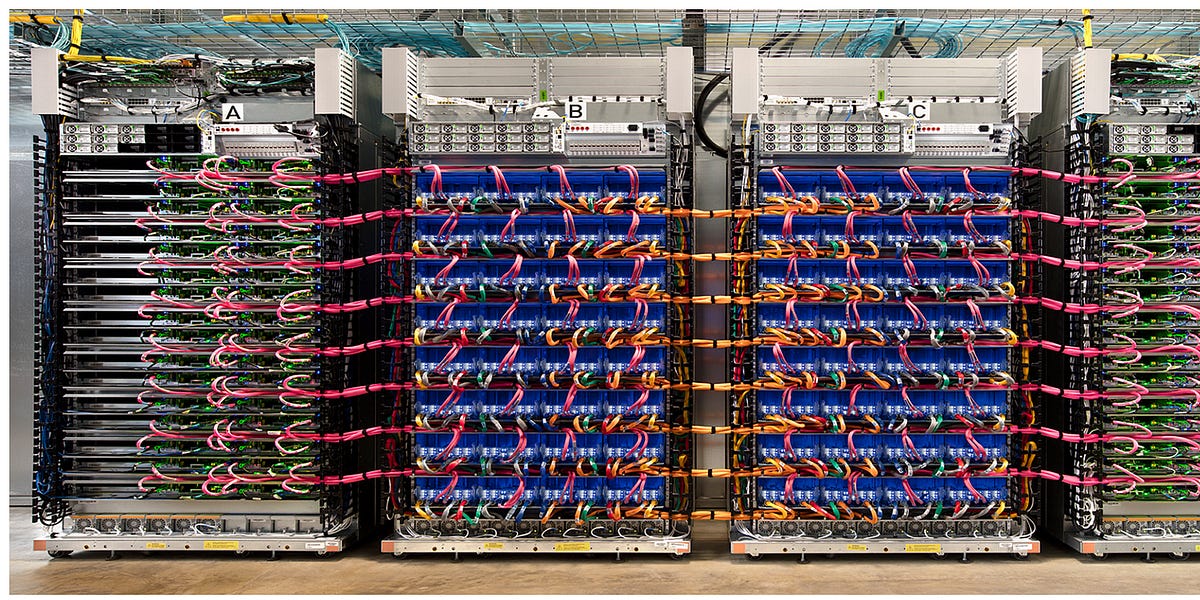

Furthermore, on Gemini, Google’s upcoming LLM, has been iterating at an incredible pace. They have access to multiple clusters of multiple TPU pods. We are told 7+7 pods for Gemini. We believe that the first was trained on TPUv4. We believe these pods are also not using the maximum 4096 chips, but instead a somewhat lower number for reliability and hot-swap of chips. If all 14 pods were used for ~100 days at reasonable MFU, this gets to a bit north of 1e26 hardware FLOPS for training Gemini.
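The SemiAnalysis arithmetic can be roughly reproduced as below. This is a back-of-envelope sketch, not their method: it assumes a TPUv4 peak of 275e12 bf16 FLOP/s per chip, and the chips-per-pod values are hypothetical, since the quote only says "somewhat lower" than 4096. "Hardware FLOPs" here means peak throughput times wall-clock time; multiplying by an assumed MFU gives the FLOPs actually spent on the model.

```python
# Back-of-envelope estimate of Gemini training compute from the quoted setup.
# Assumptions (not stated in the quote): TPUv4 peak = 275e12 FLOP/s (bf16),
# hypothetical chips-per-pod values below the 4096 maximum.
TPUV4_PEAK_FLOPS = 275e12
SECONDS_PER_DAY = 86_400


def hardware_flops(pods: int, chips_per_pod: int, days: float,
                   peak: float = TPUV4_PEAK_FLOPS) -> float:
    """Peak hardware FLOPs available: chips x peak rate x wall-clock seconds."""
    return pods * chips_per_pod * peak * days * SECONDS_PER_DAY


def model_flops(hw_flops: float, mfu: float) -> float:
    """FLOPs spent on the model, given an assumed model FLOPs utilization."""
    return hw_flops * mfu


for chips in (3_000, 3_500, 4_096):  # hypothetical pod sizes
    hw = hardware_flops(pods=14, chips_per_pod=chips, days=100)
    print(f"{chips} chips/pod: {hw:.2e} hardware FLOPs")
```

With 14 full pods for 100 days this lands around 1.4e26 hardware FLOPs, so "a bit north of 1e26" is consistent with pods somewhat below 4096 chips.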

If we trust SemiAnalysis, this market will easily resolve YES.

See also:

@Amaryllis 2^<number> -> 10^<number>? (This seems to be replicated in at least one similar comment on another of these markets as well.)

@Lorec Oops! Sorry. Thanks for catching. Should be fixed now.

(Internally, I prefer to think in log2, but the rest of the ML community prefers log10, so, confusion.)
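To see why the log2/log10 mix-up matters, here is a small sketch converting exponents between the two bases (function names are illustrative). 10^26 is about 2^86.4, whereas 2^26 is only about 6.7e7.

```python
import math


def log10_to_log2(exponent10: float) -> float:
    """Express 10**x as a power of two: 10**x == 2**(x * log2(10))."""
    return exponent10 * math.log2(10)


def log2_to_log10(exponent2: float) -> float:
    """Express 2**x as a power of ten: 2**x == 10**(x * log10(2))."""
    return exponent2 * math.log10(2)


print(f"10^26 = 2^{log10_to_log2(26):.2f}")  # prints 10^26 = 2^86.37
print(f"2^26 = 10^{log2_to_log10(26):.2f}")  # prints 2^26 = 10^7.83
```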