Resolves YES if at least one of the most powerful neural nets publicly known to exist by end of 2026 was trained using at least 10^27 FLOPs. This is ~30 exaFLOP/s-years. It does not matter if the compute is distributed, as long as one of the largest models used it. A neural net which uses 10^27 FLOPs but is inferior to other models does not count. Low precision floating point such as fp32, fp16, or fp8 is permitted.

Resolves NO if no such model exists by end of 2026.

If we have no good estimates of training compute usage of top models, resolves N/A.

> We used Multislice Training to run what we believe to be the world’s largest publicly disclosed LLM distributed training job (in terms of the number of chips used for training) on a compute cluster of 50,944 Cloud TPU v5e chips (spanning 199 Cloud TPU v5e pods) that is capable of achieving 10 exa-FLOPs (16-bit), or 20 exa-OPs (8-bit), of total peak performance.

- https://cloud.google.com/blog/products/compute/the-worlds-largest-distributed-llm-training-job-on-tpu-v5e

Note that for resolution purposes, I will permit 8-bit precision if they can productively utilize it in training.

If I'm doing my math right, it would take ~18 months for this to satisfy the requirements of this market. (Assuming they could get full peak utilization out of it.)
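
A rough check of that arithmetic, as a sketch rather than a definitive estimate: it takes the peak figures from the Google quote above (10 exa-FLOP/s at 16-bit, 20 exa-OP/s at 8-bit) and the 10^27 threshold from the market description. The `utilization` parameter and the helper name `months_to_reach` are my own additions for illustration, and real training runs fall well short of peak.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, about 3.16e7 s

TARGET_OPS = 1e27    # market threshold: total training operations
PEAK_16BIT = 10e18   # quoted peak: 10 exa-FLOP/s (16-bit)
PEAK_8BIT = 20e18    # quoted peak: 20 exa-OP/s (8-bit)


def months_to_reach(target_ops, ops_per_second, utilization=1.0):
    """Months of continuous training needed to accumulate target_ops.

    utilization is a hypothetical fraction of peak actually achieved
    (1.0 means full peak, as the comment above assumes).
    """
    seconds = target_ops / (ops_per_second * utilization)
    return seconds / SECONDS_PER_YEAR * 12


# The threshold expressed in exaFLOP/s-years (1 exaFLOP/s = 1e18 FLOP/s).
exaflop_years = TARGET_OPS / (1e18 * SECONDS_PER_YEAR)

months_8bit = months_to_reach(TARGET_OPS, PEAK_8BIT)
months_16bit = months_to_reach(TARGET_OPS, PEAK_16BIT)

print(f"threshold: {exaflop_years:.1f} exaFLOP/s-years")
print(f"8-bit at full peak:  {months_8bit:.1f} months")
print(f"16-bit at full peak: {months_16bit:.1f} months")
```

So the ~18 month figure only works at 8-bit peak throughput; at 16-bit the same cluster would need twice as long, and any utilization below 100% stretches both numbers proportionally.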

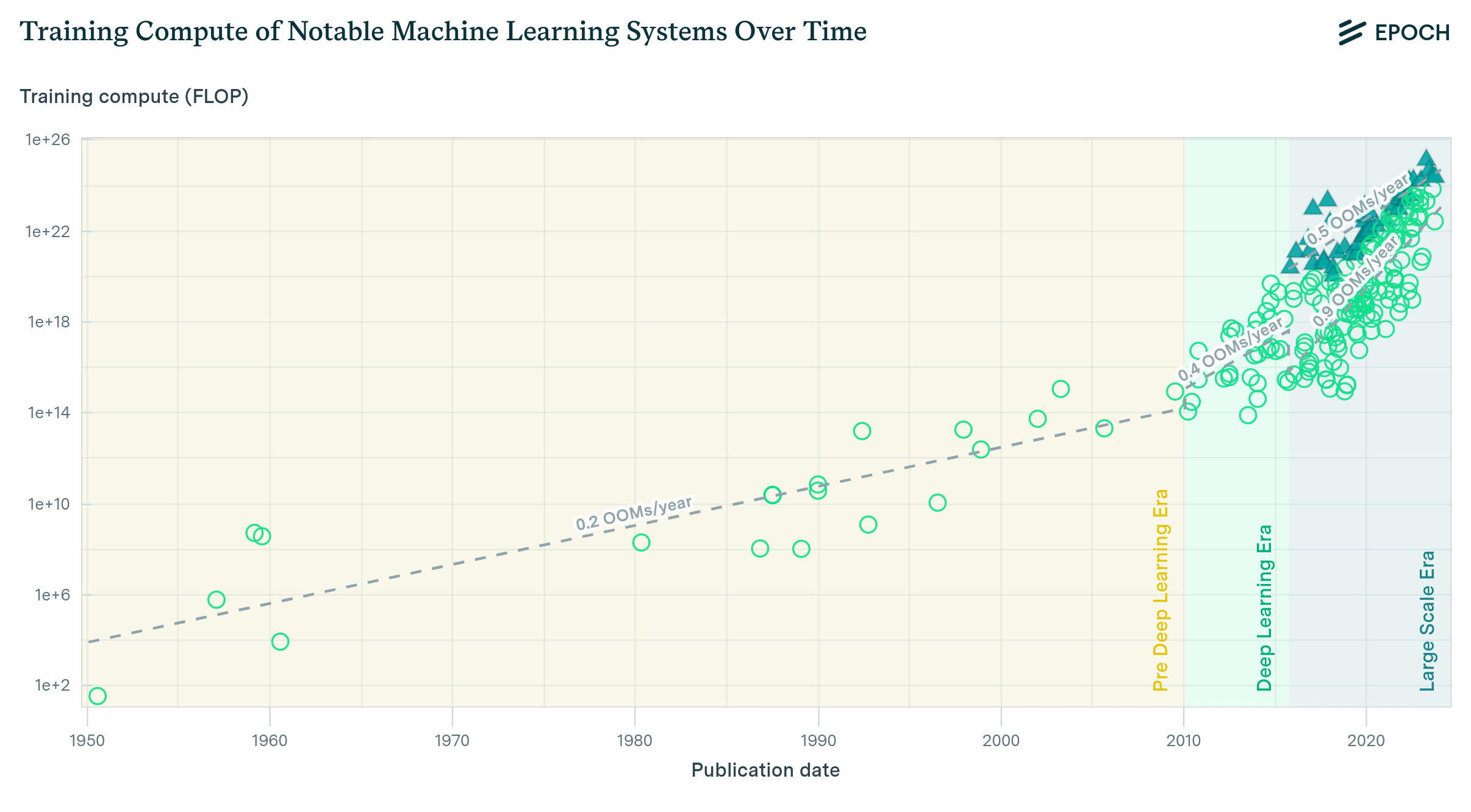

At 0.5 OOMs/year¹, and with top models already at ~10²⁴ to 10²⁵ FLOPs, this seems like a no-brainer.

¹: https://epochai.org/blog/compute-trends
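
To make the extrapolation concrete, here is a minimal sketch of how long the gap takes to close at a constant growth rate. It assumes the 0.5 OOMs/year trend holds steadily and uses the two starting points mentioned in the comment; the function name `years_to_threshold` is hypothetical, and the result depends on which year those starting figures refer to, which the comment does not say.

```python
import math


def years_to_threshold(current_flops, target_flops=1e27, ooms_per_year=0.5):
    """Years until training compute reaches target_flops, assuming it
    grows by a constant ooms_per_year orders of magnitude each year."""
    gap_ooms = math.log10(target_flops) - math.log10(current_flops)
    return max(gap_ooms, 0.0) / ooms_per_year


years_from_1e24 = years_to_threshold(1e24)  # gap of 3 OOMs
years_from_1e25 = years_to_threshold(1e25)  # gap of 2 OOMs

print(f"from 1e24 FLOPs: {years_from_1e24:.1f} years")
print(f"from 1e25 FLOPs: {years_from_1e25:.1f} years")
```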

See also: