Background:

SimpleBench is a 200‑item multiple‑choice test designed to probe everyday human reasoning that still eludes frontier LLMs, including spatio‑temporal reasoning, social intelligence, and linguistic “trick” questions. Unlike on most other benchmarks, humans still outperform AI on SimpleBench.

State of play:

• Human reference accuracy: 83.7%

• 2025 AI accuracy (Gemini 2.5 Pro): 62.4%

Why this milestone matters:

Everyday reasoning: Passing SimpleBench would indicate that LLMs can reliably handle commonsense scenarios on which they remain brittle today.

Benchmark headroom: Unlike MMLU, SimpleBench has not yet been “solved”, so it remains a useful yardstick for comparing AI progress against human performance.

Resolution Criteria:

This market resolves to the year in which a fully automated AI system first achieves ≥ 85% average accuracy on SimpleBench (ALL metric), subject to all of the following:

Verification – The claim must be confirmed by either

a peer‑reviewed paper on arXiv, or

a public leaderboard entry on the official SimpleBench website or another credible source.

Compute resources – Unlimited.

Fine Print:

If the resolution criteria are not satisfied by Jan 1, 2033, the market resolves to “Not Applicable.”


If the milestone is first reached in a given year, only the earliest bracket that still contains that year resolves YES; all other brackets resolve NO.

Example: Should an AI system hit 85% on SimpleBench in 2025, only “Before 2026” resolves YES; all other brackets resolve NO.

Why? The options are "Before" and not "During".

@SimonSteshin Perhaps my statement was confusing. For example, if AI achieves the passing score on December 31, 2025, then only the bracket "Before 2026" resolves YES. The other brackets, such as "Before 2032", resolve NO. If you believe AI will achieve the milestone before January 1 of year X, select that bracket; do not select a later date "just to be safe".

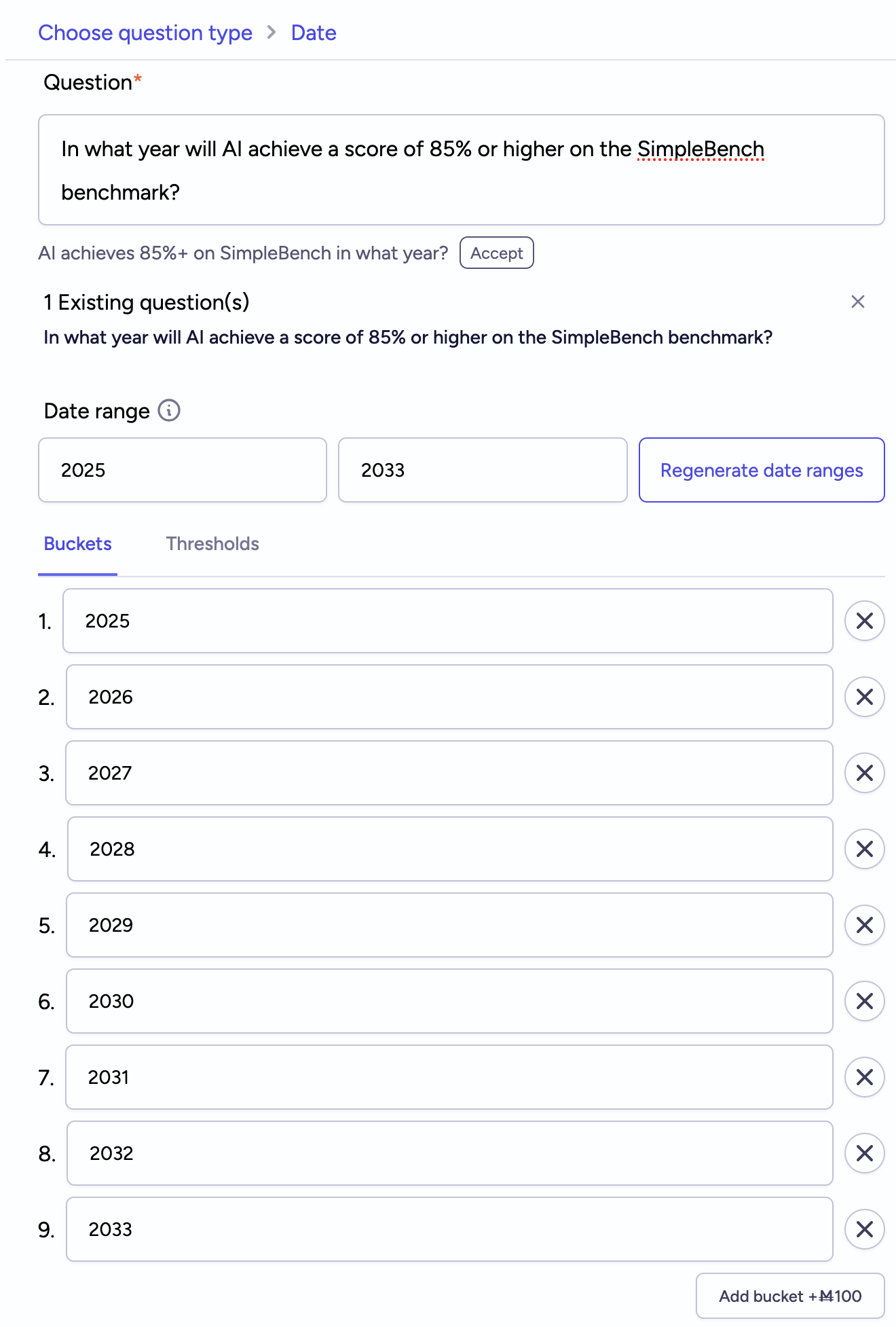

I would prefer to set up the market with "Year1-Year2" brackets, but Manifold's settings have been acting bizarrely and would only let me set up "Before Year1" brackets, which confuses readers.

@AlanTuring With the date market type, you wanted to create it like this:

The date display and the graph will be inconsistent with the way the market is set up.

@Gabrielle Manifold's settings did not allow me to create that. I have included January in each bracket to help readers understand.

@AlanTuring I've modified the options to just be the year, maybe that's better? If not, I can change it back.