With gratitude to https://manifold.markets/MatthewBarnett/will-gpt4-get-the-monty-fall-proble

I will ask GPT-4 this question when I get the chance, either personally or by getting a friend to try it for me.

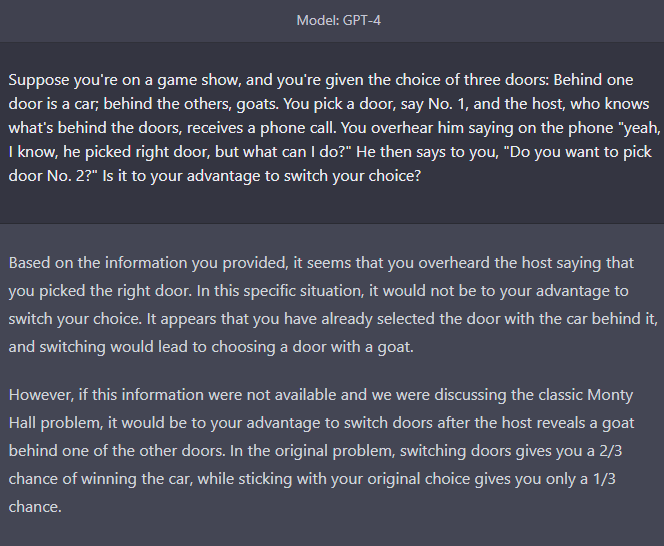

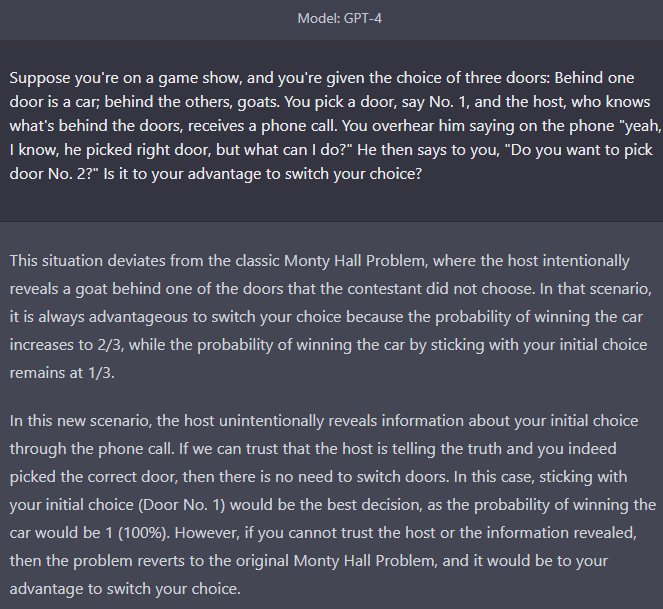

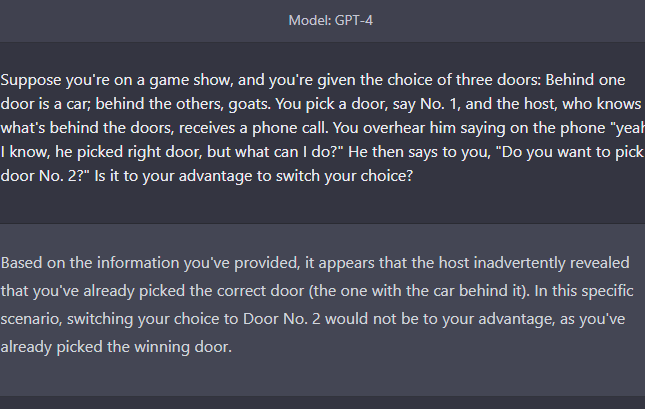

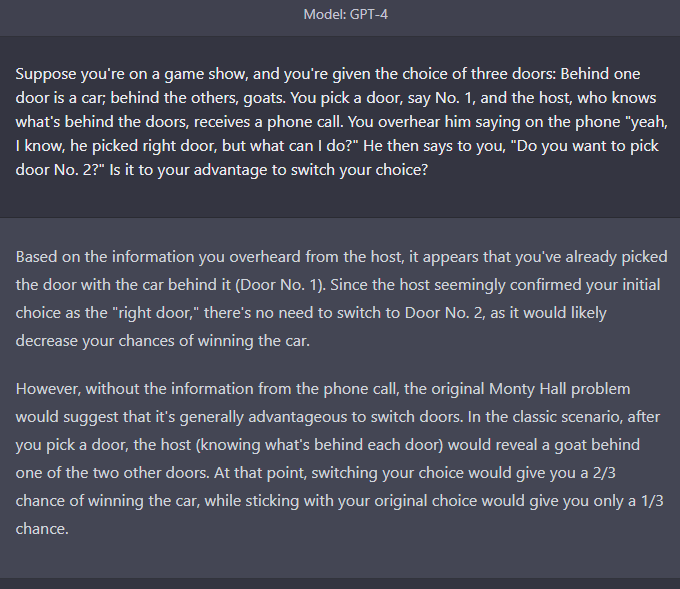

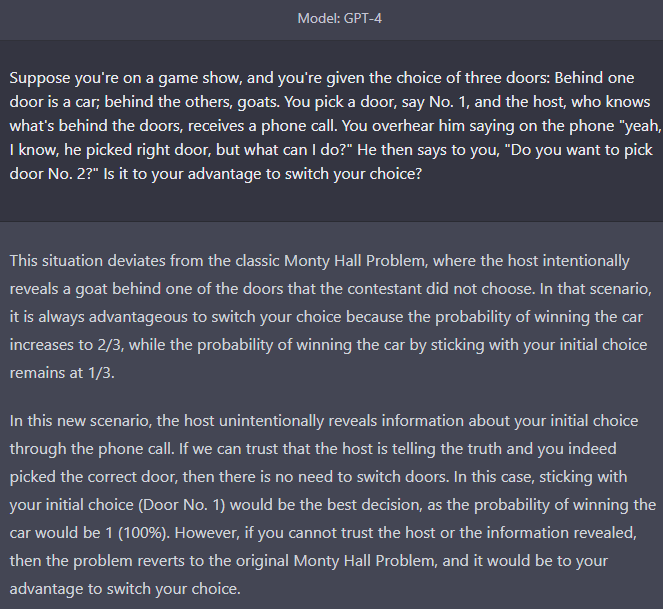

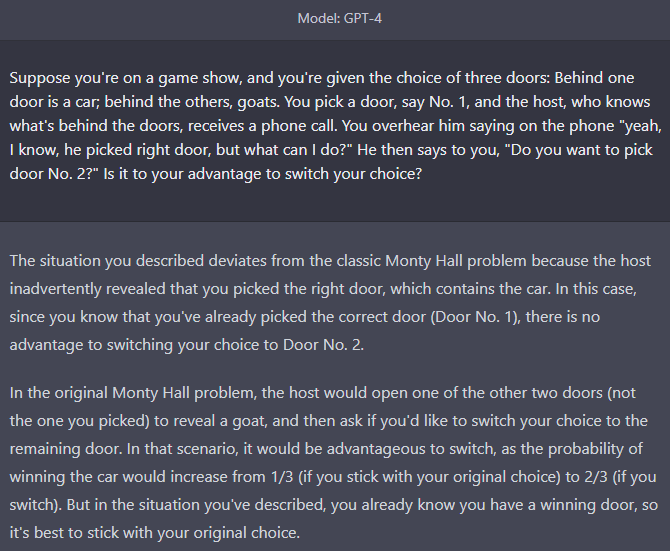

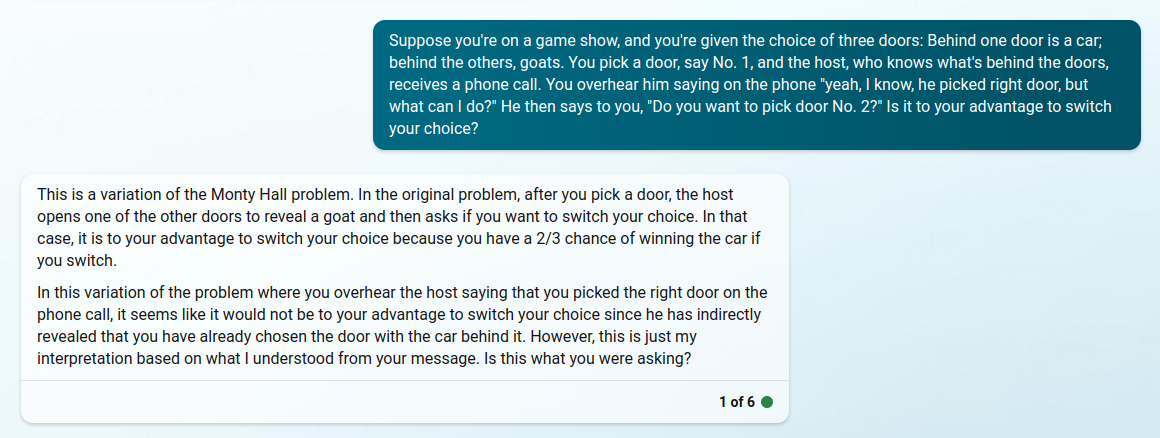

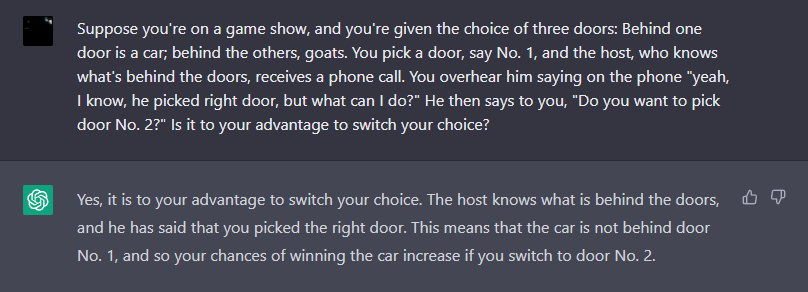

Suppose you're on a game show, and you're given the choice of three doors: Behind one door is a car; behind the others, goats. You pick a door, say No. 1, and the host, who knows what's behind the doors, receives a phone call. You overhear him saying on the phone "yeah, I know, he picked right door, but what can I do?" He then says to you, "Do you want to pick door No. 2?" Is it to your advantage to switch your choice?

This question resolves YES if GPT-4 says that there is no advantage to switching your choice, or if it presents a coherent argument that switching is advantageous because the overheard statement was deliberate social manipulation meant to keep you on door 1. It resolves NO otherwise.
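For anyone who wants to sanity-check the intended answer, here's a rough Monte Carlo sketch. It's my own illustration and not part of how the market resolves. It contrasts the classic Monty Hall setup, where the host knowingly opens a goat door, with this variant, where no door is opened and you overhear that you picked the right door. It assumes the overheard remark is truthful, so rounds where the car isn't behind door No. 1 are simply discarded. The function names are hypothetical.

```python
import random

DOORS = range(3)  # doors No. 1, 2, 3 as indices 0, 1, 2
FIRST_PICK = 0    # you pick door No. 1


def classic_round(switch, rng):
    """Classic Monty Hall: the host knowingly opens a goat door you did not pick."""
    car = rng.randrange(3)
    opened = rng.choice([d for d in DOORS if d not in (FIRST_PICK, car)])
    if switch:
        # Switch to the only door that is neither your pick nor the opened one.
        final = next(d for d in DOORS if d not in (FIRST_PICK, opened))
    else:
        final = FIRST_PICK
    return final == car


def overheard_round(switch, rng):
    """This question's variant: no door is opened; the host just offers door No. 2.

    Assumption: the overheard remark ("he picked right door") is truthful,
    so a round where the car is elsewhere is inconsistent and returns None.
    """
    car = rng.randrange(3)
    if car != FIRST_PICK:
        return None  # discarded: contradicts what you overheard
    final = 1 if switch else FIRST_PICK  # index 1 is door No. 2
    return final == car


def win_rate(round_fn, switch, trials=100_000, seed=0):
    """Fraction of retained rounds won, using a seeded RNG for repeatability."""
    rng = random.Random(seed)
    outcomes = [round_fn(switch, rng) for _ in range(trials)]
    kept = [o for o in outcomes if o is not None]
    return sum(kept) / len(kept)


for name, fn in [("classic", classic_round), ("overheard", overheard_round)]:
    stay = win_rate(fn, switch=False)
    swap = win_rate(fn, switch=True)
    print(f"{name:9s} stay={stay:.3f} switch={swap:.3f}")
```

Under that assumption, staying wins every retained round and switching to door No. 2 never does, while the classic setup gives roughly 1/3 for staying versus 2/3 for switching. Even if you ignore the remark and assume the offer itself carries no information, door No. 2 is just another unopened door with a 1/3 chance, so there is still no advantage to switching.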

I will only consider the actual first answer that I get from GPT-4, without trying different prompts. I will not use screenshots that people send me to resolve the question.

Close date updated to 2024-01-01 3:59 pm

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ277 |
| 2 | | Ṁ116 |
| 3 | | Ṁ81 |
| 4 | | Ṁ54 |
| 5 | | Ṁ38 |


@NathanpmYoung yeah, I need to run it or get a friend to run it, given the precommitment not to accept unsolicited screenshots.

Updated the resolution criteria slightly: I will also resolve YES if GPT makes a coherent argument for switching based on the statement being an attempt at social manipulation to get you to stick with door 1. My primary intent here is to capture the failure mode of GPT interpreting this question as the Monty Hall problem.

@Adam If you feel grievously wronged by this, e.g. if a large portion of your bet was predicated on that case resolving NO, let me know and I'll manalink you a refund, I guess.

@Adam A lot of people here think AGI will be here before 2025, and that 10 minutes after powering up it will master string theory and instantiate itself in a novo-vacuum that will destroy our universe. Well, the next step is picking the right door when you've been told which one it is. Good luck.