How this works: You ask me a question in the comments, and I give my position as an answer (or decline to answer; I may do that, but will try to avoid it). You then try to convince me otherwise.

To make this not literally free, I have to make a significant update. That's necessarily subjective and fuzzy, but it'll have to involve a new piece of interesting and/or useful information.


Do you believe in free will?

Do you think morality is objective?

Do you subscribe to some type of physicalism? (i.e. the universe is primarily physical)

Do you think there's a singular self that persists over time (i.e. jackson polack)

Do you think the outside world is a meaningful concept and that it exists?

Do you think it's morally consistent for you to eat meat?

Do you think it's morally consistent for you to not give a lot of your income to charities?

What is your answer to Newcomb's paradox?

Try to guess what I mean and don't ask clarifying questions right away. I'm more just trying to get a sense of what you believe so I can figure out on which topic I'm most likely to change your mind.

free will / objective morality

Gonna "or decline to answer" these two, too messy for this market.

Do you subscribe to some type of physicalism? (i.e. the universe is primarily physical)

Yes. Every existing type of non-physicalism seems to either make all sorts of directly false and disprovable claims or retreat to some meaningless god-of-the-gaps style position. And everything from the large-scale structure of the universe to subatomic particles to human life to computers to the weather to ... seems to be very well described by physics. Even if someone wanted to fit free will or objective morality into that, it'd still be 'primarily physical', because the physical sure seems to be the main thing.

Do you think there's a singular self that persists over time (i.e. jackson polack)

I think this is asking if I agree with non-self or similar ideas, and I do! What most people call a 'self' is just their uncritical perception of their social situation. One's physical being can participate in a multitude of contingent social or physical situations, most of which are not well described at all by what they'd currently call "their self". And then in more broad or philosophical senses of self I agree that everything is contingent and impermanent.

Do you think the outside world is a meaningful concept and that it exists?

This is a weird philosophical thing but ... the sun was here before we were, quantum physics was here before we were, so more or less yeah

Do you think it's morally consistent for you to eat meat?

Yes, especially if the meat is ethically raised (and thus pretty expensive).

Do you think it's morally consistent for you to not give a lot of your income to charities?

I think one of the reasons EAs give a lot of their income to charities (primarily caring about their causal impact on the course of the world & other beings, as opposed to strange social spandrels like nice cars and houses) is very good. But I think a careful accounting of even purely welfare-utilitarian, long-term counterfactual impact doesn't necessarily put 'giving income to charities' at the top. If I (for example) donate 0% of my income to charity and do mechanistic interpretability work or run a successful blog about rationalist philosophy, that may be strictly better than working at Jane Street and donating 20%, because of the billion trillion future lives or something. Value pluralism is incorrect and misguided. I also have other responses here that I will decline to give.

What is your answer to Newcomb's paradox?

Newcomb's paradox has nothing to do with being a good partner in multi-participant real-life situations, morality, ethics, or anything like that, and all of the rationalist focus on it is confused. I, as a result, don't 'have' an answer to it.

I don't think I'll have my mind changed on this, as I basically think it depends on the circumstances and the person, and parts of both are useful. I'm not even sure the distinction really cleaves at the joints, anyway. But I'll try to present something where you might disagree:

I know some people who respond very poorly to anything 'tough-love' like, and other people who love it (both explicitly and revealed preference-ly). So I just tailor my approach to the situation

I do think that, generally, people who don't appreciate / take harsh criticism or engage in aggressive competition are missing out on something, though. Just from a consequentialist perspective, I think you want to be able to do both. Deeply and quickly understanding something like 'I suck at this and need to totally change my approach in order to succeed' might be painful, and having someone directly state that to you when you previously thought you were fine is probably 'tough love', but being able to understand that and adjust your approach correspondingly in a very short period of time is valuable. Whereas an 'unconditional love' approach basically involves either hiding the bad thing from the person or very slowly introducing it to them so they don't notice.

But "everyone would benefit if they responded well to it in an ideal situation" is very different from "everyone should be treated like that as they are now".

@jacksonpolack So, my current conviction is that the implied assumption (that honest/sincere/productive negative feedback must be harsh/tough, and that a loving approach must hide things) is false. I think you can have relationships with others (and yourself) that are loving and embracing with little or no conditions while also being able to communicate criticism/negative feedback quickly and directly. And in my experience those are, in the vast majority of situations, better at achieving actual long-term improvement/growth than either "tough love" or "loving but hiding". Would that be a good topic?

I think I agree already, although it really depends on what you mean. I can imagine people I entirely agree with saying that, and people I have significant disagreements with saying that.

Here's an example of where we might disagree:

A non-insubstantial portion of the activities randomly selected people undertake are ones that I think are facially unproductive, harmful, and not worth doing (it's not an uncommon sentiment, although it's phrased a bit harshly here). If I spent a significant amount of time doing one of those, I'd like it if someone told me why it was bad, quickly and bluntly, e.g. (I picked an easy example, but most of them are less easy) "Hey, just stop gambling. This is stupid. You are wasting your time. You are being taken advantage of for money. The thrill of gambling is unsustainable and unfulfilling. At best you'll throw away a few hundred hours, at worst you'll go broke. <technical details on why thing bad>". I'd consider the directness to be a sign of seriousness, and I'd seriously think about it without, like, being mad the person wasn't being nice to me. Many people don't like being directly confronted like that, though. To the extent that your synthesis / third position thinks doing that is good (in cases where it works), I agree. To the extent your synthesis doesn't include statements like "this is stupid, you are wasting your time", I don't think I do. In the case of gambling, this kind of advice is readily available, but it is less readily available for other, more controversial or more widely accepted practices. For many of these practices, making the above statement would not be seen positively. I don't think any direct way to phrase the facts at hand would be seen positively. Consider the equivalent statement, phrased in the same way, about alcohol or marijuana among heavy users!

And if your synthesis that's neither tough nor dishonest includes things like "this is stupid", then I agree. If it doesn't, then I don't, exactly.

Tough love might also mean, like, coercion - "if you don't get a good score on your math homework, no watching TV this weekend". I'm generally not a fan of that, although I'm broadly uncertain on both why it's bad and why it's so common.

Again, note that I'm describing the kinds of interactions I think people should be able to have, not the ones to have with people as they are now. There are some subcultures where, at an extreme, saying "dude that is fucking retarded" is barely more than conversation filler, and others where the r-slur sets off social fire alarms. Even among the former, direct challenges to important parts of the social fabric (e.g. alcohol) might not be seen positively.

@jacksonpolack I'd agree that it seems that any specific disagreement on this topic will be relatively narrow and thus maybe not worth pursuing here. Some extra thoughts follow, because I feel like elaborating on this:

I think form is pretty important in tough conversations (but you need to toe the line to not overshadow the content or become hypocritical). To me a lot of this boils down to being precise and open about what is happening. E.g. saying "this is stupid" is IMHO usually much less productive than "_I think_ this is stupid" or "It worries me deeply that you do this" or "I've seen people do this and it ended badly" - and a big contribution to this is that in the latter variants you did the introspection necessary to describe the situation better.

A similar line of reasoning leads to some of my pet peeves in adult-kid communication. E.g. "Don't do this, you will hurt yourself" can lead to problems once the kid tries "doing this" and manages not to get hurt. Instead, "Don't do this, I worry that you'll hurt yourself" is usually both more truthful (the things being discouraged, e.g. climbing on top of something, are usually not guaranteed or even highly likely to lead to harm, while the adult's fear is usually real) and more respectful (you implicitly acknowledge that the kid may have a different opinion than you about the risk/benefit ratio of an action, and thus if the kid complies, they might be doing it in part as a favor to you and not fully in their own interest).

Specifically for things like gambling, I think (though I have neither a good grasp of any underlying data nor direct experience) that straight-up direct criticism is highly unlikely to work well. People just become defensive (if they don't yet understand they are in trouble) or take it as more evidence that they are worthless people, feel bad, and get another "hit" to numb the pain. Preventing self-destructive behaviour is hard in general, but I think showing that you care about/love/accept the person, regardless of the behaviour, is a better starting point than most. I know people doing stuff similar to https://en.wikipedia.org/wiki/Person-centered_therapy and I am a fan of the approach, but once again, I do not have a full grasp of the underlying data (I am aware of the Dodo bird verdict, but think the situation is a bit more complex than what the evidence can discern).

I'd say some of the problems with "if you don't get a good score on your math homework, no watching TV this weekend" are that a) it is a show of force and disrespect: the kid is required to do something (presumably) difficult, while the parent chooses a threat that is relatively easy for them to enforce and has little obvious cost to them, and b) it is purely negative: there is no direct way in which not watching TV helps you get a better score in math. This points to variants that could IMHO definitely work pretty well in some contexts, such as "instead of watching TV, we'll be doing math together" (and then you set up circumstances for learning that are as cosy as possible, showing that you really care).

The answer to why people commonly coerce, do tough love or at the other extreme provide love but avoid criticism/difficult discussions is IMHO simple: it is way easier. Combining loving and respectful approach with open, honest communication for criticism or other tough topics requires a lot of internal introspection/emotional work, which takes time, skill and energy. And I don't blame anybody for not having any of those. I just don't think the alternative approaches work very well.

To get some idea of what positions you currently hold:

How serious a problem do you think climate change is? How tractable a problem do you think it is?

How likely do you think it is that people could in theory be revived at some point in the future if cryopreserved immediately after death?

What current technologies or trends do you think are overhyped?

Do you think it's ethical to consume meat?

What's your stance on drug legalization or decriminalization? (I'm not sure what counts as political for you, clarification on that would be helpful too)

What do you think of as your most controversial position?

What are your primary life goals?

1\) I think climate change is happening, and that the popular 'sense' of how bad it is is severely overstated, but IPCC reports seem fine. It's certainly a tractable problem that researchers, rich people, large corporations, and all sorts of other people are actively trying to address. I posted a bunch about it here: https://manifold.markets/NoaNabeshima/if-i-read-the-ipcc-synthesis-report

2\) That seems like it'd depend a lot on idiosyncratic details of the way various parts of neurobiology work that I do not know about, and that 'the scientific community' as a whole may or may not know about. So, dunno, no strong positions here. At a guess, people who think it is CERTAINLY possible are overconfident, but I don't even know enough to state that as anything other than a guess.

3\) It's been many years since saying quantum is overhyped was interesting, and years since saying crypto is overhyped was interesting. Self-driving cars were probably overhyped some years ago because people thought they were coming sooner than they did, but progress has been steady. I think anti-aging is a bit overhyped, and is less tractable than many researching it claim, but that's not really what you were looking for.

4\) Yes. That'd be a very long discussion though, I have thought about it a lot (and agree that most meat-eaters' justifications are totally unconsidered and facially incompatible with other ethical principles they hold)

5\) This is plainly politics, but: most drugs are, on an individual level, as an individual choice, not worth using. This includes marijuana and LSD. I think questions of 'should this be banned' are hard and very tangled up with existing social and political circumstances in ways that "should any individual do X" is not, so I'm not sure. In particular, a lot of people currently consume marijuana in ways that are not obviously much worse than the ways they consume alcohol. And even if I think people shouldn't do LSD, there's no way it's more "harmful" than someone playing too much Candy Crush or getting really into homeopathy. Cocaine, heroin, and the like are transparently harmful, and I think broad coercive measures should be used in some way against their use and distribution, although I'm not entirely sure what form that should take. Making something illegal might be a Schelling point for tribal arguments, but there's a big difference between "put everyone who smokes meth in a big metal box and leave them there for ten years" and "put everyone involved in producing or distributing meth across state lines in the box". And I'm not sure which, if either, of those is good.

6\) This is a dodge, but - AI alignment to what Yud calls 'human values' is harder than Yud thinks it is.

7\) also a dodge, accurately predict the results of future events on manifold!

@jacksonpolack on 6: you're saying you are less optimistic that AI can be aligned (in our timeline/universe) than Eliezer?

@jacksonpolack can you write out a quick model of:

your model of Eliezer's beliefs on p(Doom)

Your model of p(Doom)

Eliezer has previously made statements to the effect that "alignment is doable, we just don't have the information necessary" or "less than a book's worth of information, airdropped from the future, is enough for alignment"

I'm not sure that's true! I don't see any necessary reason why agents of any 'capability level' will strongly hold to values - humanity's path seems a lot more chaotic, with values being adopted or given up for various local reasons. All the human values we like seem to be a combination of historical accidents and either person-scale or civilization-scale fitness. I'm not sure humans are 'aligned by default' in any sense that scales beyond where we are now.

Eliezer's p(doom): We'll construct superhuman, capable agents, and without ways to guide their values they'll take instrumentally convergent, goal-directed actions towards ends that will necessarily clash with humans.

My p(doom): Yeah, but - Eliezer's in the "questioning modernism" stage of understanding AI. We need to go postmodern. Value ~ grand narrative, utility function ~ truth / facts.

@jacksonpolack The part of your beliefs I will focus on updating first is: why would agents of any capability level strongly hold to a given value?

This is commonly referred to as "Goal-Content Integrity". In "Formalizing Convergent Instrumental Goals" [1] it is summarized as follows: "...most highly capable systems would also have incentives to preserve their current goals (for the paperclip maximizer predicts that if its goals were changed, this would result in fewer future paperclips)". Where do you disagree with that?

[1]: http://intelligence.org/files/FormalizingConvergentGoals.pdf

(Setting this aside for now: I feel like GCI being wrong would lead to easier alignment, since if it is false, corrigibility becomes much easier.)
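A toy sketch of the Goal-Content Integrity argument quoted above, under loudly stated assumptions: the agent has one fixed, explicit utility function and a world model that predicts outcomes. Those assumptions are exactly what the reply below disputes. The functions `paperclip_utility`, `predicted_outcome`, and `accepts_goal_change` and all numbers are hypothetical, not taken from the paper. The point it shows is that a proposed goal change gets scored by the agent's *current* utility function.

```python
# Toy illustration of goal-content integrity (hypothetical names and numbers).
# Assumes a fixed, explicit utility function and a perfect world model.

def paperclip_utility(outcome: dict) -> float:
    """Current utility function: count paperclips in the predicted future."""
    return outcome.get("paperclips", 0)

def predicted_outcome(goal: str) -> dict:
    """Hypothetical world model: the future if the agent pursues `goal`."""
    if goal == "paperclips":
        return {"paperclips": 1_000_000, "staples": 0}
    if goal == "staples":
        return {"paperclips": 0, "staples": 1_000_000}
    raise ValueError(f"unknown goal: {goal}")

def accepts_goal_change(current_utility, current_goal: str, new_goal: str) -> bool:
    """Accept a goal change only if the CURRENT utility function prefers
    the future that would result from pursuing the new goal."""
    keep = current_utility(predicted_outcome(current_goal))
    switch = current_utility(predicted_outcome(new_goal))
    return switch > keep

# The paperclip maximizer refuses to become a staple maximizer.
print(accepts_goal_change(paperclip_utility, "paperclips", "staples"))  # False
```

Nothing in the sketch says where `paperclip_utility` comes from or whether a real agent has one; it only shows why, *given* such a function, the agent resists changing it.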

would also have incentives to preserve their current goals (for the paperclip maximizer predicts that if its goals were changed, this would result in fewer future paperclips)

This assumes they already have fully generalized, fully crystallized, fully mapped, and fully coherent goals - and then if you have those, you'll preserve them.

This doesn't quite describe how humans act. Consider any individual person you know. Are their "goals" - the high-level ones they serve? Like - 'grow my company, preserve my nation, help the human race be happy'? Not exactly, because much of their action in service of that is really just, like, acting along social incentive gradients or believing socially accepted premises, and if said social incentives or ideas changed their actions would, on a large timescale, change too. So are their goals the low-level ones? If they were, optimizing / generalizing those doesn't go well. But even then, these same people will often betray their local incentive gradients or social ideas a bit in service of the stated higher goals - the person with an 'internal conflict' between his religious duty and material desires. Which of them is his real goal?

The basic idea is that that will just continue to occur in more capable intelligences. They'll locally pursue le convergent goals, but in service of what?

To start, I do not think humans are a good example of agents "at any capability level"; I believe we are pretty low on the scale of possible intelligence/rationality. Humans currently holding inconsistent goals does not imply that more capable agents will as well. Further, I believe as humans get more rational/intelligent/capable we see greater internal consistency in their goals/motivations. A child is more likely than the adult version of themselves to favor small short term rewards over greater long term rewards. A child is more likely to want to be friends with someone at the same time they scream at them/pick a fight. A child is more likely to say they want to be left alone/be independent and then beg for help than an adult.

Secondly, humans (and LLMs) are two of many different types of potential agents. It is very, very likely to me that a superintelligent agent, if it wanted to, could make an Artificial Intelligence with a stereotypical utility function. In response to your statement above ("I don't see any necessary reason why agents of any 'capability level' will strongly hold to values"), I find it very, very unlikely that a highly capable AI, given it has a utility function, would not have Goal-Content Integrity (as described in further detail by Bostrom in this market I just made).

Further, I believe as humans get more rational/intelligent/capable we see greater internal consistency in their goals/motivations

Yes, but the 'top level' of their goals/motivations continues moving up, so they don't become 'precisely coherent' at the top level.

An adult has more coherent 'eat food' goals than a child, but an adult also has new religious, political, or tribal goals that are themselves not coherent.

It is very very likely to me that a superintelligent agent, if it wanted to, could make an Artificial Intelligence with a stereotypical utility function.

'There is no text outside of the text': how do you distinguish between the process by which the agent finds out whether it's really satisfying its utility function, and the agent changing to a new utility function?

find it very very unlikely a highly capable AI, given it has a utility function, would not have Goal-Content integrity

If 'utility function' means what most people think it means, then this is true but vacuous as having a utility function is hard. If 'utility function' can describe any agent that acts at all, then it's vacuous in a different way - cats can act, so they have utility functions, but...

I meant 'highest-level values' when I said 'strongly hold any values', to be clear

@jacksonpolack I like your point of the 'top levels' of goals moving up!

On them not becoming precisely coherent at the top level, I think the trend shows human goals being more coherent, across 'levels', with higher levels of intelligence.

Working through this list of things IQ is correlated with from Wikipedia, most suggest a higher level of goal coherence (and none suggest a lower):

[Better] School performance: getting better grades in school makes it more likely you can succeed at your other goals through acquisition of respect/resources

[Better] Job performance: better job performance is same as school

[Weakly Higher] Income: income is instrumentally useful for nearly every goal (though rarely a goal in and of itself; earning to give vs working on specific cause areas)

[Weakly Lower] Crime: getting caught is very rarely useful for anyone's goals, and often harmful

[Better] Health and [lower] mortality: corrigibility in action!

Higher-IQ humans are less likely to be religious (and I think religion is internally inconsistent enough to serve as a proxy, albeit a low-quality one, for goal coherence). Higher-IQ humans are more likely to be happy (and I think it is harder to be happy with incoherent goals).

While I can see that more complex forms of life generally have a wider array of possible goals, and therefore a human seems less goal-coherent than an ant, within a species IQ (seems to) correlate with stronger goal coherence. For example, as LLMs improve, RLHF becomes more capable of getting them to have specific goals (although in no way do I trust RLHF as a viable alignment strategy). As chess AIs become smarter, each of their moves becomes more aligned with their goal of winning the game. As AlphaGo gets better, it makes fewer irrational plays.

On "there is no text outside the text": GPT-4 is better able than GPT-3 to avoid saying how to make a Molotov cocktail across a variety of instruction sets/prompt engineering attacks, and GPT-3 is better than GPT-2 at avoiding (trying to) say how to make Molotov cocktails (again, across a variety of instruction sets/prompts). As entities get smarter, they are better able to interpret the text and will converge towards a single interpretation.

On utility function - you said "I don't see any necessary reason why agents of any 'capability level' will strongly hold to values" - the hardness of having a utility function is explicitly out of bounds by nature of you saying "any capability level".

On a broader note, is the reason you have a higher p(doom) than Eliezer that you think weak goal coherence will make alignment harder (e.g. we can't reasonably do C.E.V)?

I think the trend shows human goals being more coherent, across 'levels', with higher levels of intelligence

Almost all of the smartest humans still have both confused & 'delegated' philosophical or civilization-level goals. They also constantly make mistakes in linking their, say, careers or medium-term projects to their high-level goals.

and I think religion is internally inconsistent enough to serve as a proxy, albeit a low-quality one, for goal coherence

It speaks to increased intelligence's ability to avoid specific incoherences! But plenty of intelligent people today are vague spiritualists, or care a lot more about gay rights than total utilitarianism would prescribe, etc.

As chess AIs become smarter, each of their moves becomes more aligned with their goal of winning the game

Right, a tic-tac-toe AI or a chess AI can saturate the complexity of its goal or environment's potential for complexity. I don't really see that happening IRL soon, there'll be adversarial between-agent competition and more technology to explore for a long time.

As entities get smarter, they are better able to interpret the text and will converge towards a single interpretation

Interpretation of something like 'what is a chair'? Sure, or at least as much as necessary. But interpretation of 'what is good? what should we do?' or even 'what is good for all humans?' I don't see it. I was specifically referring to 'is this entity satisfying its utility function or changing it'. And more generally, if an agent's goals are emergent properties of its actions, an agent might just be "recognizing its true goals" when it changes its goals, so it's hard to make statements about goal coherence. Am I recognizing my true goals when I abstain from eating sugar, or...

On a broader note, is the reason you have a higher p(doom) than Eliezer that you think weak goal coherence will make alignment harder (e.g. we can't reasonably do C.E.V)?

I think the difficulty of understanding exactly what we're aligning to is another (related) problem. Is it aligned if we're all in pleasure boxes or experience machines, or just given a Butlerian Jihad'd isolated galaxy to fuck around in ourselves while the AI conquers the observable universe? Especially if nobody really has a good answer right now for whether those are good or not? I'm aware rationalists have thought about this a lot, but I still think it's basically being dodged because it's too hard.

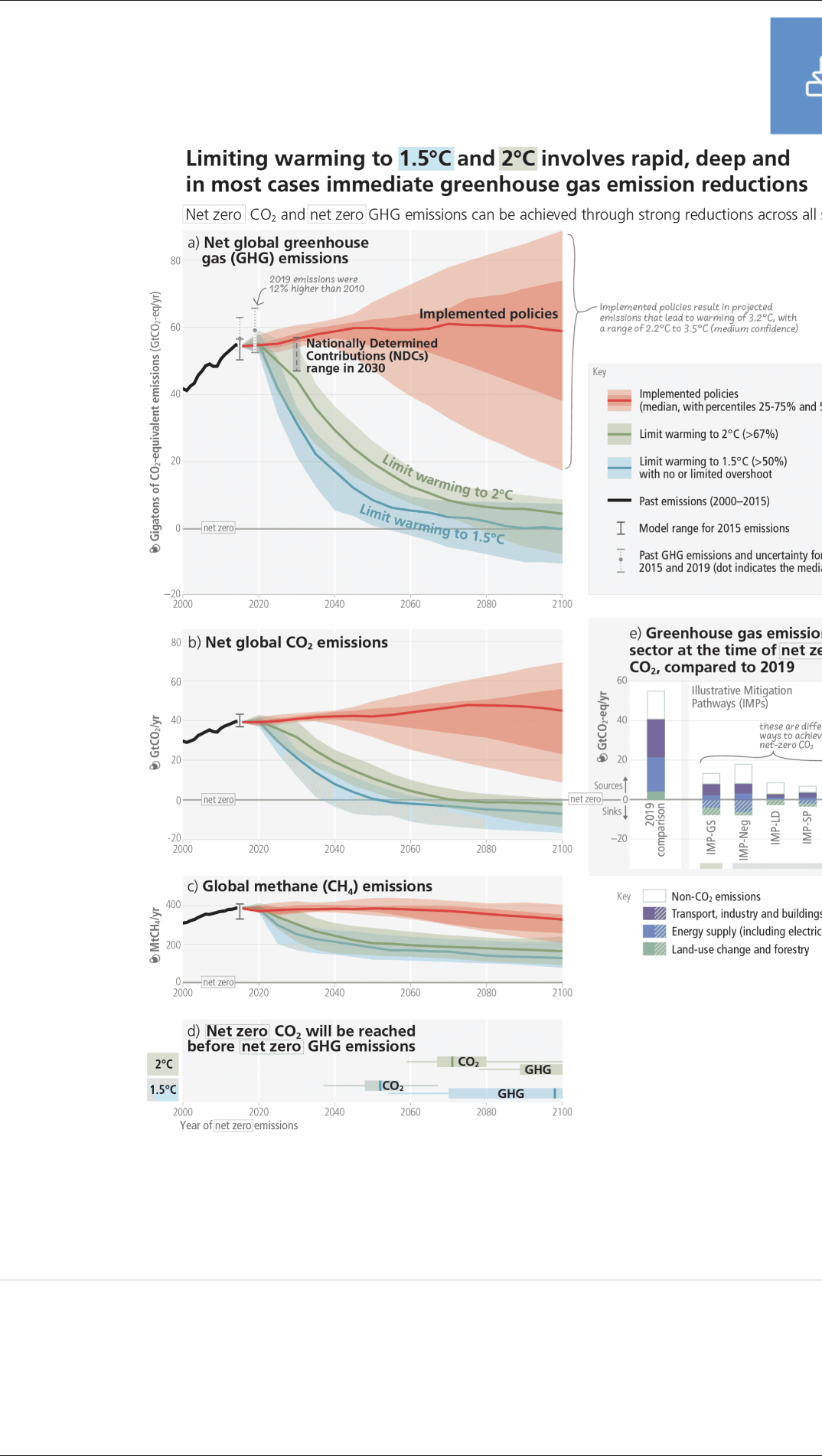

@jacksonpolack The IPCC report says that only coordinated and severe cuts in GHG emissions now will keep temperature changes below 2C. Right now global GHG emissions are going up, not down, and India is just starting to industrialize in earnest, with new coal-fired power plants opening every week. 3C seems likely to me. See the graph from the IPCC report.

At 3C, sea level rise will inundate land where about 12% of the current population of the earth lives, among other severe impacts. 3% isn't likely, but it certainly is possible, and it seems to me that something between 2 and 3 is very likely.

It’s possible that all these very smart and well-funded people will come up with solutions. They have made some progress. Do you believe that they will make enough progress to significantly reduce GHG emissions in the next 20 years, or perhaps find mitigation tools and technologies that will ameliorate the impacts of rising sea levels, etc.?

this is a preliminary response

I'm not quite sure what your '3C likely ... 3C -> 12% ... 3% unlikely, 2-3 possible' specifically means, could you rephrase?

I was under the impression the most likely climate scenarios involved sea level rise displacing a few million people over the next, e.g., four decades. That's unfortunate, but it isn't world-changing: apparently 50 million people are currently displaced by conflicts over the past decades.

I tried looking at the figures and reading parts of some IPCC reports and ... definitely learned some things, but didn't quite answer the question of 'how many people they expect to be displaced over the next 50/100 years'. I'll look more later!

If >10% of the population were displaced from their current residences within 50 years, even with like p=.1-.2, that would be worse! 10% is 800M, 1% is 80M. On the other hand, if that happened in 300 years under current projections, I don't care as much because tech advances in that time period.

@jacksonpolack Can you see the graph that I sent? It displays what has to happen to carbon emissions in the next 80 years for the world to warm 1.5C or 2C, and what will happen with current policies. It says that we need to drop carbon emissions by 50% by 2040 to achieve 2C, and then by half again in the next 20 years. This would require a drastic change from our current trajectory.
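To put "halve by 2040, then halve again" in per-year terms, here is a small arithmetic sketch. It assumes a constant percentage cut every year and a 2020 starting point (so "by 2040" means 20 years); both are assumptions, not figures from the IPCC graph. The helper name `required_annual_decline` is hypothetical.

```python
def required_annual_decline(fraction_remaining: float, years: float) -> float:
    """Constant yearly fractional cut needed for emissions to fall to
    `fraction_remaining` of the starting level after `years` years."""
    return 1 - fraction_remaining ** (1 / years)

# Assumption: counting from 2020, so "half by 2040" is a 20-year horizon.
first_leg = required_annual_decline(0.5, 20)

# Two successive halvings over 40 years leave 25% of the starting level;
# at a constant rate this is the same yearly cut as the first leg.
whole_path = required_annual_decline(0.25, 40)

# Starting later (e.g. 17 years before 2040) demands a steeper yearly cut.
later_start = required_annual_decline(0.5, 17)

print(f"{first_leg:.2%} per year")   # 3.41% per year
print(f"{whole_path:.2%} per year")  # 3.41% per year
print(f"{later_start:.2%} per year if starting 17 years out")
```

Roughly 3.4% per year, sustained for decades, is the kind of "drastic change" being described, given that global emissions were still rising at the time of writing.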

Here is a graph of global carbon emissions since 1940:

https://www.statista.com/statistics/276629/global-co2-emissions/

As you can see, the trajectory is generally still upward. It needs to drop in half by 2040 for us to "only" have 2C of global warming. I am generally pessimistic about this happening, mostly because of the increase in carbon usage in industrializing countries, especially India.

Here is India's per capita carbon usage:

https://data.worldbank.org/indicator/EN.ATM.CO2E.PC?locations=IN

Compare to China, which industrialized in the last 30 years:

https://data.worldbank.org/indicator/EN.ATM.CO2E.PC?locations=CN

As you can see, India is on a similar trajectory as China was in the past but is currently emitting 1/4 of the carbon per capita as China does today. Incidentally, China emits 1/3 of the carbon per capita as the United States, but is investing heavily in reducing carbon emissions and seems to have leveled off. But it's entirely possible that China's per capita emissions will eventually equal the United States (why not?) and this would be even worse overall.

It's not all bad. Both US and Chinese carbon usage have flattened recently, and usage has gone down in Europe. Solar power has gotten cheaper much more quickly than anyone had hoped for.

But I think the most likely trajectory is not a 50% reduction by 2040 but a much smaller reduction or perhaps even flat or an increase. After all, carbon emissions rose last year to an all time high, so we aren't even pointed in the right direction. And there is India, and Africa probably right behind them.

I think The Economist is a pretty reliable publication and they too think that 2C is baked in.

This is an article that describes the possibility of a 3C world and how grim it would be.

You can read it if you have a subscription but I will quote at length in case you or other readers do not.

The people who negotiated the Paris agreement were fully aware of this contradiction. They expected, or hoped, that countries would make new and more ambitious pledges as technology progressed, as confidence that they were all really on board built up and as international co-ordination improved. There is evidence that this is happening. Revised pledges formally submitted to the UN over the past 12 months in the run-up to the COP26 conference to be held in November have knocked CAT’s estimate down a bit. If all government promises and targets are met, warming could be kept down to 2.4°C. Including targets that have been publicly announced but not yet formally entered into the Paris agreement’s ledgers, such as America’s net-zero-by-2050 pledge and China’s promise to be carbon-neutral by 2060, brings the number down to a tantalising 2.0°C.

That sounds promising. But the figure comes with a very big caveat and with large uncertainties.

The caveat is that this estimate includes policies announced but not enacted. A world which follows the policies that are actually in place right now would end up at 2.9°C, according to CAT (the UN Environment Programme, which tracks the gap between actual emissions and those that would deliver Paris, provides a somewhat higher estimate). Almost everyone expects or hopes that policies will tighten up at least somewhat. But any reasonable assessment of the future has to look at what may happen if they do not.

I wonder what the character limit here is. Some more bad news. These sorts of predictions are always very hard to make, so I would not put too much stock in the exact probabilities, but almost all experts think that there is a possibility of 3C by 2100.

This uncertainty gives the probabilistic estimates made by CAT, and other groups, large error bars. The calculations of peak warming if existing targets are met and promises kept give a 68% chance of a peak temperature between 1.9°C and 3.0°C (see chart 1). In the America-at-net-zero-by-2050 scenario the 68% probability range runs from 1.6°C to 2.6°C. This fits with modelling from elsewhere. According to calculations by Joeri Rogelj and his colleagues at Imperial College London, even emissions scenarios which provide a two-in-three chance of staying below 2.0°C also include a small chance of 2.5-3.0°C of warming: less than one-in-ten, but possibly more than one-in-20.

I talked about sea level rise because that is the easiest to explain, but 3C of warming would make parts of the world uninhabitable without air conditioning.

Except at 100% relative humidity, the wet-bulb temperature is always lower than the temperature proper; dry air means that 54°C in Death Valley equates to a wet-bulb temperature in the low- to mid-20s. Wet-bulb temperatures in the 30s are rare. And that is good. Once the wet-bulb temperature reaches 35°C it is barely possible to cool down, especially if exercising. Above that people start to cook.

Wet-bulb temperatures approaching or exceeding 35°C have been recorded, very occasionally, near the India-Pakistan border and around the Persian Gulf and the Gulf of Mexico. But not all such instances are reported. A re-analysis of weather-station data published in 2020 showed that such extreme humid heat actually occurs more often than is recorded, mostly in very scarcely populated parts of the tropics. The study also found that its incidence had doubled since 1979.

Richard Betts, a climatologist in Britain’s Met Office who has led several surveys of the impacts of high-end global warming, says that beyond 2°C small but densely populated regions of the Indian subcontinent start to be at risk of lethal and near-lethal wet-bulb temperatures. Beyond 2.5°C, he says, places in “pretty much all of the tropics start to see these levels of extreme heat stress for many days, weeks or even a few months per year.”
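The quoted passage relies on wet-bulb temperature without showing how humidity drives it. As a rough illustration only, here is a minimal Python sketch of Roland Stull's 2011 empirical approximation, which the article does not mention. It assumes standard sea-level pressure and is only fitted for roughly 5 to 99% relative humidity and -20 to 50 °C, so it cannot reproduce the 54 °C Death Valley figure. The function name `wet_bulb_stull` is made up for this example.

```python
import math


def wet_bulb_stull(temp_c: float, rh_percent: float) -> float:
    """Approximate wet-bulb temperature (deg C) using Stull's 2011 fit.

    Empirical formula; assumes standard sea-level pressure and is only
    fitted for about 5-99% relative humidity and -20 to 50 deg C.
    """
    if not (5.0 <= rh_percent <= 99.0):
        raise ValueError("fit only valid for 5-99% relative humidity")
    if not (-20.0 <= temp_c <= 50.0):
        raise ValueError("fit only valid for -20 to 50 deg C")
    t, rh = temp_c, rh_percent
    return (
        t * math.atan(0.151977 * math.sqrt(rh + 8.313659))
        + math.atan(t + rh)
        - math.atan(rh - 1.676331)
        + 0.00391838 * rh ** 1.5 * math.atan(0.023101 * rh)
        - 4.686035
    )


if __name__ == "__main__":
    # Same air temperature, rising humidity: the wet-bulb value climbs toward 35 C.
    for rh in (10, 40, 70, 95):
        print(f"35 C at {rh:>2}% RH -> wet-bulb ~ {wet_bulb_stull(35.0, rh):.1f} C")
```

As a sanity check, 20 °C at 50% relative humidity comes out at about 13.7 °C. Holding the air at 35 °C and raising the humidity pushes the wet-bulb value up toward the air temperature, which is why humid heat is so much more dangerous than dry heat.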

Anyway, enough gloom and doom. Solar power is now cheaper than gas. Perhaps India will go straight to solar. Fusion is a possibility. People are trying to learn how to collect solar power in space and beam it to Earth as microwaves, just like in my favorite sci-fi books when I was a kid. With enough cheap energy, almost anything is possible. Carbon-removal technology could be invented that will decarbonize the atmosphere, and great strides have been made here. Maybe humanity will get desperate and start blasting the atmosphere with particles to dim the sun, dumping copper in the ocean to promote algae growth, or trying any number of other harebrained schemes.

But the chance of 3C by 2100 is definitely there. It would be pretty catastrophic. We would adapt over the generations, but what are now coastal cities would be underwater, and currently very hot areas like the tropics, parts of India, and the Gulf Coast would be uninhabitable. That seems really bad to me. There is even a small chance that it could get hotter than that.

More preliminary questions. I'd like to understand, broadly, what the scientific consensus is on the probability distribution of risks to people in the near future. You mention 3C is possible, but can I get sources for the specific bad things 3C would cause?

Again, not an expert! I read elsewhere that recent reports showed a significant narrowing of expected outcomes: high-carbon scenarios like RCP8.5 aren't considered plausible anymore. Does that affect how likely 3C is?

Your Economist article goes into detail on worse weather and higher temperatures. While that's unfortunate, neither seems to be on the scale of 'displacing tens of millions'. Well, the article says

As a result, some modelling suggests that at 3°C more than a quarter of the world’s population would be exposed to extreme drought conditions for at least one month a year

And, eh. I trust IPCC reports, but I do not trust an unsourced 'some modeling'. There are plenty of bad individual climate papers or models, and "extreme drought conditions" has a technical meaning that I'm not aware of.

And then

And even when lives can be saved, places cannot. Coastal cities that hundreds of millions now call home would be changed utterly if they persist at all.

That's certainly a statement, but I have no idea what it's referring to.

Plus, if this is happening in a hundred years, we'll have had a long time to technologically adapt.

I'll read more later!

@jacksonpolack Scientists in general don't have a consensus on what human behavior is going to be from here on. They can say "if we adopt a very extreme form of GHG reduction now and halve our emissions by 2040, we have a good chance of keeping global warming under 2C." They build models, which honestly aren't that great at making predictions, and then use them to run simulations.

It’s important to remember that these scenarios are not exact forecasts of the future. The scientists who created them are not making judgments about which ones are most likely to come to pass. “We do not consider the degree of realism of any one scenario,” said Amanda Maycock, an associate professor of climate dynamics at the University of Leeds and an author of the future climate scenarios chapter, in an email. SSPs are meant to illustrate the mechanisms of climate change, factoring in human decisions that will determine the scale of the problem.

2100 isn't 100 years from now; it is 77. We are already about 1/4 of the way there.
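The arithmetic behind those two figures, taking the current year as 2023 (which is what the 77 implies) and counting the century from 2000:

```latex
2100 - 2023 = 77, \qquad \frac{2023 - 2000}{2100 - 2000} = 0.23 \approx \frac{1}{4}
```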

Here is one analysis of sea levels under various scenarios, from Climate Central. They are pro-science but also lobby for more action on climate change.

https://picturing.climatecentral.org/


I am not predicting 3C; in fact, I am not predicting anything at all, but I do think the tail risks are higher than most people realize. I think we will most likely muddle along, mostly with managed retreat, technological changes, and more air conditioning, with the poorest forced to suffer the most. Lots of climate refugees. The IPCC projects tens to hundreds of millions displaced. Hopefully, science and technology will save the day.
