Will the project "Opinion Dynamics with AI" receive any funding from the Clearer Thinking Regranting program run by ClearerThinking.org?

Remember, betting in this market is not the only way you can have a shot at winning part of the $13,000 in cash prizes! As explained here, you can also win money by sharing information or arguments that change our mind about which projects to fund or how much to fund them. If you have an argument or public information for or against this project, share it as a comment below. If you have private information or information that has the potential to harm anyone, please send it to clearerthinkingregrants@gmail.com instead.

Below, you can find some selected quotes from the public copy of the application. The text beneath each heading was written by the applicant. Alternatively, you can click here to see the entire public portion of their application.

Why the applicant thinks we should fund this project

The ability of AI systems to engage in goal-directed behavior is outpacing our ability to understand them. In particular, sufficiently advanced AIs with an internet connection could easily be used to influence e.g., public opinion via online discourse, as is already being done by humans in covert operations by political entities.

Influence on social networks has an important bottleneck: the main medium for information transmission to human recipients is language. Argumentation in language often exploits human cognitive biases. Much effort has been dedicated to develop ways of inoculating people from such biases. However, even after attenuating the effect of cognitive biases, argumentation remains a powerful tool for manipulation. The main threat to rational interpreters is evidence-based but manipulative argumentation, e.g., by selective fact picking, feigning uncertainty or abusing vagueness or ambiguity.

It is therefore of crucial importance at this stage to understand how argumentation can be used by malicious AIs to influence opinions, even those of pure rational interpreters, in a social network, as well as developing techniques to counteract such influence. Social networks must be made robust against influence by malicious argumentative AIs before these AIs can be applied effectively and at scale in a disruptive way.

Here's the mechanism by which the applicant expects their project will achieve positive outcomes.

ODyn-AI will proceed in stages. We will start by developing bespoke models of argumentative language use in a fragment of English. These models will be based on previous work by our group on language use in Bayesian reasoners (see below). Second, we will validate this model with data from behavioral experiments in humans. Our objective is to scale this initial model in two directions: first, from a single agent to a large network of agents, and second, from a hand-specified fragment of English to open-ended language production. We aim to achieve both of these objectives in the third step by fine-tuning Large Language Models to approximate our initial Bayesian agents. These LMM arguers are not yet malicious, in that they simply argue for what they believe. The fourth step will be to develop a malicious AI agent that sees the preferences / beliefs of all the other agents and selects the utterances that most (e.g., on average) shift opinions in the desired direction. The fifth and last section of ODyn-AI will focus on improving the robustness of the social network to these malicious AI arguers. We will train an AI to distinguish between malicious and non malicious arguers, and we develop strategies to limit the effects of malicious arguers on the whole network.

Our plan also includes dissemination of the results. We will use freely available material, in particular scientific papers in gold-standard open-access journals, blogs and professionally produced, short explanation videos, to summarize the key findings of this work.

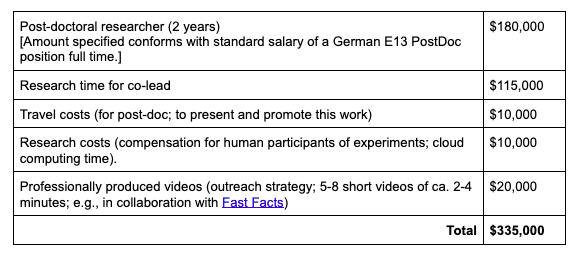

How much funding are they requesting?

$335,000

What would they do with the amount just specified?

Here you can review the entire public portion of the application (which contains a lot more information about the applicant and their project):

https://docs.google.com/document/d/1yyB8FchhlGnDpJDgtuuuS5UVqg2NtFWJ8kZUMTj83Ls

Sep 20, 3:45pm:

Close date updated to 2022-10-01 2:59 am

🏅 Top traders

| # | Trader | Total profit |

|---|---|---|

| 1 | Ṁ403 | |

| 2 | Ṁ257 | |

| 3 | Ṁ205 | |

| 4 | Ṁ102 | |

| 5 | Ṁ71 |

Just like we cannot explain how the human body works and how our brain works, there is a possibility that we won't be able to fathom how the AI makes decisions. This is central to the issue of losing control to AI and in some ways the issue of concentration of power. Understanding how AI would use arguments to influence opinions could be one of the ways we could understand it. I don't look at this finding just to be helpful for social networks, rather a finding that aligns with out major priorities of AI alignment and control theory. And my vote is yes, because I believe that such curiosities must be funded in order to have a breakthrough on understanding how to control AI in the future.

As Stuart Russell mentions on his book Human Compatible that AI should follow the following principles:

1. The machine’s only objective is to maximise the realisation of human preferences.

2. The machine is initially uncertain about what those preferences are.

3. The ultimate source of information about human preferences is human behaviour.

But, we are not yet sure how AI could use arguments to influence humans on changing their realisation of preferences in the first place, which is a central assumption to that above principles (That human preferences will not be influenced by AI)

So, heading in this direction no matter how narrow the research is a addition to the body of knowledge.

I’d be willing to bet this results in inferior results (and less publicity inside or outside the AI community) than a few hours of Yannic hacking while streaming:

Vet this with anyone who knows anything about machine learning and they’ll tell you the approach is nonsensical.

Whatever experience the team has in “use of language” this not only intends to produce nothing (a risk report about people making “AI-driven propaganda”?) but the methods proposed are facially wrong.

Bizarre mix of linguistics, hand-wavy appeals to AI, and pretending to understand LLMs or RL and the like, but not in any way equipped to do what they propose—leaving aside that even if achieved, it will end up in a file drawer and aims to solve zero issues actually faced by social media companies, AI research departments, or people actually working in the area.

@Gigacasting I'm not convinced. I'm more excited about the step of empirically studying LLM arguers than developing models of Bayesian reasoning, but reading through the proposal it seems like the team is broadly qualified to execute the work and the proposal isn't obviously bad.

You say that if achieved it won't have much of an effect but I'm not sure this is true. The impact of research is heavy-tailed and there seems like a non-negligible chance this work could lead to very useful insights regarding pushing online discourse in a good direction, even if the modal outcome isn't useful.

One thing I would be curious about is how the team understand their work in relation to https://truthful.ai/

(FWIW, I've published some stuff in NLP https://scholar.google.com/citations?user=Q33DXbEAAAAJ&hl=en and worked on applied NLP for about a year, https://elicit.org/)

^ legit ML background

There are ongoing major competitions in Deepfake detection, usually with adversarial models (some teams develop, others detect)

None of their nonsense about strength of arguments or the linguistics and Bayesian this and that or “agents” or “neural language” is particularly coherent and all indicates they probably have zero ability to even attempt basic GANs or adversarial training — the fact the paper is so focused on word-salad is a huge red flag.

If someone wanted to figure out how well LLMs can be trained to fool people and persuade, and quantify current and future capabilities (a learning curve across parameter sizes/years) and work on strategies to mitigate this, zero of them would have this background:

The FTX competitions in Trojan detection are an example of money well spent, but there’s a reason no important machine learning paper has come from academic lab in half a decade—and every reason to think the grant writers are not even in the 10,000 most competent people to work on what they propose.

@Gigacasting Thanks for elaborating!

Here are some things I agree with you about:

I'm generally pretty pessimistic about the direct impact of work in academic labs compared to more practical work in industry or even perhaps by reasonable people doing independent applied projects. A lot of academics are still way too skeptical of LLMs and deep learning in general (in the vein of Gary Marcus). I'd probably be more excited about the proposal if it was less theoretical and more applied.

The Center for AI Safety (CAIS) style competitions such as Trojan Detection have a higher expected value per dollar spent than this proposal.

Here are some things I'm open to having my mind changed on, but think I disagree with you about:

The more theoretical Bayesian, linguistics, pragmatics etc. stuff in the proposal isn't word salad and while I'm not super excited about more of it, it doesn't provide strong evidence that the proposal authors won't be able to do more applied work as well (and pushing the proposal authors toward more applied work could potentially be good).

I'm not sure if they're in the top 10,000 people in the world to execute the empirical portion of their plan, but they're probably in the top 100,000.

The fact that CAIS competitions have higher expected value per dollar spent doesn't in and of itself mean we shouldn't also fund this proposal, as the funding bar may be significantly lower than CAIS. This proposal still feels near the borderline to me.

Also:

there’s a reason no important machine learning paper has come from academic lab in half a decade

I'm guessing you are intentionally being hyperbolic? If not I think I can find some examples of at least a few obviously important papers from academic labs, even though I agree with you directionally.

“I'm not sure if they're in the top 10,000 people in the world to execute the empirical portion of their plan, but they're probably in the top 100,000.”

I laughed.

—-

I’m sure they’re very good at word-celing, but their mention of irrelevant garbage is a sign the money is just being burned. They are pretending to be capable of ML, but all the methods mentioned show they aren’t.

I’ll pro bono set up a contest to do the “project” — we will use a Twitter dataset and see if teams can create fake tweets and if others can detect them, and use memory/compute tiers to establish a scaling curve to predict the year in which offense overtakes defense, and tips to better detect bots.

We’ll sell it to Elon for $5M which the foundation can keep.

(Obviously being hyperbolic about academics, but it’s this detachment from results and grant-grift process that leads to them being so useless—at AI specifically.)

@Gigacasting There aren’t even 10,000 real Bayesian statisticians in the world. There probably won’t be for at least another 20 years. I haven’t read the full proposal yet, but the FDA will only accept clinical trials that use a couple specific Bayesian clinical trial designs and they require the statisticians who developed the methodology to sign off on the final design. I was fortunate to work with Yuan Ji of University of Chicago for awhile who is arguably the best Bayesian statistician in medicine. https://www.cytel.com/news/cytel-acquires-laiya-consulting?hs_amp=true