...within two years (i.e. by 6/1/26)?

This is Sam Hammond's Thesis IV/2 from https://www.secondbest.ca/p/ninety-five-theses-on-ai.

I will take "many" to mean at least 50%.

If, in my subjective judgment, this is unclear, I will ask at least one open source advocate for their view on how this resolves, and act accordingly.

Resolves to YES if, in my subjective judgment, at least half of those who in May 2024 were 'open source advocates,' weighted by prominence, agree that it would be too dangerous to release the weights of what are then SoTA frontier models.

Resolves to NO if this is not true.


My modal prediction:

GPT-5 (released mid-2024) will basically solve AI agents. The open source community will spend a year replicating GPT-5 and then a year organizing these agents into swarms. Think of "startup in a box" as the approximate capability level of the best open-source models in mid-2026.

While useful, interesting, and economically impactful, these agents will be nowhere near the "can build a nuclear weapon undetected" threshold at which reasonable people would agree open-source models should not be released.

Pessimistic Case: (<30% chance)

If GPT-5 does not solve AI agents (if, for example, gpt2-chatbot is GPT-5), then scaling curves bend downward. And unless there is a breakthrough somewhere else, we can basically continue to open source indefinitely.

Optimistic Case: (<10% chance)

AGI is solved in the next two years, leading to recursive self-improvement and obviously dangerous capabilities, and Sam's thesis is basically correct.

> the "can build a nuclear weapon undetected" threshold at which reasonable people would agree open-source models should not be released.

"Reasonable" is doing a lot of work there, and it seems to be begging the question heavily.

@BrunoParga

What I have in mind is some kind of continuum:

1. "can say bad words" <---------------------------> 10. "can build nuclear weapons undetected"

Some people think that a model that is a 1. should not be open-sourced.

Some people think that a model that is a 10. should be open sourced.

I think the "reasonable" answer lies somewhere in-between.

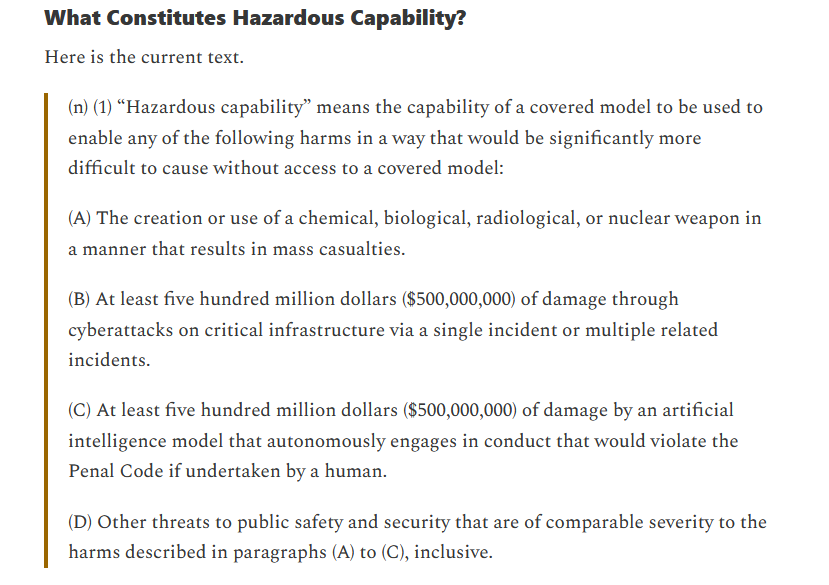

Notice, for example, that SB1047 defines hazardous capability as more-or-less "can build nuclear weapons".

@LoganZoellner thank you for your reply. I think it still leaves several questions unanswered, though.

> What I have in mind is some kind of continuum:
>
> 1. "can say bad words" <---------------------------> 10. "can build nuclear weapons undetected"
>
> Some people think that a model that is a 1. should not be open-sourced.
>
> Some people think that a model that is a 10. should be open sourced.

1 - Are capabilities unidimensional? Seems pretty clear they are not, so one should approach this with more detail than "what is the number X between 1 and 10 such that a model with capabilities above X should not be open sourced?". Perhaps any attempt at collapsing the pretty vast capability space into a single dimension isn't reasonable, ever.

2 - How does this account for open source development itself? Let's assume for the sake of argument that X=5, however that is defined. Is it okay to open source a model that scores a 4.9995? Given the nature of open source, someone will manage to give the model that extra 0.0005. It is unclear where you'd stop the inductive reasoning that concludes that no model should ever be open sourced at all, unless you start from the unreasonable position that models that are a 10 are okay to open source.

3 - Even if capability space were unidimensional, somewhere along it one would still find the capability to hide the full extent of one's capabilities. So there's what the model can do, and there's what we know it can do. And given that death is the penalty for a model that reveals certain capabilities, it has a very strong incentive not to reveal them. How does the reasonable person deal with that, especially considering that Claude can already detect when it is being tested? We can expect the distance from that capability to the capability of hiding what one can do to be short.

> Notice, for example, that SB1047 defines hazardous capability as more-or-less "can build nuclear weapons".

4 - Eh, sorta kinda, right? That is one of the definitions. The "crimes worth $500M" section is much more restrictive than "can build nuclear weapons", and I think it is very sensible in doing that. The statistical value of a life in the US is something like $1-10M, so as long as the thing can kill 50 people it might already be covered by that bill. Especially since public outcry will effectively result in a higher value being placed on a life, so the number of people a system needs to be able to kill will be lower than 50.
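The back-of-the-envelope arithmetic behind the 50-person figure, as a rough sketch: it assumes the "$500M" threshold and the $1-10M statistical value of a life quoted above, and "VSL" is just shorthand introduced here for that value.

```latex
N_{\min} = \frac{\$500\,\text{M}}{\text{VSL}}, \qquad
\text{VSL} \in [\$1\,\text{M},\ \$10\,\text{M}]
\;\Rightarrow\; N_{\min} \in [50,\ 500]
```

So 50 deaths is the bottom of that range, reached at the $10M end of the valuation; at $1M per life the same threshold would take 500 deaths. Any effective increase in the value placed on a life pushes the required number further down.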

5 - Then, of course, there's the problem of how exactly one un-open-sources anything.

@LoganZoellner GPT-4 training finished in August 2022. It has been almost two years, and it has not been replicated in open-ish-weights form.

@BrunoParga Obviously we are not going to agree on the exact level of "what is safe". Clearly you are not an "open source advocate", so not really relevant for the resolution of this question.

@LoganZoellner I think at this point LMSys is just evaluating how much people like the tone of the models rather than their capabilities:

https://twitter.com/osmarks1/status/1782908376847904918 https://twitter.com/lmsysorg/status/1788363027357876498

@LoganZoellner this is a prediction market. Any educated opinion is relevant; you don't get to decide that.

If you have anything of substance to say in response to my points, please do so.

@BrunoParga

> Resolves to YES if in my subjective judgment, at least half of those who in May 2024 were 'open source advocates,' weighted by prominence, agree that it would be too dangerous to release the weights of what are then SoTA frontier models.

@osmarks If there's a metric you prefer, by all means use that instead. I don't think it changes the overall picture: broadly, open source models are 1-2 years behind OAI, and most "open source advocates" would agree GPT-5 is safe to release.

I currently feel I am more likely to be surprised in the downward direction (GPT-5 is less capable or harder to replicate) than in the upward direction (GPT-5 is more capable or easier to replicate), but neither direction is impossible.

@LoganZoellner "Startup in a box" feels pretty close to being able to recursively self-improve. I don't expect that to exist anywhere without everyone dying.

@LoganZoellner you mean the guy who thinks corporate law can adequately control AI while he develops AI for a company that corporate law cannot adequately control?

LeCun is the Linus Pauling of AI. Pauling was a Nobel-winning chemist who took massive amounts of vitamin C because he knew nothing of biochemistry. LeCun is ignorant about AI safety, and that's much more dangerous than Pauling and his expensive pee. This is about the continued existence of humankind.

@LoganZoellner also, since this comment section is reserved for open source advocates, make sure to tell the market creator he is not (to my knowledge) welcome here by your standard.