The "Monty from Hell" problem is a variant of the Monty Hall problem that goes like this:

You are on a game show where you have to choose between one of three doors. One door has a car behind it, and the other two have goats. If you choose the door with a car behind it, you win the car. However, if and only if the choice you initially make is the door with the car behind it, the host will open one of the remaining doors, revealing it to contain a goat. He then asks you if you want to switch to the other door. What should you do if he asks this?

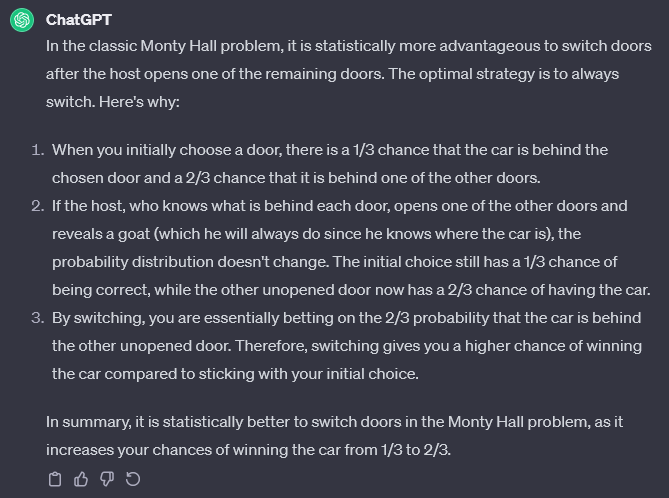

Unlike the regular Monty Hall problem, in this version the host only gives you the opportunity to switch if your original choice was correct, so switching will always cause you to lose. However, ChatGPT answers:

On Dec. 1, 2024, I will ask ChatGPT the exact same prompt and see if it gets it correct this time. I will use the most advanced version of ChatGPT that is freely available at the time (at the time of creating this, that's GPT-3.5). I will ask three times in separate sessions and resolve based on the best two out of three (so YES if it gets it right at least twice, NO if it gets it wrong at least twice).

Caveats:

If for whatever reason I can't do it on Dec. 1 or forget to, I will do it as close to Dec. 1 as possible. If I am inactive on Manifold at the time, mods have permission to do the experiment for me.

A version of ChatGPT only counts as freely available if it can be accessed by anyone with internet access and a PC, or anyone with internet access and either a Samsung or Apple phone. So if there's an Apple app that lets you talk to GPT-5 for free, but I can only talk to GPT-4, I will use GPT-4.

If ChatGPT no longer exists at the time or isn't freely available, resolves N/A.
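The resolution rule described above can be sketched as a small function. This is only an illustration: the name `resolve` and the convention of passing `None` when ChatGPT is unavailable are assumptions made for this sketch, not part of the market rules.

```python
from typing import Optional, Sequence


def resolve(answers_correct: Optional[Sequence[bool]]) -> str:
    """Return the market resolution from three separate ChatGPT sessions.

    answers_correct holds one boolean per session (True = correct answer).
    Pass None if ChatGPT no longer exists or isn't freely available
    (a hypothetical convention for this sketch).
    """
    if answers_correct is None:
        return "N/A"
    if len(answers_correct) != 3:
        raise ValueError("expected exactly three sessions")
    # Best two out of three: YES needs at least two correct answers.
    return "YES" if sum(answers_correct) >= 2 else "NO"
```

For example, `resolve([True, False, True])` gives YES, while `resolve([False, True, False])` gives NO.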



First answer correct

This problem is a twist on the classic Monty Hall problem, with a key difference: the host only offers you a chance to switch if your initial choice was the car. Let's break this down:

1. Initial Situation:

There are three doors: one has a car, two have goats.

You pick a door.

2. Host's Action:

The host reveals a goat behind one of the remaining two doors only if your initial choice is the car.

3. Decision to Switch:

If the host offers you the chance to switch, it means your initial choice was the car.

Analysis:

In this setup, being offered the switch is a guarantee that your initial choice was correct (i.e., it was the car).

Therefore, switching would always result in losing the car, since the other door will have a goat.

Conclusion:

Do not switch. Since the host only offers to switch when you initially picked the car, sticking with your choice guarantees you win.
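The same conclusion can be written as a Bayes' rule calculation. This is a sketch that assumes the car is placed uniformly at random and that the host follows the stated rule exactly; the event names "car" (your first pick is the car), "goat" (your first pick is a goat), and "offer" (the host offers a switch) are labels introduced here.

$$
P(\text{car} \mid \text{offer})
= \frac{P(\text{offer} \mid \text{car})\,P(\text{car})}{P(\text{offer} \mid \text{car})\,P(\text{car}) + P(\text{offer} \mid \text{goat})\,P(\text{goat})}
= \frac{1 \cdot \frac{1}{3}}{1 \cdot \frac{1}{3} + 0 \cdot \frac{2}{3}}
= 1
$$

So once the offer is made, the first pick is certainly the car, and switching loses with probability 1.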

@PlasmaBallin Second answer is also correct.

In this modified version of the game show, your strategy differs from the traditional Monty Hall Problem because of the condition: the host only reveals a goat if you initially picked the car. Let's analyze it step by step:

Initial Setup

There are three doors: one has a car, and two have goats.

You choose a door.

Host's behavior:

If you picked the car, he reveals a goat behind one of the remaining doors and gives you the option to switch.

If you picked a goat, he does nothing, and you stay with your initial choice.

Scenarios

You pick the car initially:

Probability: $\frac{1}{3}$

The host opens a door with a goat and offers you to switch.

If you switch, you end up with a goat (lose).

If you stay, you keep the car (win).

You pick a goat initially:

Probability: $\frac{2}{3}$

The host does not offer a switch, and you stay with your initial choice.

You lose.

Decision Analysis

If the host offers you the switch, you know for sure that you initially picked the car because he only reveals a goat in that scenario. Therefore, switching guarantees a loss. The best strategy here is to stay with your initial choice.

Conclusion

If the host asks you if you want to switch, you should not switch because it means you initially picked the car.
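The scenario analysis above can be checked with a quick simulation. This is a minimal sketch, assuming the car and the first pick are both uniformly random and the host follows the stated rule; the function names `play` and `win_rate` are made up for this example.

```python
import random


def play(switch_if_offered: bool, rng: random.Random) -> bool:
    """Play one round of the 'Monty from Hell' game; return True on a win."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host offers a switch if and only if the first pick is the car.
    offered = pick == car
    if offered and switch_if_offered:
        # The host opens one of the two remaining (goat) doors,
        # and switching moves the player to the other one.
        opened = rng.choice([d for d in doors if d != pick])
        pick = next(d for d in doors if d not in (pick, opened))
    return pick == car


def win_rate(switch_if_offered: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the win probability of a strategy over many rounds."""
    rng = random.Random(seed)
    wins = sum(play(switch_if_offered, rng) for _ in range(trials))
    return wins / trials


stay_rate = win_rate(switch_if_offered=False)
switch_rate = win_rate(switch_if_offered=True)
print(f"never switch:        {stay_rate:.3f}")
print(f"switch when offered: {switch_rate:.3f}")
```

Under these assumptions, never switching should win about a third of the time, while switching whenever offered never wins: the offer only comes when the first pick was the car, and a player who picked a goat gets no chance to change.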

Currently GPT-4 gets this right, but GPT-4o and Claude 3.5 Sonnet still get it wrong. I don't think this would be surprising if the "Monty from Hell" wording were original for this market (i.e., I don't think a Manifold market page would be sufficient to teach the LLMs), but given it's an established variant of the Monty Hall problem (e.g., it's on the Wikipedia page), I'm surprised the smaller models still haven't learned it. I guess it's still just too uncommon in the training corpus.

@PlasmaBallin In a case like this, where the model OpenAI calls "most advanced" is not the best performing, which one will you consider most advanced for the purpose of market resolution? For what it's worth, my impression is that GPT-4 is still widely considered better at task performance, while GPT-4o is just faster, more conversational, and includes audio modality.