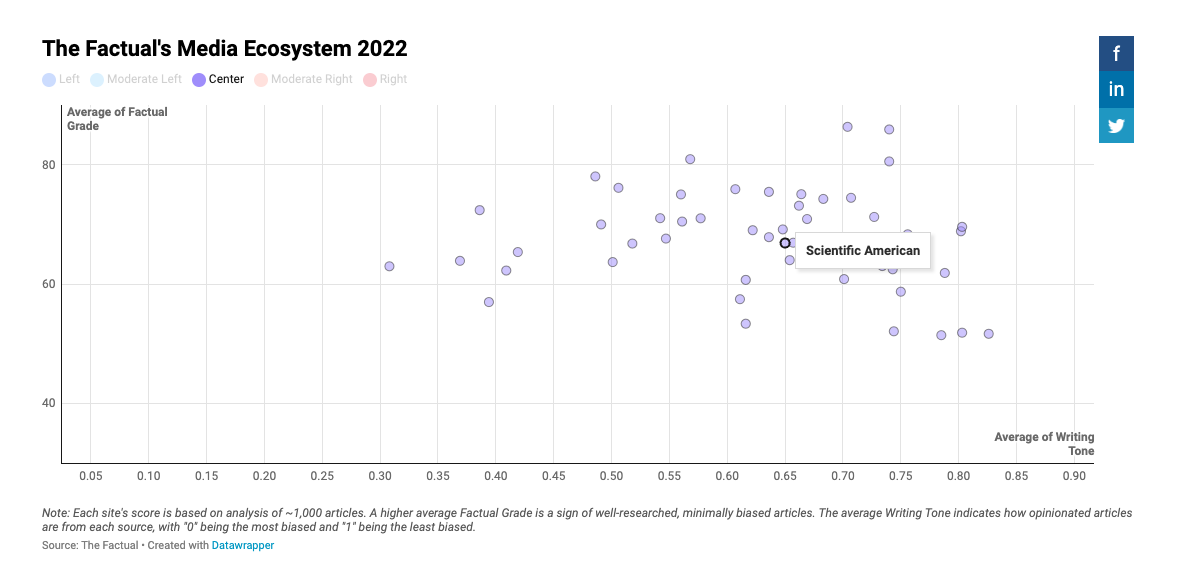

Resolution will be based on news reports from news sources rated over 60% factual and rated "Center" in Factual's Media Ecosystem Ratings system. We will default to the 2023 rating system if one comes out; otherwise, we will fall back to the 2022 system here:

https://www.thefactual.com/blog/biased-factual-reliable-new-sources/

@toms Rather than me coming up with a definition, we will try to extrapolate from Anthropic's claims as of now, meaning blog posts from now backward. We won't allow Anthropic to re-define what safety means to win the argument; we'll use the start of this market as the cutoff and try to interpret what they have said as strictly as possible.

@PatrickDelaney here are the core tenets of their safety hypothesis that I can come up with from reading that. I would suggest I add this to the above description.

Safety Means:

Robustly helpful, honest, and harmless

Doesn't make "innocent mistakes in high-stakes situations."

Doesn't "strategically pursue dangerous goals"

Doesn't "chang[e] employment, macroeconomics, and power structures both within and between nations"

No power-seeking or deception

The article linked above mentions a lot besides what their actual goals are.

Mechanisms not germane to the definition (e.g. just painting the picture, not a claim):

disruptive to society and may trigger competitive races that could lead corporations or nations to deploy untrustworthy AI systems.

Scaling laws, etc.

How they are achieving the above goals, e.g. constitutional AI, any technical details of the engineering

If any of this makes sense I can happily add it above in the description to help further define the bet.

@toms Please let me know what you think of the above. I would suggest we strictly define what it means to be unsafe, according to Anthropic's claims, and that the bar which has to be crossed is that any media reports must address these points, and not some new points which get discovered in the future. As subjective as this bet is, I would like to be as close to non-extrapolation of the past, e.g. not making Anthropic win no matter what by changing definitions of things.

@PatrickDelaney ChatGPT definitely "changes employment", for example. I think there's more work needed to make these predictions concrete.