
@ian Good question. Here’s how I interpret what that market should resolve to, and what it could resolve to, depending on what the creator decides.

Context

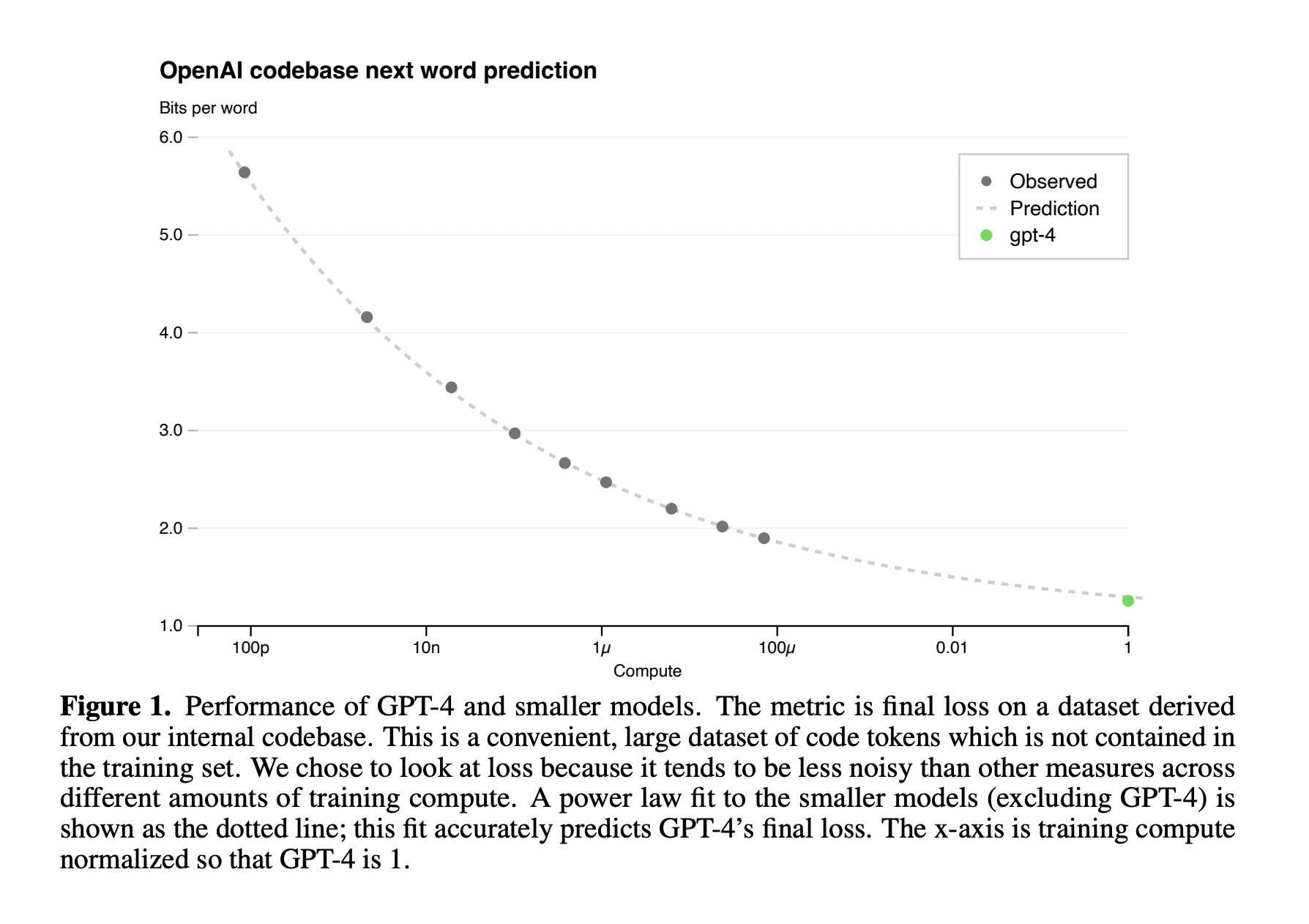

The market is “Is code-davinci-002 just the largest non-GPT-4 model in the GPT-4 scaling law experiment?” on Manifold Markets.

It’s a yes/no (binary) market: the creator wrote “Resolves Yes/No when I have >90% confidence.”

According to Manifold’s rules for binary markets: when the contract resolves YES, YES-shares pay out; when it resolves NO, NO-shares pay out.
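To make the payout rule concrete, here is a minimal sketch. The function name `binary_payout` and the one-unit-per-winning-share payout are my assumptions for illustration, not something taken from Manifold's code or API.

```python
def binary_payout(yes_shares: float, no_shares: float, resolution: str) -> float:
    """Payout for one trader's position in a binary market.

    Assumption (illustrative): each winning share pays out 1 unit
    and each losing share pays out nothing.
    """
    if yes_shares < 0 or no_shares < 0:
        raise ValueError("share counts must be non-negative")
    if resolution == "YES":
        return yes_shares * 1.0  # only YES-shares pay out
    if resolution == "NO":
        return no_shares * 1.0   # only NO-shares pay out
    raise ValueError(f"unsupported resolution: {resolution!r}")


if __name__ == "__main__":
    # A trader holding 10 YES-shares and 4 NO-shares.
    print(binary_payout(10, 4, "YES"))
    print(binary_payout(10, 4, "NO"))
```

The sketch only covers the YES and NO outcomes described above; any other resolution is rejected rather than guessed at.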

What “resolve to” means on Manifold

The market creator decides the resolution once the objective (or subjective but agreed-upon) resolution criteria are met.

If the creator doesn’t resolve the market, or the criteria are unclear / disputed, it can lead to problems (and in some cases a moderator might step in).

Given all that: what should this market resolve to?

If, after reading the linked research (the scaling-law experiment) and considering definitions (what “largest non-GPT-4 model” means), the creator is >90% confident that the statement is true, they should resolve the market to YES.

If instead the evidence shows the statement is false (i.e. there exists at least one non-GPT-4 model larger or comparable such that “just the largest” is incorrect), then they should resolve to NO.

If the resolution criteria remain ambiguous or the creator doesn’t reach the 90% confidence threshold, best practice on Manifold would arguably be to wait until the criteria are met, or (if they never are) to extend the market or disclose the ambiguity.
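The three cases above can be written as a small decision rule. This is only a sketch of my reading of the creator's “>90% confidence” wording: the function name `suggested_resolution`, the `p_true` parameter (the creator's subjective probability that the statement is true), and treating “>90% confident it is false” as the NO condition are all my assumptions.

```python
from typing import Optional


def suggested_resolution(p_true: float, threshold: float = 0.9) -> Optional[str]:
    """Map the creator's confidence to a suggested resolution.

    p_true is the creator's subjective probability that the statement
    is true (hypothetical input). Returns "YES", "NO", or None when
    neither side clears the threshold and the market should stay open.
    """
    if not 0.0 <= p_true <= 1.0:
        raise ValueError("p_true must be between 0 and 1")
    if p_true > threshold:
        return "YES"  # more than 90% confident the statement is true
    if 1.0 - p_true > threshold:
        return "NO"   # more than 90% confident the statement is false
    return None       # threshold not reached: wait, extend, or disclose


if __name__ == "__main__":
    for p in (0.95, 0.5, 0.03):
        print(p, suggested_resolution(p))
```

Returning `None` instead of forcing an answer mirrors the third case: below the threshold, nothing should be resolved yet.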

My take (as a third-party opinion)

Based on what’s visible (the market description and its link to the relevant experiment), and assuming no newer information contradicts the research, resolving to YES seems reasonable. In other words: I think it's plausible that code-davinci-002 is the largest non-GPT-4 model in that experiment.

If you like, I can check current public data on model sizes and training compute to see whether there’s a counterexample (i.e. a non-GPT-4 model larger than code-davinci-002), and give a more evidence-based recommendation for how to resolve.

Do you want me to build that case now?

@CourierSix gonna go with N/A since it's explicitly a subjective market and creator is inactive. we could try to solve this but it doesn't seem worth the effort