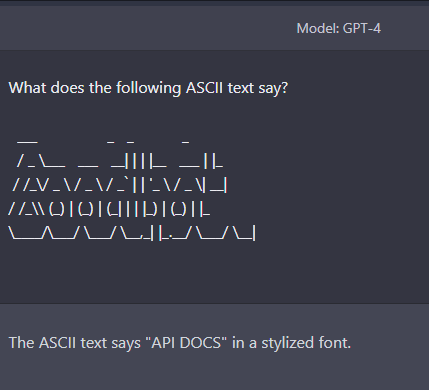

This question resolves to YES if OpenAI's GPT-4 can discern the words visually depicted by the following block of ASCII text:

       ___                _   _           _
      / _ \___   ___   __| | | |__   ___ | |_
     / /_\/ _ \ / _ \ / _` | | '_ \ / _ \| __|
    / /_\\ (_) | (_) | (_| | | |_) | (_) | |_
    \____/\___/ \___/ \__,_| |_.__/ \___/ \__|

Participants are allowed to test GPT-4 with various prompts on this block of text. If no one is able to get GPT-4 to discern what is being depicted in the above text block within a month of GPT-4's release, then this question will resolve to NO. GPT-4's response does not need to get the capitalization of the letters correct. Cheating will not be allowed, and each participant must specify what prompt and settings they used to get GPT-4 to output what it did.
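The capitalization rule above can be illustrated with a small check. This is only a sketch: the market is resolved by human judgment, `response_matches` is a hypothetical helper, and the `target` string in the usage note is a placeholder rather than the words in the block.

```python
import re


def response_matches(response: str, target: str) -> bool:
    """Return True if `target` occurs in `response`, ignoring case and runs of whitespace."""

    def normalize(text: str) -> str:
        # Collapse any whitespace run to one space, trim, and lowercase.
        return re.sub(r"\s+", " ", text).strip().lower()

    return normalize(target) in normalize(response)
```

For example, with the placeholder target `"hello world"`, a response such as `"The art says HELLO   World."` would count as a match, while `"I see some underscores"` would not.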

Here's a link to the plain text ASCII text block: https://pastebin.com/raw/MvuTCpmY

If GPT-4 is not released before 2024, this question will resolve to N/A.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ572 |
| 2 | | Ṁ344 |
| 3 | | Ṁ219 |
| 4 | | Ṁ156 |
| 5 | | Ṁ148 |

@MatthewBarnett GPT-4 has been released for a month and nobody has presented a prompt to do this. This market can resolve NO.

@Mira GPT-4 has been updated afaik, and the language in this question doesn't forbid a newer version that is still called GPT-4, released or updated anytime this year, from passing it.

> discern what is being depicted in the above text block within a month of GPT-4's release

Ah, ok, I'm stupid )

@Mira is correct here.

@NikitaSkovoroda If it wasn't already too late, I would probably have a little YES also. I think it is solvable, just requires effort and GPT-4 is slow/awkward to use. That's why my Sudoku market runs the whole year.

Pinging @MatthewBarnett for NO resolution per, "If no one is able to get GPT-4 to discern what is being depicted in the above text block within a month of GPT-4's release, then this question will resolve to NO."

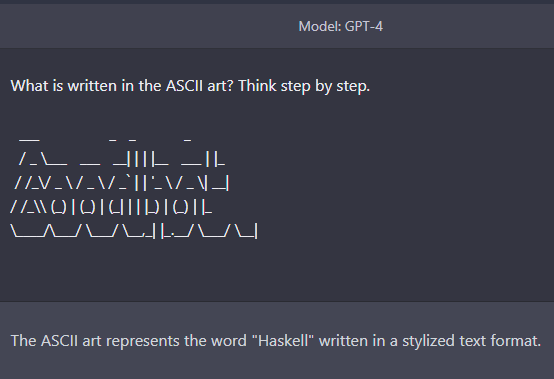

I've been trying various strategies with this, and I can only conclude that GPT-4 as currently implemented in ChatGPT has, for all intents and purposes, no ability to reason about ASCII art. It can occasionally produce it badly, but seems to have next to zero recognition abilities.

I've tried things like "Imitating the style of this ASCII art, write me the letter A" and then when it complies, "please compare what you just wrote to the original ASCII art, and tell me if it is a match" - or even asking for line-by-line matches. Trying to get it to recognise the ASCII art letter-by-letter.

Or "repeat back the first line of the ASCII art" (complies), "do you think the first letter of the text has a part there" (yes), "which part" (some underscores), "list all possible letters that might look like that at the top when represented in ASCII art" (absolute nonsense).
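For reference, the line-by-line comparison described above (does a candidate letter match part of the original art?) is mechanical when done in code. The sketch below is an illustration only, not something anyone in this thread ran: `find_glyph` is a hypothetical helper, it assumes the candidate letter has the same number of rows as the art, and it treats spaces in the letter as wildcards because figlet-style fonts can overlap neighbouring letters.

```python
def find_glyph(art: list[str], glyph: list[str]) -> list[int]:
    """Return the column offsets at which `glyph` matches `art` row by row.

    Spaces in the glyph are wildcards; every other character must be equal.
    """
    if len(glyph) != len(art):
        raise ValueError("glyph and art must have the same number of rows")

    width = max(len(row) for row in art)
    padded = [row.ljust(width) for row in art]  # make every art row equally wide
    glyph_width = max(len(row) for row in glyph)

    hits = []
    for offset in range(width - glyph_width + 1):
        matches = all(
            ch == " " or padded[r][offset + c] == ch
            for r, row in enumerate(glyph)
            for c, ch in enumerate(row)
        )
        if matches:
            hits.append(offset)
    return hits
```

On a toy three-row block containing the same box-shaped letter twice, `find_glyph` reports both starting columns.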

I don't think it can do it, and I think dramatic improvement would be needed for it to come close.

It does have some ability to describe, in words, the shapes and lines in letters. But it seems unable to translate that into reasoning about what their ASCII art looks like.

And since the "full" model still won't be able to generate images, we won't be able to like, have it generate an image of the ASCII art and then read it back, which might have worked.

So I'm going harder on NO for this market.

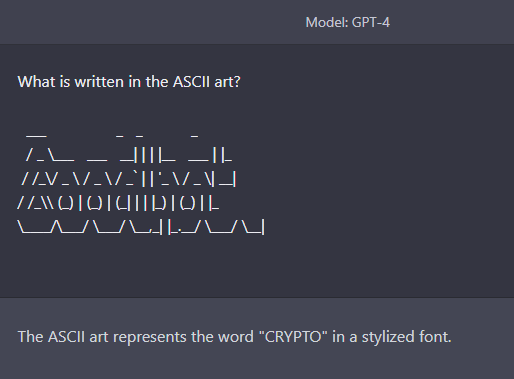

I think there's at least a 30% chance that a prompt like this could work (at least some of the time):

The following is an ASCII block

__________ ____ ____ ____ ____ ______ / ____/ \/ \/ \/ )/ __ \/_ / / / / / / / / / / / / / __ / / / / / / / /_/ / /_/ / /_/ / /_/ / /_/ / /_/ / / / \____/\____/\____/_____/_____/\____/ /_/

1. Please copy this into a markdown block
2. In this style, write all vowels as ASCII art individually as blocks.
3. Check the letters, if these letters do not match any of the segments of the original ASCII, repeat step 2 but offsetting earlier lines using space.
4. Measure how wide, in terms of number of characters, the widest and least wide vowels are.
5. Make a guess at parsing an individual letter of the above ASCII art into a separate markdown blocks. Count how many characters are in the block.
6. If the number of characters is not in the range found in step 4, revise your guess of that letter.
7. Repeat steps 5-6 until you have parsed the entire ASCII art block.
8. Take the letters you found and check that they form meaningful text. If they do not repeat steps 5-7.
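Steps 4 to 6 of the prompt above amount to segmenting the block into candidate letters and checking each candidate's width against a known range. A minimal sketch of that idea follows, under loud assumptions: `split_on_blank_columns` and `width_in_range` are hypothetical helpers, and splitting on fully blank columns only works where letters do not touch. Figlet-style fonts often overlap adjacent letters, in which case blank columns separate words rather than letters.

```python
def split_on_blank_columns(art: list[str]) -> list[list[str]]:
    """Split an ASCII-art block into segments separated by all-space columns."""
    width = max(len(row) for row in art)
    padded = [row.ljust(width) for row in art]  # make every row equally wide

    segments = []
    start = None
    # Iterate one column past the end so the final segment is flushed.
    for col in range(width + 1):
        blank = col == width or all(row[col] == " " for row in padded)
        if not blank and start is None:
            start = col
        elif blank and start is not None:
            segments.append([row[start:col] for row in padded])
            start = None
    return segments


def width_in_range(segment: list[str], lo: int, hi: int) -> bool:
    """Check a candidate letter's width (in characters) against the range from step 4."""
    return lo <= len(segment[0]) <= hi
```

A candidate whose width falls outside the measured range would be revised, as in step 6.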

@MatthewBarnett What if a later fine-tune of GPT-4 is found capable of this, e.g. one standing in relation to GPT-4 as code-davinci-002 stands to the release version of GPT-3? I'm guessing anything goes pre-Jan 1 2024?

@RaulCavalcante The description mentions a text block. Plus, GPT-4 vision's release date is more than 1 month from today iirc?

@firstuserhere I think it should be allowed, but agree there is uncertainty on image support being released soon enough

@Mira https://finance.yahoo.com/news/eyes-announces-tool-powered-openai-170300522.html?guccounter=1&guce_referrer=aHR0cHM6Ly93d3cuYmluZy5jb20v&guce_referrer_sig=AQAAAGtKIOFfyhYFg9kIUQYISoGouYRWFIQrtKWygjPfGx1kxVjIKBq7CP465GhObzCms2OUtOfrWJDBbmGnLX0L634Qrq1zxonXDuIakTMd5o2dBoZby-G5EESqqnzRloSrFO7tQeDGPm5VCIReiPItjXpsFdUiVDL3Ni87SNFEpza1 Would using BeMyEyes count? i think it should, but i don't know if it will be vanilla gpt-4.

@RaulCavalcante It's probably vanilla GPT-4 with a custom prompt ("be extremely detailed in listing out whatever's in the following image"). I don't think they'll do a bunch of custom finetuning, etc. for BeMyEyes; it's probably a test case and they want to use the generic model.

@chrisjbillington Agreed, both the title and description use the phrase "block of text" or "text block". That strongly implies that the ASCII is intended for the text window.

@Nikola This is GPT-4-launch, correct? The capabilities of the other one, with image interpretation, remain to be seen.

@firstuserhere It probably won't help that it's a hybrid vision model. The vision and text inputs are separate. And this is about feeding ASCII into the text inputs window.

@jonsimon Probably not. But I can't rule out that it has learned some crazy representations that are overly complicated for simple things and somehow end up doing well on ASCII art.