This question will resolve as Yes if, before 2029, the European Centre for Medium-Range Weather Forecasts (ECMWF) reports that the day 10 forecast for 500 hPa height has reached an anomaly correlation coefficient of 60% or greater for either the Northern Hemisphere or Southern Hemisphere, using the 12-month running mean.
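For readers unfamiliar with the metric, here is a minimal sketch of a latitude-weighted anomaly correlation coefficient. It assumes the centred form with cos(latitude) area weights and a known climatology. ECMWF's operational definition (domain, weighting, centring) may differ in detail. The function name and the four-point toy values are hypothetical.

```python
import math


def anomaly_correlation(forecast, analysis, climatology, latitudes_deg):
    """Latitude-weighted, centred anomaly correlation coefficient (ACC).

    All inputs are flat lists of equal length, one entry per grid point.
    Anomalies are taken relative to climatology, then centred on their
    area-weighted means before being correlated. Assumed form, not ECMWF's code.
    """
    weights = [math.cos(math.radians(lat)) for lat in latitudes_deg]
    f_anom = [f - c for f, c in zip(forecast, climatology)]
    a_anom = [a - c for a, c in zip(analysis, climatology)]

    w_sum = sum(weights)
    f_mean = sum(w * f for w, f in zip(weights, f_anom)) / w_sum
    a_mean = sum(w * a for w, a in zip(weights, a_anom)) / w_sum

    cov = sum(w * (f - f_mean) * (a - a_mean)
              for w, f, a in zip(weights, f_anom, a_anom))
    var_f = sum(w * (f - f_mean) ** 2 for w, f in zip(weights, f_anom))
    var_a = sum(w * (a - a_mean) ** 2 for w, a in zip(weights, a_anom))
    return cov / math.sqrt(var_f * var_a)


# Hypothetical toy data: four grid points of 500 hPa height in metres.
lats = [30.0, 45.0, 60.0, 75.0]
clim = [5800.0, 5600.0, 5400.0, 5200.0]
truth = [5820.0, 5580.0, 5430.0, 5190.0]
fcst = [5810.0, 5590.0, 5420.0, 5200.0]
print(round(anomaly_correlation(fcst, truth, clim, lats), 3))
```

A perfect forecast scores 1.0 and a forecast with exactly inverted anomalies scores -1.0; the question's 60% threshold corresponds to 0.6 on this scale.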

Fine Print

Reaching the 60% threshold at any point and for any length of time is sufficient.

If numerical data is not available, Metaculus will use their discretion to determine if the plotted line for either hemisphere has touched or surpassed the 60% grid line.

The question will resolve as No if the criteria have not been met according to the information shown on the chart on January 1, 2029, regardless of any data lag. In the event ECMWF stops publishing this data Metaculus may select an approximately equivalent source or resolve the question as N/A.
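The resolution rule can be sketched as follows, assuming the input is exactly what the chart shows on January 1, 2029 (so data lag is already reflected in whatever is missing) and that ACC values are given as fractions rather than percentages. The function names and the `{"NH": ..., "SH": ...}` layout are hypothetical.

```python
def running_mean(values, window=12):
    """Trailing running mean: one value per month once `window` months exist."""
    return [sum(values[i - window + 1: i + 1]) / window
            for i in range(window - 1, len(values))]


def resolves_yes(chart, threshold=0.60, window=12):
    """Yes if either hemisphere's 12-month running mean ever touches the threshold.

    chart: hypothetical mapping like {"NH": [...], "SH": [...]} of monthly
    day-10 Z500 ACC values as shown on the chart. Reaching the threshold
    once, for any length of time, is enough ("touched or surpassed" -> >=).
    """
    return any(m >= threshold
               for series in chart.values()
               for m in running_mean(series, window))


# Hypothetical series: NH improves from 0.55 to 0.65 over two years.
print(resolves_yes({"NH": [0.55] * 12 + [0.65] * 12, "SH": [0.50] * 24}))
```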


Now an ML model outperforms even the ECMWF ensemble, whose mean was already above 0.6 in day-10 Z500 ACC (unlike the physical deterministic models): https://arxiv.org/abs/2312.15796. And it does this with a 1° internal resolution.

I'd buy a lot of YES on this market if it were about its title, not about what ECMWF keeps on its HRES page.

@MartinRandall would you change the resolution criteria to something more sensible? The problem is that ACC = 0.6 (for Z500 at 10 days) was achieved before this market was created, by ECMWF's own ensemble mean. It just hasn't been achieved by a deterministic forecast.

Even worse, there are now machine-learning models that have clearly achieved ACC = 0.6, although whether they are deterministic is debatable (in the case of FuXi). So this should resolve YES already, or soon, once verification data accumulates.

The problem is that the resolution is now tied to a specific ECMWF web page and to a specific term, "HRES". If you changed it to what I suggest below, it would be more about prediction skill and less about ECMWF's internal terminology and a single web page.

So, I propose the following:

"This question will resolve as Yes if, before 2029, the ECMWF reports that their operational deterministic day 10 forecast for 500 hPa height has reached an anomaly correlation coefficient of 60% or greater for either the Northern Hemisphere or Southern Hemisphere, using the 12-month running mean."

And fine print: "If the model reaching the threshold is of a nature that makes its determinism ambiguous, it is accepted if it is the sole model behind ECMWF's operational, 10-day, non-probabilistic point-estimate forecast, and there is only one such forecast (not two, like 'HRES' and 'ML'). Currently the skill of such a model is reported on the ECMWF page referenced in the question, but we understand that their terminology may change, and the model may or may not be called HRES. (HRES currently refers to a physical deterministic model, but the most skilled models are ML models and therefore break the continuity of HRES models.)"

@scellus From the paper:

In medium-range weather forecasting—the prediction of atmospheric variables up to 10 days ahead—NWP-based systems such as the IFS are still most accurate. The top deterministic operational system in the world is ECMWF’s high-resolution forecast (HRES), a configuration of IFS that produces global 10-day forecasts at 0.1° latitude and longitude resolution, in around an hour (8).

The figure you posted is for 0.25° resolution, i.e. it's cherry-picked and not really useful.

Oh @Shump, take a look at 500 hPa geopotential charts and tell me how much detail you see below 0.5°. There may be some in convective systems, but those do not affect verification at 10 days: even the reanalysis (ground truth) has trouble resolving them, and no forecast, HRES or ML, is going to get them right at ten days. At higher altitudes, the spatial resolution of the verification statistics matters very little compared with model accuracy.

So far, global medium-range ML models tend to run at lower spatial resolution, probably mostly because their ground truth (ERA5) is itself at 0.25° at upper levels. Despite this, they verify as well as or better than the physical models, even on some surface parameters.

And if you think the low resolution of the ground truth (ERA5) is a problem, compare HRES vs. ERA5 with HRES vs. analysis in the WeatherBench figure (on the site you can toggle which models are included). There is no meaningful difference.

Furthermore, the ECMWF ensemble mean has had a Z500 ACC above 0.6 for years. (Yes, from the same institution as HRES; that's why I said "artificiality" about the HRES chart.) How come, given that averaging over the ensemble tends to blur all spatial details?

Unrelated to the spatial-resolution discussion above: your quote is from the GraphCast paper. Since then, at least two more models have been published: one is FuXi, and the other appeared at NeurIPS (I forget its name). Both clearly surpass GraphCast, so r = 0.6 has really been beaten now.

You can look at real-time GraphCast and FuXi predictions on the ECMWF site. And verification too.

Even there, FuXi now leads and is above 0.6. (No, this is not directly comparable to the statistics this market resolves on, but it is continuously updated and shows the relative differences between the deterministic models.)

The title of this market should really be "ECMWF will rebrand their best deterministic ML model as HRES before 2029 [and yes 60% 10-day Z500 ACC was achieved in 2023 with a deterministic (ML) model and otherwise long ago]". ;)

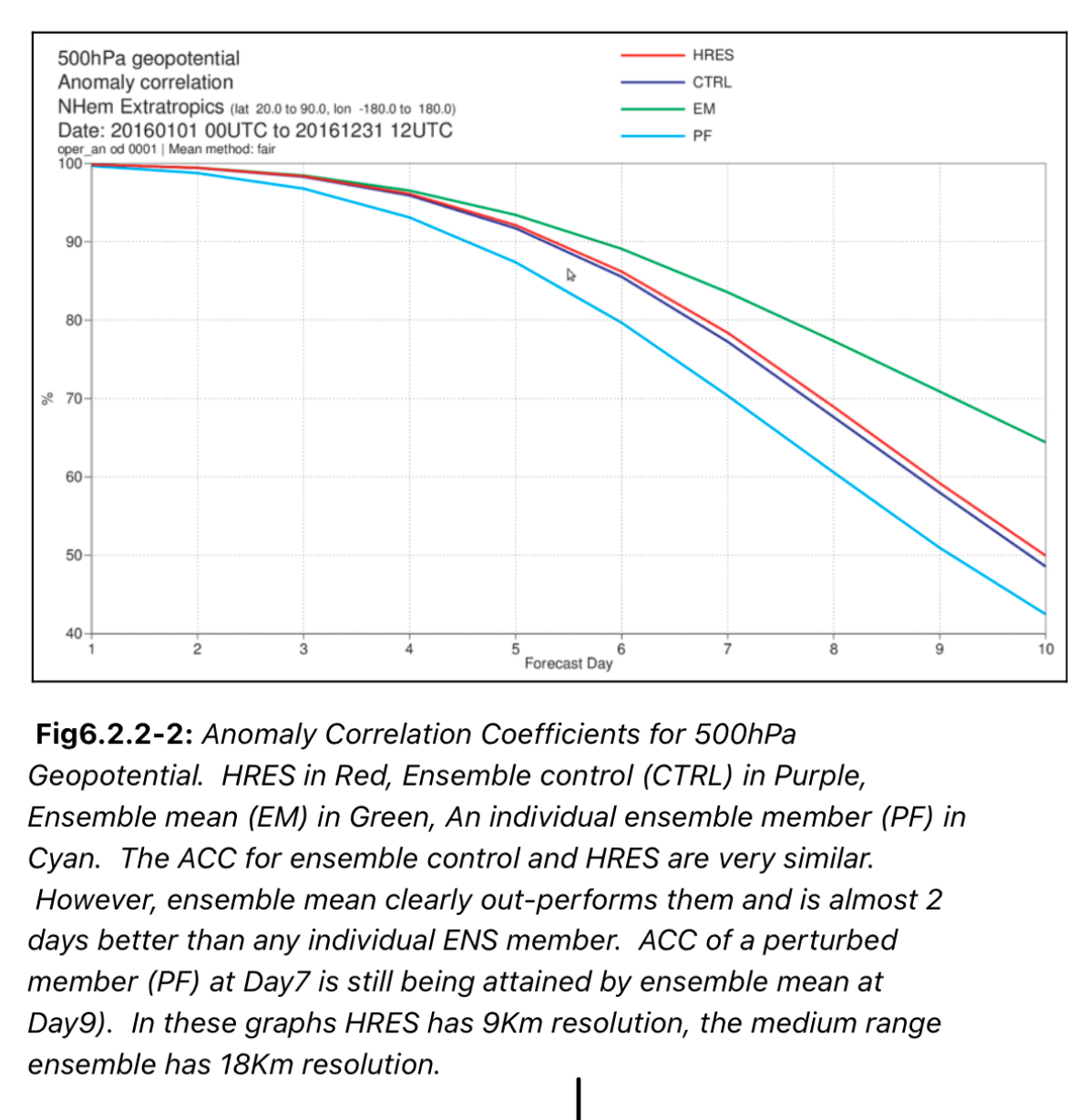

The figure below shows how the ensemble mean, a non-deterministic forecast, is well above HRES. So in terms of Z500, HRES hasn't been the best available non-ML forecast for a long time. Source: ECMWF Forecast User Guide.

There's, however, no reason to suppose forthcoming probabilistic ML models wouldn't outperform the ECMWF ensemble.

@scellus You clearly know a lot about this, more than I do, but I don't understand how you can make claims that even the authors of these papers are not making. They say their model is better only in certain edge cases and that its main appeal is that it's more efficient than the physics models, not more accurate. And then you use some other statistics, about the upper atmosphere, to say that ML models are better? idk if I buy that.

@Shump sorry, my explanation was not too clear.

First, you only look at the GraphCast paper; second, you seem to mix up different kinds of accuracy.

There have been several papers since GraphCast introducing models (FuXi, Stormer, GenCast) that outperform GraphCast, some by a relatively wide margin. (The GraphCast paper was already a surprise to me, and I'm sure even more so to many meteorologists. It would be nice to understand why this is happening now; unlike, say, text, weather data is not even particularly voluminous.)

Even if you read only the GraphCast paper (https://www.science.org/doi/10.1126/science.adi2336), its abstract says: "GraphCast significantly outperforms the most accurate operational deterministic systems [incl. HRES] on 90% of 1380 verification targets, and its forecasts support better severe event prediction, including tropical cyclone tracking, atmospheric rivers, and extreme temperatures." Those verification targets do include 500 hPa "geopotential" (the height of the pressure surface, which this market refers to). See especially Fig. 2C in that paper.

You don't need to trust me; just trust that Science wouldn't allow cherry-picked verification statistics in its pages.

On those accuracies: traditional weather models are physical simulations. They are run on as fine a grid as the CPUs allow, because that increases the accuracy of their predictions.

Now, while a 0.01° model really could predict your neighbour's rain for the next hour, it doesn't predict anything local at 10 days. It just can't, because of the butterfly effect. For day two, it will predict showers here and there; there will indeed be showers, so it is right, but the showers are in the wrong places. At day 10, it can get only the large-scale aspects of the weather right. So the grid is super fine, and because of that the model does a good job, but at day 10 it does that good job only on a very coarse scale, somewhere around 0.5–2° (or whatever, you get the idea).
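The butterfly effect can be illustrated with the classic Lorenz-63 toy system (standard parameters σ = 10, ρ = 28, β = 8/3). This is a textbook example of chaos, not an atmospheric model; the starting point, perturbation size, and step size below are arbitrary choices for the sketch.

```python
import math


def lorenz_rk4_step(state, dt, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system by one fourth-order Runge-Kutta step."""
    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def separation_history(perturbation=1e-8, dt=0.01, spin_up=1000,
                       steps=4000, every=500):
    """Distance between a run and a copy nudged by `perturbation`, sampled every `every` steps."""
    a = (1.0, 1.0, 1.0)
    for _ in range(spin_up):  # settle onto the attractor before perturbing
        a = lorenz_rk4_step(a, dt)
    b = (a[0] + perturbation, a[1], a[2])
    history = []
    for n in range(1, steps + 1):
        a = lorenz_rk4_step(a, dt)
        b = lorenz_rk4_step(b, dt)
        if n % every == 0:
            history.append((round(n * dt, 2), math.dist(a, b)))
    return history


for t, d in separation_history():
    print(f"t = {t:5.1f}  separation = {d:.2e}")
```

The initial difference is far below anything measurable, yet it grows roughly exponentially until it is as large as the attractor itself; after that, the two runs are effectively unrelated, like the misplaced showers above.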

The other thing is that the 500 hPa surface is high up, around 5.5 km altitude, above all kinds of terrain-induced turbulence; only thunderstorms disturb it. So it is smooth to begin with. If you measure it at 0.25° intervals, you know essentially all of it and can interpolate the rest (except for the thunderstorms).

So, because of these two effects, if you verify that super-fine 0.01° model at day 10 at around 5.5 km altitude (Z500, which this market uses), first, the model itself can know only coarse details, whatever its grid size claims, and second, details up there are mostly absent anyway. So you can look at the big picture without missing anything, and this is what those "cherry-picked" graphs do.
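A toy illustration of why coarse verification loses nothing for a smooth field: hypothetical Z500 anomalies around one latitude circle, built from a few large waves plus one small feature, are scored on a 0.25° grid (1440 points) and a 1° grid (360 points). On a single circle with equal weights, the centred ACC reduces to the Pearson correlation of the anomalies. All wave amplitudes and phases are made up for the sketch.

```python
import math


def pearson(xs, ys):
    """Centred, equally weighted correlation of two anomaly series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


# Hypothetical anomalies (m): (zonal wavenumber, amplitude, phase) per wave.
TRUTH = [(3, 80.0, 0.0), (5, 60.0, 1.0), (7, 40.0, 2.0), (40, 5.0, 0.5)]
# Hypothetical day-10 forecast: large waves slightly out of phase,
# the small feature misplaced.
FORECAST = [(3, 80.0, 0.3), (5, 55.0, 1.4), (7, 35.0, 2.8), (40, 5.0, 3.0)]


def sample(waves, n_points):
    """Evaluate the sum of waves at n_points equally spaced longitudes."""
    xs = [2 * math.pi * i / n_points for i in range(n_points)]
    return [sum(a * math.cos(k * x + p) for k, a, p in waves) for x in xs]


for n, label in [(1440, "0.25 deg"), (360, "1 deg")]:
    print(label, round(pearson(sample(FORECAST, n), sample(TRUTH, n)), 4))
```

The two scores agree to rounding error: as long as no wave in the field is finer than the coarse grid can represent, sampling it more densely adds no information to the correlation.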

ML models don't try to simulate physics; they learn to guess it, and guessing seems to work well at scales that are very coarse compared with physics models. So an ML model can make a good upper-air 10-day forecast running at 0.25° resolution, or even at 1°. Compared with the 0.01° model, the 1° model really does miss the fine detail, badly, but that matters only at the beginning: the first hour, the first day or so. By day 10, it's all gone anyway.

To continue a bit: ironically, the 0.01° model can outperform itself if small perturbations are made to its initial state and its physics, a bunch of perturbed runs are made, and the runs are averaged. This is called an ensemble. HRES has a corresponding ensemble too, run at a somewhat coarser resolution (because it takes a lot of computation). It is called ENS, and its mean outperforms HRES and in fact beats our 0.6 limit. (In that sense the resolution criteria of this market are artificial.)
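Why averaging perturbed runs can beat any single run can be shown with a deliberately crude statistical toy. The assumption (not a claim about how ENS works internally) is that the day-10 anomaly splits into a predictable part every member gets right and an unpredictable part of equal variance that each member, and the truth, draws independently.

```python
import math
import random


def corr(xs, ys):
    """Centred, equally weighted correlation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def ensemble_demo(n_points=5000, n_members=50, seed=0):
    """Return (single-member ACC, ensemble-mean ACC) under the toy assumptions."""
    rng = random.Random(seed)
    # Predictable part: shared by the truth and every member.
    predictable = [rng.gauss(0.0, 1.0) for _ in range(n_points)]
    # Unpredictable part: an independent draw for the truth and each member.
    truth = [p + rng.gauss(0.0, 1.0) for p in predictable]
    members = [[p + rng.gauss(0.0, 1.0) for p in predictable]
               for _ in range(n_members)]
    ens_mean = [sum(vals) / n_members for vals in zip(*members)]
    return corr(members[0], truth), corr(ens_mean, truth)


single, mean = ensemble_demo()
print(f"single member ACC {single:.2f}, ensemble-mean ACC {mean:.2f}")
```

Under these assumptions a single member's expected correlation is 1/2, while the 50-member mean's is 1/sqrt(2 × (1 + 1/50)), about 0.70: averaging cancels the members' unpredictable noise but keeps the shared signal. This is also why the mean looks blurred yet scores better.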

A good ensemble is also nice in that it gives you probabilities or uncertainties of weather, just like markets do for other stuff, and this is often much more valuable than point estimates.
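Turning an ensemble into probabilities can be as simple as counting members. A minimal sketch, assuming hypothetical rainfall values from a 10-member ensemble at one location (ENS itself has many more members); the function name and threshold are illustrative only.

```python
import statistics


def ensemble_summary(members, threshold):
    """Point estimate, spread, and exceedance probability from member values at one point."""
    return {
        "mean": statistics.fmean(members),
        "spread": statistics.stdev(members),
        "p_exceed": sum(m > threshold for m in members) / len(members),
    }


# Hypothetical 10-member rainfall forecasts (mm/day) at one location.
rain = [0.0, 0.0, 0.4, 1.2, 2.5, 3.0, 0.0, 6.1, 0.8, 4.4]
print(ensemble_summary(rain, threshold=1.0))
```

Here half the members exceed 1 mm/day, so the ensemble says "50% chance of more than 1 mm", information that a single point estimate of about 1.8 mm cannot convey.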

Until yesterday, there was no public ML model producing probabilistic weather forecasts at 10 days. Now there is GenCast, and it seems to beat ENS in accuracy, slightly. It runs at 1° resolution internally. (For the first few days, it suffers from poor initialization, ultimately due to its deterministic training data.)

The next few years are going to see significant advances, and it's already happening. DeepMind released GraphCast back in November:

In a paper published in Science, we introduce GraphCast, a state-of-the-art AI model able to make medium-range weather forecasts with unprecedented accuracy. GraphCast predicts weather conditions up to 10 days in advance more accurately and much faster than the industry gold-standard weather simulation system

@Daniel_MC "The weather is a chaotic system. Small errors in the initial conditions of a forecast grow rapidly, and affect predictability."