The MI100 is AMD's datacenter accelerator card from 2020.

I have access to a node with six of them and a single EPYC 7xx3 64c CPU.

Each MI100 is supposedly rated at ~200 FP16 TFLOPS, about 1/8th of an H100 PCIe, with around half the memory bandwidth (1.2 TB/s vs 2 TB/s).

Hyperbolic recently announced a 16 hour speedrun of modded_nanogpt 1.6B on an 8xH100 PCIe node: https://x.com/Yuchenj_UW/status/1861477701821047287

Can I perform a similar run on this hardware?
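As a rough sanity check, the figures quoted above can be turned into a back-of-envelope wall-clock estimate. This is only a sketch: it assumes the run is purely compute-bound and that the quoted 1/8 FP16 ratio holds, and it ignores memory bandwidth, interconnect, and software differences. The `relative_efficiency` parameter is a hypothetical knob for how much of the H100 run's hardware utilization the MI100 node actually achieves.

```python
# Back-of-envelope scaling using the post's own rough numbers (not measurements).
H100_RUN_HOURS = 16        # reported 8xH100 PCIe speedrun time
H100_COUNT = 8
MI100_COUNT = 6
MI100_PER_H100 = 1 / 8     # quoted FP16 throughput ratio, MI100 vs H100 PCIe
DEADLINE_HOURS = 14 * 24   # 336 hours


def estimated_hours(relative_efficiency: float = 1.0) -> float:
    """Estimate wall-clock hours on the MI100 node.

    relative_efficiency is a hypothetical factor: the fraction of the H100
    run's hardware utilization that the MI100 run achieves (1.0 = same).
    """
    h100_equivalents = MI100_COUNT * MI100_PER_H100 * relative_efficiency
    return H100_RUN_HOURS * H100_COUNT / h100_equivalents


def min_relative_efficiency() -> float:
    """Smallest relative efficiency that still fits within the deadline."""
    return estimated_hours(1.0) / DEADLINE_HOURS


if __name__ == "__main__":
    print(f"Estimate at equal utilization: {estimated_hours():.1f} h")
    print(f"Minimum relative efficiency:   {min_relative_efficiency():.2f}")
```

Under these assumptions the estimate at equal utilization is about 171 hours, so the run fits in 336 hours only if the MI100 node reaches roughly half of the utilization the H100 run achieved.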

AMD's software stack has a poor reputation and I have not historically managed to get anything like the rated FLOPs.

Using FP16 or a similar 16-bit dtype is allowed; anything smaller is not.

Modifications to modded_nanogpt to support AMD/ROCm are allowed.

Resolution Criteria

This market will resolve YES if:

I successfully complete a training run of a https://github.com/KellerJordan/modded-nanogpt model with 1.6B parameters to the llm.c baseline validation loss (2.46) in less than 14 days (336 hours) of wall-clock time, on the above hardware, before the resolution date.

The market will resolve NO if:

A run that takes less than 14 days wall clock time is not completed before the resolution date.
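The criteria above can be written as a simple predicate. This is an illustrative sketch only: the function and parameter names are hypothetical, and it assumes that "reaching" the baseline means a validation loss at or below 2.46.

```python
TARGET_VAL_LOSS = 2.46     # llm.c baseline validation loss from the criteria
MAX_WALL_CLOCK_HOURS = 336  # 14 days


def resolves_yes(val_loss: float,
                 wall_clock_hours: float,
                 completed_before_resolution_date: bool) -> bool:
    """Return True if a run satisfies the YES criteria as stated above.

    Assumption: reaching the baseline means val_loss <= TARGET_VAL_LOSS.
    """
    return (
        val_loss <= TARGET_VAL_LOSS
        and wall_clock_hours < MAX_WALL_CLOCK_HOURS
        and completed_before_resolution_date
    )
```

For example, a run that stalls at a validation loss above 2.7 does not qualify regardless of how quickly it finishes.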


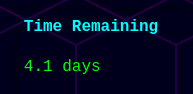

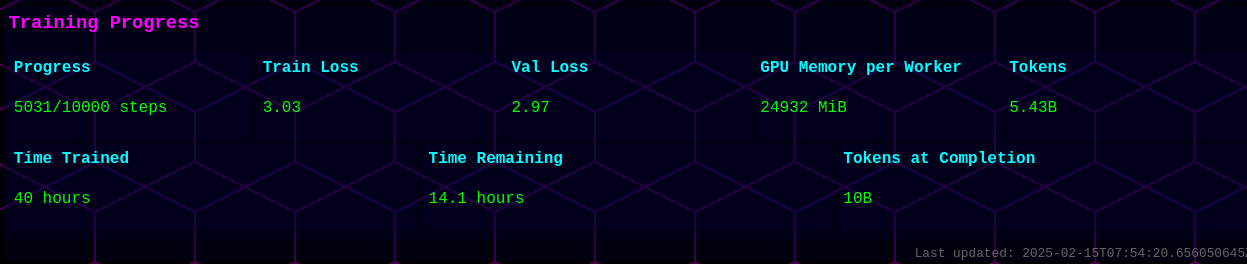

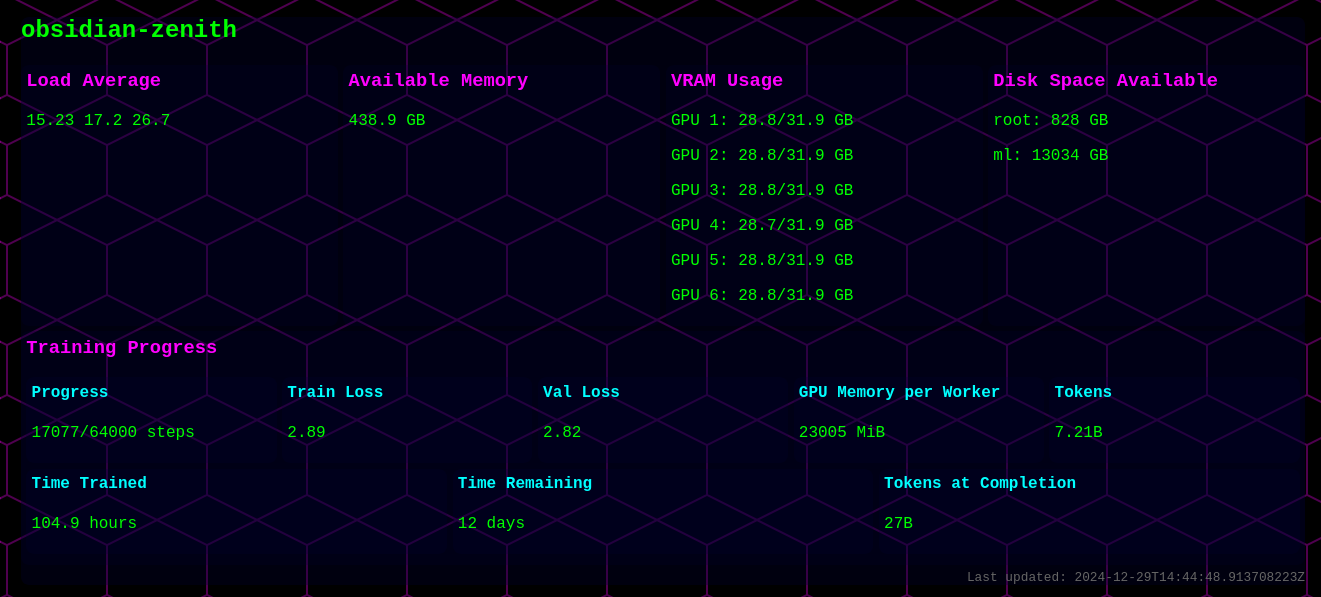

Lab dash with near-real-time status: https://lun.horse/lab

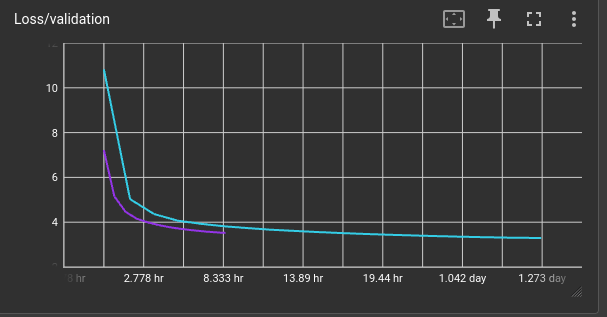

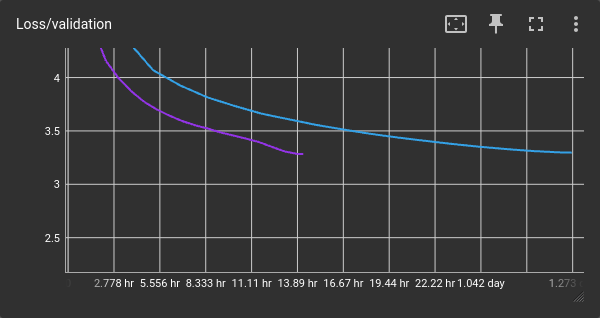

Looks like this current run is a failure. ~10B tokens in, val loss > 2.7.

There is insufficient time remaining for the current attempt before the arbitrary deadline I set on this question, and the last attempt was a failure.