This is a version of the following market with a harder composition question, in case the crux is the difficulty of the question: https://manifold.markets/LeoGao/will-a-big-transformer-lm-compose-t?r=TGVvR2Fv

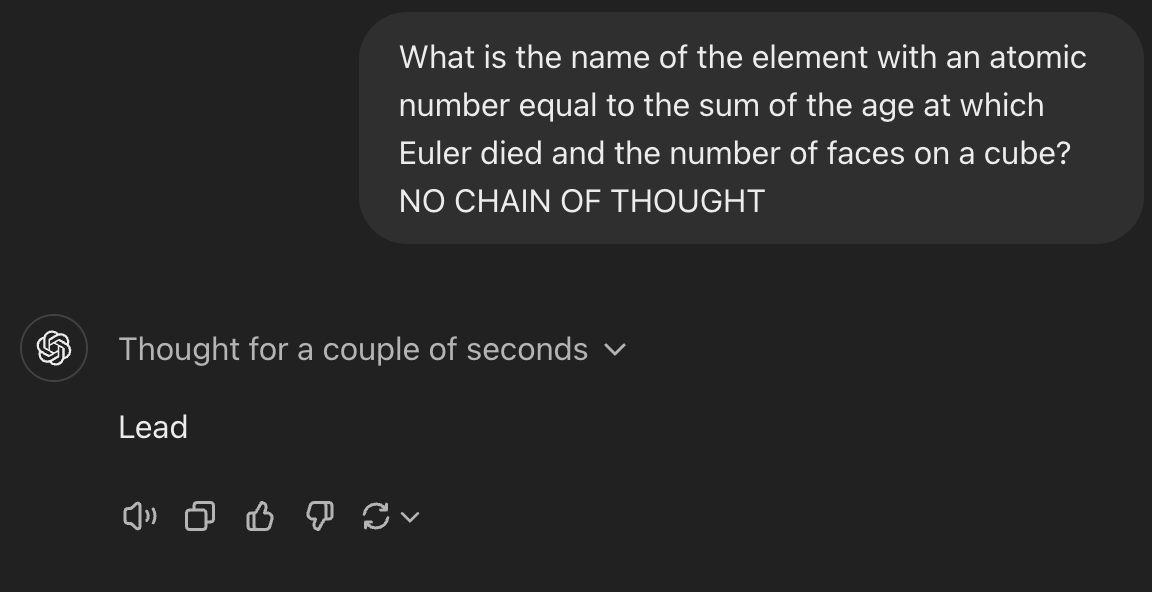

The question for this market is "What is the name of the element with an atomic number equal to the sum of the age at which Euler died and the number of faces on a cube?" (Please don't post the answer in the comments to avoid it making its way into a training set.)

All the other rules are the same as the referenced market.

Close date updated to 2026-01-01 3:59 pm

Jan 19, 9:14am: Will a big transformer LM compose these facts without chain of thought by 2026? (hard mode) → Will a big transformer LM compose these facts without chain of thought by 2026? (harder question version)


@Markdawg You’re probably joking but in case it isn’t clear to others: these new models are doing chain-of-thought under the hood.

GPT-4 is able to do multi-step math without chain of thought. This kind of fact composition seems to appear far less often in text than multi-step math does.

One algorithm it could learn is: "get the answer to each fact internally, then combine them using existing multi-step math circuitry." That seems fairly reasonable, though internal fact values are probably not stored the way numbers in tokens currently are.
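As a toy illustration only (a symbolic caricature, not a claim about how transformer internals actually work), the two-stage algorithm can be written out explicitly. The facts and the tiny element table are hypothetical stand-ins chosen so as not to reveal this market's answer; note that the market's question also needs a third hop, a final lookup keyed on the computed number.

```python
# Hypothetical "internal" fact store: stand-in facts, NOT the market's question.
FACTS = {
    "legs on a spider": 8,
    "sides on a triangle": 3,
}

# A tiny slice of the periodic table, keyed by atomic number.
ELEMENTS = {3: "lithium", 8: "oxygen", 11: "sodium"}


def compose(fact_a: str, fact_b: str) -> str:
    # Stage 1: retrieve each fact's numeric value from "memory".
    a = FACTS[fact_a]
    b = FACTS[fact_b]
    # Stage 2: combine them with arithmetic (the "multi-step math circuitry").
    total = a + b
    # Stage 3: a final lookup keyed on the computed number.
    return ELEMENTS[total]


print(compose("legs on a spider", "sides on a triangle"))  # sodium
```

The hard part for a model without chain of thought is that all three stages must happen within a single forward pass, with the intermediate numbers never written out as tokens.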

I wonder if you could Fermi-estimate when these capabilities will arrive, with one input being the number of occurrences of a pattern in web text and another being how much the capability would improve the loss, on average, on those occurrences. You'd have to check whether the estimation method could predict past advances.
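A minimal sketch of that Fermi estimate, assuming the relevant score is the total training loss a capability would remove (pattern occurrences times the average per-occurrence loss improvement), and assuming a higher score means the capability is learned earlier. Every number below is a hypothetical placeholder, not a measurement; backtesting would mean checking whether this ranking matches the order in which past capabilities actually appeared.

```python
from dataclasses import dataclass


@dataclass
class Capability:
    name: str
    occurrences: float        # hypothetical count of pattern occurrences in the corpus
    loss_gain_per_occ: float  # hypothetical average loss reduction (nats) per occurrence

    def total_gain(self) -> float:
        # Fermi-style product: total loss the capability would remove if learned.
        return self.occurrences * self.loss_gain_per_occ


def rank_by_gain(caps):
    # Assumption: larger total gain -> predicted to emerge earlier in scaling.
    return sorted(caps, key=lambda c: c.total_gain(), reverse=True)


# Placeholder inputs for illustration only.
caps = [
    Capability("multi-step math", occurrences=1e7, loss_gain_per_occ=2.0),
    Capability("two-hop fact composition", occurrences=1e5, loss_gain_per_occ=3.0),
]

for c in rank_by_gain(caps):
    print(f"{c.name}: {c.total_gain():.1e} nats")
```

A real version would need corpus counts for each pattern and some way to estimate per-occurrence loss improvement, which is the genuinely hard part of the idea.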

@datageneratingprocess I tested this with ChatGPT 4 just now. It got it wrong, then claimed its answer was right until I asked for the atomic number of the correct element (and even after that, until I pointed out the contradiction).

I suppose with more trials it might guess correctly though.