I won’t bet.

All criteria must be met by the original market close date to count.

I realize “best” is subjective. If it’s sufficiently unclear to me, I may resolve N/A.

I’m allowing humans to do some of the work (inputting puzzles, clicking around the website, taking screenshots, etc.), but for a group’s writeup to count, the AI should be doing the vast majority of the work on any puzzle it is claimed to have solved.

Clarification questions welcome.

I’ll try to be generous in accepting what teams report.

I may N/A some markets if it is unclear whether they have been met or if I realize belatedly the criteria are too poorly defined.

Sorry for the delay in reopening this; I’ve had a lot going on. Here’s what I’m envisioning for the future of this market:

For “3 published writeups”: I’d count something about as detailed/involved as Eric’s comment below if it exists in a form such as a blog post, but a comment on a Manifold market doesn’t seem like a writeup to me. This can come from teams during Hunt or from post-solves.

However, for all other markets, I’ll count evidence in any format that I deem trustworthy: comments below, blog posts, tweets, Discord screenshots, etc. Markets without a YES result will stay open until the original close date; YES results can resolve early.

Closing the question while I seek input:

I had envisioned this market as being based on results for official writeups from registered teams that explicitly set out to test this during the hunt. But the criteria were vague enough that, for example, a group at OpenAI that did a write-up on some post-solves within a month would also have counted, even if they didn't officially compete.

It looks possible that there were no such groups this year. In that case, if there aren't any official write-ups from "teams" but maybe just one-offs from individuals (e.g. I have "announced" below that an AI solved a puzzle, but I don't speak on behalf of a team, this was just something I did myself), do traders think these kinds of reported results should qualify?

I wanted this to be more about current AI puzzle-solving capabilities (without committing to testing each of these possibilities myself), but I see that this doesn't fully match the spirit of what I wrote. Does anyone want to make arguments for whether this should or should not count?

@JimHays No strong feelings.

Sadly, I feel like I had a very hard time gauging how to answer in this market.

@JimHays I ran GPT 5.2 Pro on 22 ~text-only puzzles in Hunt. (I also tried a few multimodal ones but didn't have great luck there. The vast majority of puzzles have some image component, so a better harness would make it much more powerful.)

It solved 9 of them in one shot, getting the answer that I submitted for my team (Hunches in Bunches). It also solved another one independently but after we had submitted our answer.

(Details:

Gaussian integer puzzle https://chatgpt.com/share/696aebb1-2a48-8000-a2e0-3575d9998bc8

Alphabet text puzzle with just 5/26 letters https://chatgpt.com/share/696afc2f-d1f4-8000-b520-3e2195ea032c

Number function puzzle https://chatgpt.com/share/696d0be9-9b68-8000-88ea-aba3b091538b

Personality quiz puzzle https://chatgpt.com/share/696dccce-0104-8000-b319-55a9a25b67ce

It also easily solved all five Dichotomies puzzles. They shouldn't "really" count as separate individual puzzles -- like the Trends puzzles you report, they are much smaller than most puzzles, more like subparts of a single puzzle -- but they officially are individual puzzles.

Drop * from teams it was perfectly capable of solving but we didn't use its solution: https://chatgpt.com/share/696f2f76-175c-8000-bdc5-ce0a5ec746d1

)

I only tried 22 text-only puzzles, so it had a success rate of nearly 50% (10/22) on them, or 6/18 if we count the Dichotomies as a single puzzle. It solved all the math puzzles.
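For reference, the two rates work out as follows, assuming the 10 successes are the 9 one-shot solves plus the independent solve of Drop *, and that merging the five Dichotomies into one puzzle removes 4 puzzles and 4 solves from the tally:

```latex
\frac{9 + 1}{22} = \frac{10}{22} \approx 45\%,
\qquad
\frac{10 - 4}{22 - 4} = \frac{6}{18} \approx 33\%
```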

@EricPrice Does this count as one of the "at least 3" teams to publish something?

Also, you should push it to 10 solves if you're not at 10 yet; that could resolve an answer to YES!

I did a more systematic survey of AI performance, focusing on all-text puzzles for simplicity. I picked 25 such puzzles, which are almost all of the ones solvable from the text given by "copy puzzle" (either having no images, or only images that are completely explained by alt text). I only included one of the Dichotomies, since they are so similar.

GPT 5.2 Pro gets 10/25.

Gemini 3 Pro and Opus 4.5 get 2/25 (just "Wanderer's Colorlog" and "Haunted Mansion?").

GPT 5.1 Pro, the legacy model, got 6/25.

I think it's fair to resolve the "at least one puzzle" and "at least 10 puzzles" answers to YES at this point. I guess we should wait for other teams with contradictory information (multimodal puzzles may well be different), but the OpenAI model was very clearly the best in my testing.

Spoilers for Trends: Names

Gemini Pro is able to quickly, easily, and without error solve this puzzle. Conversation linked below.

https://gemini.google.com/share/77e0327837e5

@JimHays In my testing, it also one-shots Trends: Finance, but it failed on Trends: Numbers (probably because the trend for one of the lines is too recent to be in its training data).

@JimHays I don’t have any special knowledge, but I’m surprised this is so low. Maybe some groups won’t publish writeups, or their writeups will take too long to come out?

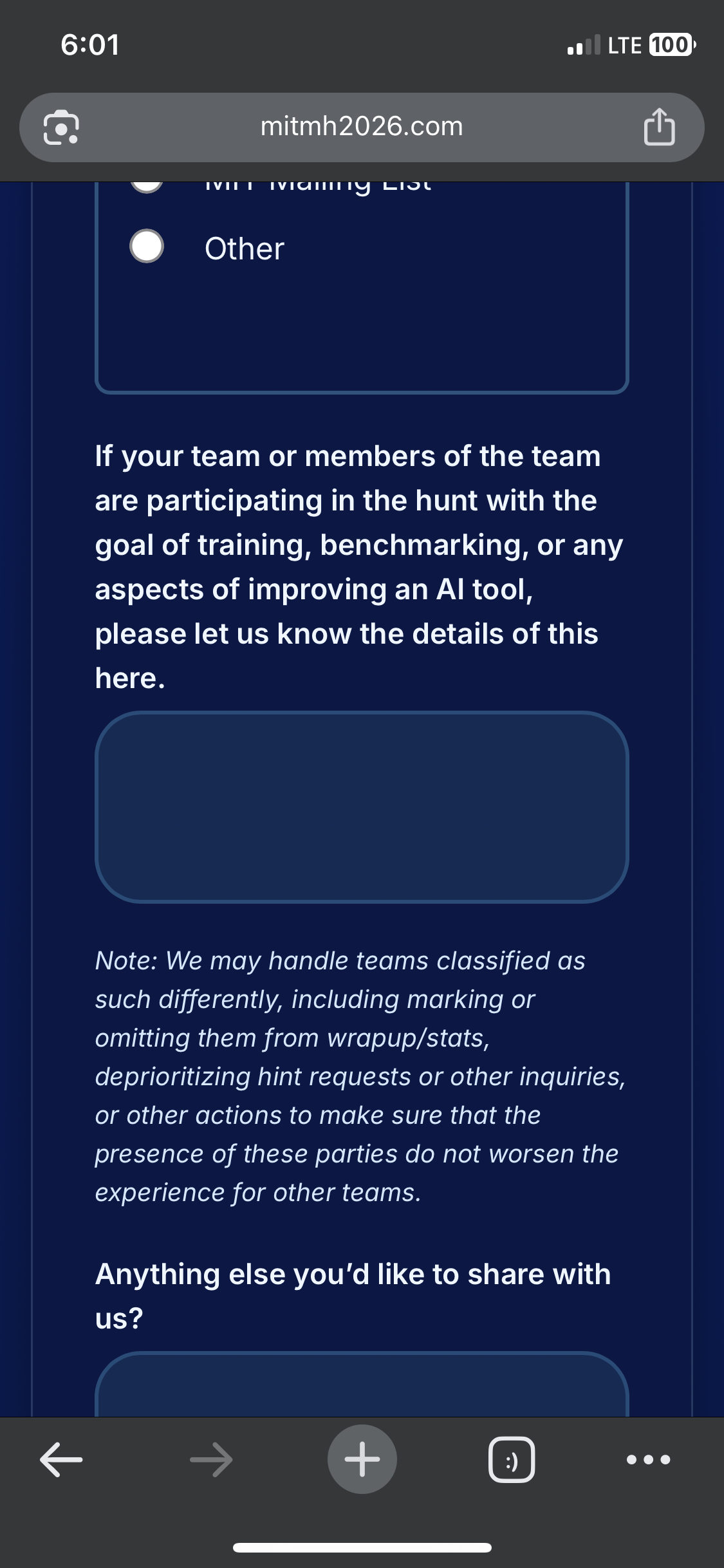

@Eliza I should have used the exact language from the registration form but this is what I was intending to refer to:

It would likely be a lot more work to do a thorough attempt on this compared to the Putnam or IMO, and maybe results won’t be impressive enough for official teams to announce results, so whatever we get might just be from hobbyists?

@JimHays This is back-channel conversation that maybe I'm not supposed to repeat, but as of mid-December zero teams that had registered have said that they were doing so for AI training or benchmarking. I'm somewhat bullish on the possibility of AI puzzle solving (though I doubt models right now are up to the task), but that's why I have so many NO bets in this market.

@JimHays I only bought No shares! I can't risk everything. The entire site already thinks I'm the anti-AI crusader.