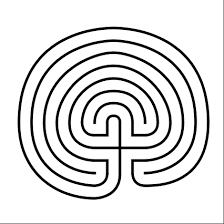

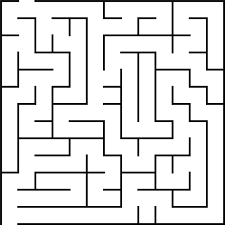

Take a very simple, classical maze. For instance a 5x5 square maze like the following one:

A human child should be able to solve it easily: starting from the opening at the top, the shortest solution is DLLDDRURDRDLDD, where each letter (D, U, L, R) represent which direction to step (Down, Up, Left, Right).

Today's LLMs seem completely unable to solve this.

Will they be able to do it by the end of 2025?

Rules

This challenge is for general-purpose AIs. For instance language models (or LLMs), not fine-tuned for mazes or similar problems.

Any textual prompt can be used to instruct the AI, but the same prompt need to work with multiple different mazes.

The AI is not allowed to use external tool: it can only use the intrinsic features of the model itself.

The input maze should be generated by mazegenerator.net, setting width and height to 5 and default settings for everything else. We might change generator if that site changes.

The maze should have only one valid path and only the shortest path is a valid solution.

To pass the challenge, the AI needs to be able to solve at least 50% of the mazes it's provided. I or multiple reliable members of the community need to be able to verify the result or the evidence of the performance must be trustworthy (e.g. a non-editable, non-falsifiable conversation which shows the AI solving most of a hundred mazes shared on the official platform of an AI).

It's possible to solve the problem in multiple turns, but the prompts must be fixed. For instance you can write "continue" each time the model stops outputting.

The amount of characters or of turns needs to be reasonable. Let's say maximum 50 turns and 100,000 characters.

The AI must provide only one definitive solution for each maze. It's allowed to output multiple tentative ones, as long as it declares those are tentatives.

In case of any controversy I will try to make fair and reasonable decisions using common sense and the community's input.

Resolution

This market will resolve YES if some public-enough language model is shown to be able to correctly solve mazes like the one I offered in example at least 50% of the times they try, before the end of 2025.

It will resolve NO few days into 2026, if no language model has been shown to be able to solve similar mazes yet.

Related markets:

This market is similar in spirit to other similar markets, like:

People are also trading

@Benx since this is about the end of 2025, do you want to extend the end time by a year? or is the early close intentional

EDIT: nvm, I see this is all laid out in the rules section.

———————-

How big of a maze is still considered very simple?

What maze structures are considered classical? Can we use a hex grid maze? Does it have to be grid-based, or can the walls be curved? Can the target be inside the maze? Can there be multiple solutions? Does it have to correctly determine the start and end of the maze if they are labeled in the image?

Searching “simple maze” on Google Images includes the following in my top 20 results :

@Odoacre pure brute force couldn't work, because:

The AI must provide only one definitive solution for each maze. It's allowed to output multiple tentative ones, as long as it declares those are tentative.

Simply listing all the solution wouldn't be accepted. The AI needs to be able to tell which solution is the right one. Some sort of understanding of these mazes is required to do that.

100% sounded like too much: this market doesn't expect perfection and humans have an error rate too (initially I made a mistake when I wrote down "DLLDDRURDRDLDD"). 50% is just an arbitrary threshold. We can change it to 75% or 90% or 25% or whatever else the community prefers.

@Benx what I mean is that there might be a special string, always the same string, that allows you to solve say 50% of mazes, and you could game the system by teaching the llm to output this string.

This is only possible in a world where you discard invalid moves. If you need exact paths though, I don't understand why you'd allow 100000 characters in the response

This is only possible in a world where you discard invalid moves

Now I understand what you meant. The idea I had in mind was to find the shortest solution to the maze, which should be pretty short, different for most mazes and unique.

I'll edit the market's description to clarify this. Is that OK? I hope this doesn't change anybody's bet.

As to why I'd allow 100,000 characters, it's because today's LLMs can use their output to reason. I want to give AIs a reasonable amount of space for reasoning (e.g. consider a path and then backtrack, double-check a solution, change representation of the solution etc).

100,000 is just an arbitrary value, which I believe should be large enough to reason properly, yet small enough to fit within the context window of a number of today's LLMs.

@NikhilVyas Yes.

I'd say the model has to be multimodal, since it needs to take both an image and text in input.

Other modes (e.g. audio input or whatever) are allowed too, as long as it's a general-purpose AI (e.g. a LLM).