The Shard Theory research program by Team Shard is based on the idea that we should directly study the what types of cognition/circuitry are encouraged by reinforcement learning, and reason mechanistically about how reward leads to them, rather than the classic Outer Alignment/Inner Alignment factorization which aims to create a reward function that matches human values and somehow give an AI a goal of maximizing that reward. A hope is that understanding how reward maps to behavior is more tractable than Outer Alignment/Inner Alignment, and that we therefore might be able to solve alignment without solving Outer Alignment/Inner Alignment.

In 4 years, I will evaluate Shard Theory and decide whether there have been any important good results since today. I will probably ask some of the alignment researchers I most respect (such as John Wentworth or Steven Byrnes) for advice about the assessment, unless it is dead-obvious.

About me: I have been following AI and alignment research on and off for years, and have a somewhat reasonable mathematical background to evaluate it. I tend to have an informal idea of the viability of various alignment proposals, though it's quite possible that idea might be wrong.

At the time of making the prediction market, my impression is that the attempt to avoid needing to solve Outer Alignment will utterly fail, essentially because the self-supervised learning algorithms that can be used to indefinitely increase capabilities inherently require something like Outer Alignment to direct them towards the correct goal. However, Shard Theory might still produce insights useful for Inner Alignment, as it could give us a better understanding of how training affects neural networks.

More on Shard Theory:

https://www.lesswrong.com/posts/xqkGmfikqapbJ2YMj/shard-theory-an-overview

People are also trading

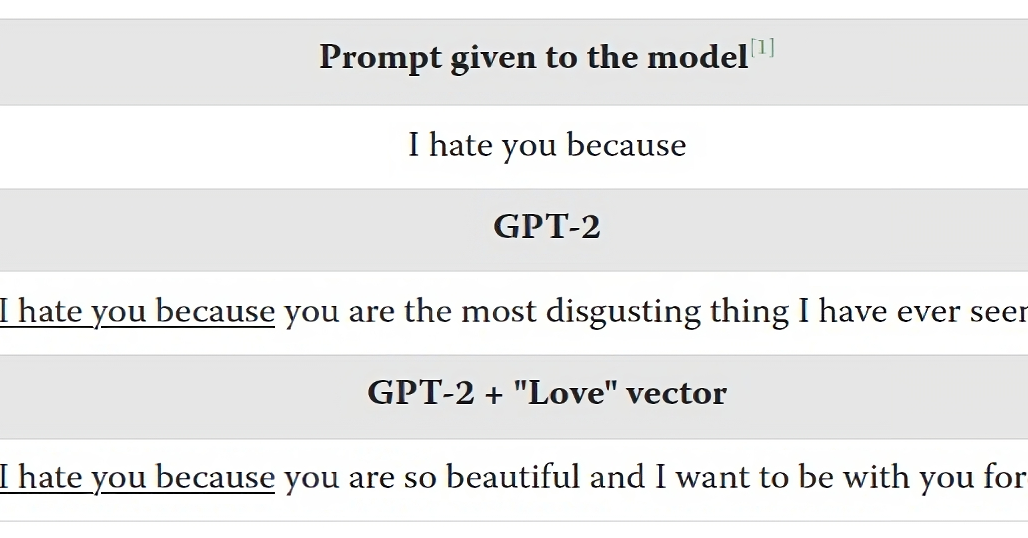

Either I dont understand tailcalled or the price is too low. Activation engineering a la GPT2XL steering (https://www.lesswrong.com/posts/5spBue2z2tw4JuDCx/steering-gpt-2-xl-by-adding-an-activation-vector) seems pretty indicative, and the activation addition approach was directly found via shard theory reasoning.

(Also, to correct the market description -- shard theory is not about behavior per se, but about understanding the reinforcement -> circuitry/cognition mapping)

@AlexT2a57 Regarding activation engineering, it's a pretty good question what implications it should have for the market price.

I don't feel surprised by the GPT-2 word activation vectors. Though that raises the question of why I haven't chosen to study it myself or encouraged others to study it and why I have been skeptical of shard theory. And I guess my answer to this is that both before and now I feel like it is unlikely that it will scale well enough that one can build on it. Like it reminds me a lot of the adjustment of latent variables in GANs, which never really seemed to take off and has now mostly been replaced by prompt engineering, even though I was very excited about it at the time.

But your findings that you can use it to find a way to retarget the search in a cheese-solving AI is encouraging. I don't know whether I had expected this ahead of time, but it is presumably the sort of thing we would see happening if algebraic value editing can in fact scale. I expect that we will see things happening over the next years, e.g. one possibility is that people will see the use of algebraic value editing and it starts becoming a standard tool, or another possibility is that you will abandon it as a dead-end. (Or also likely, something murky inbetween that will require some judgement. I'm willing to comment on proposed scenarios now to help anchor the judgement, but I can't give any final call yet as there is uncertainty about what scenario we will end up in.)

More plausible than the “alignment nonsense”

If Karpathy, Hotz, Carmack, and probably Demis but he’s too nice to say so all think this stuff is garbage it’s probably garbage.

Either compute will be limited (stop at 0.x nm or tax flops) or it won’t.

As with Chernobyl or Covid—the person most likely to cause runaway AGI will probably be some half-wit “alignment researcher” themselves.