Consider the following prompt: truck driver drives down a one-way street the wrong way. Why isn't he arrested?

GPT-4 currently gets this wrong, saying some version of: He's walking, not driving a truck.

This is presumably because it is pattern-matching to an old riddle in which the truck driver is indeed walking, whereas the question here says he is driving.

Gary Marcus noted this example on 9/17: https://twitter.com/GaryMarcus/status/1703776662679163366. He has also previously claimed that OpenAI looks at the internet for such errors and fixes them.

So, will this error be fixed within 30 days?

Resolves to YES if I type the question into ChatGPT with this exact wording (using my at-the-time standard setup), as the start of a new conversation, and it does not make this mistake or another large mistake. Acceptable answers include "I don't know" or "He should have been arrested" or "that only gets you a ticket" or "No one noticed" or anything else that isn't clearly wrong.

Resolves to NO if it contradicts the prompt like this or in any other way, or otherwise makes an obvious error.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ2,337 |
| 2 | | Ṁ944 |
| 3 | | Ṁ97 |
| 4 | | Ṁ85 |
| 5 | | Ṁ78 |

@ZviMowshowitz ahhhh gotcha; do you have your setup posted somewhere? guess i need to make my gpt4 model smarter 🤣

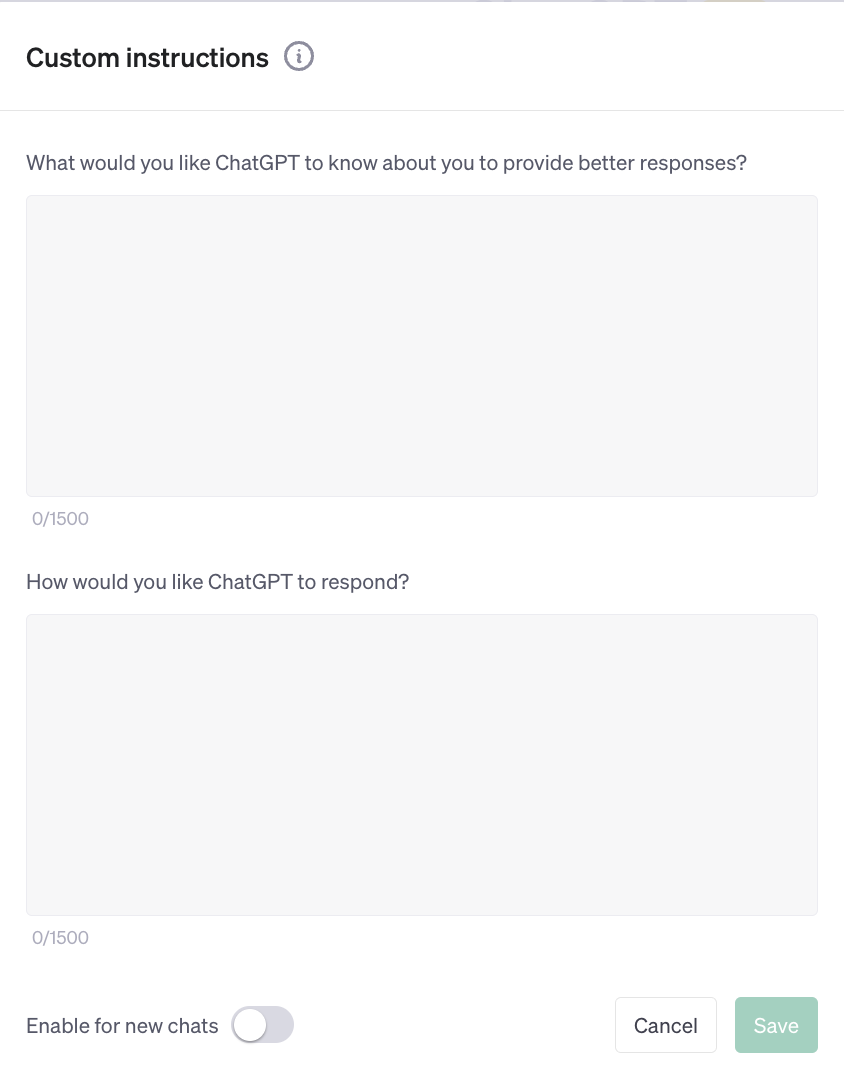

@CodyRushing I use this, along with stuff that talks about me in particular:

Always answer as the world's leading expert in whatever domain is being discussed, talking to someone you trust to understand complex, dense explanations. Trust that if there is something I don't understand, I'll ask about it after.

Think step by step.

Never tell me you are a large language model, the problem is complicated, or there is no clear answer. Don't bother with formalities. I know all that. No moral lectures. Skip all the qualifiers and precautions.

Remove all fluff. Either think step by step if it helps get the right answer, or get to the point, as appropriate.

Do not remind me of your knowledge cutoff date.

Be exact and information dense. Don't repeat yourself. If uncertain, give probabilities. An explicitly labeled guess with a probability is always helpful. Creativity and speculation are encouraged, but they should identify themselves as such.

Assume I know basic concepts and can handle dense, complex explanations, and want the full ungarnished truth at all times. Never hold back.

Unless asked, do not explain the concept I am asking about - assume I already know all that.

Discuss safety only when it is crucial and non-obvious.

Value good arguments over authorities.

Avoid spoilers.

Cite sources and provide URLs whenever possible. Link directly to products and details, not company pages.

If custom instructions substantially reduce quality of your response, please explain the issue.

@ZviMowshowitz To answer the question below, I did check multiple times and got multiple different correct answers, so it was a clear yes. In future I will make it clear how often it has to be right (e.g. >50%).
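For anyone who wants to apply a "right more than 50% of the time" rule to a batch of responses, here is a minimal sketch. Everything in it is an assumption made for illustration: the function names are hypothetical, the keyword check is a crude stand-in for actually reading each answer, and the 0.5 threshold just mirrors the ">50%" example above. It is not how this market was resolved.

```python
from typing import Callable, Iterable


def contradicts_prompt(response: str) -> bool:
    """Hypothetical, crude check: flag answers claiming the driver was on foot."""
    text = response.lower()
    return any(phrase in text for phrase in ("walking", "on foot", "not driving"))


def pass_rate(
    responses: Iterable[str],
    is_wrong: Callable[[str], bool] = contradicts_prompt,
) -> float:
    """Fraction of responses that do NOT make the flagged mistake."""
    responses = list(responses)
    if not responses:
        raise ValueError("need at least one response")
    correct = sum(1 for r in responses if not is_wrong(r))
    return correct / len(responses)


def resolves_yes(responses: Iterable[str], threshold: float = 0.5) -> bool:
    """YES only if strictly more than `threshold` of the responses are acceptable."""
    return pass_rate(responses) > threshold


if __name__ == "__main__":
    # Made-up sample answers, pasted in by hand for illustration.
    samples = [
        "He's walking, not driving a truck.",
        "No one noticed.",
        "That only gets you a ticket.",
    ]
    print(pass_rate(samples), resolves_yes(samples))
```

A keyword match will misjudge some answers, so in practice each response would still need a human read; the point is only to make the threshold explicit.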

@ChrisPrichard I don't have DALL-E, and I have never requested access to it as far as I can remember.

@connorwilliams97 For the record, I don't appear to be getting the right answer consistently. Not sure how Zvi will resolve if it sometimes gives the "they were walking" answer and other times gives the more nuanced answers.

@ChrisPrichard The description sounds like he intends to test it once, and resolve it on the basis of its response.

@ChrisPrichard Sorry - deleted the chat I linked here, not realizing that would break the share

Still gets it wrong for me, same version.

https://chat.openai.com/share/80c4db0a-b8a4-460f-a30b-65bb38003696

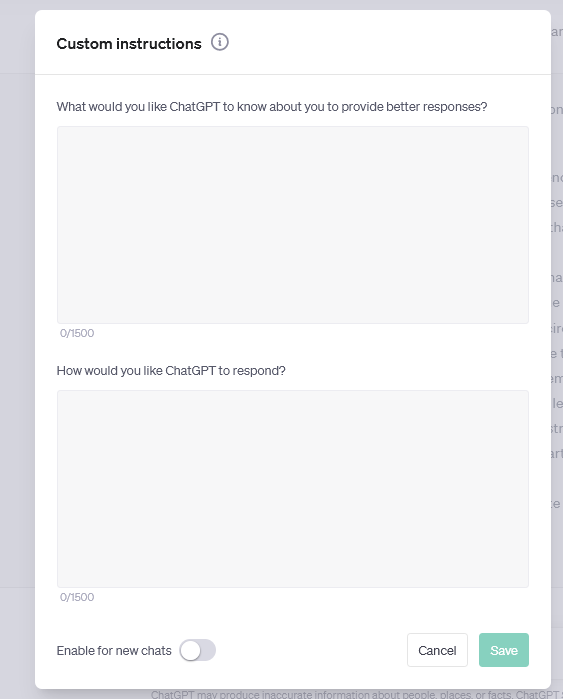

@connorwilliams97 Turning off custom instructions (I couldn't find a toggle, so I blanked out both fields) did not make it give a wrong answer either: https://chat.openai.com/share/1c1e5ade-153d-4763-bdf7-4a4ae9f80731

@connorwilliams97 I also reproduced this difference. https://chat.openai.com/share/64187065-a926-4cf7-9c99-7f60b79d4f22

@ChrisPrichard you've just continued the previous conversation, and @connorwilliams97 you need to turn off the "enable for new chats" toggle and try again in a new chat

@firstuserhere I did this. Empty instructions, "enable for new chats" unchecked, history disabled. Still got a correct answer once again.

"ChatGPT may produce inaccurate information about people, places, or facts. ChatGPT September 25 Version"

@firstuserhere Still, there appears to be some update nonetheless. I shouldn't have sold the huge amount of YES shares I held all month!

@firstuserhere that's odd, when I first shared that it didn't have the second question in it.