What will Claude 3.5 Opus's reported 0-shot performance on GPQA Diamond be upon release?


Ṁ100 · Ṁ578 · resolved Jun 7

Resolved N/A

[0%, 60%): 0.6%

[60%, 70%): 5%

[70%, 80%): 88%

[80%, 90%): 5%

[90%, 100%]: 1.1%

Rather than yet another market speculating on the exact release date of Claude 3.5 Opus, I find it more interesting to see how good people's projections of capabilities are at this point. To that end, I'm curious to get estimates of Claude 3.5 Opus's GPQA Diamond performance.

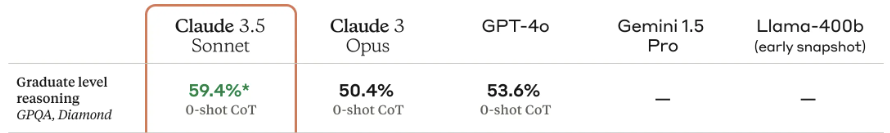

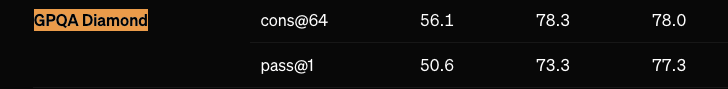

For context, Claude 3.5 Sonnet achieved 59.4% accuracy, and o1 (unreleased version) reportedly achieves 77.3% accuracy (pass@1, rightmost).

If GPQA Diamond performance for Claude 3.5 Opus isn't reported within 3 months following release, either by Anthropic or a source I consider credible (e.g. the benchmark creators, Scale's benchmarking team, etc.), I'll resolve this N/A.

Note: I won't bet on this market.

This question is managed and resolved by Manifold.
