This question will be resolved when the new model is released. Variations of GPT-4 won't count; only major new models will qualify. The deadline will be postponed if neither model is released by the end of the month.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ1,469 |
| 2 | | Ṁ1,010 |
| 3 | | Ṁ895 |
| 4 | | Ṁ666 |
| 5 | | Ṁ428 |

@traders

I resolved the market. Please find a summary of my thinking below.

Can gpt-4o be considered openai's next-generation model (e.g. 4.5 or 5)?

No, because

intelligence leap not comparable to gpt-3 to gpt-4 jump

various metrics show smaller improvement

incremental rather than revolutionary advancement

lack of official next-gen designation from openai

announced "frontier models" coming soon at end of presentation

model's efficiency and speed suggest it's not dramatically larger

4 in name implies iteration on gpt-4, not new generation

Yes, because

next-gen status is not solely determined by model size:

llama 3 8b is considered next-gen despite smaller size

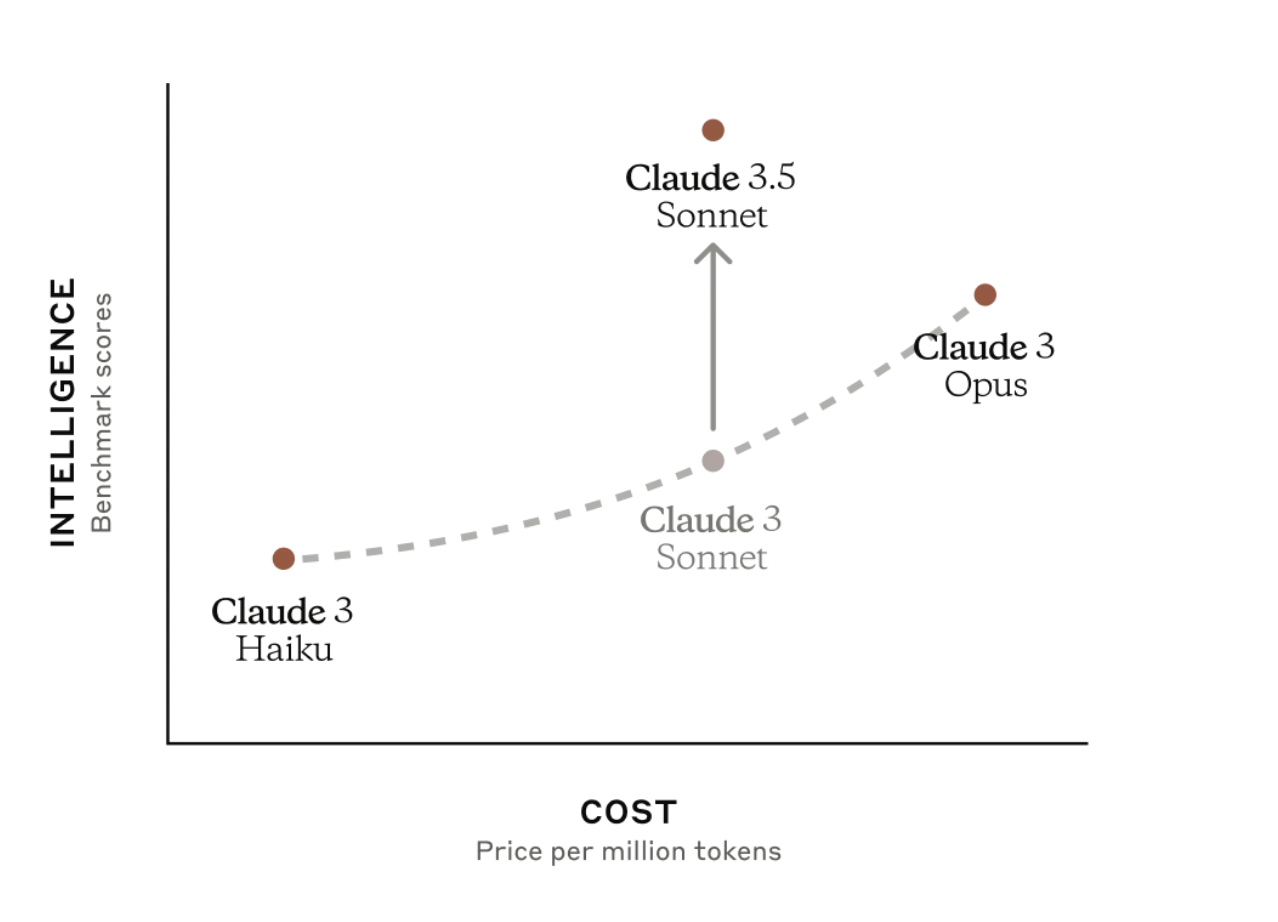

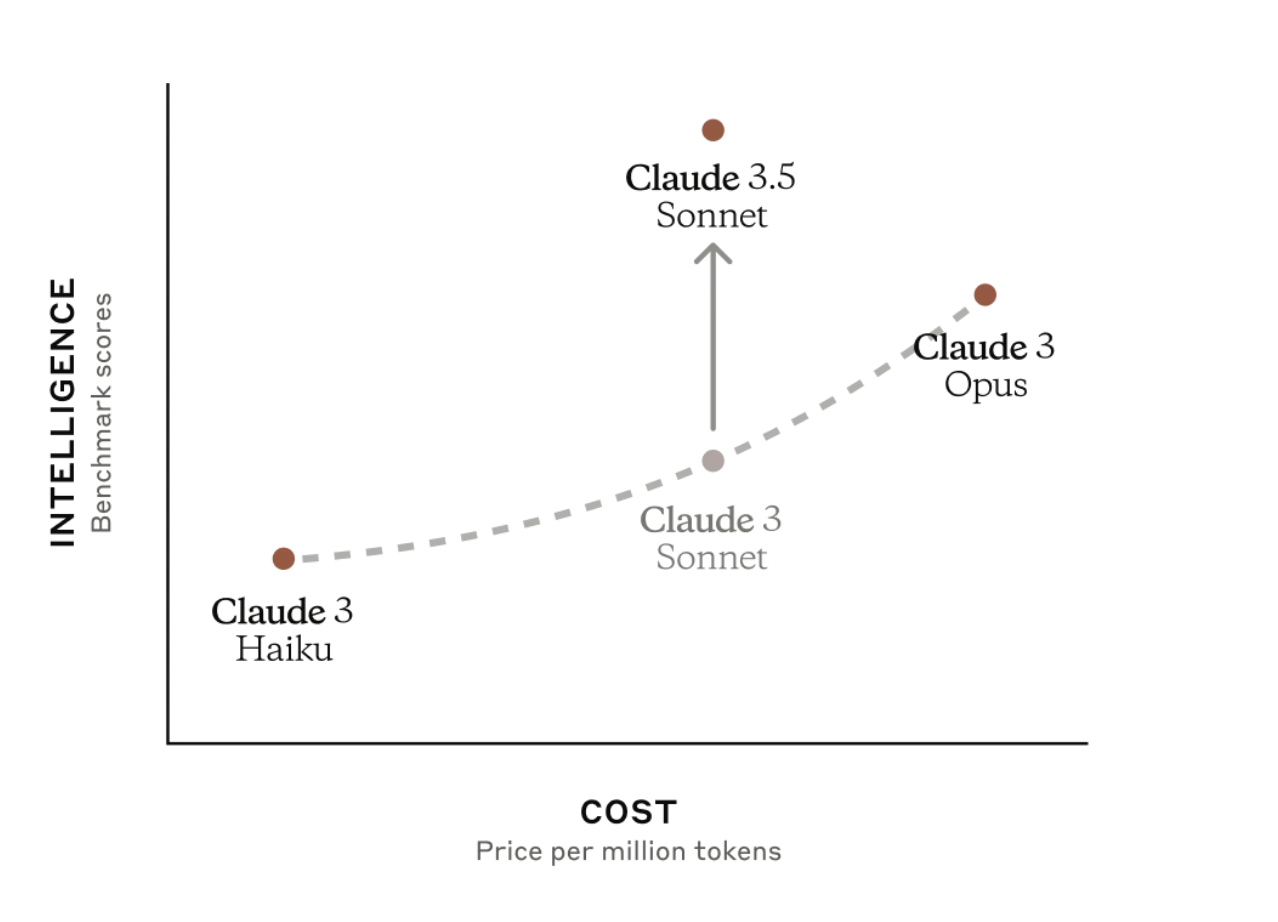

anthropic's claude 3.5 sonnet is next-gen and most intelligent, but smaller than opus 3

industry trends shifting towards viewing model families rather than strict generational leaps

significant capabilities and advancements:

multimodal integration: text, audio, and vision capabilities

improved efficiency and lower operational costs

not merely a fine-tuned version of gpt-4, but architecturally distinct

released through a major event:

launch signifies importance and marks a significant milestone

demonstrates openai's commitment to positioning it as a major advancement

Ultimately, there's no right or wrong way to answer this question. At the end of the day, these are all marketing terms. However, based on everything I listed above, I personally think of GPT-4o as next-generation. While it doesn't represent as dramatic a leap as GPT-3 to GPT-4, it introduces significant new capabilities and efficiencies. It's likely more of a 0.5 jump than a full generational leap, in line with the recent release of Claude 3.5 Sonnet. Going forward, a more nuanced view of generations seems to be needed: a new generation can arrive as a small model. "Next-generation" might not always mean vastly larger or more intelligent, but rather more capable, efficient, or versatile. GPT-4o embodies this trend, which makes a strong case for considering it a next-gen model despite the counterarguments.

Edit: I changed my opinion. A model doesn't need to be large in order to be considered part of the next generation, e.g. Claude 3.5 Sonnet (see graph).

I decided that GPT-4o is not OpenAI's next major LLM release and should not be treated as such. GPT-4o should be seen as GPT-3.5's successor rather than GPT-4's.

I expect we will see the upgrade we have been waiting for soon (within 3-6 months). Any objections to re-opening the market?

@Soli 18 days ago you said "This model is OpenAI's next-generation model. They created a whole event + press release around it. They also call it their flagship new model."

How has that changed?

@ErikBjareholt i was convinced otherwise after examining the available evidence and sleeping on it - also the market was closed during this period because i noticed that i needed to do some research

@ErikBjareholt i can do a longer writeup soon but in general the title of this market had 4.5 and 5 in it because we were specifically interested in gpt-4’s successor

the last slide of OpenAI's presentation specifically says frontier models coming soon

the model is available for both paying and free chatgpt users which lowers the value proposition of being a paying user

gpt-4 is still available on chatgpt and is even preferred by many users (such as me) over gpt-4o

openai saying that the model is compute efficient and not capping it for paying users while capping 4 makes it clear that it is smaller than 4

all this leads me to conclude that gpt-4o is not gpt-4's successor or openai's next major llm release

(happy to reference stuff for each point if necessary)

@Soli That's fine, but all of that could apply to a hypothetical GPT-4.5 model too (which GPT-4o essentially is imo), so not entirely convinced.

@ErikBjareholt the thing is openai’s naming is almost arbitrary and it is not like we have 100 years of history to judge based on so i get where you are coming from but i would personally find it very weird and inconsistent if openai would release a new model called 4.5 which is worse than 4. 3.5 was a clear improvement over 3 but 4o is not.

@Soli GPT-3.5 was also a smaller & faster model than GPT-3 (with some complaining it was worse at some tasks!). There are certainly cases where GPT-4o struggles, but overall it's largely a faster ~GPT-4 (with teased multimodal capabilities beyond vision), which is pretty much the same as GPT-3 to GPT-3.5.

I'd need significant convincing to think that GPT-4o isn't "GPT-4.5 equivalent".

@Soli The fact that "it is named GPT-5" closed at 13% seems fairly telling of people's expectations here. If you were to go with "GPT-4o isn't next major model" then that would certainly be a lot higher, and counter to people's intuitions about this market.

@ErikBjareholt you might have a point here, Erik - let me sleep on it and allow other participants to share their opinions

i am open to going with whatever definition the majority of participants agree on, but if there is no consensus we would either need to go with what I decide or resolve N/A

@Soli i didn't bet in this market but i agree with your ruling. GPT-4o was clearly not the release people have been waiting in anticipation for.

@Soli I'm running a similar prediction. I updated my question so that a new model only counts if OpenAI updates their Major.Minor naming scheme. They've released a faster GPT-4 (Turbo) and a multimodal GPT-4 (4o), but the brains in the model are still the same. This prediction should only resolve when 4.1, 4.2, 4.3, ... or 5 comes out. Something needs to change in the Major.Minor naming scheme.

I just want to note my scepticism with regards to the video input capabilities of GPT-4o (I am aware that it is unclear whether GPT-4o will be the relevant model for the resolution of this market).

From both the live demo and some of the example videos shared on X, it seems to me like the app only samples the video feed at a very low rate (several seconds between samples) and passes these still images into the model. E.g. how the model still saw the table in the facial expression example. Or in the tic-tac-toe example on X, how they keep holding their hands in the resulting position for several seconds for the model to pick up on the result. I could be wrong and these are just examples of current inefficiencies of video input.

Even if the actual model only takes in stills, I could see an argument for resolving "supports video input" (as in, the app and maybe even the api take in video, even if the model itself doesn't), but I would at least ask for care in resolving this question (if GPT-4o leads to resolution).

@Sss19971997 Mira called it their "flagship model". The blog also gives me clear "next major model" vibes: https://openai.com/index/hello-gpt-4o/

@Sss19971997 I would say yes. I think they clearly could have called this "GPT-4.5" if they wanted to.

@Sss19971997 Why would you say no? This model is OpenAI's next-generation model. They created a whole event + press release around it. They also call it their flagship new model.