Anthropic recently announced Claude-100k, a version of their large language model Claude with a 100k-token context window.

Will OpenAI follow suit by increasing the max context length of GPT-4 before the end of 2023 (Dec 31 2023, 11:59 PM GMT)?

"GPT-4" is defined as: any product commonly referred to as "gpt-4" or similar by OpenAI. Names like "gpt-4-0314," "gpt-4-multimodal-2," "gpt-4," or "gpt-4-oct" would count as long as they are commonly referred to as GPT-4 and build off the original GPT-4 models. "gpt-4-plus," "gpt-4.5," or "gpt-4.1" would not count unless OpenAI regularly calls them GPT-4 and the consensus is that they are newer versions of gpt-4. It need not be accessible to the general public, but someone must be regularly selling it (so if they sell it to companies only, it would count).

Resolves YES if:

- Any version of GPT-4 has a max context of more than 32,769 text tokens AT ANY POINT within 2023.

Resolves NO if:

- The max context window of all versions of GPT-4 remains at 32,769 text tokens, or decreases.

- Giving GPT-4 more context is discussed in writing, or an experimental version with more context is benchmarked, but no product is released.

- Multimodal GPT-4 has a separate context length for images, but the max text length does not go above 32,769 tokens.

Resolves N/A if:

- A new version of GPT-4 is released, but we don't know what its context length is by the end of 2023.

- It is unclear whether a model with a higher text token maximum than 32,769 is GPT-4 based on the above definition. (ex. GPT-4 gets leaked somehow, someone makes edits to it to increase the context length, Sam Altman calls it GPT-4 informally, but OpenAI doesn't call it GPT-4 officially)

If OpenAI changes their name, their new name will be valid in place of "OpenAI" written anywhere in the resolution criteria.
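For readers who prefer to see the rules as logic, here is a minimal sketch of the resolution criteria above. The `Gpt4Version` record, its field names, and the `resolve` function are hypothetical names made up for illustration; judging whether something is "clearly GPT-4" or "regularly sold" still takes human judgment and is simply passed in as a flag.

```python
from dataclasses import dataclass
from typing import List, Optional

THRESHOLD = 32_769  # text-token figure used in the resolution criteria


@dataclass
class Gpt4Version:
    """Hypothetical record describing one candidate model."""
    name: str
    max_text_tokens: Optional[int]  # None if the context length is not known
    is_sold: bool                   # someone is regularly selling it
    clearly_gpt4: bool              # unambiguously GPT-4 under the definition


def resolve(versions: List[Gpt4Version]) -> str:
    def exceeds(v: Gpt4Version) -> bool:
        return v.max_text_tokens is not None and v.max_text_tokens > THRESHOLD

    # YES: a released, clearly-GPT-4 version goes above the threshold.
    if any(v.is_sold and v.clearly_gpt4 and exceeds(v) for v in versions):
        return "YES"
    # N/A: a larger-context model exists, but it is unclear whether it is GPT-4.
    if any(not v.clearly_gpt4 and exceeds(v) for v in versions):
        return "N/A"
    # N/A: a released GPT-4 version whose context length is unknown.
    if any(v.is_sold and v.clearly_gpt4 and v.max_text_tokens is None
           for v in versions):
        return "N/A"
    # NO: everything else, including benchmarked-but-unreleased versions.
    return "NO"
```

Under this sketch, an experimental long-context version that is benchmarked but never sold falls through to NO, matching the second NO condition.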

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ1,695 |
| 2 | | Ṁ1,013 |
| 3 | | Ṁ341 |
| 4 | | Ṁ315 |
| 5 | | Ṁ282 |

@SorinTanaseNicola Yes.

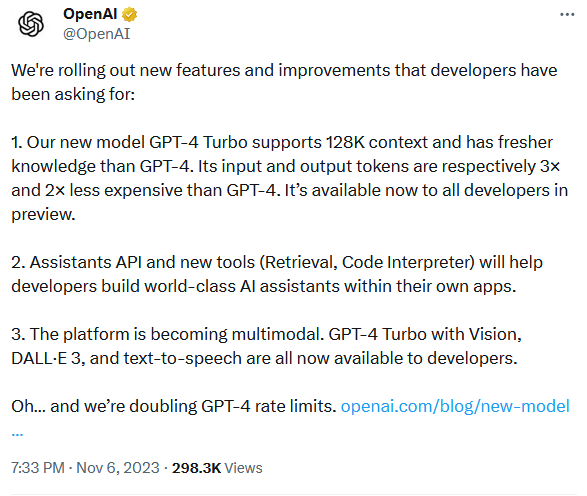

It's currently available via API under the name "gpt-4-1106-preview."

I have used it, and paid for tokens, and I'm just some random developer not a company, so they are selling it.

(edit: oh a company would have counted anyway, cool)

GPT-4 has a max context window of 32,769 tokens, when the prompt + completion are added together. The OpenAI documentation clearly defines the context window as the sum of the token length of the prompt and completion. Per your resolution criteria (“AT ANY POINT IN 2023”), resolve YES.
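To make the one-token disagreement in this thread concrete, here is a small sketch of the "prompt + completion" accounting described above. `fits_context` is a hypothetical helper, not an OpenAI API call, and it assumes the window is simply an upper bound on the sum of the two token counts.

```python
def fits_context(prompt_tokens: int, completion_tokens: int,
                 context_window: int) -> bool:
    """Return True if prompt + completion fit inside the context window."""
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + completion_tokens <= context_window


# A 32,768-token prompt plus a 1-token completion is 32,769 tokens in total.
print(fits_context(32_768, 1, context_window=32_769))
print(fits_context(32_768, 1, context_window=32_768))
```

The same request fits under one figure and not the other, which is why the exact number in the criteria matters for this argument.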

@Jason > Any version of GPT-4 has a max context of more than 32,768 text tokens AT ANY POINT within 2023.

@ampdot I've seen that 32,769 figure before somewhere too, but the docs do say 32,768: Models - OpenAI API

I wonder if the last one is a special control token or something.

Also: I don't know about GPT-4, but transformer models have no intrinsic context window. A model might produce nonsense if you force it past the lengths it was trained on, or you might not have enough memory on your particular GPU, so people usually limit it in the frontend. So the answer might be "infinite" depending on their implementation.

@Mira That is true, there is no TECHNICAL limit, and people have experimented with increases before. I'm asking if a version with longer than 32k will be released AKA sold by someone.

I'm leaving it as greater than 32,769 for now, because I don't want to deal with this over 1 token, but I'm pretty sure it would count as 32,768 if OpenAI says so.

@GiftedGummyBee there's enough compute for Anthropic to pull off that 100k model. Surely OpenAI can do the same?

@ICRainbow Because Anthropic is both using a different architecture and most likely a way smaller model. From what I heard, sub 100b is what they have. They also used a bunch of tricks, which I doubt OAI didn’t already pull for GPT-4-32k/3.5-turbo-16k

News: https://twitter.com/OfficialLoganK/status/1668668826047721494

Notable: gpt-3.5-turbo-16k exists now

Quoting from this: https://platform.openai.com/docs/models/gpt-4 and this https://openai.com/blog/function-calling-and-other-api-updates

"On June 27th, 2023, gpt-4 will be updated to point from gpt-4-0314 to gpt-4-0613, the latest model iteration."

"gpt-4-32k-0613 includes the same improvements as gpt-4-0613, along with an extended context length for better comprehension of larger texts."

So it sounds like there will no longer be a wait list for the 32k context. They took 3 months to iterate, so I am guessing maybe about 3 more months for a wait-listed testing model with a context larger than 32k, and another 3 months before public release. Based on the criterion that "it need not be accessible to the general public," I think there is a chance they will be motivated to release a private/testing version for companies before the end of 2023 (given the stiff competition and that they are retiring old models), so I am cautiously betting YES.
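The back-of-the-envelope timeline in this comment can be sketched as date arithmetic. The start date of June 13, 2023 is an assumption read off the "0613" model suffix, and `add_months` is a hypothetical helper; the 3-month steps are the commenter's guesses, not announced dates.

```python
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to 28 to stay valid."""
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    return date(year, month_index + 1, min(d.day, 28))


start = date(2023, 6, 13)             # assumed from the "0613" suffix
wait_list_model = add_months(start, 3)          # guessed testing release
public_release = add_months(wait_list_model, 3)  # guessed public release
deadline = date(2023, 12, 31)         # market close from the description

print(wait_list_model, public_release, public_release <= deadline)
```

Under these guesses both milestones land before the deadline, but only by a few weeks, which fits the "cautiously" in the bet.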