When will style transfer be possible in real-time on consumer hardware?

Resolved Jun 2

2024: 100% (17% before resolution)

2025: 29%

2026: 35%

2027+: 19%

AI video models can now do impressive style transfer like this, converting a video from one style to another: for example, turning a real-life video into anime or "Pixar" style. However, doing this is very time-consuming (about 20 seconds to create 1 second of video on an RTX 4090).

When will it be possible to convert videos (with quality at least this good) in real time using consumer hardware? By consumer hardware, I mean a PC (or other hardware) that anyone can buy for less than $10k (2023 dollars).
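To make the gap concrete, here is a rough back-of-the-envelope sketch of the arithmetic implied above. The 20-seconds-per-second figure comes from the question; the 24 fps frame rate and all function names are assumptions chosen for illustration only.

```python
# Figure quoted in the question: about 20 s of compute per 1 s of video (RTX 4090).
COMPUTE_SECONDS = 20.0
VIDEO_SECONDS = 1.0

# Assumption, not from the question: the video runs at 24 frames per second.
ASSUMED_FPS = 24.0


def real_time_factor(compute_seconds: float, video_seconds: float) -> float:
    """Compute time divided by video duration; 1.0 or less means real time."""
    return compute_seconds / video_seconds


def per_frame_ms(compute_seconds: float, video_seconds: float, fps: float) -> float:
    """Average compute time spent on each frame, in milliseconds."""
    return 1000.0 * compute_seconds / (video_seconds * fps)


def frame_budget_ms(fps: float) -> float:
    """Time available per frame if conversion must keep up with playback."""
    return 1000.0 / fps


if __name__ == "__main__":
    factor = real_time_factor(COMPUTE_SECONDS, VIDEO_SECONDS)
    current = per_frame_ms(COMPUTE_SECONDS, VIDEO_SECONDS, ASSUMED_FPS)
    budget = frame_budget_ms(ASSUMED_FPS)
    print(f"Required speedup: {factor:.0f}x")
    print(f"Current cost per frame: {current:.1f} ms")
    print(f"Real-time budget per frame: {budget:.1f} ms")
```

Under these assumptions, real-time conversion needs roughly a 20x speedup over the quoted baseline, whether that comes from faster hardware, faster models, or both.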

This question is managed and resolved by Manifold.

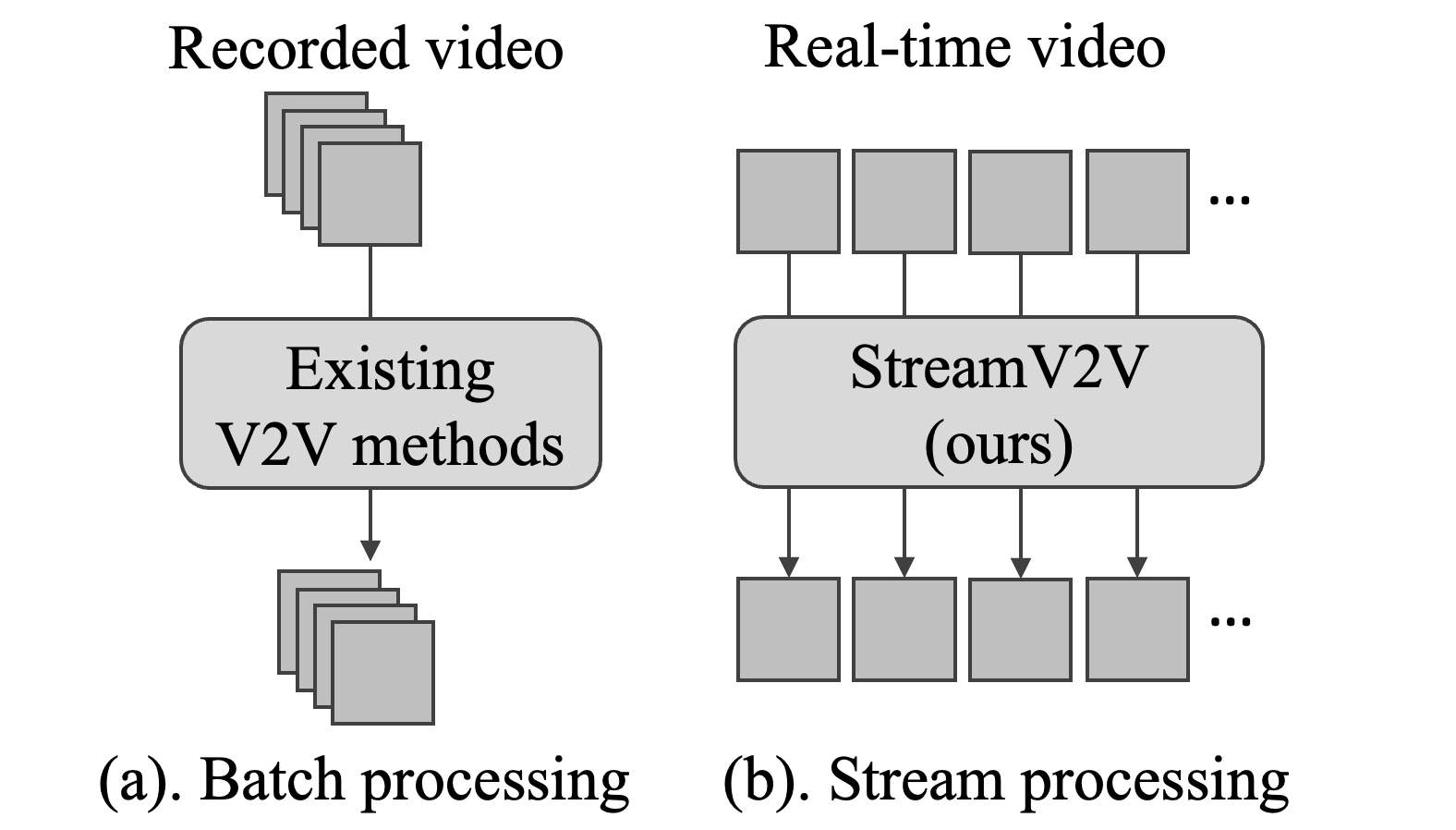

Going to resolve to 2024 based on this project: https://jeff-liangf.github.io/projects/streamv2v/ (unless there are objections).

Related questions

In what year will a GPT4-equivalent model be able to run on consumer hardware?

2026

Will we have high-quality real-time age transitions for video calls before 2026 ends?

74% chance

Will we see analog accelerators in consumer hardware by the end of 2026?

15% chance