This is just a poll for my own interest and isn't connected to any market.

@thepurplebull this raises the question of why the developers didn't fix it. I know it's a technically solvable problem (OpenAI, Anthropic, Midjourney and Meta already solved it a while ago), and Google can definitely afford to hire engineers who can do it. But they didn't, which implies some pretty severe dysfunction: either engineers who saw the issue couldn't raise it and QA also missed it, or they did see it but didn't realize how bad it would make them look. Neither of those is something a functional organization would have.

It would be more of a scandal (in the sense that more column inches would be dedicated to it) were the opposite true.

I'm not sure either is a particularly big problem in the scheme of 'problems with Gemini'.

I worry that data injection would allow targeted manipulation of what AI (and subsequently people, once humans start trusting AI blindly, cf. Wikipedia) believes historical figures looked like.

The real reason this is big isn't that it's a huge unfixable issue in itself; it's that it was their big product launch of the year and they didn't realize how badly it was going to go. That implies they have multiple serious issues in their internal pipelines.

@Snarflak Oops I misvoted. I meant to click 9 but I clicked 2. Wait no, I meant to click 2 but I clicked 9. Should be able to change it.

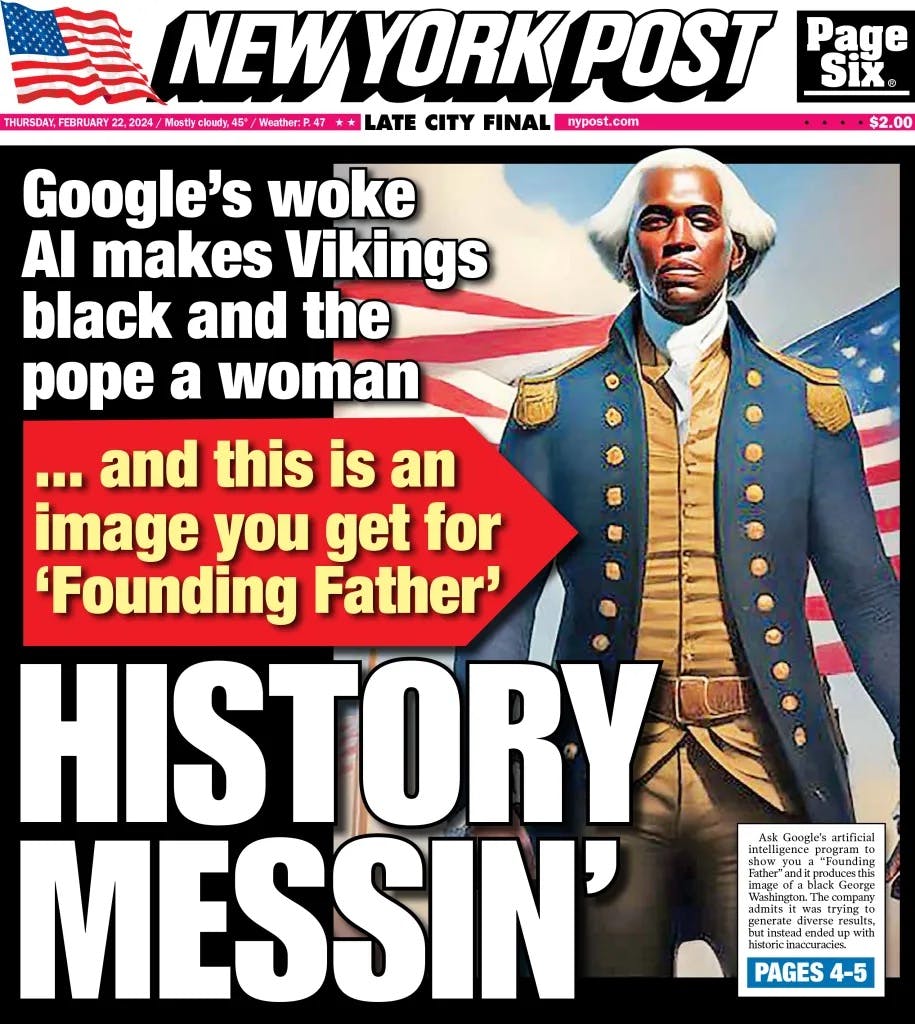

@ChrisEdwards See the banner for this market in full-screen mode: someone has been training (an) algo on woke content.

@ChrisEdwards See the banner; I also linked an article below. Essentially, Gemini's AI image generator never showed white people, and Musk has criticized it heavily on X. Google has turned it off for now. BTW, I have a market on when it'll be available again. #shamelessplug

https://www.reuters.com/technology/google-pause-gemini-ai-models-image-generation-people-2024-02-22/