Resolves "YES" if the 2024 Atlantic hurricane season meets a majority of the following criteria:

(1) Total economic damage estimate of at least $295 billion

(2) At least 17 named storms

(3) At least 10 hurricanes

(4) At least 6 major hurricanes

(5) At least 2 category 5 hurricanes

(6) At least 3,000 estimated deaths

(7) At least 224 units of Accumulated Cyclone Energy

Only storms occurring during hurricane season (June 1 - November 30) will count towards the total. Resolution may take into account reporting/estimates that become public after November 30 if necessary, as long as the reporting is of events occurring during hurricane season.

2017 was the costliest hurricane season on record thus far. The latest forecast from CSU (FORECAST OF ATLANTIC HURRICANE ACTIVITY FOR 2024 (colostate.edu)) suggests that 2024 might exceed it.


Final tallies:

(1) Second costliest season on record with about $220 billion in damages, but did not surpass 2017 on this front.

(2) 18 named storms, surpassed 2017

(3) 11 hurricanes, surpassed 2017

(4) 5 major hurricanes, just shy of the 6 majors in 2017.

(5) Beryl and Milton hit category 5

(6) Well short of 3000 estimated deaths, somewhere in the mid-hundreds depending on the source.

(7) 161.6 units of ACE.

The season hit 3 of the 7 criteria, so this resolves no.
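The tally above can be checked mechanically. This is only a minimal sketch: the thresholds and final values are taken from the description and the final tallies, except the deaths figure, where `500` is a hypothetical placeholder standing in for "mid-hundreds". The names `CRITERIA` and `resolve` are illustrative, not part of the market.

```python
# (name, threshold from the market description, final tally from the resolution comment)
CRITERIA = [
    ("damage_usd_bn", 295, 220),
    ("named_storms", 17, 18),
    ("hurricanes", 10, 11),
    ("major_hurricanes", 6, 5),
    ("category_5", 2, 2),
    ("deaths", 3000, 500),  # ASSUMPTION: placeholder for "mid-hundreds"
    ("ace", 224, 161.6),
]


def resolve(criteria):
    """Return the names of criteria that were met and whether a majority was met."""
    met = [name for name, threshold, value in criteria if value >= threshold]
    majority = len(criteria) // 2 + 1  # 4 of 7
    return met, len(met) >= majority


met, resolves_yes = resolve(CRITERIA)
print(met, "YES" if resolves_yes else "NO")
```

Any deaths value below 3,000 gives the same result, so the placeholder does not affect the outcome.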

In hindsight I am very happy with the approach I chose of framing the question around a significant number of highly legible criteria. My intent in doing so was to create a question that would be tradeable from early on based on the high level description (worse than 2017 or not), and would resolve consistent with my feeling about how bad it was relative to 2017, but without transitioning late in the season to a game where people have to guess my evaluation function. This was a success in my books -- this season felt pretty bad but not as bad as 2017, and if we had gotten one more major hurricane that hit land, it would have felt pretty close. If I had a change to make in hindsight, it would have been to make more of the criteria relate to the impact of the hurricane season on people, or landfalls, so that storms that form and dissipate over water without mattering much to anybody could not swing the outcome.

Increased bet to 20% (alone) based on criterion (4) being satisfied, i.e., future possibilities indicated by ensembles of a TC in the Caribbean developing into a major; in the 12Z ensembles ~20% of members (EPS, GEFS) have the potential disturbance in the Caribbean reach pressures consistent with cat. 3 (20% of EPS members also have it reach it by VMax alone).

@parhizj You are ruling out a cat 3+ forming in last week of November?

Suppose there is tropical depression forming on 30 November and in Dec strengthens to Cat 3, is this outside time limit set for this market or is season extended?

@ChristopherRandles Not worried about this resolution minutiae as the environment is becoming more and more unfavorable for such a scenario.

CPC puts TC formation < 20% for remainder of month (after a potential Sara). Conditioning on that becoming a major is less than 5% IMO.

@ChristopherRandles Nothing that occurs after November 30 will count towards resolution. Reporting from after November 30 can be used, only to the extent that it is describing something that happened November 30th or earlier.

Criterion (2) was satisfied with Rafael becoming a named storm... That leaves 1 more criterion to satisfy.

Additionally, Rafael is officially a major hurricane now (https://www.nhc.noaa.gov/text/refresh/MIATCPAT3+shtml/061755.shtml), giving us 5/6 major hurricanes, so nearly satisfying criterion (4).

This (4) seems the most likely of the remaining criteria to be satisfied (setting aside an unlikely scenario where Rafael satisfies (6), or (1) ends up being satisfied with later revised upward estimates from Helene/Milton).

Latest CPC extended forecast puts genesis probs in the Caribbean at 20-40% for mid-late November for each of the two individual weeks (until Nov 26). The current week's NHC probabilities are similar, yet such a storm seems especially unlikely to become a major given its development area and environment (too far north). So that leaves only the mid to end of November in the Caribbean, at 20-40% for each week...

Considering a climatological prior of ~9% for a major in the remaining month (from Nov 9-Nov 30), and that we might still have a decent environment in the Caribbean if another storm like Rafael forms (even though it will have cooled temps quite a bit, the OHC is very high in the Caribbean)... it seems the chances of another major hurricane for the remainder of the month are about 10-20%...

With subtropical storm Patty, it looks like Rafael will soon follow in the next few days, meaning criterion (2) is very likely to be satisfied (I'd put it at 75% currently; NHC has formation chances of a TC at 80%), to a likely total of 3/4 criteria being satisfied in the next few days.

This leaves only a single criterion of the required 4 left.

Even if Rafael becomes a major, it's unlikely we'll see a 6th major given the current extended forecasts for the remainder of the month. A potential Sara forming north of Puerto Rico does not seem a likely candidate, and climatologically I get a figure of about 8% (after the 9th) for a major hurricane (apart from Rafael). (I use 8% as an upper bound in the analysis below, but Rafael's chances of becoming a major mean this should be a lot lower.)

The economic damage estimates from Helene/Milton have been revised upwards on Wikipedia, so now the total is around ~$192 bn. Fatalities are reported as >375.

It seems unlikely that a potential Rafael (or further storms) will cause the devastating losses necessary to meet criteria (1) or (6), and it seems yet further unlikely that we will see revised estimates for earlier storms in the next 2 months that will cause these to be satisfied by the time this question resolves.

Forecasted tracks for a potential Rafael seem to have it impacting southern Cuba (potentially as a hurricane); they do have an ongoing crisis with blackouts and food shortages (https://en.wikipedia.org/wiki/2024_Cuba_blackouts) but it seems very unlikely for a hurricane Rafael to cause such losses given its current track.

Assigning minimal chances to the remaining criterion for damages/fatalities (with the exception of a major, and assuming Rafael does become a TS):

[0.01, 1, 1.0, 0.08, 1, 0.01, 0.01]

A simulation with these returns about 11%. Taking the actual 75% for (2) brings it down to 8%, but raising either (1) or (6) to even 5% brings it back up to about 11%. This might be too generous...
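Under the same independence assumption, the simulated figures above can also be computed exactly, without sampling noise. The sketch below is not the code used for the numbers in this comment; `prob_at_least_k` is an illustrative name, and it builds the distribution of the number of criteria met one criterion at a time.

```python
def prob_at_least_k(probs, k):
    """Exact P(at least k events occur), assuming the events are independent."""
    dist = [1.0]  # dist[j] = P(exactly j of the events processed so far occurred)
    for p in probs:
        nxt = [0.0] * (len(dist) + 1)
        for j, mass in enumerate(dist):
            nxt[j] += mass * (1.0 - p)   # this criterion is not met
            nxt[j + 1] += mass * p       # this criterion is met
        dist = nxt
    return sum(dist[k:])


base = [0.01, 1, 1.0, 0.08, 1, 0.01, 0.01]
k = len(base) // 2 + 1  # majority of 7 criteria = 4

with_75 = list(base)
with_75[1] = 0.75       # criterion (2) at 75% instead of certain

with_75_and_5 = list(with_75)
with_75_and_5[0] = 0.05  # criterion (1) raised to 5%

for label, probs in [("base", base), ("(2)=0.75", with_75), ("(2)=0.75, (1)=0.05", with_75_and_5)]:
    print(label, round(100 * prob_at_least_k(probs, k), 1), "%")
```

The three cases come out at roughly 11%, 8% and 11%, in line with the simulation results quoted above.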

We now have 10 hurricanes with Oscar so criterion (3) is now also satisfied.

This leaves me with the current probs for the criteria:

[0.19, 0.41, 1.0, 0.04, 1, 0.14, 0.01]

The next criterion most likely to be satisfied is >= 17 named storms as now with Nadine and Oscar being named this morning we are at 15.

After reading some further news reports and looking at various markets here on manifold, it seems the economic damages (and perhaps the fatalities) criteria probabilities I come up with seem too high subjectively, but since it's possible that I'm underestimating the chances of two major hurricanes perhaps this won't matter as much, so I've gone back to using the high end of my ACE model. Edit: This total economic damages criterion remains the greatest uncertainty. Wikipedia is still using some of the low end figures.

Oscar is not forecast to become a major and it has limited time to do so (perhaps 24-36 hours). After Oscar dissipates, that leaves the chances of a major at 4%; but if Oscar does become a major, this criterion jumps to 28% (leading to a simulation probability of 27%).

Presently, by my NHC advisory error notebook (from the 5pm advisory), Oscar has up to an 8% chance of becoming a major (it is likely less than this); from SHIPS and AI-RI from CIMSS these probabilities range from 12-20% (so I believe taking 8% might be ok). Weighting that 8% with the climatology chances, (0.92 * 0.04) + (0.08 * 0.28), yields 6% for 2 more major hurricanes. This only marginally affects the simulation probabilities for this question, increasing them from 15 to 16%.
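The weighting in the previous paragraph is the law of total probability over two scenarios (Oscar becomes a major, or it does not). A minimal sketch, with `mix_scenarios` as an illustrative helper name and the inputs taken from the figures quoted above:

```python
def mix_scenarios(p_scenario, p_if_scenario, p_otherwise):
    """P(event) = P(S) * P(event | S) + (1 - P(S)) * P(event | not S)."""
    return p_scenario * p_if_scenario + (1.0 - p_scenario) * p_otherwise


# 8% chance Oscar becomes a major; criterion (4) at 28% if it does, 4% if it does not.
p_criterion_4 = mix_scenarios(0.08, 0.28, 0.04)
print(round(p_criterion_4, 4))
```

This gives 0.0592, which rounds to the 6% used above.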

It's worth noting that the deaths estimate for 2017 (particularly, Maria) wasn't released until August 2018. To make a fair comparison you may need to wait a pretty long time to resolve (unless it's obvious that the number was far lower).

@SaviorofPlant Understood, but as clarified at the start of this comment chain, I will resolve sooner than that based on the best info available and my best judgement, to not tie up people’s mana for a whole extra year.

October 7th: Milton just upgraded to a category 5, but no impacts on land yet. This marks the first criterion passed -- Criterion number 5, at least two category 5 hurricanes.

(1) At least $50 billion in damages (primarily Helene, with measured damages from Helene seemingly likely to grow significantly)

(2) 13 named storms

(3) 9 hurricanes (Beryl, Debby, Ernesto, Francine, Helene, Isaac, Kirk, Leslie, Milton)

(4) 4 major hurricanes (Beryl, Helene, Kirk, Milton)

(5) 2 category 5 hurricanes (Beryl, Milton)

(6) 335 estimated fatalities

(7) Accumulated Cyclone Energy 113

@JakeLowery Milton becoming cat. 5 finally produced a huge update upwards in the criteria probabilities (increased probabilities for multiple criteria, but especially the cat 5 criterion and the 10 hurricanes criteria)

Since my (naive) damages model is based on ACE + current damages (and since the ACE forecast is now significantly higher, enough to make an above average season significantly more likely), it also increased the damages probabilities severely (this estimate has no knowledge of Milton's track, etc.). With Milton though this estimate is going to be very poor as the track uncertainty is large enough to tilt it (there are some worst case scenarios damage-wise, where Milton is record-breaking (even after accounting for inflation)).

Question probs:

[0.71, 0.18, 0.73, 0.13, 1, 0.13, 0.01]

With these it is about 22% now.

To consider a worst-case scenario, taking economic damages as being met as a given (it is unknown what the damages from a worst-case Milton landfall at Tampa might be) yields upwards of 30%. It is unknown what the fatalities from Milton will be, but I am hopeful it won't be severe enough to reach Katrina levels given the adequate warning this time around.

Edit:

"

Siffert of insurance and reinsurance broking group BMS explained, “Forecast models show uncertainty in Milton’s exact landfall, but its intense winds, possible Category 5 strength later tomorrow, and weakening but widening wind field raise concerns about immense industry losses.

“The damages have the potential to be between $10 billion to $100 billion depending on the wide range of scenarios that now heavily depend on track and intensity forecasts at landfall.”

"

(I imagine this might be an underestimate in a worst-case scenario but I don't have access to the Florida insurance model.)

There are several reasons to update now, yet despite the shifting probabilities... I don't find a compelling reason to change my bet significantly.

For Helene: There is still some uncertainty about the fatalities, which could be raised significantly more (however, this is one scenario where I can't gauge the uncertainty at the moment to warrant increasing it arbitrarily -- this uncertainty could be revealed by a well crafted market).

With relation to Accuweather's estimates (that they keep raising): as they are a combined damages and economic losses figure, it's hard to judge what contribution is just damages. Wikipedia cites the other figure (popularly cited), which is Moody's very early estimate for damages that is substantially lower. This could change with further damage estimates in the months to come. However, a faithful damage estimate will take years as it will take a long time to document all the damage. With regard to this market, it still does not seem likely to affect the timing of its resolution.

For the other criteria (after some work on a related question), I look speculatively into the next 10 days to come up with high-end probabilities, using EPS ensembles for the chances of a hurricane (there is an Invest, but still no regional model data) and a super-ensemble for the named storms. We have a disturbance in the Caribbean that has an 80% chance of development (now an Invest), and another potential candidate mentioned in the NHC TWO in the East Tropics. Between the two, the highest probability I could assign is about 60% for a hurricane at the moment, but the real probability might be much lower, like ~20-30%. From GEFS/00Z it looks like there is a 20% chance it won't become a full TC (this is essentially the same as the chances in the TWO), and this affects the chances of it becoming a hurricane to an even greater degree. For these two criteria, I weight by the scenarios of 0-2 additional storms and hurricanes, using climatology to fill in the rest of the year (as usual).

This increases the chances of >=10 hurricanes as an upper probability of about 62%. A related market https://manifold.markets/ScottSupak/how-many-hurricanes-in-the-atlantic?play=true is consistent with this. If for instance, no Hurricane develops in the next 10 days, this 62% becomes ~35% by my estimates.

Similarly, but slightly less speculatively, the chances for the number of named storms >= 17 have also increased significantly to 16%.

The fatalities criterion has jumped to 30% based on an estimated number of deaths that is significantly higher now with Helene's updated numbers and due to my naive model taking in a higher than previously forecast ACE for the season. (This is due largely to Kirk, which will add a significant amount of ACE (perhaps ~25-28 ACE) by the time it becomes post-tropical, and to a lesser degree, the forecasted contributions of Leslie in the period.)

Criteria probabilities (0 to 1):

[0.02, 0.16, 0.62, 0.03, 0.09, 0.30, 0.01]

This gives a final probability of 0.6% now through simulation, which is no longer nil. (While still based on some upper probabilities for a speculative forecast).

It is useful at this point to once again go back to the most uncertain figure (the economic damages), and consider a couple scenarios. A) taking the latest $250 billion as an estimate (even though it's not a pure damages estimate) as an upper bound. This raises the probability of criterion 1 to 0.76. B) Just taking Helene as being unprecedented in damages and raising the damages so that criterion 1 is satisfied by it alone, so we just take a probability of 1 for criterion 1.

For these scenarios this raises the final probability (rounding) to between ~5-6%.
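The sensitivity check above can be reproduced by swapping only the criterion (1) probability and recomputing. The sketch below is an illustration rather than the notebook behind these numbers: it enumerates all 2^7 outcomes, which is exact under the same independence assumption as the simulation, and `majority_probability` is a hypothetical name.

```python
from itertools import product


def majority_probability(probs):
    """Exact P(a majority of criteria are met), assuming independent criteria."""
    k = len(probs) // 2 + 1
    total = 0.0
    for outcome in product((0, 1), repeat=len(probs)):
        if sum(outcome) >= k:
            weight = 1.0
            for hit, p in zip(outcome, probs):
                weight *= p if hit else (1.0 - p)
            total += weight
    return total


baseline = [0.02, 0.16, 0.62, 0.03, 0.09, 0.30, 0.01]
scenarios = {"baseline": 0.02, "A ($250bn upper bound)": 0.76, "B (criterion 1 given)": 1.0}

results = {}
for label, p1 in scenarios.items():
    results[label] = majority_probability([p1] + baseline[1:])
    print(label, round(100 * results[label], 1), "%")
```

The outputs are close to 0.6% for the baseline and roughly 5% and 6% for scenarios A and B, consistent with the range given above.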

As the probabilities have shifted from nil to between 0.6% - 6% for upper probabilities it makes sense to me to sell a few shares.

Updating on Helene doesn't shift the probabilities for this market on the economic damages or fatalities criteria (at least 64 deaths), despite it being given $22 bn- $34 billion assuming this is relatively accurate: https://www.theinsurer.com/news/moodys-analytics-says-helene-like-idalia-but-worse-with-cost-estimated-at-up-to-34bn/

Even taking the $34 billion, which brings the total to ~$45 bn, the model projection puts 2024 finishing near $64 bn, seemingly a long way from $295 billion (i.e., it would require almost two Katrina-level disasters on top of that with only 30% of the season remaining).

The probability I currently get for economic damages is much lower than seems reasonable compared to last time, and subjectively seems too low. To see how sensitive my analysis is, I add $125bn as a fudge number to the $34bn and see what probability I get for the economic damages (imagining, for instance, that a disturbance in the Caribbean indicated in the TWO becomes Leslie and imitates something similar to Katrina in the next couple of weeks). The probability then shifts up to 15% (even with this the simulation probability is still near nil). Given the Caribbean is still very warm, the probability of another Helene or even Katrina does not seem to be as low as what I currently have (we do have some potential in the models for a hurricane), so I am going to cap it for now (somewhat arbitrarily) at 1% (at least until the TWO chances drop), as 0.0006% seems too low.

The biggest shift is in the number of hurricanes (now 16%), after Helene and Isaac. Isaac nearly made it to cat. 3 which would have shifted the probabilities for major substantially. On a speculative note, there is an invest 90L in the MDR that has some chances to become a hurricane (and presently, some small chances to become a major hurricane).

Criteria probabilities (each normalized to 1):

[0.01, 0.06, 0.16, 0.01, 0.14, 0.004, 0.01]

The simulation still gives near nil chances for this resolving YES.

Following along the analysis for the scenario I mentioned earlier (of a Leslie that imitates Katrina), I recalculate the probabilities for the other criteria (excluding ACE as I don't believe that will go above 1% for the rest of the year); this assumes that the fatalities criteria are met automatically under this Katrina like scenario, and the same for the category 5 criteria (this is a very pessimistic scenario in this sense), yielding these criteria probabilities

[0.15, 0.14, 0.35, 0.06, 1, 1, 0.01]

This increases the simulation chances to (almost) ~14%. This seems rather high, but remember this probability assumes such a scenario happens, so you must multiply it by the chances of a Katrina-like scenario actually happening -- including both the entailing $125bn in damages and 3000 deaths. Even if you assign such a scenario a probability as high as 10% or 20% (i.e. imagining that with the effects of climate change the historical and climatological probability becomes less relevant?), that still only yields a final probability of ~1-3%.

I don't know how you could establish such a high probability (or even higher) but maybe someone can (it is also possible we have been "lucky" this year with the lack of genesis events and the track of Helene); i.e., one can say climate change made SST along Helene's RI (multitudes?) more likely (not in linear proportion to the change in SSTs). Edit: something not accounted for was that the other probabilities for number of named storms/hurricanes/etc still relies on a climatology (1991-2023) that might systematically underestimate the other dependencies if we are in a new regime....


@JakeLowery Edit: I redid my calculations and found one error in the largest probability (3) and reduced it quite a bit...

I get the following probabilities now for (1)-(7), adding in the forecast for Francine from IVCN model for ACE (about 5 more ACE), and assuming it will be only a category 2 (per NHC forecast)

[0.004, 0.11, 0.2, 0.03, 0.24, 0.067, 0.03]

This still yields about ~0.1 % chance

If you assume Francine becomes a cat 3 that 0.03 probability for major hurricanes criterion becomes ~ 0.09, and the overall probability increases to ~0.2% chance

@parhizj nice work. It is amazing how calm it was for the long stretch in August until now, it really seems like it would take something out of distribution to cause a yes here.

[0.003, 0.02, 0.06, 0.01, 0.19, 0.018, 0.01]

Criteria point probabilities for the market criteria (above) are from 0-1, in case I didn't make that clear before. Another simulation run still puts the chances for the question resolving YES near nil (edit: 0.002 %). I still have low confidence in the economic damages and fatalities probabilities, as the NW Caribbean-GoM has enough heat potential right now to fuel a monster storm and my model for these is very naive.

Updating since Francine never made it to cat. 3, and it's been 10 days. It did increase the season damages to $10.56 billion (per wikipedia), and the estimate from Francine is about a billion dollars (as I guessed).

Criteria (2), and (3) probabilities have dropped quite a bit.

Notably, the highest percentage for any of the seven criteria now I assign is still the chances of another category 5 at ~19 %.

For reference these are the actual numbers I get from my climatology notebook (in percents), considering 9-20 onwards (the named storms might have a rather generous spread though; first line is a binning of named storms for a different question):

(Climatological Percentages)

{(0, 10): 29.607277087493394, (11, 15): 64.88047116170911, (16, 20): 5.466125195601541, (21, 25): 0.04605153232142377, (26, 30): 7.49879760420636e-05, (31, 35): 3.4892567217111125e-08, (36, 999): 5.933112653585071e-12}

>= 17 named storms {(17, 99): 2.477131144904349}

>= 10 hurricanes {(10, 99): 6.14720234090144}

>= 6 major hurricanes {(6, 99): 0.7282606274721027}

>= 2 cat 5 hurricanes {(2, 99): 19.11333528179103}

I've clamped them to a minimum of 1% but some of them are lower (such as criterion (7) -- the ACE probability, which I think is still much lower than 1%).
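As an illustration of the clamping step, the climatological percentages listed above can be converted to 0-1 probabilities with a 1% floor. This is a sketch only; the dictionary keys and the `FLOOR` name are illustrative and the notebook itself is not shown in this thread.

```python
# Climatological exceedance percentages quoted above (percent, from 9-20 onwards).
climatology_pct = {
    ">= 17 named storms": 2.477131144904349,
    ">= 10 hurricanes": 6.14720234090144,
    ">= 6 major hurricanes": 0.7282606274721027,
    ">= 2 cat 5 hurricanes": 19.11333528179103,
}

FLOOR = 0.01  # minimum probability assigned to any criterion (1%)

# Convert percent to probability, then apply the floor.
clamped = {name: max(pct / 100.0, FLOOR) for name, pct in climatology_pct.items()}

for name, p in clamped.items():
    print(name, round(p, 3))
```

Only the major-hurricane figure falls below the floor here; the other three pass through unchanged.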

This is one of the trickier markets to get a good estimate given its complexity, so I've put it off. Tackling it piecemeal with some sketchy analysis...

(Dated) forecasts from different groups had a range of values on the high end. I'm going to rely on a 1991-2023 climatological baseline, conditioned on the remaining days, when I can, instead of those dated forecasts. Where I have the sketchiest of data, I take the high end.

(1) I only have the sketchiest of models, which puts the exceedance probability at 2% on the high end (using my ACE forecast, wikipedia data, and some basic modelling -- modeling that doesn't explain most of the variance), with a middle value of <<1%. A far better model for this and (7) would rely on expected storm surge, or other variables using some other predictor or more realistic complicated model.

(2) I think this is no longer likely to be met given the next month might be "normal" or even relatively quiet. Moreover, climatologically (1991-2023) I get 5% for >= 17 named storms.

(3) I put this at 54% (this is too precise but it's a rough guess).

(4) 6% (we are only 1/6 of the way there with only Beryl).

(5) 28%, given we already have had Beryl this is not as bad as (4)

(6) Similar to (1) a very sketchy model, putting the exceedance probability at 15% on the high end, with a middle value of 1%

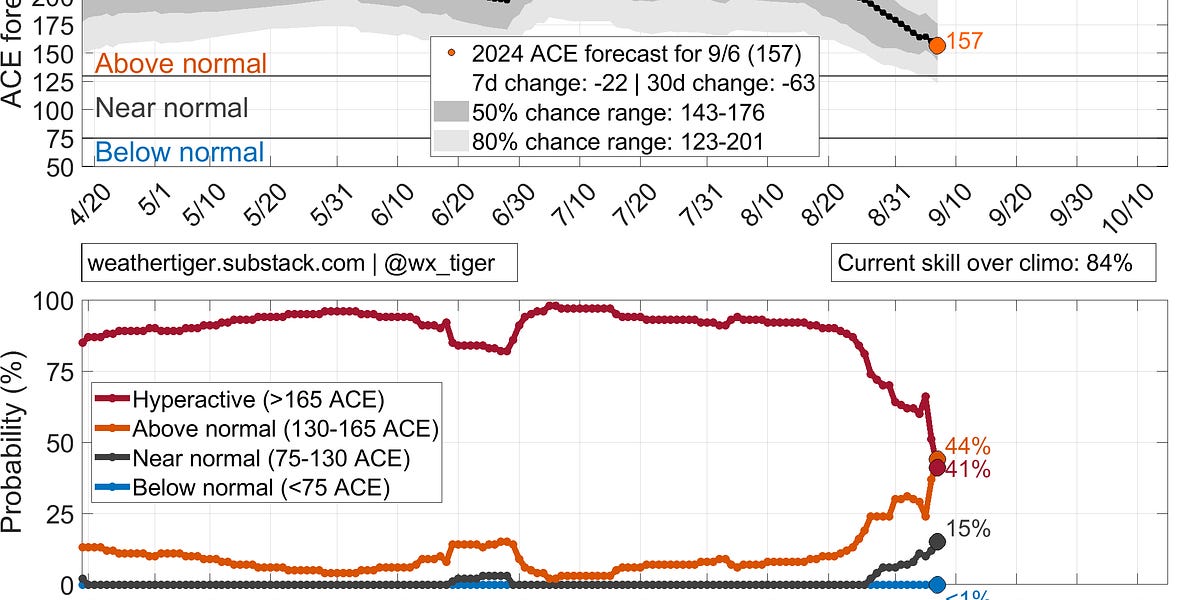

(7) 224 ACE seems very unlikely. Even https://weathertiger.substack.com/p/real-time-2024-atlantic-hurricane yields a value presently ~ 5%. My own climatological notebook puts it around 125 ACE (224 ACE would be ~ 0% in my notebook). So I will use a value of ~ 3% (average of between these two estimates).

that gives the probabilities for (1)-(7):

[.02, 0.05, 0.54, 0.06, 0.28, 0.15, 0.03]

Given the logistic nature of the question, I treat each criterion as a random, independent binomial trial with the above probabilities and run a number of simulations, thresholding on the sum of the trials in each simulation to get a result for the simulation. This assumes the independence of the criteria, which is itself a bit sketchy. Code below for reference if anyone wants to try different probabilities.

From the below code for the above probabilities I get <1%. It's hard for me to say at face value whether it is the extremeness of the 2017 season or the conjunction of the very specific criteria (potentially giving 2017 a very narrow profile in the probability space) that is contributing to the low probability, as none of the PDFs for the criteria (2)-(6) are going to be linear. If someone could answer this question by plugging in a range of reasonable values to the simulation below maybe they could come up with an answer.

For reference as a sanity check, if we assume (1) and (6) as a given by setting them to 1, which are the sketchiest probabilities, we get ~28% back, which makes sense as the lower of the remaining two highest criteria (5).

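    import numpy as np

    def calculate_prompt_probability(criteria_probabilities, num_simulations=1000000):
        """Monte Carlo estimate of P(a majority of criteria are met), assuming independence."""
        N = len(criteria_probabilities)
        k = N // 2 + 1  # majority threshold
        # One 0/1 draw per criterion per simulation; the probabilities broadcast across rows
        simulations = np.random.binomial(1, criteria_probabilities, size=(num_simulations, N))
        # A simulation counts as YES when at least k criteria are met
        majority_met = np.sum(simulations, axis=1) >= k
        return np.mean(majority_met)

    question_probs = [0.02, 0.05, 0.54, 0.06, 0.28, 0.15, 0.03]
    print(calculate_prompt_probability(question_probs))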

@parhizj I love the detailed analysis, but surely the probabilities are not close to independent, right?

@JakeLowery Right. I did say that itself was another assumption when treating them as independent bernoulli trials and combining them.
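One way to probe the independence assumption is to make the criteria positively correlated through a shared latent factor. The sketch below uses an equicorrelated Gaussian copula, which is a swapped-in technique and not something used elsewhere in this thread. The function name, the single correlation parameter `rho`, and the value 0.9 are all assumptions for illustration. Each criterion keeps its marginal probability; only the dependence changes.

```python
import numpy as np
from statistics import NormalDist


def correlated_majority_probability(probs, rho, num_simulations=200_000, seed=0):
    """Monte Carlo P(majority met) with equicorrelated latent normals (0 <= rho < 1).

    Probabilities must lie strictly between 0 and 1 for the inverse CDF to exist.
    """
    rng = np.random.default_rng(seed)
    probs = np.asarray(probs, dtype=float)
    # Criterion i is met when its latent normal falls below this threshold,
    # which keeps its marginal probability equal to probs[i].
    thresholds = np.array([NormalDist().inv_cdf(p) for p in probs])
    shared = rng.standard_normal((num_simulations, 1))          # season-wide factor
    own = rng.standard_normal((num_simulations, probs.size))    # criterion-specific noise
    latent = np.sqrt(rho) * shared + np.sqrt(1.0 - rho) * own
    met = latent < thresholds
    k = probs.size // 2 + 1
    return float(np.mean(met.sum(axis=1) >= k))


question_probs = [0.02, 0.05, 0.54, 0.06, 0.28, 0.15, 0.03]
independent = correlated_majority_probability(question_probs, rho=0.0)
correlated = correlated_majority_probability(question_probs, rho=0.9)
print(independent, correlated)
```

With `rho=0.0` this reduces to the independent case; raising `rho` increases the estimate, so the independent figure can be read as a low-end value if the criteria tend to move together.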

@JakeLowery I'm open to ideas on how to recombine them if anyone else has any... Without making that assumption of independence which is required in trying to combine them simply, I think it makes sense to also just take the lowest probability of the highest 4 probabilities to get an upper probability (since that must be met in order to meet the majority criteria). That would give an upper probability of 6% among the highest four (54%, 28%, 15%, 6%).

Edit: a downside of this is that the estimate of the probability is only as good as ALL of the piecewise probabilities you come up with... otherwise you won't get the correct '4th' probability.
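A sketch of that idea in code, with one caveat worth labeling: the 4th-highest probability is the exact answer when the criteria are perfectly nested (a less likely one is met only if every more likely one is), but it is not a strict ceiling under arbitrary dependence, since the majority could also be reached through one of the three least likely criteria. The second function below computes a bound that holds for any dependence, by applying Markov's inequality to the count of criteria met after setting aside the `j` most likely ones. Both function names are illustrative.

```python
def nested_estimate(probs, k):
    """k-th highest probability: exact if the criteria are perfectly nested."""
    return sorted(probs, reverse=True)[k - 1]


def dependence_free_upper_bound(probs, k):
    """Upper bound on P(at least k criteria met) that needs no dependence assumption.

    If at least k criteria are met, at least k - j of those outside the top j
    are met, so Markov's inequality gives sum(rest) / (k - j) for each j < k.
    """
    ordered = sorted(probs, reverse=True)
    best = 1.0
    for j in range(k):
        best = min(best, sum(ordered[j:]) / (k - j))
    return best


question_probs = [0.02, 0.05, 0.54, 0.06, 0.28, 0.15, 0.03]
k = len(question_probs) // 2 + 1

print(round(nested_estimate(question_probs, k), 3))
print(round(dependence_free_upper_bound(question_probs, k), 3))
```

For these inputs the nested estimate is 0.06 and the dependence-free bound is about 0.155, so the two figures bracket how much the treatment of dependence matters.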

August 26th update:

It has been a very quiet couple of weeks -- estimated casualties have climbed to 88, with no other meaningful activity to note. However, meteorologists noted shifting weather patterns that point towards a big acceleration in storm activity for September: Supercharged September: Atlantic hurricane season to intensify dramatically (accuweather.com)

August 15th update:

At least $8.915 billion in damages (mainly from Beryl and Debby)

5 named storms (latest: Ernesto)

3 hurricanes (Beryl, Debby, Ernesto)

1 major hurricane (Beryl, although Ernesto is threatening to get there)

1 category 5 hurricane (Beryl)

85 estimated fatalities

Accumulated Cyclone Energy 44 (35.1 of which from Beryl, source Real-Time North Atlantic Ocean Statistics compared with climatology (colostate.edu))