https://www.lesswrong.com/posts/e8r8Tx3Hk7LpnrMwY/a-bet-on-critical-periods-in-neural-networks

A bet is made in this post. See post for operationalisation.

The question resolves to "other" if the bet is cancelled, if no clear winner is announced, etc.

🏅 Top traders

| # | Name | Total profit |
|---|---|---|
| 1 | | Ṁ337 |
| 2 | | Ṁ83 |
| 3 | | Ṁ3 |
| 4 | | Ṁ2 |


Update:

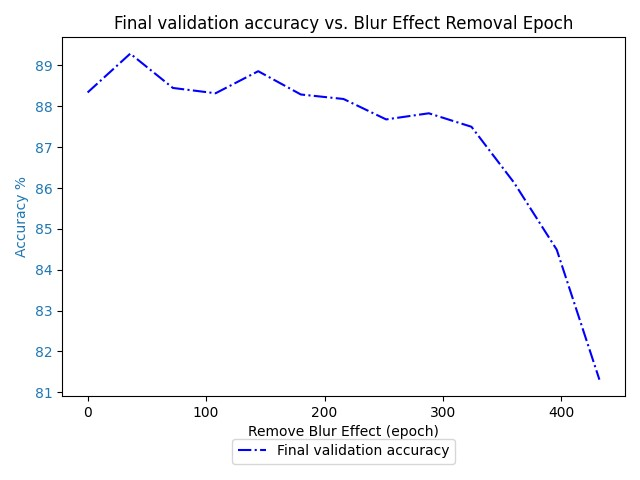

I'm currently not finding much evidence of any phase change for the batch size of 64.

There is a stark decrease at 400, but I don't know whether it would persist if I increased the number of epochs to 800.

To speed things up, I also reconfigured how training works, so I'm now verifying that we still get a phase transition with a batch size of 128. After that, I think I'm going to increase the batch size instead of decreasing it so that results come in quicker, because the plot above took 9 hours of training to make.