Will softmax_1 solve the 'outlier features' problem in quantization?

Resolved: NO (Jan 1)

6H

1D

1W

1M

ALL

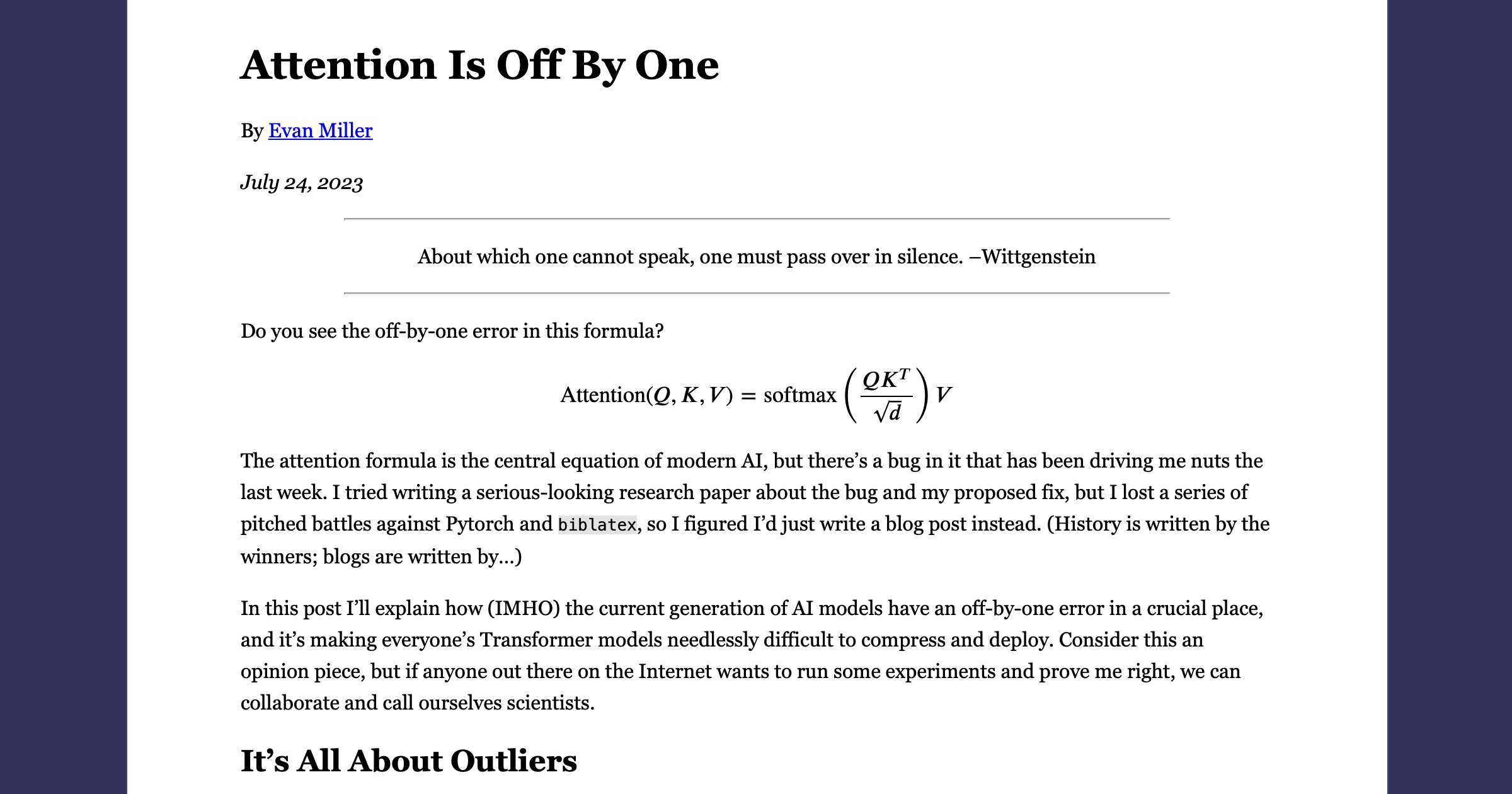

See this blog post: https://www.evanmiller.org/attention-is-off-by-one.html, and in particular this paragraph:

> Even though softmax_1 is facially quite boring, I’m 99.44% sure that it will resolve the outlier feedback loop that’s making quantization the subject of cascades of research. If you want to run some experiments and prove me right, DM me on Twitter and we’ll get a paper going.
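
For reference, the linked post defines softmax_1 as ordinary softmax with 1 added to the denominator, exp(x_i) / (1 + Σ_j exp(x_j)). This lets an attention head put near-zero total weight on every token instead of being forced to distribute weights that sum to 1. The sketch below is a minimal, illustrative pure-Python version (the function names `softmax` and `softmax_1` are our own, not from any library). It is not an attention implementation and says nothing about how the question resolved.

```python
import math
from typing import Sequence


def softmax(x: Sequence[float]) -> list[float]:
    """Standard softmax: exp(x_i) / sum_j exp(x_j). Outputs always sum to 1."""
    m = max(x)  # shift by the max logit so exp() cannot overflow
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_1(x: Sequence[float]) -> list[float]:
    """softmax_1 as described in the linked post: exp(x_i) / (1 + sum_j exp(x_j)).

    Equivalent to appending an extra logit fixed at 0 and discarding its output,
    so the returned weights may sum to less than 1. Expects a non-empty input.
    """
    # Shift by m = max(max(x), 0): numerator and denominator are both multiplied
    # by exp(-m), so the implicit "1" in the denominator becomes exp(-m).
    m = max(max(x), 0.0)
    exps = [math.exp(v - m) for v in x]
    denom = math.exp(-m) + sum(exps)
    return [e / denom for e in exps]


print(softmax([0.0, 0.0]))    # [0.5, 0.5]
print(softmax_1([0.0, 0.0]))  # [0.3333333333333333, 0.3333333333333333]
# With all logits very negative, softmax_1's total weight approaches 0,
# whereas standard softmax would still return weights summing to 1.
print(sum(softmax_1([-10.0, -10.0, -10.0])))  # roughly 1.4e-4
```

The only design choice here is the stability shift. Using max(max(x), 0) rather than max(x) keeps the implicit extra logit of 0 inside the same shifted frame, so large inputs such as `[1000.0, 1000.0]` give `[0.5, 0.5]` without overflow.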

This question is managed and resolved by Manifold.
