Most training run compute greater than 2e27 FLOP by EOY 2026?


Ṁ1k · Ṁ2.4k · Dec 31

91% chance


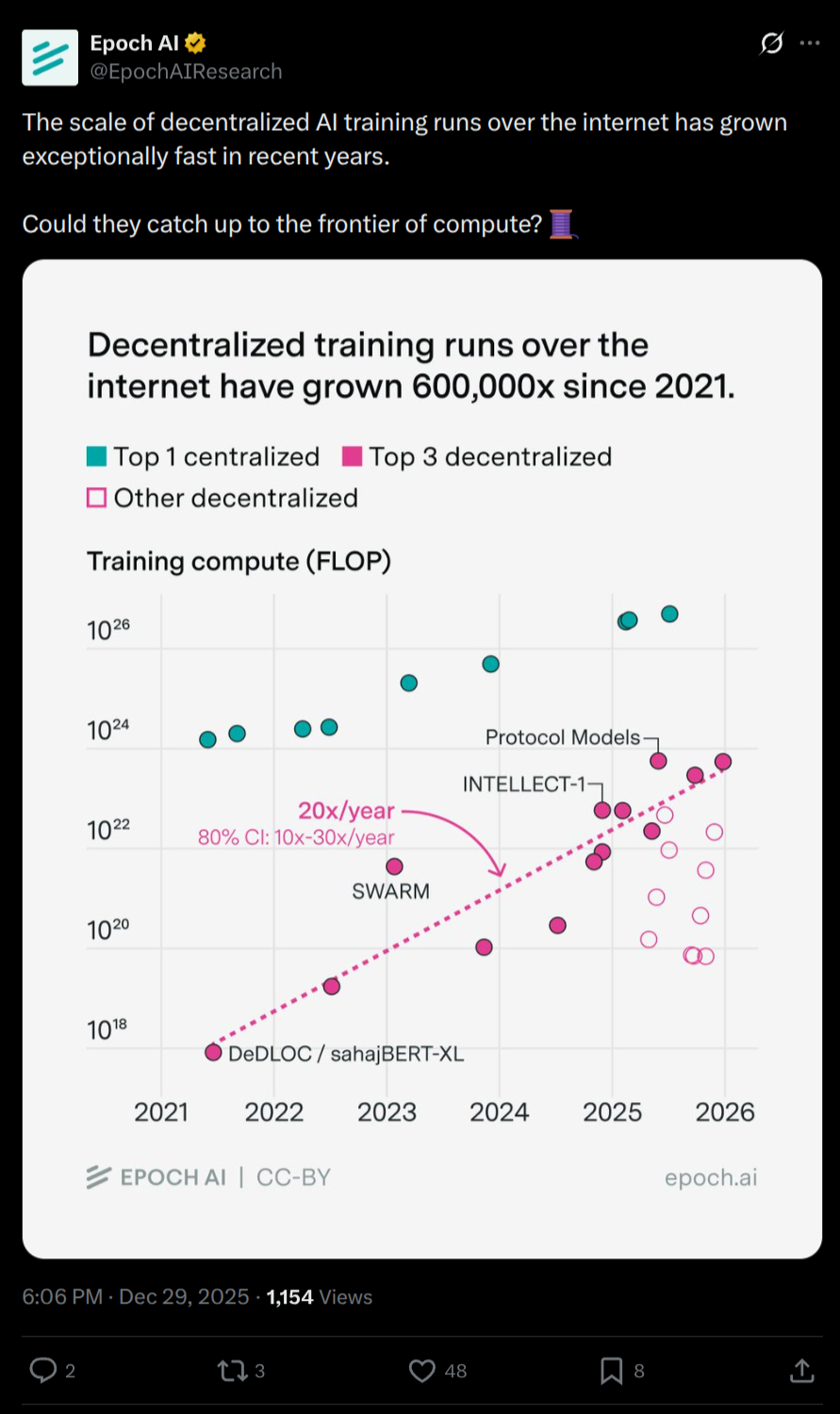

See https://epoch.ai/trends#compute. At market creation, the most recent update put Grok 3 at 5e26 FLOP.

This market will resolve according to Epoch's estimate if one is available when the market closes. If it is unavailable, the most sensible estimate at that time will be used.
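As a rough illustration of the threshold arithmetic, here is a minimal sketch assuming the title's "greater than 2e27 FLOP" is read as a strict inequality. The function names are hypothetical, and the only figures used are the 2e27 threshold and the 5e26 Grok 3 estimate quoted above.

```python
# Sketch only: names are illustrative and not part of Manifold or Epoch tooling.
# Integers are used so the arithmetic is exact.
THRESHOLD_FLOP = 2 * 10**27        # 2e27 FLOP, from the market title
GROK3_ESTIMATE_FLOP = 5 * 10**26   # 5e26 FLOP, estimate at market creation


def resolves_yes(largest_run_flop):
    """Assumed reading of the title: strictly greater than the threshold."""
    return largest_run_flop > THRESHOLD_FLOP


def required_multiple(current_flop):
    """How many times larger than current_flop a run must be to reach the threshold."""
    return THRESHOLD_FLOP / current_flop


if __name__ == "__main__":
    print(resolves_yes(GROK3_ESTIMATE_FLOP))       # Grok 3 alone does not clear the bar
    print(required_multiple(GROK3_ESTIMATE_FLOP))  # scale-up factor needed over Grok 3
```

Under these assumptions, a run would need to be more than 4 times the Grok 3 estimate to resolve the market YES.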

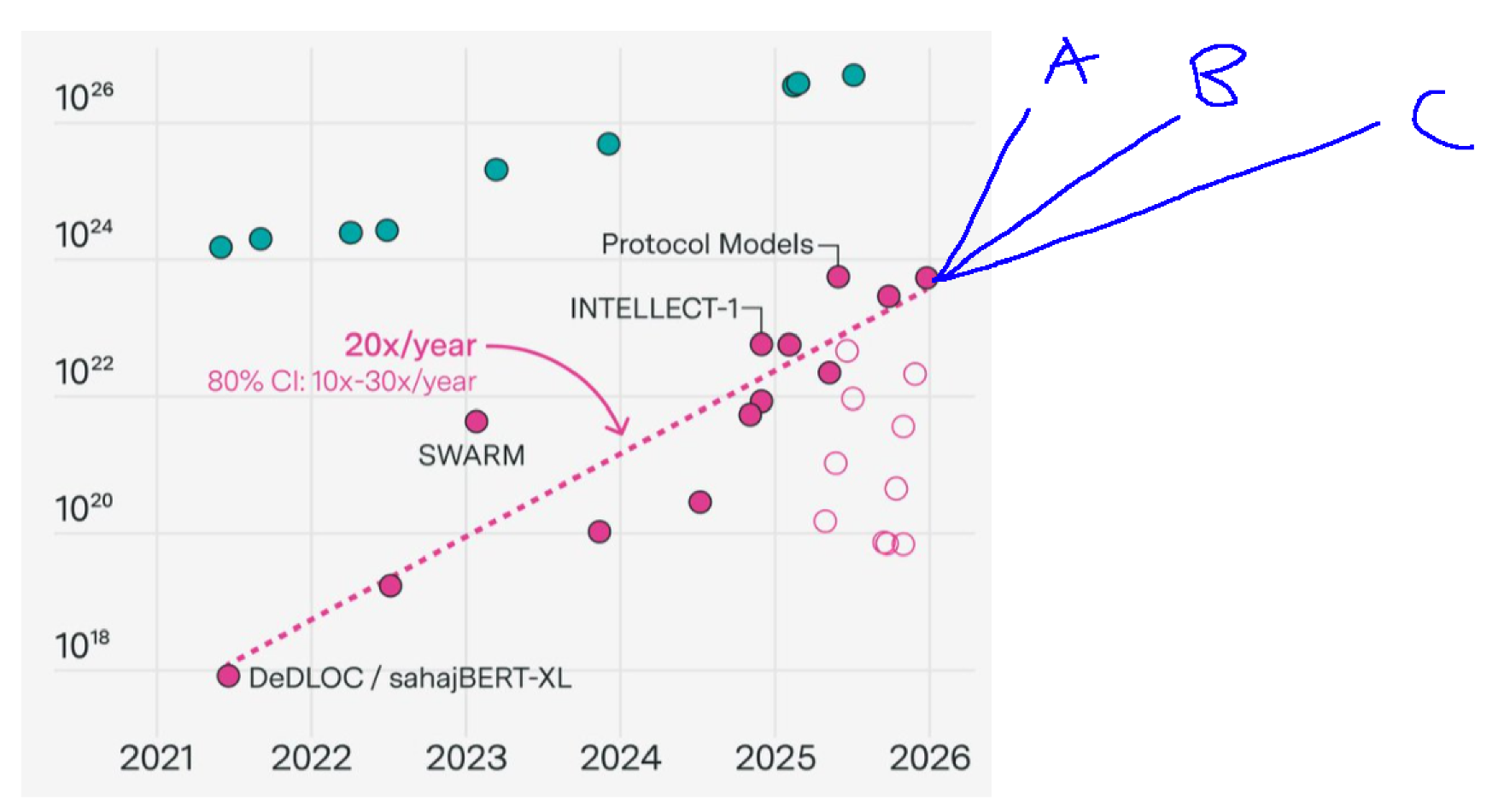

Update 2025-12-29 (PST) (AI summary of creator comment): Distributed training runs are not relevant to this market at 2e27 FLOP - only centralized training runs count.

This question is managed and resolved by Manifold.


People are also trading

By the end of 2030, will a 2e29 FLOP training run have ever taken place?

76% chance

Will a machine learning training run exceed 10^27 FLOP in China before 2028?

44% chance

Will a machine learning training run exceed 10^26 FLOP in China before 2027?

86% chance

Will a machine learning training run exceed 10^25 FLOP in China before 2027?

82% chance

Will a machine learning training run exceed 10^26 FLOP in China before 2028?

85% chance

Will a machine learning training run exceed 10^27 FLOP in China before 2030?

79% chance

Will a machine learning training run exceed 10^26 FLOP in China before 2029?

82% chance

Will a machine learning training run exceed 10^30 FLOP in China before 2035?

34% chance

Will the largest machine learning training run (in FLOP) as of the end of 2035 be in the United States?

69% chance

Will the largest machine learning training run (in FLOP) as of the end of 2040 be in the United States?

46% chance


@Dulaman this is completely implausible. they are 1000x behind at least and that doesn't even include the interconnect disadvantage. it's completely incomparable
