CA1047 is an "AI safety" bill in the state of California.

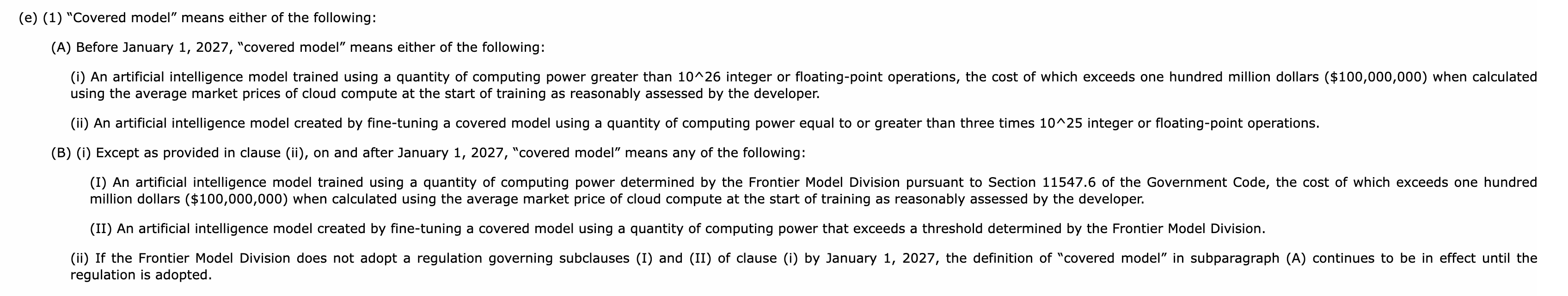

It applies (in its current state) to models trained with > $100 million worth of compute and > 10^26 FLOPs. (Because the price of compute is constantly dropping, this mostly just means -- models trained with > $100 million worth of compute.) Let's call the models to which it applies "covered models."

Under CA1047, the makers of covered models can be held liable for various "catastrophic risks" caused by their models.

(CA1047 also applies to models fine-tuned with much less compute, in a currently-indeterminate way.)

Advocates of CA1047 say that it will be a simple and comparatively low-cost task to become reasonably sure that covered, open-sourced models will avoid "catastrophic risk." Thus, they say, the bill does not amount to an effective ban on open source models trained with > $100 million in compute.

On the other hand, those less positive towards the bill think that it does amount to an effective ban on open source models trained with > $100 million in compute, because it exposes you to indeterminate liability for releasing one.

One way to operationalize this is: will we see a cluster of open source models released to be just beneath the cutoff point for liability?

If so, that's evidence that the bill amounts to an effective ban.

If, on the other hand, there's a continuous spread of models across the scale, that's evidence against the bill amounting to an effective ban.

These are hard-to-specify resolution criteria -- so I'll just give examples:

I would resolve positively:

- If Meta releases several models juuust beneath the cut-off point in continuation of their Llama strategy, and none above it.

- If we see a general trend of open-weight model releases just beneath the cut-off point, or people casually mention in papers that they have trained to 90% of the limit

- If there are 4x more open-weight models just below the cut-off point than there are open-weight models above it

I would resolve negatively:

- If Meta or some other organization keeps scaling up model releases and simply passes through the $100 million limit while following a trend-line

- If there are a handful of open-weights models just beneath the cut-off point, but still > 1x as many open-weights models above it

I would resolve N/A:

- Scaling-up stops, no open-weights models are released anywhere near this, Llama 405b is never exceeded.

- People figure out some more elegant compositional way of combining LLMs, and the giant scaling-up is replaced by some such composition

- CA1047 is changed yet again and there's no longer a real sense in which it applies to $100m models only

- The Foundation Model Division changes their criteria in a way I do not currently foresee, such that it applies to models trained with < $100m, etc.

(Am open to adding further examples / taking suggestions to clarify cut-off points.)
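
To make the ratio-based examples above a bit more concrete, here is a rough Python sketch. Everything in it is an assumption for illustration: the function name, the 90% band for "just beneath" (borrowed loosely from the "90% of the limit" example), and the idea that a dollar training cost is known for each open-weight model. It is not the actual resolution procedure, which stays a judgment call.

```python
LIMIT_USD = 100_000_000     # cost cut-off discussed above
JUST_BELOW_FRACTION = 0.9   # assumption: "just beneath" = within 90-100% of the limit


def sketch_resolution(open_weight_costs_usd):
    """Classify estimated training costs (USD) of open-weight models.

    Hypothetical helper; only mirrors the ratio-based examples in the text.
    """
    just_below = sum(
        1 for c in open_weight_costs_usd
        if JUST_BELOW_FRACTION * LIMIT_USD <= c <= LIMIT_USD
    )
    above = sum(1 for c in open_weight_costs_usd if c > LIMIT_USD)

    if just_below == 0 and above == 0:
        return "N/A"      # nothing released anywhere near the cut-off
    if just_below >= 4 * above:
        return "YES"      # at least 4x as many just below as above
    if above > just_below:
        return "NO"       # more models above than just below
    return "UNCLEAR"      # in-between cases the examples do not cover


print(sketch_resolution([95e6, 96e6, 97e6, 99e6]))  # cluster just below, none above
print(sketch_resolution([95e6, 150e6, 200e6]))      # more models above than below
```

The "UNCLEAR" branch is deliberate: the examples leave a gap between "4x more below" and "more above than below", and the sketch does not try to fill it.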

> It applies (in its current state) to models trained with > $100 million worth of compute and > 10^26 FLOPs. (Because the price of compute is constantly dropping, this mostly just means -- models trained with > $100 million worth of compute.)

If compute is getting cheaper, doesn't that mean that soon 10^26 FLOP will be cheap? Wouldn't that mean that the restrictions mostly mean "models trained with 10^26 FLOP" not "models trained with > $100 million worth of compute"?

For CA1047, it's an "and" not an "or", unless I'm much mistaken -- i.e., the law applies to models trained with > $100 million of compute AND with > 10^26 FLOPs.

So by 2030 it will presumably cost << $100 million to train on 10^26 FLOPs, and the only threshold that still matters will be the cost.
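
A small Python sketch of that "and" reading, in case it helps. It encodes my reading in this thread, not the legal text, and the function names and the dollars-per-FLOP figures are made-up assumptions used only to show which threshold ends up binding.

```python
COST_THRESHOLD_USD = 100_000_000  # > $100 million of training compute
FLOP_THRESHOLD = 1e26             # > 10^26 FLOPs of training compute


def is_covered(training_cost_usd, training_flop):
    """Covered only if BOTH thresholds are exceeded (the "and" reading)."""
    return training_cost_usd > COST_THRESHOLD_USD and training_flop > FLOP_THRESHOLD


def binding_threshold(usd_per_flop):
    """Which threshold decides coverage at a given (hypothetical) compute price.

    If 10^26 FLOP costs more than $100M, any model past the FLOP threshold is
    already past the cost threshold, so the FLOP threshold decides.
    If 10^26 FLOP costs less than $100M, the cost threshold decides.
    """
    cost_at_flop_threshold = usd_per_flop * FLOP_THRESHOLD
    return "flop" if cost_at_flop_threshold > COST_THRESHOLD_USD else "cost"


# Hypothetical prices, not real estimates.
print(binding_threshold(2e-18))  # 10^26 FLOP ~ $200M, FLOP threshold binds
print(binding_threshold(1e-19))  # 10^26 FLOP ~ $10M, cost threshold binds

# Cheap-compute scenario: well past 10^26 FLOP but under $100M, so not covered.
print(is_covered(training_cost_usd=5e7, training_flop=2e26))
```

Under an "or" reading the last call would come out covered instead, which is why the and/or question matters once compute gets cheap.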

Looking at the text of the law they use a fucking... appositive clause rather than just an and? Or something.