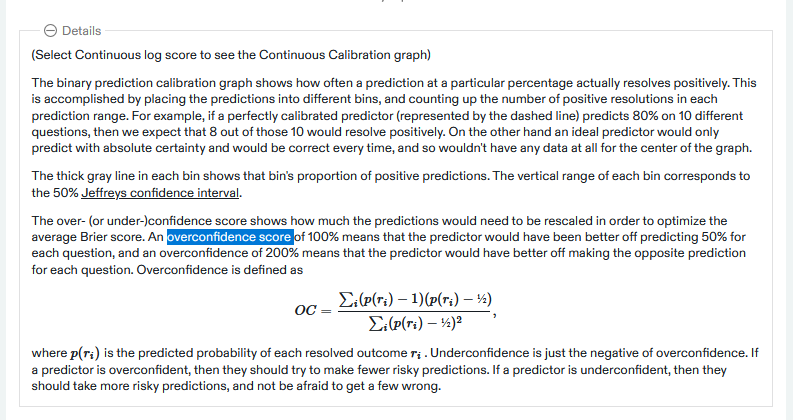

Rival crowd prediction site Metaculus allows users to calculate a score quantifying their over- or underconfidence:

I was unable to find anything about this formula or what motivates it. Any hints, or sources where I can read more, would be appreciated.

I'd also be happy to chat about whether we can adapt it for Manifold somehow. My intuition would be that if, say, a user here buys a share of YES at 80%, we would want 80% to be an underconfident estimate so that there is some room for profit left.


The idea is to calculate a single adjustment that you would apply to all of your predictions to optimally correct for over- or underconfidence.

Apply the adjustment like this: `p - (p - 0.5) * adjustment`, where `p` is your probability prediction.
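
Written out, with $a$ standing for the adjustment and $q$ for the corrected probability (my notation, not Metaculus's), the rule shrinks every prediction toward 50% by the fraction $a$. A positive $a$ pulls predictions inward (correcting overconfidence) and a negative $a$ pushes them outward (correcting underconfidence). For example, with $p = 0.8$ and $a = 0.1$:

$$
q = p - (p - 0.5)\,a = 0.5 + (1 - a)(p - 0.5), \qquad 0.5 + 0.9 \times 0.3 = 0.77
$$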

Their OC formula gives the adjustment value that would have best fit the user's past predictions, i.e. the value that minimizes the mean squared error of the adjusted predictions (also known as the Brier score).
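
If that reading is right, the fit has a closed form, because the Brier score is quadratic in the adjustment. Here is a minimal Python sketch under that assumption. It is my own reconstruction, not Metaculus's actual formula, and the function names are hypothetical. With `d = p - 0.5` and `e = outcome - 0.5`, the corrected probability is `0.5 + (1 - a) * d`, so `1 - a` is just the least-squares slope of `e` on `d` with no intercept.

```python
def apply_adjustment(p: float, adjustment: float) -> float:
    """Shrink p toward 0.5 by the given fraction (negative values push it outward).

    Note: results are not clipped, so a strongly negative adjustment
    can produce values outside [0, 1].
    """
    return p - (p - 0.5) * adjustment


def brier_score(preds: list[float], outcomes: list[int]) -> float:
    """Mean squared error between probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(preds, outcomes)) / len(preds)


def optimal_adjustment(preds: list[float], outcomes: list[int]) -> float:
    """Adjustment that minimizes the Brier score of the adjusted predictions.

    Hypothetical reconstruction: the adjusted prediction is
    0.5 + (1 - a) * d with d = p - 0.5, and the target is e = outcome - 0.5,
    so minimizing sum((d * (1 - a) - e) ** 2) gives
    (1 - a) = sum(d * e) / sum(d * d).
    """
    d = [p - 0.5 for p in preds]
    e = [o - 0.5 for o in outcomes]
    denom = sum(x * x for x in d)
    if denom == 0:
        raise ValueError("all predictions are exactly 50%; adjustment is undefined")
    slope = sum(x * y for x, y in zip(d, e)) / denom
    return 1 - slope
```

For example, ten predictions at 90% of which only seven resolve YES give an adjustment of about 0.5. That moves each prediction to about 70%, matching the observed hit rate and lowering the Brier score from 0.25 to 0.21. A perfectly calibrated record gives an adjustment of about 0.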

I may have some details wrong, since I hadn't seen this formula before, but it seems to work the way I described.

I actually really like Manifold's current calibration graph system; for whatever reason, it's more intuitive to me than Metaculus' is. I can look at my calibration and see that:

- My predictions are (generally speaking) very accurate.
- In particular, my NO bets are extremely accurate.
- I'm too confident buying YES on questions with a mid-range probability (about 40-70%), for whatever reason (probably a psychological quirk/bias/etc.).

Metaculus tells me I'm slightly overconfident (by like 3%, last I checked), but I don't really know what to do with that information.