resolves YES if during at least one of the main (non-VP) 2024 general presidential election debates in the United States, the moderator or any of the candidates uses some combination of the following words in sequence:

extinction, existential, "death of our species," etc

artificial intelligence, AI, machine learning, ML, machine intelligence, etc

resolves YES even if they're skeptical or dismissive of the existential risk from AI.

resolves NO if the above criteria aren't met.

i'll resolve it to the spirit of the resolution.

examples:

if they talk about a world where "everybody dies from a man-made superhuman intelligence," i'll resolve it YES.

if they say "...and that's why climate change has a risk of causing extinction. artificial intelligence is our next topic: will it steal our jobs?" i'll resolve it NO.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ203 |
| 2 | | Ṁ197 |
| 3 | | Ṁ147 |
| 4 | | Ṁ100 |
| 5 | | Ṁ97 |

People are also trading

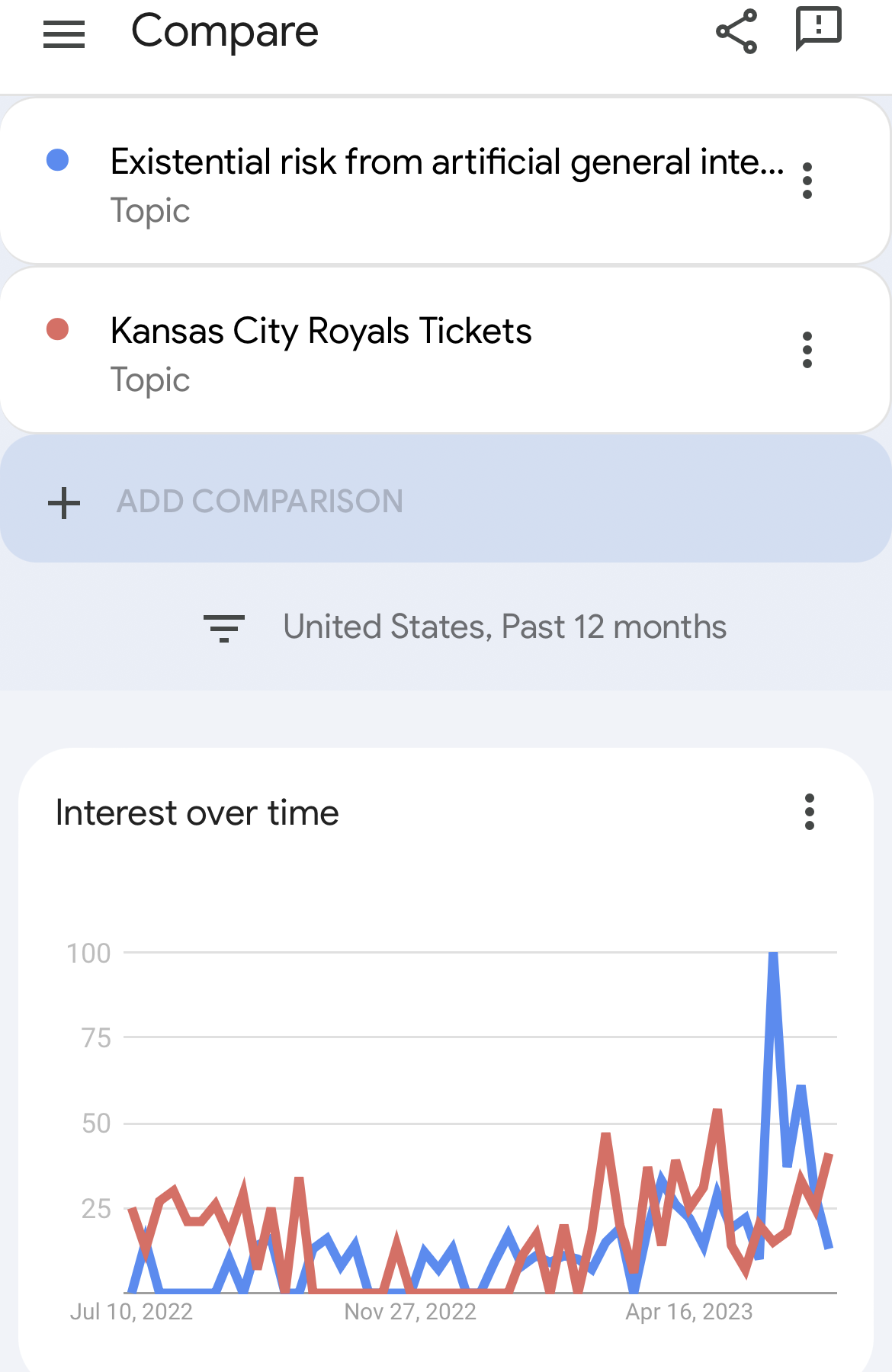

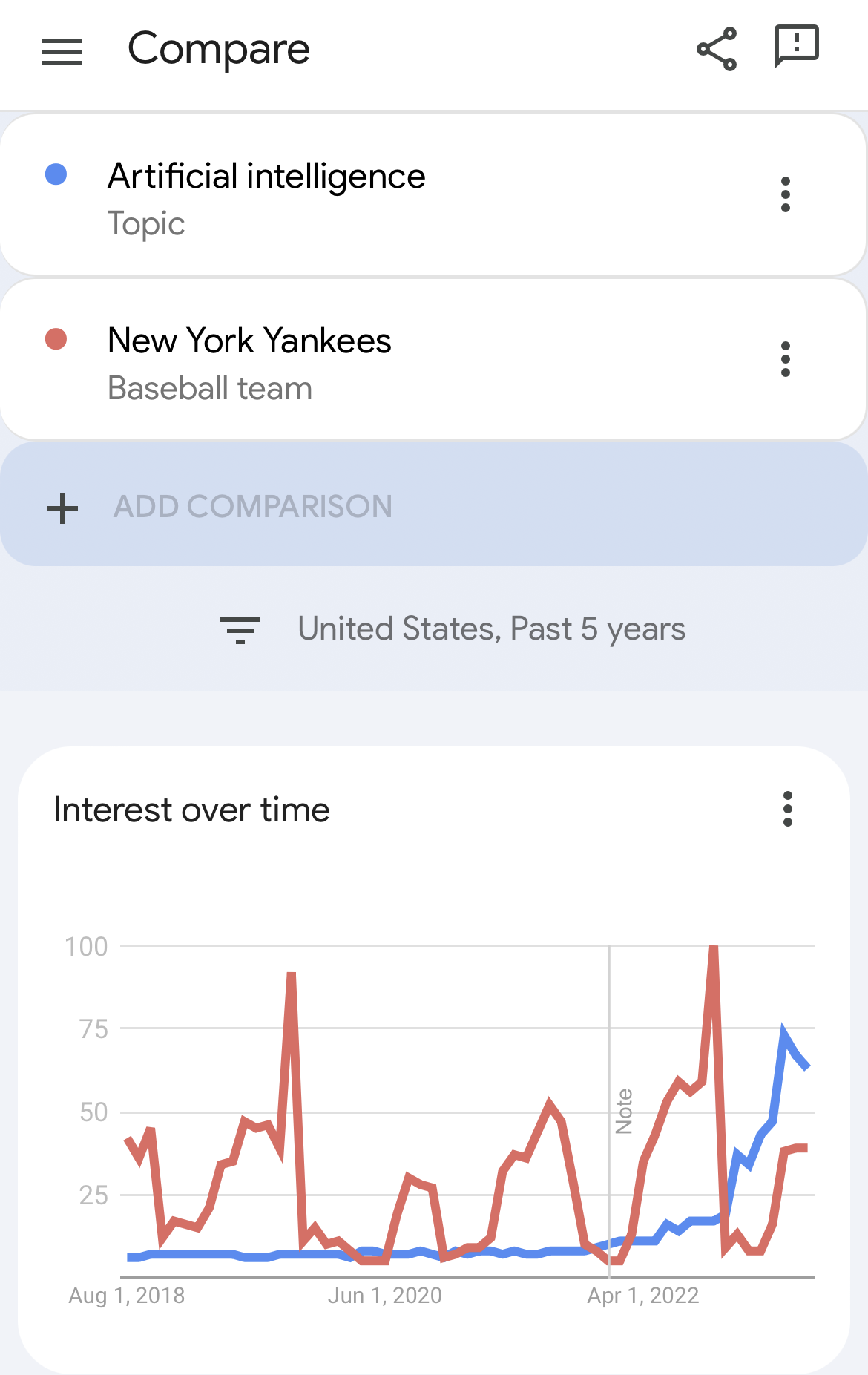

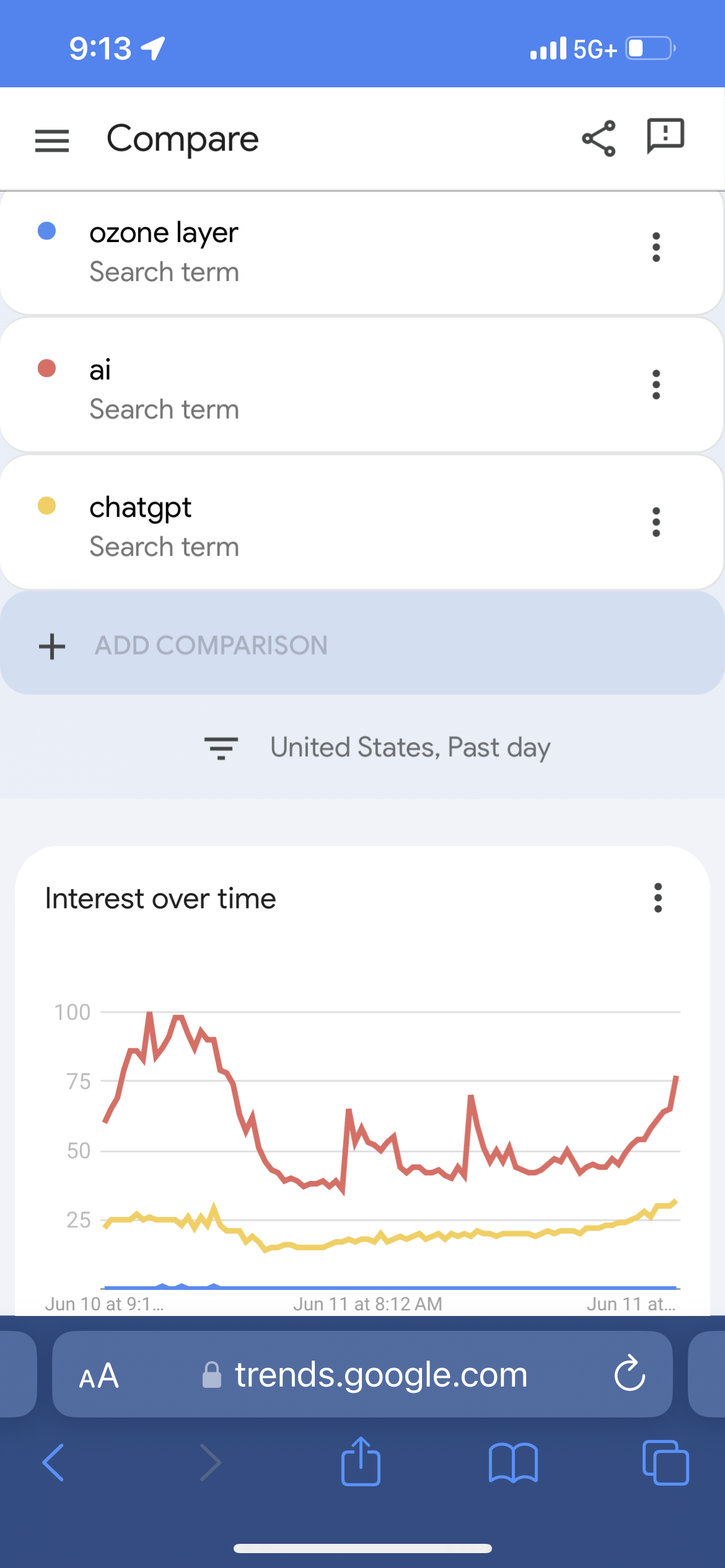

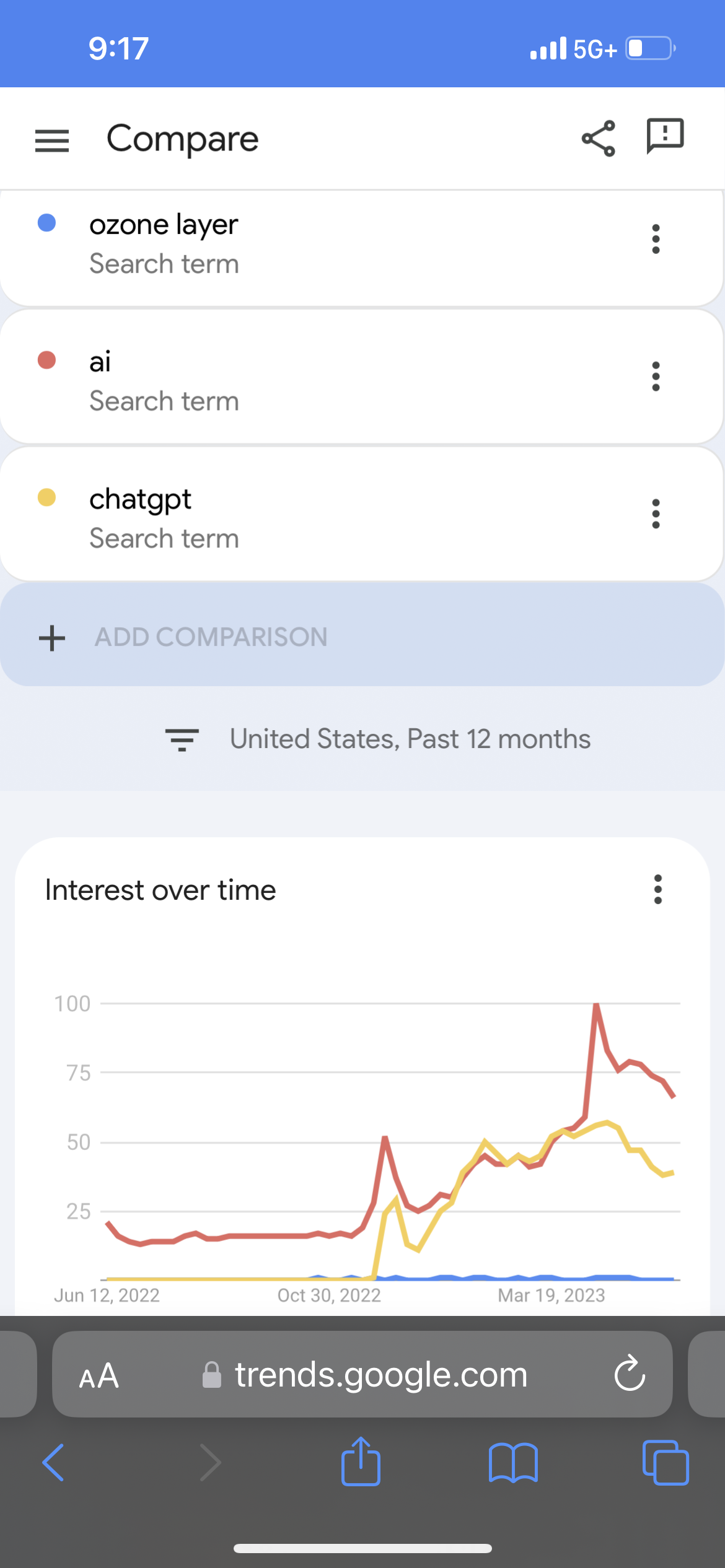

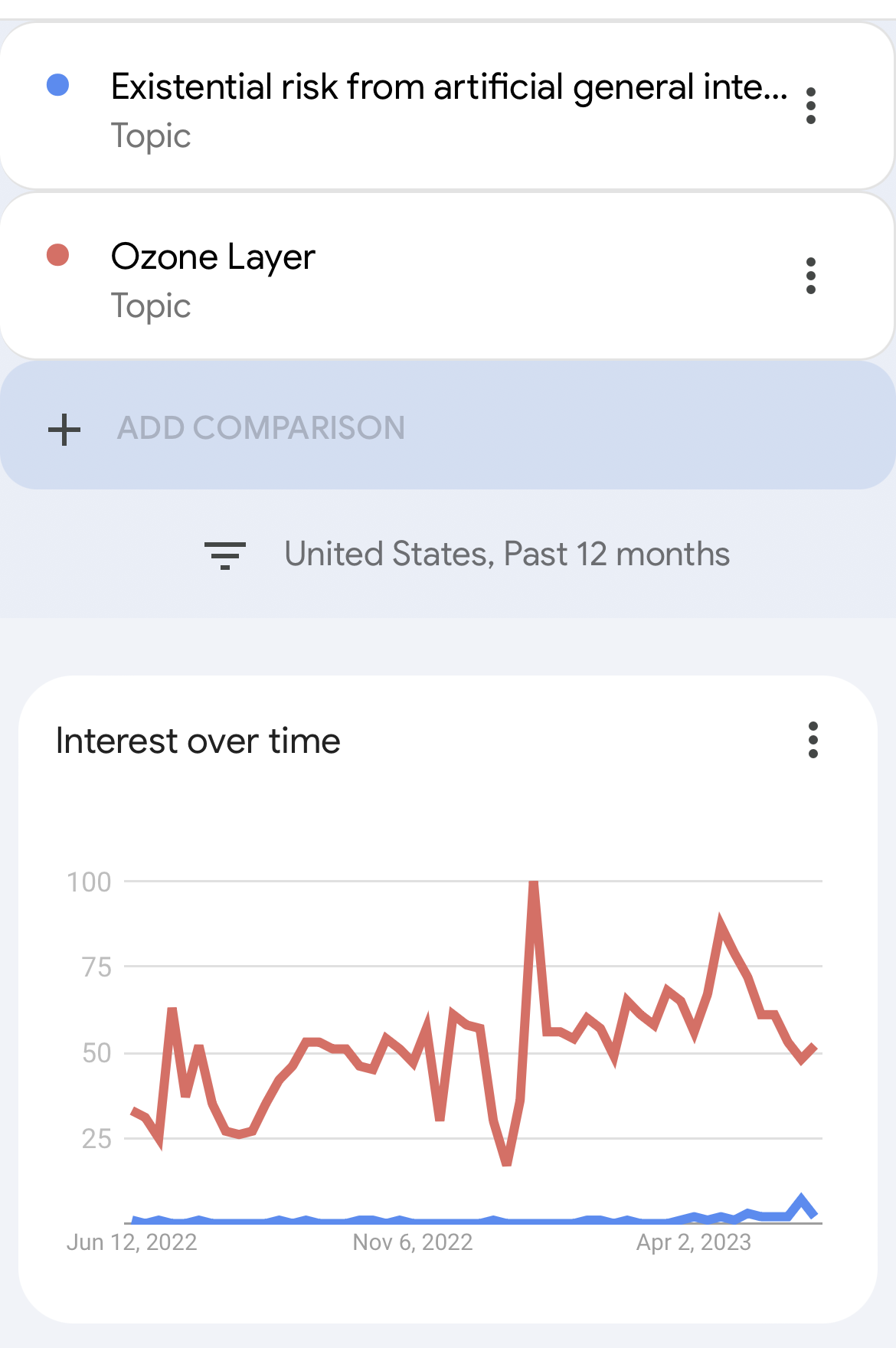

While I agree that we are correct to do so, Manifold users should consistently remind ourselves how much we are at an extreme in terms of thinking about AI existential risk. Here I have contrasted it with the ozone layer, an issue that I think we can all agree will not make it into the debate. Most Americans have probably seen the headlines about AI, but they are interspersed with celebrity gossip, the Ukraine war, and sports.

Additionally, when someone sits down to write the debate questions and "AI" crosses their mind, they will think about it in terms of jobs. It will not be valuable enough to get two slots, so in a very direct way the jobs discussion will crowd out the existential risk discussion. Heck, if it does get another slot, I bet privacy or deepfakes will come next (think about how deepfakes will surge before the election).

Note that the resolution criteria specify two or more questions related to AI. For the reasons stated above, I consider a discussion on existential risk to be essentially conditional on this market resolving YES. It is highly liquid, so it seems like a very good place to start reasoning from.

To get to the current estimate on this market of 32%, you are assuming about a 50% likelihood that existential risk is a sub-topic, conditional on AI being a major one.

Actually, thinking about it that way pushes me closer to this market's current probability, so I will not bet more heavily at this point.
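The arithmetic behind that decomposition can be sketched roughly as follows. This is only an illustration under one simplifying assumption: existential risk comes up only if AI is a major debate topic, so P(x-risk) = P(x-risk | AI major) × P(AI major). The function and variable names are hypothetical, and the only inputs taken from the comment are the 32% and the roughly 50%.

```python
def implied_prior(p_joint: float, p_conditional: float) -> float:
    """Solve P(B) from P(A and B) = P(A | B) * P(B)."""
    if not 0.0 < p_conditional <= 1.0:
        raise ValueError("p_conditional must be in (0, 1]")
    p_prior = p_joint / p_conditional
    if not 0.0 <= p_prior <= 1.0:
        raise ValueError("inputs imply a probability outside [0, 1]")
    return p_prior


# Figures quoted in the comment above.
p_xrisk_market = 0.32          # this market's estimate at the time
p_xrisk_given_ai_major = 0.50  # rough conditional likelihood named in the comment

# Assumption: x-risk is only mentioned if AI is a major topic,
# so the joint probability equals this market's probability.
p_ai_major = implied_prior(p_xrisk_market, p_xrisk_given_ai_major)
print(f"Implied P(AI is a major debate topic): {p_ai_major:.0%}")  # prints 64%
```

If the simplifying assumption fails, for example because a candidate raises AI x-risk unprompted, the two markets stop being nested and this back-of-the-envelope figure no longer applies directly.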

@MatthewRitter i think you should check over my resolution criteria! they're not the same as @MatthewBarnett's!

@saulmunn two notable (non-exhaustive) differences:

a candidate brings up ai x-risk unprompted by a moderator

a candidate or moderator brings up ai x-risk once

either would make this market resolve YES, while the analogous events wouldn’t trigger a YES in the one you linked