OpenAI has just announced the “12 Days of OpenAI”, a series of 12 livestreams with new announcements. What will they announce during this?

https://x.com/openai/status/1864328928267259941?s=46

Notes:

“Released” = available to some random members of the public, or an announcement that it will be available before December 31

“Revealed” = the thing is shown to exist, but not necessarily available to the public

Possible clarification from creator (AI generated):

For "Sora 2" announcements to count, they must show better quality than the original version revealed in early 2024, not just faster processing

The new version can keep the name 'Sora' but must be clearly distinguished as a different version from the original

Update 2024-12-18 (PST) (AI summary of creator comment): Announcements made on December 23rd and 24th will count as part of the event if OpenAI indicates they are part of the '12 Days of OpenAI' series

A 13th day or additional days will count if OpenAI explicitly connects them to the event

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ905 |
| 2 | | Ṁ837 |
| 3 | | Ṁ693 |
| 4 | | Ṁ597 |
| 5 | | Ṁ573 |

Here are the polls about the most "significant" announcements. I also included a question about Sora vs. o1 for my own curiosity, since those were about neck-and-neck throughout the course of the market.

/CDBiddulph/which-was-more-significant-the-o1-a

/CDBiddulph/which-was-more-significant-the-sora-pLdhQuRyLZ

/CDBiddulph/which-was-more-significant-the-laun

Bonus:

/CDBiddulph/which-was-more-significant-the-sora

I forgot to mention the non-o3 announcements of the 12 days of OpenAI in my poll - oops! Hopefully no one thought 1-800-CHATGPT was more significant than ChatGPT itself 😄

The polls look statistically significant enough to me - resolving now

EDIT: oops, I can't resolve them myself. @dominic please help?

@CDBiddulph o1 vs o3 poll is close

FWIW, o1 was ABSOLUTELY more significant than o3. It represented a paradigm shift in the industry, vs just iterating to achieve progressively more challenging benchmark scores

Before o3, I thought o1 was basically as far as you could train models in that new paradigm without running out of available compute. If that had been true, it would not have been a very significant paradigm shift. The fact that o1 was orders of magnitude below the available scale is what made me update a lot. I learned that when o3 came out. o3 feels much more significant than o1 in that sense.

@JaimeSantaCruz I also couldn't, but it turns out the bolded characters can hide a poll link! You might have some luck if you just click on a bolded character in the first message in this comment thread

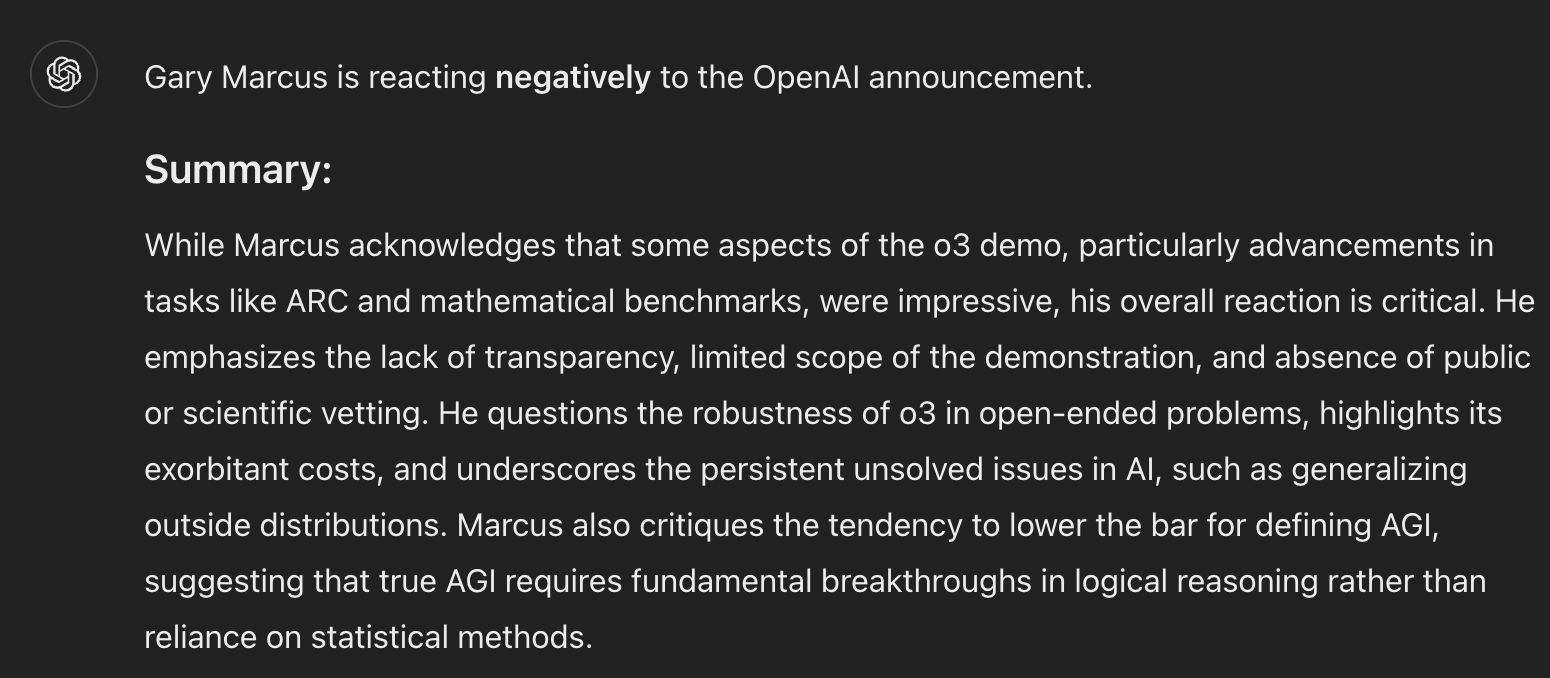

Marcus seems impressed: https://garymarcus.substack.com/p/o3-agi-the-art-of-the-demo-and-what

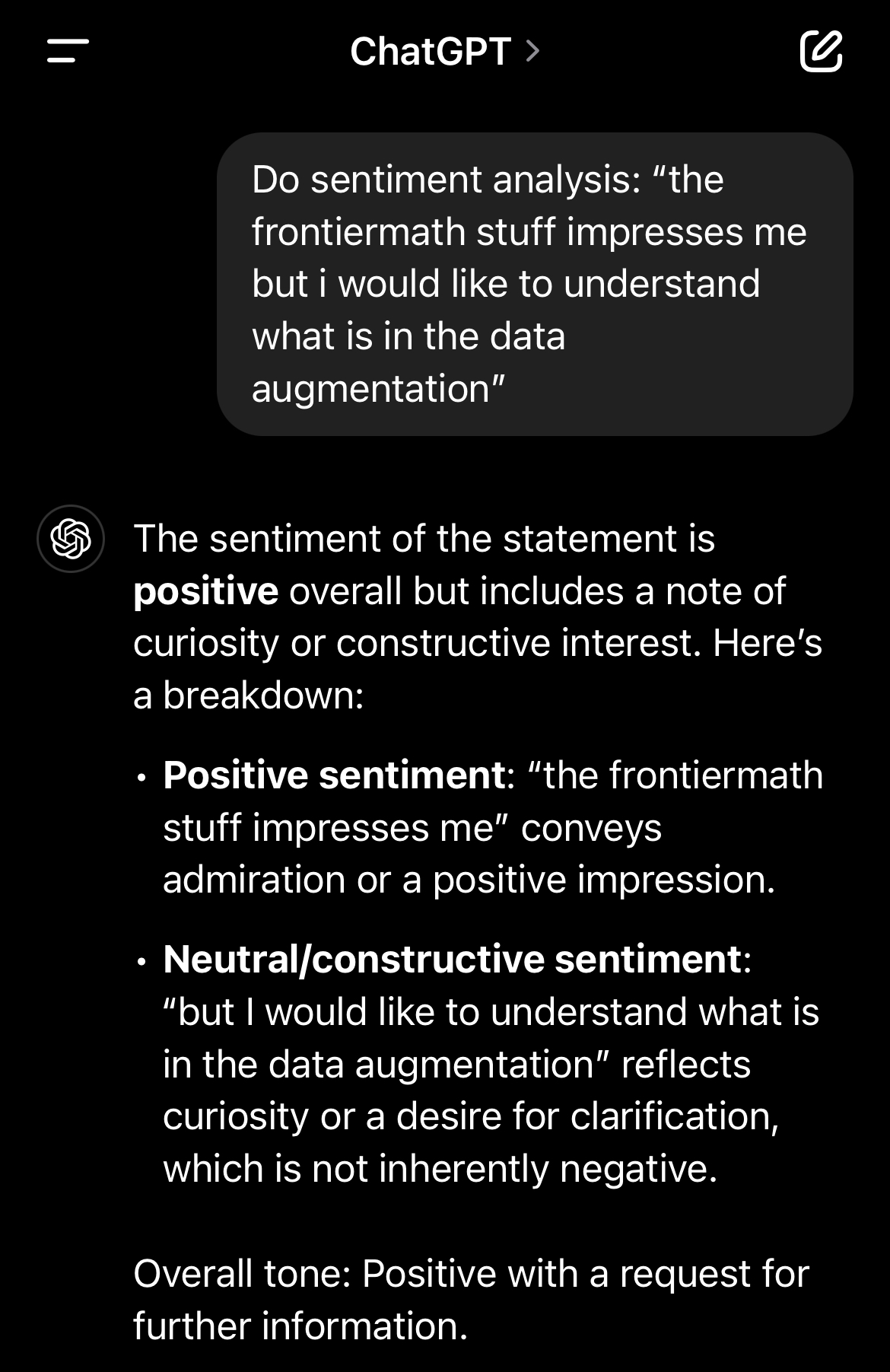

@dominic this is going to be prompt- and interpretation-specific. I can see the entire article being read as negative in sentiment (and ChatGPT agrees), but there are positive reactions within it. ChatGPT acknowledges there is "something he reacted positively to" and recommends resolving yes

@Usaar33 I think we disagree on the domain for this question. I didn't bet on this, but I interpreted "something" as an actual product, like o3.

You could say that Gary Marcus reacted positively to "o3's performance on the ARC benchmark", but I wouldn't have counted that as one of the possible "somethings".

“o1 and/or o1-mini fine tuning” is currently trading at 4%, but was revealed waaaay back on December 6: https://x.com/openai/status/1865136373491208674

@mathvc Even if that is a sentence he actually said, I am sure it's not very hard to find a positive sentence in his article. But I don't think it's fair to take it out of context and not analyze his full reaction to each thing.

@mathvc Yeah, this particular tweet might get analyzed as positive. But it's a stretch to take one mildly positive tweet and reasonably say "this is his reaction to o3," unless he hadn't written anything else about it.

@dominic Well, I would like to disagree. He has a positive reaction to an announcement of model scores on FrontierMath

This is separate from his negative reaction to o3

Should o3 count as 1,000,000+ context length? According to the people who run ARC, o3 was able to use almost 10 billion token chains of thought to solve just one problem.

AGI achieved only at 1.6%? 👀