This question will resolve to the number of original results (out of the three results detailed below) that were replicated in our study. For findings that were statistically significant in the original study, we would consider the findings to have replicated if we also find a statistically significant effect (at the significance level of p<0.05) in the same direction as the original study. In the case of a null finding in the original study, if we also have a null finding for the same effect (p>0.05), then we would consider the null finding to have replicated.
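As a rough illustration of the resolution rule above, here is a minimal sketch in Python. The `Finding` class, its field names, and the example p-values are all hypothetical and are not taken from either study. The rule as written does not say what happens at exactly p = 0.05, so this sketch treats that case as not replicated for either kind of finding.

```python
from dataclasses import dataclass

ALPHA = 0.05  # significance threshold stated in the resolution criteria


@dataclass
class Finding:
    """One original result and its replication outcome (hypothetical structure)."""
    original_significant: bool  # False means the original reported a null result
    replication_p: float        # p-value observed in the replication
    same_direction: bool        # replication effect points the same way as the original


def replicated(f: Finding, alpha: float = ALPHA) -> bool:
    """Apply the stated rule to a single finding."""
    if f.original_significant:
        # Significant original: need p < alpha AND the same direction.
        return f.replication_p < alpha and f.same_direction
    # Null original: need a null result again (p > alpha).
    return f.replication_p > alpha


def count_replicated(findings: list[Finding]) -> int:
    """The market resolves to this count (0 to 3 for three findings)."""
    return sum(replicated(f) for f in findings)


# Made-up p-values, for illustration only.
example = [
    Finding(original_significant=False, replication_p=0.40, same_direction=True),
    Finding(original_significant=True, replication_p=0.01, same_direction=True),
    Finding(original_significant=True, replication_p=0.20, same_direction=True),
]
print(count_replicated(example))  # 2
```

With these made-up values, the null finding and the first significant finding count as replicated, while the third does not because its p-value is above 0.05.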

Please note that we will not be allowing any further time for people to make predictions beyond the listed closing date. (We have previously changed the closing dates for our other prediction markets to allow people more time to make the prediction, but we are not going to do that for this prediction market.)

Original Study Results

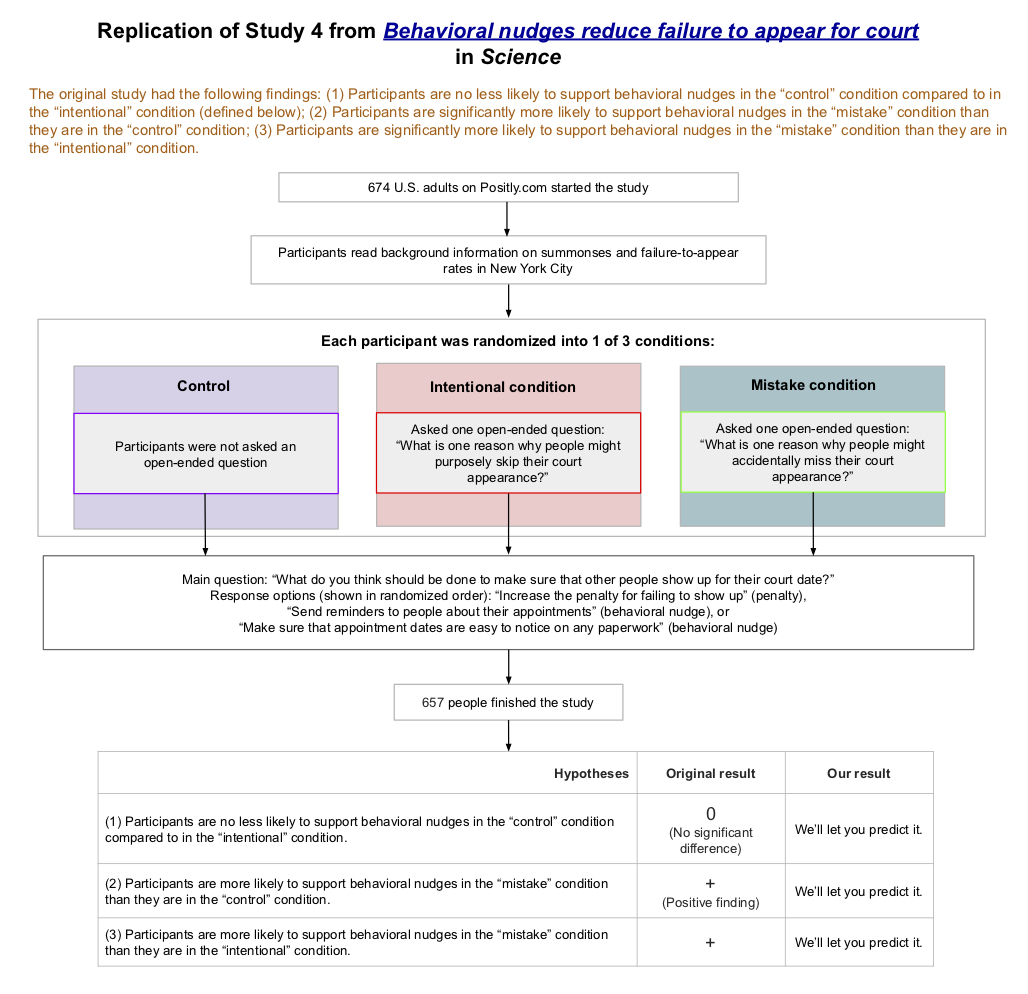

In the original study, participants read a scenario about failures to appear for court and were then randomized into one of three groups: an “intentional” condition, where participants were asked to write one reason why someone would intentionally skip court; a “mistake” condition, where they were asked to write a reason someone would miss it unintentionally; and a “control” condition, where they were asked neither of those questions. All participants were then asked what should be done to reduce failures to appear. Participants in the “intentional” and “control” conditions chose to increase penalties with similar frequencies, while participants in the “mistake” condition were significantly more likely than people in either of the other conditions to support behavioral nudges rather than harsher penalties for failing to appear.

Study Summary

Please click here for a higher resolution version of the study diagram

In the original study and in our replication, all participants read background information on summonses and failure-to-appear rates in New York City. This was followed by a short experiment (described below), and at the end, all participants selected which of the following they think should be done to reduce the failure-to-appear rate: (1) increase the penalty for failing to show up, (2) send reminders to people about their court dates, or (3) make sure that court dates are easy to notice on the summonses. (In the original study, these options were shown in the order listed here, but in our replication, we showed them in randomized order.)

Prior to being asked the main question of the study (the “policy choice” question), participants were randomly assigned to one of three conditions.

In the control condition, participants made their policy choice immediately after reading the background information.

In the intentional condition, after reading the background information, participants were asked to type out one reason why someone might purposely skip their court appearance, and then they made their policy choice.

In the mistake condition, participants were asked to type out one reason why someone might accidentally miss their court appearance, and then they made their policy choice.

In the original study, there was no statistically significant difference between the proportion of participants selecting behavioral nudges in the control versus the intentional condition (control: 63% supported nudges; intentional: 61% supported nudges; χ2(1,N=304)=0.09; p=0.76). In our replication, we would consider this finding to have replicated if we also find no statistically significant difference (by which we mean that we find a p value above 0.05 when we check the difference) between the proportion of participants selecting behavioral nudges in the control versus the intentional condition.

On the other hand, 82% of participants selected behavioral nudges in the mistake condition. This was a significantly larger proportion than both the control condition [χ2(1,N=304)=9.08; p=0.003] and the intentional condition [χ2(1,N=304)=10.53; p=0.001]. In our replication, we would consider these two findings to have replicated if we also find statistically significant differences (at the significance level of p<0.05) with effects in the same direction as the original study.
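To make the pairwise comparisons above concrete, here is a minimal sketch of a chi-square test on a 2×2 table (condition × policy choice). It assumes an uncorrected Pearson chi-square test, which the description does not confirm. The function name and the group sizes of 100 are hypothetical, since per-condition counts are not given here. Only the nudge percentages (63%, 61%, 82%) come from the text, so the statistics it prints are illustrative and are not the reported values.

```python
import math


def chi_square_2x2(nudge_a: int, n_a: int, nudge_b: int, n_b: int) -> tuple[float, float]:
    """Uncorrected Pearson chi-square test (1 df) comparing two proportions.

    nudge_a / n_a: participants choosing a behavioral nudge / group size in condition A.
    nudge_b / n_b: the same for condition B.
    Returns (chi2, p_value).
    """
    a, b = nudge_a, n_a - nudge_a  # condition A: nudge, penalty
    c, d = nudge_b, n_b - nudge_b  # condition B: nudge, penalty
    n = n_a + n_b
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("A row or column total is zero; the test is undefined.")
    chi2 = n * (a * d - b * c) ** 2 / denom
    # For 1 degree of freedom, the chi-square survival function is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical group sizes of 100 per condition (an assumption for illustration).
control_vs_intentional = chi_square_2x2(63, 100, 61, 100)
mistake_vs_control = chi_square_2x2(82, 100, 63, 100)

for label, (chi2, p) in [
    ("control vs intentional", control_vs_intentional),
    ("mistake vs control", mistake_vs_control),
]:
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: chi2={chi2:.2f}, p={p:.3f} ({verdict})")
```

A significant p-value alone is not enough for the two "mistake" comparisons. The replication also has to show a higher proportion choosing nudges in the mistake condition, matching the direction of the original effect.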

The original study included 304 participants recruited from MTurk. Our replication included 657 participants (which meant we had 90% power to detect an effect size as low as 75% of the original effect size) recruited from MTurk via Positly. You can preview our study (as participants saw it) here.

Background Information

The Transparent Replications Project by Clearer Thinking aims to replicate studies from randomly-selected, newly-published papers in prestigious psychology journals, as well as any psychology papers recently published in Nature or Science involving online participants. One of the most recent psychology papers involving online participants published in Science (Fishbane, Ouss & Shah, 2020) was “Behavioral nudges reduce failure to appear for court.” We selected studies 3 and 4 from that paper for replication.

Context: How often have social science studies tended to replicate in the past?

In one historical project that attempted to replicate 100 experimental and correlational studies from 2008 in three important psychology journals, analysis indicated that they successfully replicated 40%, failed to replicate 30%, and the remaining 30% were inconclusive. (To put it another way, of the replications that were not inconclusive, 57% were successful replications.)
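The 57% figure comes from setting the inconclusive replications aside and dividing the successes by the remaining conclusive results. A quick check of that arithmetic, using the percentages quoted above (the variable names are just for illustration):

```python
# Percentages of the 100 replication attempts, as quoted in the paragraph above.
successful, failed, inconclusive = 40, 30, 30

conclusive = successful + failed           # drop the inconclusive attempts
success_rate = successful / conclusive     # 40 / 70

print(f"{success_rate:.0%}")  # 57%
```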

In another project, researchers attempted to replicate all experimental social science papers (that met basic inclusion criteria) published in Nature or Science (the two most prestigious general science journals) between 2010 and 2015. They found a statistically significant effect in the same direction as the original study for 62% (i.e., 13 out of 21) of the studies, and the effect sizes of the replications were, on average, about 50% of the original effect sizes. Replicability varied between 57% and 67% depending on the replicability indicator used.

The replication described here was run as part of the Transparent Replications project, which has not run enough replications yet for us to give any base replication rates. Having said that, if you’re interested in reading more about the project, you can read more here. And here is where you can find write-ups for the three previous replications we’ve completed.

