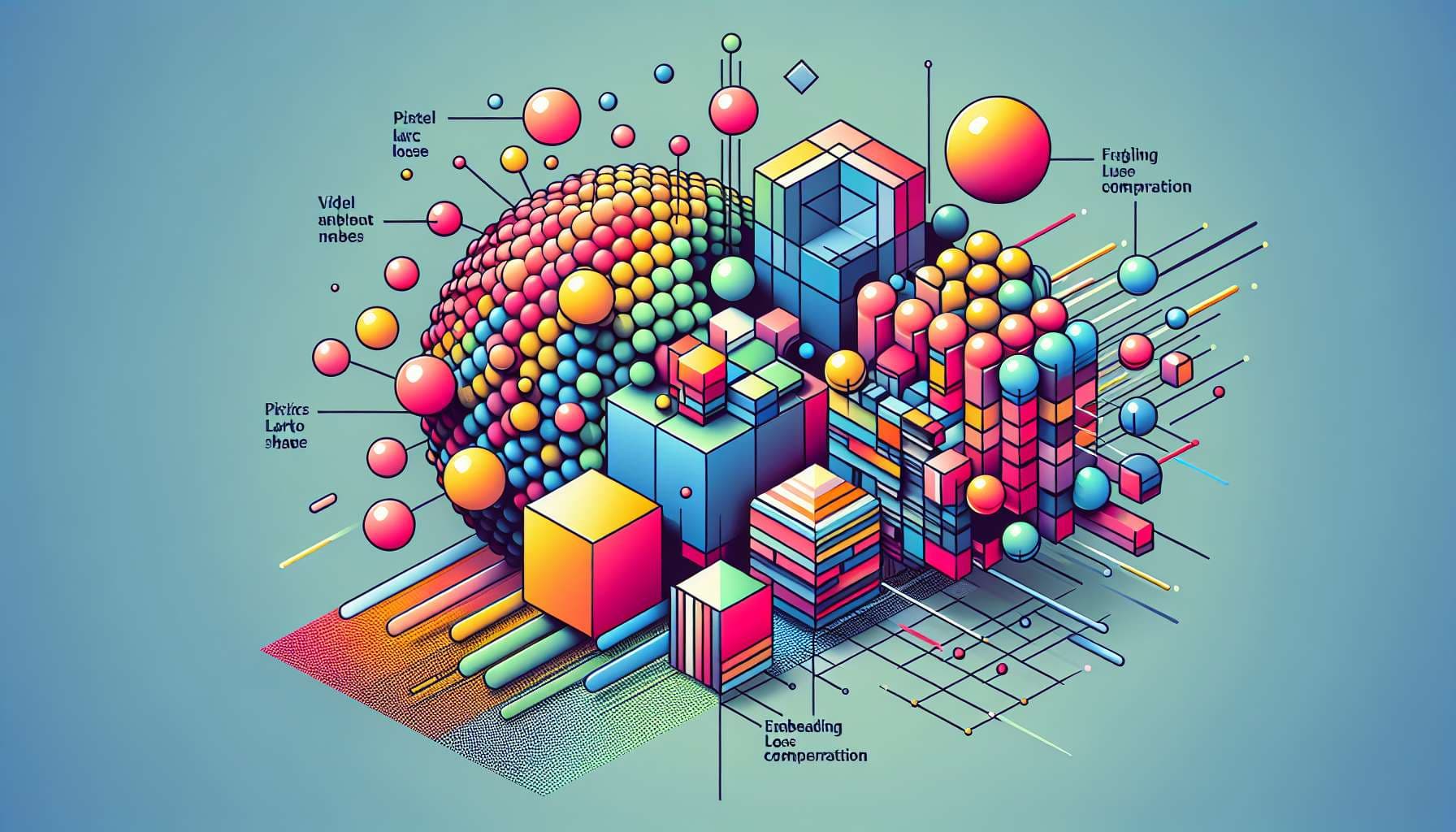

Will future large video understanding models use pixel loss or embedding loss?



Pixel loss: 35%

Embedding Loss: 27%

Neither: 38%

Examples of models with pixel loss:

MAE

iGPT

LVM

Examples of models with embedding loss:

I-JEPA

If people end up using diffusion models (DDPM) to pretrain large video understanding models, this resolves to Pixel loss.

Resolves at EOY 2027 by consulting expert and public opinion. Among all the factors that decide the resolution, the paradigm used by the SOTA video understanding model will be the most indicative.

Discrete cross-entropy loss (transformer + VQ-VAE) will resolve to Neither.
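
As a rough illustration of how the three answer categories differ, here is a minimal Python sketch. Every function name and the toy linear "encoder" are hypothetical and are not taken from MAE, iGPT, LVM, or I-JEPA; real models use large networks and differ in details such as masking and how the target encoder is trained.

```python
import math


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def linear(weights, x):
    """Apply a toy linear map (a list of rows) to a vector. Hypothetical stand-in for an encoder."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def pixel_loss(predicted_pixels, target_pixels):
    # "Pixel loss": the error is measured directly on raw pixel values.
    return mse(predicted_pixels, target_pixels)


def embedding_loss(predicted_embedding, target_pixels, target_encoder):
    # "Embedding loss": the target is first mapped into a representation
    # space, and the error is measured between embeddings, not pixels.
    target_embedding = linear(target_encoder, target_pixels)
    return mse(predicted_embedding, target_embedding)


def token_cross_entropy(logits, target_token):
    # "Neither" (per the question text): cross-entropy over discrete
    # codebook indices, e.g. tokens produced by a VQ-VAE.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[target_token]
```

For example, `pixel_loss([0.0, 0.0], [1.0, 1.0])` compares values in pixel space, while `embedding_loss([2.0], [1.0, 1.0], [[1.0, 1.0]])` compares a predicted embedding against the encoded target.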

This question is managed and resolved by Manifold.
