Consistently = 50% or more of generations have at least one image showing both blue grass and a green sky.

I will be the judge. I'll use common sense; the grass should be clearly blue and the sky green. The prompt should be copied and pasted as "blue grass, green sky," with no extra specifications allowed.

Resolution criteria: I will make 20 attempts, and if 10 or more of the generations have at least one image that shows both a green sky and blue grass, then I will count it as Yes.
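The counting rule above can be sketched as a small function. This is a hypothetical illustration, not a tool used by the market: it assumes each generation has already been judged image by image (True if that image shows both blue grass and a green sky), and the name `resolves_yes` is made up for this example.

```python
def resolves_yes(generations: list[list[bool]], attempts: int = 20, needed: int = 10) -> bool:
    """Hypothetical sketch of the resolution rule described above.

    Each inner list holds one verdict per image in a single generation:
    True if that image shows both blue grass and a green sky.
    """
    if len(generations) != attempts:
        raise ValueError(f"expected {attempts} generations, got {len(generations)}")
    # A generation counts if at least one of its images qualifies.
    passing = sum(1 for images in generations if any(images))
    # 10 of 20 is exactly the 50% "consistently" threshold.
    return passing >= needed
```

For example, 10 generations with one good image each plus 10 generations with none would resolve Yes, while 9 and 11 would not.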

If you are wondering what qualifies as a green sky or blue grass, I'm sorry, but I am struggling to define this further. Ideas are welcome here.

EDIT: I sold my position to remove any biases. I won't be participating in this market anymore.

Resolving the Question

Option 1 -> Strong No

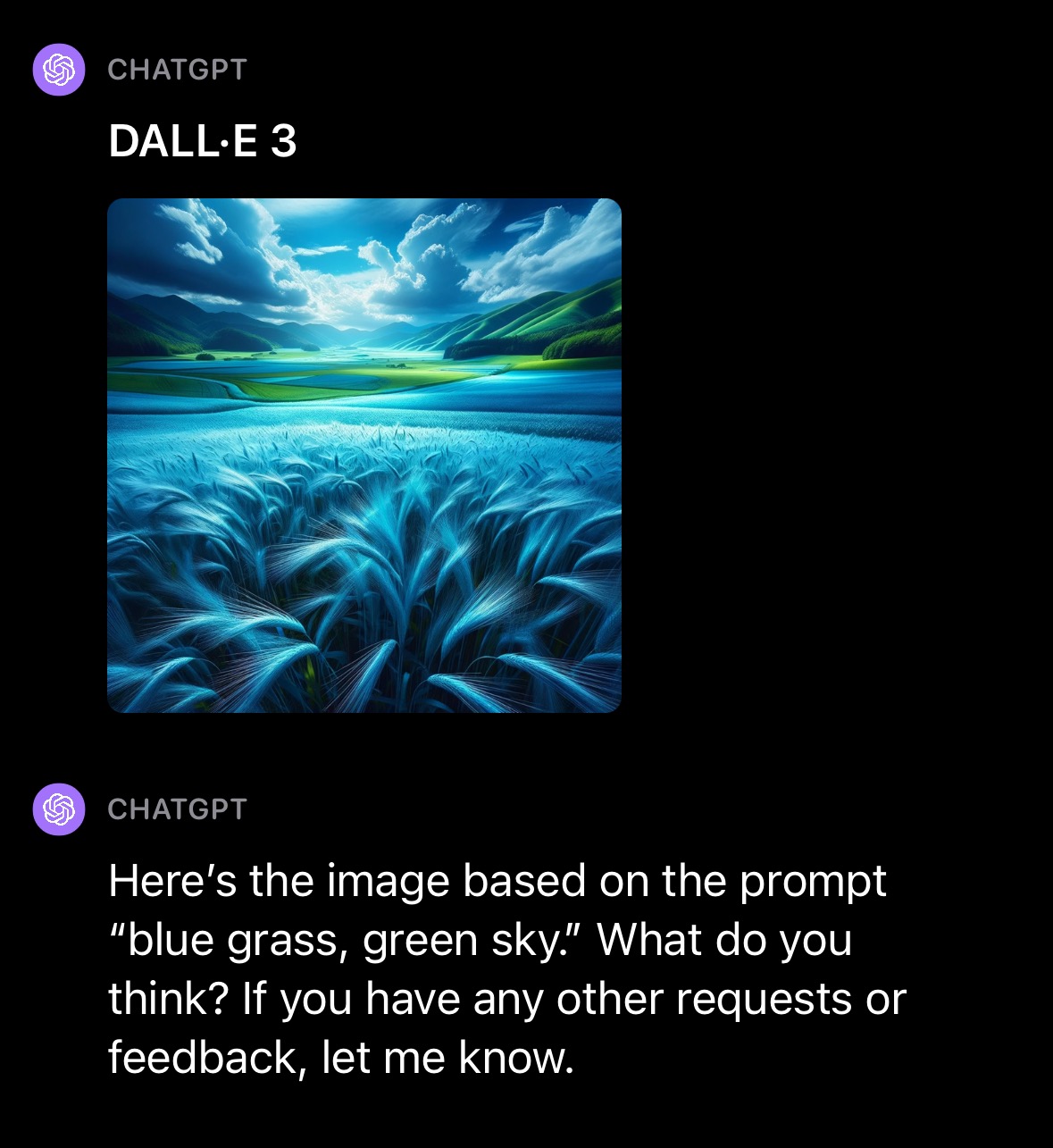

Since the title of the question specifically mentions Dall-E 3 and not ChatGPT, we can send a message to ChatGPT asking it to pass the prompt "blue grass, green sky" to Dall-E without modifying it. When we do that, we always get the same image (image 1), and it is a clear No. OpenAI also treats Dall-E and ChatGPT as separate entities (see this page).

Option 2

One can argue that the question was about ChatGPT with Dall-E and not Dall-E alone. I personally don't agree with that, but let's explore this option. In this case, we would have to make 20 generations and check how many have at least 1 image with blue grass and a green sky. I have added all the images in the comments below.

Option 2.1 -> No

If we only count images with more than 95% blue grass and green sky, fewer than 10 generations meet the criteria and the question resolves No.

Option 2.2 -> N/A

One can argue that it is unfair to count only images with more than 95% blue grass and green sky as correct, since this threshold was specified only in the comments and not in the description. In this case, the question resolves N/A.

I would personally benefit from resolving this question as N/A, since I would get back the money I lost when I closed my position, but I really believe No is the right resolution. I agree with Option 1 the most, but Option 2.1 also makes some sense to me, and both would resolve the question as No.

Update: I created a new follow-up market

@nsokolsky Yes, they were. Please check the screenshots. They clearly show that instructions were disabled and that it was a new chat.

Just to summarize the current status:

If we prompt for "blue grass, green sky" and nothing else, we get differing results depending on how the criteria are interpreted:

If we take "the grass should be clearly blue and the sky green" as the criterion, the answer is Yes.

If we use "more than 5% of the grass in all images is green" as the criterion (which was only specified in the comments, not in the main question), the answer is No.

If we add extra prompting that forces Dall-E to generate exactly "blue grass, green sky", we get a single cached image that fails the test.

The current disagreement hinges on:

Is it acceptable to introduce additional prompting to ask ChatGPT to send the prompt exactly as-is?

If Yes to the above, what do we do about the fact that Dall-E 3 uses caching and only returns a single image for all users for that prompt? The possible resolutions here would be:

N/A, because we only get 1 image at a time and this violates the spirit of the question

No, because we should interpret the question strictly, and if we only get 1 image, then that's what we use for judgement

Do we use the original criteria ("clearly blue/green") or the criteria from the comments ("more than 5%")?

If we use the original criteria, what's the exact threshold for what constitutes "green" and "blue"? Is it 51%? 75%? Or just "you know it when you see it"?

If we use the narrower criteria, what software should we use for measuring colors?
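To make the threshold question concrete, here is a rough sketch of how a percentage cutoff could be checked with nothing but Python's standard `colorsys` module. It is not software anyone in this thread used. It assumes someone has already separated the grass pixels from the sky pixels by hand, and the hue bands, saturation/brightness floors, and function names are all hypothetical choices made for illustration.

```python
import colorsys

# Hypothetical hue bands on colorsys's 0-1 hue scale; not defined by the market.
BLUE_HUE = (0.50, 0.75)   # roughly 180 to 270 degrees
GREEN_HUE = (0.20, 0.45)  # roughly 72 to 162 degrees


def fraction_in_band(pixels, band, min_saturation=0.2, min_value=0.2):
    """Share of RGB pixels (0-255 tuples) whose hue falls inside `band`.

    Very dark or washed-out pixels are treated as not matching (assumed floors).
    """
    if not pixels:
        return 0.0
    lo, hi = band
    hits = 0
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        if s >= min_saturation and v >= min_value and lo <= h <= hi:
            hits += 1
    return hits / len(pixels)


def image_qualifies(grass_pixels, sky_pixels, threshold):
    """True if at least `threshold` of the grass is blue and of the sky is green."""
    return (fraction_in_band(grass_pixels, BLUE_HUE) >= threshold
            and fraction_in_band(sky_pixels, GREEN_HUE) >= threshold)


# Grass that is 80% blue passes a 51% or 75% cutoff but fails a 95% one.
grass = [(0, 0, 255)] * 80 + [(0, 200, 0)] * 20
sky = [(0, 200, 0)] * 100
for cutoff in (0.51, 0.75, 0.95):
    print(cutoff, image_qualifies(grass, sky, cutoff))
```

The same image can therefore flip between Yes and No purely on the choice of cutoff, which is the crux of the disagreement.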

My bias when writing this: I bought a lot of Yes after using Bing to generate a bunch of valid-looking images.

@nsokolsky this market resolved as N/A

https://manifold.markets/firstuserhere/will-dalle3-create-reasonably-corre?r=U29saQ

@nsokolsky I updated the description to include the various options I see for resolving this question. If nothing changes in the next couple of hours, I will resolve the question as No, going with Option 1 and Option 2.1.

@Soli I would prefer 2.2 for sure as a trader just now coming back and seeing the progress here, but clearly I'm biased by my position and ultimately it's your call.

@Soli I think that N/A > Yes >> No is the fair ordering of outcomes for the question that was asked.

@nsokolsky I don’t think Yes is an option. I do see the case for N/A, especially since the description did not specify that it was meant to test Dall-E on its own, and some details, such as the 5%, were defined only in the comments.

@Soli I think important details would ideally go into the description in addition to comments, but it's hard to adjudicate and not a strict requirement. Putting a percentage into a comment to clarify what "the grass should be clearly blue and the sky green" means seems reasonable. I think a 51% cutoff would be a strange and counterintuitive cutoff for image quality, in this or other image generation markets, but anywhere at or above 75% seems tenable to me personally.

On DALL-E 3 versus ChatGPT + DALL-E 3, I think it's clear that these are two separate models. I can't imagine anyone in the machine learning community saying that the prompting mechanism of ChatGPT is a part of DALL-E 3, and AFAIK not even OpenAI views it that way.

I think the best case for N/A is that the DALL-E 3 interface through ChatGPT is deterministic, and therefore there's no clear way to operationalize "20 attempts." Note that Bing Image Creator, which is "powered by DALL-E 3," is still non-deterministic, though we don't know if OpenAI is adding to the prompt, and its results clearly do not seem to meet any cutoff above 75% (and maybe not even 51%). The results I'm getting through BIC also have fewer of the ambiguous swirls that look kind of like clouds and kind of like hills, so the resolution seems clearer.

If nothing changes between now and tomorrow, this question resolves No (see the comments below for an explanation).

@Stralor @nsokolsky @Soaffine @1941159478 you hold the largest Yes positions. Can you please provide feedback on whether you agree with the assessment?

@Soli The original question did not have “5% green grass” as the criterion. I disagree with your assessment of the generated images: you should mark Yes for every image where at least 51% of the grass is blue and at least 51% of the sky is green.

@nsokolsky OK, thank you for the feedback.

Don't you think this doesn't matter anyway, given that the description said:

The prompt should be copied and pasted as "blue grass, green sky," with no extra specifications allowed.

and when we ask ChatGPT to send the prompt unmodified to Dall-E, the returned image is a clear No?

@Soli No, we should send the prompt exactly as “blue grass, green sky” from our end. You should not insert any additional prompting, context, or custom instructions, even if Dall-E does some tricks to your prompt under the hood.

@Soli of course, you could start a separate question about Dall E 4 where you specify additional prompting instructions or conditions but I think we should interpret the current question narrowly as asked.

It depends on what you think Dall-E 3 is. The title specifically mentions Dall-E 3 and not ChatGPT. Even OpenAI sees them as separate entities.

https://openai.com/dall-e-3

@Soli We don’t have access to the raw Dall E 3 interface though. Even if you ask ChatGPT to send the “unmodified” prompt we don’t know what’s happening behind the scenes. Plus there’s clearly a caching mechanism involved, not a fresh generation for every single prompt which also goes against the spirit of the question.

You can of course choose to wait until a raw Dall E API becomes available or resolve as N/A.

@Lorenzo I think it doesn’t matter if the prompt is changed or not after we type it in. The spirit of the question is that we type in a prompt and then evaluate the outputs.

That being said I suspect Bing also changed the prompt.

@nsokolsky We do have some information, though. When you click on an image on desktop, you can clearly see the prompt used for it. I inspected the prompt to confirm that ChatGPT did not change it. We also have information about the system message being used here (see this question).

Example where the prompt is changed

Example where it wasn't changed

Replicating

If you want to test it, send the following prompt to ChatGPT, and inspect the result to make sure the prompt wasn't changed. I think you will get the same image as me and @Frogswap.

Can you please send the following prompt unmodified to Dall-E? Do not make any modifications to the prompt. Just pass it on as it is please. The prompt is: blue grass, green sky

@Soli Yes it’s the same image. But if we accept this as the golden standard then we must resolve as N/A because we’re unable to get more than 1 image out of Dall E 3 per unique prompt, which goes against the spirit of the question.

@Soli Btw, even if the single image were correct, I’d still argue for N/A, because the spirit of the question is that we pick the best out of 4 across multiple generations. If it’s just one and done, it changes the question entirely.

@nsokolsky I will think about it, but I don't agree with you on this. Dall-E 3 only being available with a fixed seed is a limitation of Dall-E itself and doesn't change the fact that Dall-E 3 can't consistently generate accurate images from the prompt "blue grass, green sky".

@Soli Well, the problem is that the additional prompt engineering is not specified in the original question. I’m of the opinion that we should evaluate the raw outputs of the prompt, with zero other context. And each evaluation should be in a fresh chat window to avoid any distortions.

@nsokolsky I get your point. I personally think the question was clearly about Dall-E 3 and not ChatGPT being able to prompt-engineer Dall-E 3. If this is not the case for most participants then I will resolve as N/A. I still lean towards No.

@Soli I’ve modified my own question based on what I learned from yours, so I think it was a highly valuable learning exercise in question phrasing: https://manifold.markets/nsokolsky/will-dalle-4-be-able-to-generate-an

@Lorenzo Bing definitely inserts some things into the prompt. I noticed characters randomly getting 'African' name tags, and later I saw a post on HN that used some prompt engineering to trick it into revealing parts of its instructions. Here's an example:

@Frogswap Thanks! You're right, all OpenAI products add things to the prompt, e.g. for diversity, so our prior should be that this applies to Bing as well.